Although it was from only a couple of people, I had an enthusiastic response to a very tentative suggestion that it might be rewarding to see whether a polymath project could say anything useful about Frankl’s union-closed conjecture. A potentially worrying aspect of the idea is that the problem is extremely elementary to state, does not seem to yield to any standard techniques, and is rather notorious. But, as one of the commenters said, that is not necessarily an argument against trying it. A notable feature of the polymath experiment has been that it throws up surprises, so while I wouldn’t expect a polymath project to solve Frankl’s union-closed conjecture, I also know that I need to be rather cautious about my expectations — which in this case is an argument in favour of giving it a try.

A less serious problem is what acronym one would use for the project. For the density Hales-Jewett problem we went for DHJ, and for the Erdős discrepancy problem we used EDP. That general approach runs into difficulties with Frankl’s union-closed conjecture, so I suggest FUNC. This post, if the project were to go ahead, could be FUNC0; in general I like the idea that we would be engaged in a funky line of research.

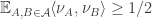

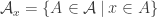

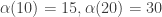

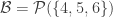

The problem, for anyone who doesn’t know, is this. Suppose you have a family that consists of

distinct subsets of a set

. Suppose also that it is union closed, meaning that if

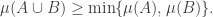

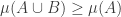

, then

as well. Must there be an element of

that belongs to at least

of the sets? This seems like the sort of question that ought to have an easy answer one way or the other, but it has turned out to be surprisingly difficult.
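To make the statement concrete, here is a minimal Python sketch that checks whether a small family is union closed and finds an element lying in the most sets. The function names and the example family are illustrative assumptions, not anything from the literature.

```python
from itertools import combinations

def is_union_closed(family):
    """Return True if the union of any two member sets is again a member."""
    fam = set(family)
    return all((a | b) in fam for a, b in combinations(fam, 2))

def max_frequency(family):
    """Return (element, count) for an element that lies in the most sets."""
    fam = set(family)
    ground = frozenset().union(*fam)
    counts = {x: sum(1 for s in fam if x in s) for x in ground}
    best = max(counts, key=counts.get)
    return best, counts[best]

# Hypothetical example: the power set of {1, 2}.
family = [frozenset(), frozenset({1}), frozenset({2}), frozenset({1, 2})]
print(is_union_closed(family))
print(max_frequency(family)[1], "of", len(family), "sets")
```

For this family each element lies in exactly half of the sets, so the bound in the conjecture is attained.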

If you are potentially interested, then one good thing to do by way of preparation is look at this survey article by Henning Bruhn and Oliver Schaudt. It is very nicely written and seems to be a pretty comprehensive account of the current state of knowledge about the problem. It includes some quite interesting reformulations (interesting because you don’t just look at them and see that they are trivially equivalent to the original problem).

For the remainder of this post, I want to discuss a couple of failures. The first is a natural idea for generalizing the problem to make it easier that completely fails, at least initially, but can perhaps be rescued, and the second is a failed attempt to produce a counterexample. I’ll present these just in case one or other of them stimulates a useful idea in somebody else.

A proof idea that may not be completely hopeless

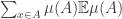

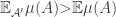

An immediate reaction of any probabilistic combinatorialist is likely to be to wonder whether in order to prove that there exists a point in at least half the sets it might be easier to show that in fact an average point belongs to half the sets.

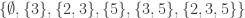

Unfortunately, it is very easy to see that that is false: consider, for example, the three sets $\emptyset$, $\{1\}$, and $\{1,2,\dots,13\}$. The average (over $\{1,2,\dots,13\}$) of the number of sets containing a random element is $14/13$, but there are three sets.

However, this example doesn’t feel like a genuine counterexample somehow, because the set system is just a dressed up version of $\emptyset, \{1\}, \{1,2\}$: we replace the singleton $\{2\}$ by the set $\{2,3,\dots,13\}$ and that’s it. So for this set system it seems more natural to consider a weighted average, or equivalently to take not the uniform distribution on $\{1,2,\dots,13\}$, but some other distribution that reflects more naturally the properties of the set system at hand. For example, we could give a probability 1/2 to the element 1 and $1/24$ to each of the remaining 12 elements of the set. If we do that, then the average number of sets containing a random element will be the same as it is for the example $\emptyset, \{1\}, \{1,2\}$ with the uniform distribution (not that the uniform distribution is obviously the most natural distribution for that example).
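The two averages can be checked with exact arithmetic. The sketch below assumes the three sets are $\emptyset$, $\{1\}$ and $\{1,2,\dots,13\}$, which is consistent with the 12 remaining elements mentioned above; the helper name `expected_count` is made up for illustration.

```python
from fractions import Fraction

# Assumed example: the empty set, {1}, and {1, ..., 13}.
ground = range(1, 14)
sets = [frozenset(), frozenset({1}), frozenset(ground)]

def expected_count(dist):
    """Expected number of sets containing an element drawn from dist."""
    return sum(p * sum(1 for s in sets if x in s) for x, p in dist.items())

uniform = {x: Fraction(1, 13) for x in ground}
weighted = {x: (Fraction(1, 2) if x == 1 else Fraction(1, 24)) for x in ground}

print(expected_count(uniform))   # average under the uniform distribution
print(expected_count(weighted))  # average under the weighted distribution
```

Under these assumptions the uniform average is 14/13, while the weighted one is exactly 3/2, half the number of sets.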

This suggests a very slightly more sophisticated version of the averaging-argument idea: does there exist a probability distribution on the elements of the ground set such that the expected number of sets containing a random element (drawn according to that probability distribution) is at least half the number of sets?

With this question we have in a sense the opposite problem. Instead of the answer being a trivial no, it is a trivial yes (if, that is, the union-closed conjecture holds). That’s because if the conjecture holds, then some $x$ belongs to at least half the sets, so we can assign probability 1 to that $x$ and probability zero to all the other elements.

Of course, this still doesn’t feel like a complete demolition of the approach. It just means that for it not to be a trivial reformulation we will have to put conditions on the probability distribution. There are two ways I can imagine getting the approach to work. The first is to insist on some property that the distribution is required to have that means that its existence does not follow easily from the conjecture. That is, the idea would be to prove a stronger statement. It seems paradoxical, but as any experienced mathematician knows, it can sometimes be easier to prove a stronger statement, because there is less room for manoeuvre. In extreme cases, once a statement has been suitably strengthened, you have so little choice about what to do that the proof becomes almost trivial.

A second idea is that there might be a nice way of defining the probability distribution in terms of the set system. This would be a situation rather like the one I discussed in my previous post, on entropy and Sidorenko’s conjecture. There, the basic idea was to prove that a set $A$ had cardinality at least $m$ by proving that there is a probability distribution on $A$ with entropy at least $\log m$. At first, this seems like an unhelpful idea, because if $|A| \geq m$ then the uniform distribution on $A$ will trivially do the job. But it turns out that there is a different distribution for which it is easier to prove that it does the job, even though it usually has lower entropy than the uniform distribution. Perhaps with the union-closed conjecture something like this works too: obviously the best distribution is supported on the set of elements that are contained in a maximal number of sets from the set system, but perhaps one can construct a different distribution out of the set system that gives a smaller average in general but about which it is easier to prove things.

I have no doubt that thoughts of the above kind have occurred to a high percentage of people who have thought about the union-closed conjecture, and can probably be found in the literature as well, but it would be odd not to mention them in this post.

To finish this section, here is a wild guess at a distribution that does the job. Like almost all wild guesses, its chances of being correct are very close to zero, but it gives the flavour of the kind of thing one might hope for.

Given a finite set $X$ and a collection $\mathcal{A}$ of subsets of $X$, we can pick a random set $A$ (uniformly from $\mathcal{A}$) and look at the events $x \in A$ for each $x \in X$. In general, these events are correlated.

Now let us define a matrix $M$ by $M_{xy} = \mathbb{P}[y \in A \mid x \in A]$. We could now try to find a probability distribution $\mu$ on $X$ that minimizes the sum $\sum_{x,y} \mu(x)\mu(y) M_{xy}$. That is, in a certain sense we would be trying to make the events $x \in A$ as uncorrelated as possible on average. (There may be much better ways of measuring this; I’m just writing down the first thing that comes into my head that I can’t immediately see is stupid.)

What does this give in the case of the three sets $\emptyset$, $\{1\}$ and $\{1,2,\dots,13\}$? We have that $M_{xy} = 1$ if $x = y$ or $y = 1$ or $x \geq 2$ and $y \geq 2$. If $x = 1$ and $y \geq 2$, then $M_{xy} = 1/2$, since if $1 \in A$, then $A$ is one of the two sets $\{1\}$ and $\{1,2,\dots,13\}$, with equal probability.

So to minimize the sum we should choose $\mu$ so as to maximize the probability that $x = 1$ and $y \geq 2$. If $\mu(1) = p$, then this probability is $p(1-p)$, which is maximized when $p = 1/2$, so in fact we get the distribution mentioned earlier. In particular, for this distribution the average number of sets containing a random point is $3/2$, which is precisely half the total number of sets. (I find this slightly worrying, since for a successful proof of this kind I would expect equality to be achieved only in the case that you have disjoint sets $A_1, \dots, A_k$ and you take all their unions, including the empty set. But since this definition of a probability distribution isn’t supposed to be a serious candidate for a proof of the whole conjecture, I’m not too worried about being worried.)
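The calculation above can be replayed numerically. This is only a sketch under stated assumptions: the matrix entry for $(x, y)$ is taken to be the conditional probability that $y$ lies in a uniformly random member set given that $x$ does, the three sets are taken to be $\emptyset$, $\{1\}$ and $\{1,\dots,13\}$, and the mass not placed on element 1 is spread evenly over the other 12 elements. All names are illustrative.

```python
from fractions import Fraction

ground = list(range(1, 14))
family = [frozenset(), frozenset({1}), frozenset(ground)]

def correlation_matrix(family, ground):
    """M[x, y] = P[y in A | x in A] for A chosen uniformly from family."""
    M = {}
    for x in ground:
        containing_x = [s for s in family if x in s]
        for y in ground:
            both = sum(1 for s in containing_x if y in s)
            M[x, y] = Fraction(both, len(containing_x))
    return M

def objective(M, mu):
    """The sum over x, y of mu(x) * mu(y) * M[x, y]."""
    return sum(mu[x] * mu[y] * value for (x, y), value in M.items())

def mu_with(p):
    """Mass p on element 1, the rest shared equally by the other elements."""
    return {x: (p if x == 1 else (1 - p) / 12) for x in ground}

M = correlation_matrix(family, ground)
grid = [Fraction(k, 20) for k in range(21)]
best_p = min(grid, key=lambda p: objective(M, mu_with(p)))
print(best_p, objective(M, mu_with(best_p)))
```

On this grid the minimum is at $p = 1/2$, matching the hand calculation, since the objective equals $1 - p(1-p)/2$ for distributions of this shape.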

Just to throw in another thought, perhaps some entropy-based distribution would be good. I wondered, for example, about defining a probability distribution as follows. Given any probability distribution on the elements, we obtain weights on the sets by taking $w(A)$ to be the probability that a random element (chosen from the distribution) belongs to $A$. We can then form a probability distribution on $\mathcal{A}$ by taking the probabilities to be proportional to the weights. Finally, we can choose a distribution on the elements to maximize the entropy of the distribution on $\mathcal{A}$.

If we try that with the example above, and if $p$ is the probability assigned to the element 1, then the three weights are $0$, $p$ and $1$, so the probabilities we will assign will be $0$, $p/(1+p)$ and $1/(1+p)$. The entropy of this distribution will be maximized when the two non-zero probabilities are equal, which gives us $p = 1$, so in this case we will pick out the element 1. It isn’t completely obvious that that is a bad thing to do for this particular example; indeed, we will do it whenever there is an element that is contained in all the non-empty sets from $\mathcal{A}$. Again, there is virtually no chance that this rather artificial construction will work, but perhaps after a lot of thought and several modifications and refinements, something like it could be got to work.
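Here is a small numerical sketch of the entropy construction for the three-set example, assuming the weights of the three sets are $0$, $p$ and $1$ as described. The grid search is a crude stand-in for a proper optimization, and the function names are illustrative.

```python
import math

def entropy(probs):
    """Shannon entropy (natural logarithm), ignoring zero probabilities."""
    return -sum(q * math.log(q) for q in probs if q > 0)

def set_distribution(p):
    """Normalize the weights (0, p, 1) of the three sets to probabilities."""
    weights = [0.0, p, 1.0]
    total = sum(weights)
    return [w / total for w in weights]

# Scan p over a grid in [0, 1] and keep the value with the largest entropy.
grid = [k / 100 for k in range(101)]
best_p = max(grid, key=lambda p: entropy(set_distribution(p)))
print(best_p, entropy(set_distribution(best_p)))
```

The scan picks $p = 1$, where the two non-zero probabilities are both $1/2$ and the entropy is $\log 2$.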

A failed attempt at a counterexample

I find the non-example I’m about to present interesting because I don’t have a good conceptual understanding of why it fails — it’s just that the numbers aren’t kind to me. But I think there is a proper understanding to be had. Can anyone give me a simple argument that no construction that is anything like what I tried can possibly work? (I haven’t even checked properly whether the known positive results about the problem ruled out my attempt before I even started.)

The idea was as follows. Let $m$ and $p$ be parameters to be chosen later, and let $\mathcal{A}$ be a random set system obtained by choosing each subset of $\{1, 2, \dots, n\}$ of size $m$ with probability $p$, the choices being independent. We then take as our attempted counterexample the set of all unions of sets in $\mathcal{A}$.

Why might one entertain even for a second the thought that this could be a counterexample? Well, if we choose $m$ to be rather close to $n/2$, but just slightly less, then a typical pair of sets of size $m$ have a union of size close to $3n/4$, and more generally a typical union of sets of size $m$ has size at least this. There are vastly fewer sets of size greater than $3n/4$ than there are of size $m$, so we could perhaps dare to hope that almost all the sets in the set system are the ones of size $m$, so the average size is close to $m$, which is less than $n/2$. And since the sets are spread around, the elements are likely to be contained in roughly the same number of sets each, so this would give a counterexample.

Of course, the problem here is that although a typical union is large, there are many atypical unions, so we need to get rid of them somehow — or at least the vast majority of them. This is where choosing a random subset comes in. The hope is that if we choose a fairly sparse random subset, then all the unions will be large rather than merely almost all.

However, this introduces a new problem, which is that if we have passed to a sparse random subset, then it is no longer clear that the size of that subset is bigger than the number of possible unions. So it becomes a question of balance: can we choose $p$ small enough for the unions of those sets to be typical, but still large enough for the sets of size $m$ to dominate the set system? We’re also free to choose $m$ of course.

I usually find when I’m in a situation like this, where I’m hoping for a miracle, that a miracle doesn’t occur, and that indeed seems to be the case here. Let me explain my back-of-envelope calculation.

I’ll write $\mathcal{U}$ for the set of unions of sets in $\mathcal{A}$. Let us now take $r > m$ and give an upper bound for the expected number of sets in $\mathcal{U}$ of size $r$. So fix a set $B$ of size $r$ and let us give a bound for the probability that $B \in \mathcal{U}$. We know that $B$ must contain at least two sets in $\mathcal{A}$. But the number of pairs of sets of size $m$ contained in $B$ is at most $\binom{r}{m}^2$ and each such pair has a probability $p^2$ of being a pair of sets in $\mathcal{A}$, so the probability that $B \in \mathcal{U}$ is at most $p^2\binom{r}{m}^2$. Therefore, the expected number of sets in $\mathcal{U}$ of size $r$ is at most $p^2\binom{n}{r}\binom{r}{m}^2$.

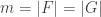

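As for the expected number of sets in $\mathcal{A}$, it is $p\binom{n}{m}$, so if we want the example to work, we would very much like it to be the case that when $r > m$, we have the inequality $p\binom{n}{m} > p^2\binom{n}{r}\binom{r}{m}^2$.

We can weaken this requirement by observing that the expected number of sets in $\mathcal{U}$ of size $r$ is also trivially at most $\binom{n}{r}$, so it is enough to have $p\binom{n}{m} > \min\left\{p^2\binom{n}{r}\binom{r}{m}^2, \binom{n}{r}\right\}$.

If the left-hand side is not just greater than the right-hand side, but greater by a factor of say $n^2$ for each $r$, then we should be in good shape: the average size of a set in $\mathcal{U}$ will be not much greater than $m$ and we’ll be done.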

If $r$ is not much bigger than $m$, then things look quite promising. In this case, $\binom{n}{r}$ will be comparable in size to $\binom{n}{m}$, but $\binom{r}{m}$ will be quite small: it equals $\binom{r}{r-m}$, and $r-m$ is small. A crude estimate says that we’ll be OK provided that $p$ is significantly smaller than $\binom{r}{m}^{-2}$. And that looks OK, since $\binom{r}{m}^2$ is a lot smaller than $\binom{n}{m}$, so we aren’t being made to choose a ridiculously small value of $p$.

If on the other hand $r$ is quite a lot larger than $m$, then $\binom{n}{r}$ is much much smaller than $\binom{n}{m}$, so we’re in great shape as long as we haven’t chosen $p$ so tiny that $p\binom{n}{m}$ is also much much smaller than $\binom{n}{m}$.

So what goes wrong? Well, the problem is that the first argument requires smaller and smaller values of $p$ as $r$ gets further and further away from $m$, and the result seems to be that by the time the second regime takes over, $p$ has become too small for the trivial argument to work.

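Let me try to be a bit more precise about this. The point at which $\binom{n}{r}$ becomes smaller than $p^2\binom{n}{r}\binom{r}{m}^2$ is of course the point at which $p\binom{r}{m} = 1$. For that value of $r$, we require $p\binom{n}{m} > \binom{n}{r}$, so we need $\binom{n}{m}/\binom{r}{m} > \binom{n}{r}$. However, an easy calculation reveals that $\binom{n}{m}/\binom{r}{m} = \binom{n}{r}/\binom{n-m}{r-m}$ (or observe that if you multiply both sides by $\binom{r}{m}\binom{n-m}{r-m}$, then both expressions are equal to the multinomial coefficient that counts the number of ways of writing an $n$-element set as $A \cup B \cup C$ with $|A| = m$, $|B| = r-m$ and $|C| = n-r$). So unfortunately we find that however we choose the value of $p$ there is a value of $r$ such that the estimate for the number of sets in $\mathcal{U}$ of size $r$ is greater than $p\binom{n}{m}$. (I should remark that the estimate $p^2\binom{n}{r}\binom{r}{m}^2$ for the number of sets in $\mathcal{U}$ of size $r$ can be improved, but this does not make enough of a difference to rescue the argument.)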
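The binomial identity behind this obstruction is easy to check by machine. In the sketch below, $n$ is the size of the ground set, $m$ the size of the randomly chosen sets and $r > m$ the size of a union; these names, the sample values and the function names are assumptions made for illustration. The second function tests, in integer form, whether the requirement fails at the crossover value of the probability, namely one over the number of $m$-subsets of an $r$-set.

```python
from math import comb

def multinomial_identity_holds(n, m, r):
    """Check C(n, m) * C(n - m, r - m) == C(n, r) * C(r, m)."""
    return comb(n, m) * comb(n - m, r - m) == comb(n, r) * comb(r, m)

def crossover_requirement_fails(n, m, r):
    """With p = 1 / C(r, m), test whether p * C(n, m) <= C(n, r).

    Multiplying through by C(r, m) gives the integer comparison below.
    """
    return comb(n, m) <= comb(n, r) * comb(r, m)

# Hypothetical parameters: m slightly less than n / 2.
n, m = 40, 18
print(all(multinomial_identity_holds(n, m, r) for r in range(m, n + 1)))
print(all(crossover_requirement_fails(n, m, r) for r in range(m + 1, n + 1)))
```

Both checks succeed for every $r$ in range, which is just the identity restated: the product on the right always exceeds the number of $m$-subsets by a factor that is at least 1.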

So unfortunately it turns out that the middle of the range is worse than the two ends, and indeed worse by enough to kill off the idea. However, it seemed to me to be good to make at least some attempt to find a counterexample in order to understand the problem better.

From here there are two obvious ways to go. One is to try to modify the above idea to give it a better chance of working. The other, which I have already mentioned, is to try to generalize the failure: that is, to explain why that example, and many others like it, had no hope of working. Alternatively, somebody could propose a completely different line of enquiry.

I’ll stop there. Experience with Polymath projects so far seems to suggest that, as with individual projects, it is hard to predict how long they will continue before there is a general feeling of being stuck. So I’m thinking of this as a slightly tentative suggestion, and if it provokes a sufficiently healthy conversation and interesting new (or at least new to me) ideas, then I’ll write another post and launch a project more formally. In particular, only at that point will I call it Polymath11 (or should that be Polymath12? — I don’t know whether the almost instantly successful polynomial-identities project got round to assigning itself a number). Also, for various reasons I don’t want to get properly going on a Polymath project for at least a week, though I realize I may not be in complete control of what happens in response to this post.

Just before I finish, let me remark that Polymath10, attempting to prove Erdős’s sunflower conjecture, is still continuing on Gil Kalai’s blog. What’s more, I think it is still at a stage where a newcomer could catch up with what is going on — it might take a couple of hours to find and digest a few of the more important comments. But Gil and I agree that there may well be room to have more than one Polymath project going at the same time, since a common pattern is for the group of participants to shrink down to a smallish number of “enthusiasts”, and there are enough mathematicians to form many such groups.

And a quick reminder, as maybe some people reading this will be new to the concept of Polymath projects. The aim is to try to make the problem-solving process easier in various ways. One is to have an open discussion, in the form of blog posts and comments, so that anybody can participate, and with luck a process of self-selection will take place that results in a team of enthusiastic people with a good mixture of skills and knowledge. Another is to encourage people to express ideas that may well be half-baked or even wrong, or even completely obviously wrong. (It’s surprising how often a completely obviously wrong idea can stimulate a different idea that turns out to be very useful. Naturally, expressing such an idea can be embarrassing, but it shouldn’t be, as it is an important part of what we do when we think about problems privately.) Another is to provide a mechanism where people can get very quick feedback on their ideas — this too can be extremely stimulating and speed up the process of thought considerably. If you like the problem but don’t feel like pursuing either of the approaches I’ve outlined above, that’s of course fine — your ideas are still welcome and may well be more fruitful than those ones, which are there just to get the discussion started.

January 21, 2016 at 1:48 pm |

Perhaps a suitable initial step would be to start with something a little less ambitious, for example trying to prove that any union-closed family of sets has an element that occurs in at least 1% of the member-sets.

January 21, 2016 at 4:51 pm

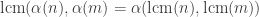

I agree that that seems very sensible. According to the survey article, the best that is known along those lines is that if you have $m$ sets, then some element is contained in at least $\Omega(m/\log m)$ of them.

January 21, 2016 at 4:56 pm |

[…] Polymath11 (?) Tim Gowers proposed a polymath project on Frankl’s conjecture. If it gets off the ground we will have (with polymath10) two projects running in parallel […]

January 21, 2016 at 4:59 pm |

Cool! let’s see how it goes! I will try to take part.

January 21, 2016 at 7:13 pm |

Let me also mention that a MathOverflow question http://mathoverflow.net/questions/219638/proposals-for-polymath-projects seeks polymath proposals. There are some very interesting proposals. I am quite curious to see some proposals in applied mathematics, and various areas of geometry, algebra, analysis and logic. Also since a polymath project was meant to be “a large collaboration in which no single person has to work all that hard,” people are more than welcome to participate simultaneously in both projects.

January 21, 2016 at 7:17 pm |

And for polymath connoisseurs, I posed a sort of meta question regarding polymath10 that I am contemplating. https://gilkalai.wordpress.com/2015/12/08/polymath-10-post-3-how-are-we-doing/#comment-23624

January 21, 2016 at 8:41 pm |

David, I agree that trying to prove that there is always an element that appears in at least, say, 0.0001% of the sets is a good start—and it would be a substantial improvement. My feeling is that if the conjecture is false then there should be no constant maximum frequency.

One way to prove that there’s always an element appearing in at least 0.0001% of the sets lies in a somewhat curious link to “small” families. Let’s say we have a universe of $n$ elements and a set family $A$ of $m$ sets. Let’s, moreover, assume that $A$ is separating, that is, no element is a clone of another. Then, if there are only few sets, namely $m \leq 2n$, the union-closed sets conjecture holds for $A$ (i.e., there is an element that appears in at least half of the sets). This can be seen by rather elementary arguments (see Thm 23 in the survey). Now, here comes the interesting part: if you can improve the factor of 2 in that statement to, say, 2.0001 then you suddenly have proved that in any union-closed family there is always some element appearing in a constant fraction of the sets (Thm 24). The argument is here:

Click to access HuResult.pdf

One last remark: Jens Maßberg recently improved the factor-2 result somewhat but not to 2.00001 or any constant improvement. The paper (http://arxiv.org/abs/1508.05718) mixes known techniques in a very nice way.

January 21, 2016 at 9:36 pm |

Has anyone made it far enough in Blinovsky’s preprint http://arxiv.org/abs/1507.01270 to see if his approach is viable? Unfortunately, language issues plus very terse writing make it hard to understand what he is doing.

January 22, 2016 at 3:47 am

I think he has several breakthroughs “pending.” Even if his proof is correct, it can be useful to find another, more standard proof.

January 21, 2016 at 10:21 pm |

I suddenly realized that I hadn’t actually stated the conjecture in the post above. I’ve done that now.

January 22, 2016 at 12:30 am |

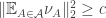

Here are two possible strengthenings. The first is to a weighted version. Suppose that we have a positive weight $w(A)$ for each $A \in \mathcal{A}$ and that $w(A \cup B) \geq \max\{w(A), w(B)\}$ for every $A, B \in \mathcal{A}$. Does it follow that there is an element $x$ such that the sum of the weights of the sets containing $x$ is at least half the sum of all the weights of the sets? If this strengthening isn’t obviously false, is it in fact equivalent (in some obvious way) to the original conjecture?

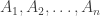

The other possibility is to the following statement: as long as there are at least $k$ elements in the union of all the sets in $\mathcal{A}$, then there is a subset $S$ of size $k$ such that $S \subset A$ for at least $2^{-k}|\mathcal{A}|$ of the sets in $\mathcal{A}$.

Ah, I’ve just seen that the second “strengthening” is not a strengthening, since if the original conjecture is true, then one can pick an element in at least half the sets, then another element in at least half the sets that contain the first element, and so on. But I needed to write this comment to see that.
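The iterative argument in the last paragraph can be sketched as a greedy procedure: repeatedly pick a not-yet-chosen element lying in the most surviving sets, then keep only the sets containing it. The function name and the example are illustrative assumptions; the code only carries out the selection and does not prove that each step keeps at least half the sets, which is exactly what the conjecture would supply.

```python
from itertools import combinations

def greedy_common_subset(family, k):
    """Greedily pick up to k elements, keeping the sets that contain them all."""
    current = list(family)
    chosen = []
    for _ in range(k):
        remaining = set().union(*current) - set(chosen)
        if not remaining:
            break
        # Most frequent unchosen element among the surviving sets.
        best = max(remaining, key=lambda x: sum(1 for s in current if x in s))
        chosen.append(best)
        current = [s for s in current if best in s]
    return chosen, current

# Hypothetical example: the power set of {1, 2, 3}, which is union closed.
ground = [1, 2, 3]
family = [frozenset(c) for r in range(4) for c in combinations(ground, r)]
chosen, survivors = greedy_common_subset(family, 2)
print(chosen, len(survivors), "of", len(family))
```

For the power set each step halves the family exactly, so two chosen elements lie together in a quarter of the sets.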

January 22, 2016 at 4:45 am |

Years ago, I remember, Jeff Kahn said that he bet he would find a counterexample to every meaningful strengthening of Frankl’s conjecture. And indeed he shot down many of those and a few I proposed, including weighted versions. I have to look in my old emails to see whether this one was among them.

January 22, 2016 at 9:34 pm

Here’s another one: http://mathoverflow.net/questions/228119

January 22, 2016 at 9:35 am |

A small remark is that both the suggestions I made in the post for how to pick a probability distribution on $X$ that would cause an average element to belong to at least half the sets have the following nice property: if you modify the example by duplicating elements of $X$, then the probability distribution changes appropriately.

To put that more formally, suppose you have $n$ disjoint non-empty sets $X_1, \dots, X_n$, and a set system $\mathcal{A}$ that consists of subsets of $\{1, 2, \dots, n\}$. You can form a new set system $\mathcal{B}$ by taking all sets of the form $\bigcup_{i \in A} X_i$ with $A \in \mathcal{A}$. This is a kind of “blow-up” of $\mathcal{A}$. If you now apply one of the two processes to $\mathcal{B}$, then the probability that you give to $X_i$ (that is, the sum of the probabilities assigned to its elements) will not depend on the choice of $X_1, \dots, X_n$.

January 22, 2016 at 10:05 am |

In the spirit of additive combinatorics, what can be said about families that are approximately closed under unions? That is, what if for a constant fraction of pairs of sets, their union also belongs to the family? Two natural questions are:

(1) is there a counterexample to Frankl’s conjecture in this case (with 1/2 possibly replaced by a smaller constant)?

(2) does any such family contain a large family which is closed under unions?

January 22, 2016 at 10:36 am

It’s not quite clear to me what a counterexample means for (1). Obviously if only a constant fraction of pairs of sets have their union in the family, then the constant 1/2 has to be reduced, but it is not clear that there is a natural conjecture for how the new constant should depend on the fraction of unions we assume to be in the family. But maybe the precise question you have in mind, which looks interesting, is whether for every $c_1 > 0$ there exists $c_2 > 0$ such that if a fraction $c_1$ of pairs of sets in the family have their union in the family, then some element is contained in a fraction $c_2$ of the sets.

To prove this statement would be likely to be hard, since it is not known even if $c_1=1$. But as you say, it might be worth looking for a counterexample.

If that is indeed the question you mean, then presumably the motivation for (2), aside from its intrinsic interest, is that a positive answer to (2) would show that a positive answer to the question above would follow from a positive answer to the $c_1 = 1$ case, at least if “large” means “of proportional size”.

A thought about (2) is that a random family is probably a counterexample. If we just choose each subset of $\{1, 2, \dots, n\}$ with probability 1/2, then approximately half the unions will belong to the family, but it looks as though the largest union-closed subfamily will be very small. So I think the best one can hope for with (2) is a subfamily that is somehow “regular”, in the sense that it resembles a random subfamily of a union-closed family, which would still be enough (given a suitable quasirandomness definition) to prove that some element is contained in a significant fraction of the sets, assuming that that is true for union-closed families.

January 22, 2016 at 12:53 pm |

Two questions: 1) Does Frankl’s conjecture extend when we replace the uniform distribution on the discrete cube by the $\mu_p$ distribution?

2) If the family is weakly symmetric, namely invariant under a transitive permutation group, or even if it is regular, namely every element belongs to the same number of sets, then the conjecture should apply to every element. Perhaps this is an easier case.

It is interesting to note that for weakly symmetric union-closed families, assuming also a yes answer for 1), we will get (by Russo’s lemma) that $\mu_p(\mathcal{A})$ is monotone non-decreasing with $p$ (like for monotone families).

January 22, 2016 at 2:11 pm

Let me check I understand the first question. I take $\mu_p$ to be the distribution that assigns to each subset $A \subset X$ the probability of picking that set if elements of $X$ are chosen independently with probability $p$. If we take all subsets of $X$, then the probability that a random subset contains $x$ is $p$, so the optimistic extension of the conjecture would be that if $\mathcal{A}$ is union closed then there is some $x$ such that the probability that a $\mu_p$-random subset of $X$ contains $x$ given that it belongs to $\mathcal{A}$ is at least $p$.
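The conditional probability in this formulation is straightforward to compute for small families. The sketch below assumes the $p$-biased product measure described above, in which a set with $k$ elements in an $N$-element ground set gets probability $p^k(1-p)^{N-k}$; the function names and the example family are illustrative.

```python
from fractions import Fraction

def mu_p(s, ground, p):
    """Probability of drawing exactly s when each element is kept with prob. p."""
    k = len(s)
    return p**k * (1 - p) ** (len(ground) - k)

def conditional_frequency(family, ground, x, p):
    """P[x in A | A in family] for a p-biased random subset A of ground."""
    total = sum(mu_p(s, ground, p) for s in family)
    with_x = sum(mu_p(s, ground, p) for s in family if x in s)
    return with_x / total

# Hypothetical union-closed example on the ground set {1, 2}.
ground = [1, 2]
family = [frozenset(), frozenset({1}), frozenset({1, 2})]
p = Fraction(1, 3)
print(conditional_frequency(family, ground, 1, p), "versus p =", p)
```

Here element 1 has conditional frequency 3/7, which is at least $p = 1/3$, so this small family is consistent with the optimistic extension at this value of $p$.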

January 22, 2016 at 3:35 pm

The way I think about it, which might be equivalent to what you say (otherwise we will have two variants at the price of one), is: is there a $k$ such that the Fourier coefficient with respect to $\mu_p$ corresponding to the singleton $\{k\}$ is non-positive? Of course we can ask if we can find an element which works simultaneously for all $k$.

(By Jeff’s heuristics the answer should be “no” for all these but I don’t recall that I thought about them.)

January 22, 2016 at 3:35 pm

I meant “for all p” not “for all k”.

January 22, 2016 at 8:47 pm |

Another modification of your first approach would be to ask if an average point in a separating family belongs to at least half the sets. But this is also false: consider the five sets $\emptyset$, $\{1\}$, $\{2\}$, $\{1,2\}$, $\{1,2,3\}$. An average point belongs to only $7/3$ of the sets.

I wonder if Jeff Kahn’s ‘bet’ would apply to the conjecture of Poonen mentioned in the survey article (Conjecture 14). Or does that not qualify as a sufficient strengthening?

January 22, 2016 at 9:41 pm

Let me see whether I can test my correlation-minimizing guess on this example. I have that $M = \begin{pmatrix} 1 & 2/3 & 1/3 \\ 2/3 & 1 & 1/3 \\ 1 & 1 & 1 \end{pmatrix}$. We may as well average this matrix with its transpose, which gives $\begin{pmatrix} 1 & 2/3 & 2/3 \\ 2/3 & 1 & 2/3 \\ 2/3 & 2/3 & 1 \end{pmatrix}$. So it’s clear that to minimize the sum $\sum_{x,y} \mu(x)\mu(y) M_{xy}$ we want to take the uniform distribution (so as to minimize the diagonal contribution). So the example above demolishes the first of my two suggestions in the post. I’m not sure I’ve got the stomach to think about the entropy construction just at the moment, but my immediate thought now is to wonder what it might be possible to do about the above failure: somehow the correct distribution “shouldn’t” have given equal weight to 3. Can one convert this feeling of moral outrage into a better construction?

January 23, 2016 at 11:47 am

Yes, the problem is that  being big makes up for  being small. So that way of defining  doesn’t work.

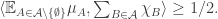

A still more naive idea would be to make  proportional to  . But whether this works at all, or makes anything easier to prove, I don’t know. This would correspond to the following strengthening of the conjecture:  (where  is the number of sets in  ). (The example above satisfies this.)

March 7, 2016 at 3:16 pm

Apparently the above strengthening is false: see this comment.

January 23, 2016 at 10:52 am |

That comment got completely messed up in format. I’ll replace the less-than signs with non-HTML and repost it shortly (and the version above can be deleted).

January 23, 2016 at 10:55 am |

The following false proof seems worth posting, even though I think other comments here already rule out the suggested strengthening from being true. The error is straightforward, so I’ll leave it as an exercise for the reader for 24 hours or so, or until anyone reveals it in a comment (which you should feel free to do if it helps the discussion).

Reformulate the set-family A as a 0-1 matrix, with columns being characteristic functions of member sets, and rows being elements. Call any 0-1 matrix “legitimate” if all columns are distinct and all rows have a 1.

The conjecture is now: in a legitimate matrix, if the set of columns is union-closed, at least one row has density >= 1/2.

Reexpress it as: in a legitimate matrix, if all rows have density < 1/2, the set of columns is not union-closed.

Now strengthen it as follows: in a legitimate matrix, if the matrix as a whole has density < 1/2, the set of columns is not union-closed.

Now assume a counterexample and find one with fewer rows. This will prove the strengthened conjecture by contradiction, since if there is only one row, it’s obvious.

Case 0: some row is entirely 1s. The remaining part of the matrix has lower density than the whole matrix, and is legitimate, so just remove that row and continue.

Case 1: some row has density >= 1/2 (but is not all 1s). Put that row on top, and sort the columns so that row has all its 0s first. Split the matrix into three submatrices: that row, the part under that row’s 0s, the part under that row’s 1s. Since the whole matrix density is < 1/2 and is a weighted average of the densities of these parts, at least one part has density < 1/2. By assumption it’s not the top row, so it must be one of the submatrices under it (which are legitimate). Pick that one and continue.

Case 2: no row has density >= 1/2. Put any row on top, and split the matrix as in Case 1. This time, just note that the matrix with its top row removed has density < 1/2 (since it’s made of rows of density < 1/2), which is a weighted average of the two submatrix parts, so we can pick one with density < 1/2.

QED (not counting the error I noticed, and any other errors I *didn’t* notice).
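The matrix reformulation used in this argument is easy to experiment with. The following sketch (all helper names are hypothetical) builds the 0-1 matrix of a family, with rows indexed by elements and columns by member sets, and checks legitimacy, union-closedness of the columns, and the row and whole-matrix densities.

```python
from fractions import Fraction
from itertools import combinations

def to_matrix(family, ground):
    """Rows are elements, columns are characteristic vectors of the sets."""
    return [[1 if x in s else 0 for s in family] for x in ground]

def columns(matrix):
    return list(zip(*matrix))

def is_legitimate(matrix):
    """All columns distinct and every row contains a 1."""
    cols = columns(matrix)
    return len(set(cols)) == len(cols) and all(any(row) for row in matrix)

def columns_union_closed(matrix):
    """Entrywise OR of any two columns is again a column."""
    cols = set(columns(matrix))
    return all(tuple(a | b for a, b in zip(c, d)) in cols
               for c, d in combinations(cols, 2))

def row_densities(matrix):
    return [Fraction(sum(row), len(row)) for row in matrix]

def matrix_density(matrix):
    return Fraction(sum(map(sum, matrix)), len(matrix) * len(matrix[0]))

# Example: the power set of {1, 2}; every row has density exactly 1/2.
family = [frozenset(s) for s in [(), (1,), (2,), (1, 2)]]
m = to_matrix(family, [1, 2])
print(is_legitimate(m), columns_union_closed(m), row_densities(m), matrix_density(m))
```

With helpers like these one can search small matrices for places where the splitting step in Cases 1 and 2 produces a submatrix that is no longer legitimate.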

January 23, 2016 at 11:02 am

I forgot to say, about the submatrices, that they are also union-closed (like the hypothetical counterexample). That’s not the error (it’s correct).

January 23, 2016 at 11:29 am

I think I see one flaw: when we split the matrix into submatrices, the two submatrices under the first row might not be legitimate, since they may have rows without a 1?

January 23, 2016 at 5:36 pm

A disturbing aspect of the argument is that it doesn’t seem to use anywhere the hypothesis that the columns are distinct. Since we know that the strengthened statement is false when the columns are not required to be distinct, we should have a general flaw-identifying mechanism. (But perhaps I have missed a place where the hypothesis is genuinely used.)

January 23, 2016 at 10:04 pm

@Alec: that’s indeed the error I know about — “0-rows” (containing no 1s) can be produced. It’s a fatal error, but I’ll post below about ideas for possible ways around it. (If you find any *other* error, it’s one I don’t know about.)

@gowers: it does use the columns being distinct — in the base case (1 row). Otherwise we could have [[0, 0, 1]] of density 1/3. AFAIK it’s not disturbing that it only uses that in that one place; do you agree?

OTOH it is disturbing that it never uses the union-closed property. (So if it didn’t have the error, I think it would “prove” that almost any 0-1 matrix with distinct columns and density < 1/2 is a contradiction.)

It turns out that these issues might be related, in the sense that some of the ideas I'll post for dealing with 0-rows might have a way of making use of the columns being union-closed. More on this shortly.

January 23, 2016 at 11:02 pm

I added a long comment at the bottom, “About getting around 0-rows … (in part, by making use of the union-closed condition)”.

January 23, 2016 at 11:59 am |

Something I’d like to do is try to give a precise formulation to the question, “Does there exist a non-trivial averaging argument that solves the conjecture?” As I pointed out in the post, the obvious first attempt (does there exist a probability distribution on  such that the average number of sets containing a random  is at least  ?) is unsatisfactory, because if we allow probability distributions that are concentrated at a point, then the question is trivially equivalent to the original question. So what exactly is it that one is looking for?

One property that I would very much like is a property that I mentioned earlier: that the distribution does the right thing if one blows sets up. We can express the property as follows. Given the set-system  , let  be the algebra that it generates (that is, everything you can make out of  using intersections, unions and set differences). Let  be the atoms of this algebra and define a set-system  to be the set of all  such that  . Let me also write  for  .

Now say that another set-system  is isomorphic to  if  is isomorphic to  in the obvious sense that there is some permutation of the ground set of  that makes it equal to  . And let us extend that by saying that  is isomorphic to  (with  being the isomorphism; it is an isomorphism of lattices or something like that) and taking the transitive closure.

The property I would want is that the probability distribution one constructs for  is a lattice invariant. That is, if two set systems become identical after applying  and permuting ground sets, then corresponding atoms get the same probabilities.

In particular, this seems to say that if permuting  and  leads to an automorphism of  , then  and  should be given equal probability. This is not enough to rule out the trivial argument though, since if some element is in half the sets, we can assign all the probability to that element and any other element that is indistinguishable from it.

Another property I would like, but don’t have a precise formulation of, is “continuity”. That is, if you don’t change  much, then the probability distribution doesn’t change much either.

It feels to me as though it might be good to ask for some quantity to be minimized or maximized, as in my two attempts in the post.

And a final remark is that we don’t have to take the average number of sets containing a point: we could take the average of some strictly increasing function  of that number. As long as that average is at least  then we will be done.

That is as far as I’ve got so far with coming up with precise requirements.

January 23, 2016 at 2:47 pm |

I wonder whether the following question is trivial, or the answer known. Consider a set of N elements, to be specific {1,2,…,N}, and assign to it the uniform measure, so p(k)=1/N for each element k of this set. There are 2^N subsets, and between them these subsets contain each element of the original set more than once. If one chooses a subset with some probability, is it known how p(k) changes? One can imagine organizing the subsets as a binary tree and trying to characterize paths along it. How would the property “closed under union” be expressed in this context?

January 23, 2016 at 4:24 pm

I don’t understand the question. You seem to have defined $p(k)$ to be $1/N$, so how can it change? Can you give a more formal formulation of what you are asking?

January 23, 2016 at 6:13 pm |

I’m sorry. What I’m trying to get at is how a union-closed family of subsets of the original set could be constructed from the set of all subsets of the original set, and I was trying to think of a simple (maybe too simple) example. While p(k)=1/N for the original set in my example, after selecting subsets this may be different within some collection of subsets constructed by a given rule. I was trying to work through the first example of the survey article.

January 23, 2016 at 10:54 pm |

About getting around 0-rows in the “false proof” I gave above (in part, by making use of the union-closed condition):

Most of the arguments in the “false proof” extend to a weighted definition of “whole matrix density”, which uses a distribution on X (specified in advance) to weight the rows. Then if we knew which rows were going to turn out to be 0-rows (once we reached them in some submatrix with fewer columns — they were not 0-rows to begin with), we could specify their weights as 0; then we could remove those rows from a submatrix without increasing the density of the remaining part.

I know of only two problems with this:

1. since changing those weights changes how we evaluate submatrix density, it can change which submatrix we choose at any stage, perhaps at multiple stages much earlier than when the 0-row (which motivates the weight change) comes up. So if we make an argument of the form “when a 0-row arises, slightly lower that row’s weight and start over”, then several far earlier submatrix choices might change.

2. the possible existence of 0-columns means the recursion can end with one submatrix being a single 0-column. This was ruled out by having 0-rows, but since it can come up when we recurse, we have to deal with it somehow.

Issue (2) seems like a technicality, but I don’t know how to fix it or how serious it is.

Issue (1) is interesting. The problem with “adjust weights until they work” would be if we get into a “loop”; this seems possible, since submatrix choices change how we’ll want to adjust them. (And it must be possible, since as said before, fixing it (and issue (2)) would mean proving most density 1/3 matrices don’t exist.)

But the union-closed condition relates the rows of the two submatrices we choose between, at a given stage.

If the right submatrix (under 1’s) has row R all 0, then so must the left one (under 0’s), for the same R. (Otherwise we can construct a union of columns which should exist in the right one but doesn’t, taking any column on the right unioned with any column containing a 1 in row R on the left.)

So a 0-row on the right would be a 0-row on the left too, and thus a 0-row in the whole, and is therefore ruled out. Thus the right submatrix has no 0-rows, which means any change to this stage’s choice of left or right submatrix, motivated by finding a 0-row immediately afterwards, would be changing the choice from left to right (never from right to left).

Furthermore, I think a similar argument can say a similar thing about a choice made at an earlier stage, though I haven’t worked out exactly what it says, since this method of relating 0-rows should work for smaller submatrices too (defined by grouping columns based on the content of their first k rows, not only with k = 1 as before), provided their “indices” (content of first k rows) are related by the right one’s bits (matrix elements) being entrywise >= the left one’s bits.

So this gives me some hope that the union-closed condition can control the way row-weight changes affect the choice of submatrices, in a way that prevents “long term oscillations” in a strategy which gradually adjusts row weights to try to postpone the problem of finding a 0-row with nonzero weight (by reducing the weight of such rows).

January 23, 2016 at 11:00 pm

Minor clarifications in the above:

“This was ruled out by having 0-rows” -> “This is ruled out by such a submatrix having 0-rows”.

“submatrix choices change how we’ll want to adjust them” — by changing which rows end up being 0-rows when we reach them.

January 23, 2016 at 11:12 pm

I also guess that when lowering a row’s weight since it contains a 0-row (in the left submatrix) that might come up in the next stage, you can often increase some later row’s weight to avoid affecting any submatrix choices from earlier stages (by not changing the total weighted density of the current matrix you’re looking at); this change to two weights would then only change the upcoming choice, not any earlier choices.

January 23, 2016 at 11:17 pm

Wait, that last guess is wrong as stated, since different earlier choices were looking at rows widened by different sets of columns, so perhaps no single way of making compensating row-weight changes would work for all choices (and the suggested way, changing just one other weight, would not in general work for more than one of those choices). So I don’t know whether this possibility helps or not. In general I think it means you could fix the first j stages (i.e. prevent having to revise the first j choices) if you had j nonzero rows (below the top row) at that point. That’s a guess, not verified.

January 23, 2016 at 11:50 pm

About “issue (2)”:

A matrix corresponding to the 3-set family { empty set, S, X } can fit all the criteria (distinct columns, union closed, density < 1/2, no 0-rows) and yet exists, disproving the “strengthened conjecture” even with union closed columns taken into account. (And I think this example has already been given, somewhere in this discussion.)

So “issue (2)” above is indeed serious. Nonetheless it feels to me somewhat orthogonal to the given ideas for issue (1).

In this example, making it “separating” would handle it, as would making some equivalent condition about row weights; requiring density < 1/2 under *all* row weight distributions rather than just one would be too strong, but under *enough* row weight distributions (in some sense) could conceivably solve it.

Conclusion: both issues are serious, but the above ideas for issue (1) still seem interesting to me.
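The three-set example can be checked directly. In the sketch below the ground set X = {1, ..., 5} and the choice S = {1} are arbitrary, made only for illustration; any non-empty S with |S| < |X|/2 gives whole-matrix density (|S| + |X|) / (3|X|) < 1/2.

```python
from fractions import Fraction
from itertools import combinations

X = frozenset(range(1, 6))   # ground set, chosen for illustration
S = frozenset({1})           # any non-empty S with |S| < |X|/2 would do
family = [frozenset(), S, X]

# Rows are elements of X, columns are the three sets.
matrix = [[1 if x in s else 0 for s in family] for x in sorted(X)]

distinct_columns = len(set(family)) == len(family)
union_closed = all(a | b in set(family) for a, b in combinations(family, 2))
no_zero_rows = all(any(row) for row in matrix)
density = Fraction(sum(map(sum, matrix)), len(matrix) * len(family))

print(distinct_columns, union_closed, no_zero_rows, density)  # density is 2/5
```

The row for element 1 has density 2/3, so this family is no threat to the original conjecture, only to the whole-matrix strengthening.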

July 7, 2016 at 5:16 pm

If one row ‘majorizes’ the other, i.e. one element belongs to all the sets that the other one belongs to, then the lower Hamming weight row can always be deleted — this element is not needed in computing the maximum of densities #A_i / #A where A_i is the subfamily consisting of all the sets containing the element i. In other words, if the original matrix is a counterexample to the original non-strengthened conjecture, so has to be the new matrix, and vice versa. However, with the strengthened conjecture this relationship does not seem to hold, as the density of the whole matrix may go up. Perhaps this means the row of lower Hamming weight has to be taken with weight 0 into the distribution?

July 7, 2016 at 6:03 pm

My goal was to avoid 0-rows in the submatrices. But I guess it does not work. If we change the 0s into 1s where the minor and major row differ, and allow identical columns — this can not help for the base case, only hurt, in the only place where the distinctness of columns assumption is used. But unfortunately this change may turn a counterexample for the strengthened hypothesis into a non-counterexample (even if this is not the case for the original hypothesis).

January 24, 2016 at 4:40 pm |

It can be useful to have a stock of ‘extreme’ examples on which to test hypotheses, so here’s an example of an (arbitrarily large) family that has just one abundant element: for  , let  . Then  has  members; the element  belongs to all but one of them; but everything else belongs to only  , which is just under half of them.
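Reading the example as the empty set together with all subsets of {1, ..., n} that contain 1 (this reading is an assumption, based on how the example is described later in the thread), the counts can be confirmed by brute force for small n.

```python
from itertools import combinations

def one_abundant_family(n):
    """Empty set plus every subset of {1, ..., n} that contains 1."""
    rest = range(2, n + 1)
    sets = [frozenset()]
    for k in range(n):
        for extra in combinations(rest, k):
            sets.append(frozenset((1,) + extra))
    return sets

def frequencies(family, n):
    """Number of member sets containing each element of {1, ..., n}."""
    return {x: sum(1 for s in family if x in s) for x in range(1, n + 1)}

n = 5
family = one_abundant_family(n)
freq = frequencies(family, n)
print(len(family), freq)
```

Under this reading the family has 2^(n-1) + 1 members, element 1 lies in 2^(n-1) of them, and every other element lies in 2^(n-2), which is just under half.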

January 24, 2016 at 4:58 pm

Thanks for that. I’ll definitely bear it in mind when trying to devise ways of using  to define a probability distribution on  for which an averaging argument works.

January 24, 2016 at 9:50 pm |

Let me mention an idea before I think about why it doesn’t work, which it surely won’t. In general, I’m searching for a natural way of choosing a random element of  not uniformly but using the set-system  somehow. A rather obvious way (and already I’m seeing what’s wrong with it) would be to choose a random set  and then a random element  , in both cases chosen uniformly.

What’s wrong with that? Unfortunately, it fails the isomorphism-invariance condition: if we take the set system consisting of  and  , then the probability that we choose the element 1 will be  , which is not independent of  .

With Alec’s example above, the probability of choosing  is going to be roughly  , because a random set that contains  will have about  elements. This is sort of semi-promising, as it does bias the choice slightly towards the element we want to choose. I haven’t checked whether it makes the average number of elements OK.

But if we were to duplicate all the other elements a large number of times, we could easily make it extremely unlikely that we would choose the element  , so the general approach still fails. Is there some way of capturing its essence but having isomorphism invariance as well?

January 24, 2016 at 10:37 pm

A modification of the idea that I don’t have time to think about for the moment is to take the bipartite graph where  is joined to  if  and find its stationary distribution. It’s not clear to me what use that would be, given that ultimately we are interested in the uniform distribution on  , but at least it feels pretty natural and I think satisfies the isomorphism condition.

January 26, 2016 at 8:17 am

Let me try and recast that constructively. Let  be a union-closed family with ground set  . Choose an arbitrary  . Then define a sequence  iteratively: given  , choose  uniformly at random from  , and then choose  uniformly at random from  . Then the hope is that for sufficiently large  the expectation of  is at least  .

Is that right? It would be interesting to test this out on some examples.

January 26, 2016 at 9:11 am

Hmm, that doesn’t work:  is an obvious counterexample.

January 26, 2016 at 12:54 pm

Ah yes, I need to work out what I meant by “stationary distribution” there, since it clearly wasn’t the limiting distribution of a random walk on the bipartite graph where you go to each neighbour with equal probability (and have a staying-still probability to make it converge), as that does not satisfy the isomorphism-invariance condition.

Let me begin with a completely wrong argument and then try to do a Google-page-rank-ish process to make it less wrong. The completely wrong argument is that a good way to choose an element  that belongs to lots of sets is to choose a random non-empty  and then a random  . Why might that be good? Because it favours elements that belong to lots of sets. And why is it in fact not good? Because it doesn’t penalize the other elements enough. The  example illustrates this: although we pick  with probability very slightly greater than 1/2, that is not enough to tip the average … oh wait, yes it is. We choose 1 with probability  and all other elements with probability  , so the expected number of sets containing a point is  .

So I need to work slightly harder to find a counterexample. I’ll do that this afternoon unless someone has beaten me to it.

January 26, 2016 at 2:23 pm

As a first step, let me try to find a nice expression for the expected number of sets in  that contain a random element of a random non-empty set in  . Let the non-empty sets in  be  and let  and  . Then if the randomly chosen set is  , the probability that  contains a random  is  , so the expected number of sets containing a random  is  and the expectation of that over all  is  , which is a fairly bizarre expression.
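The computation can be checked numerically. The sketch below (function names are hypothetical, and the small test family is chosen here for illustration) computes the expected number of sets containing a random element of a random non-empty set in two ways: directly from the induced distribution on elements, and as the average over non-empty sets A of the sum over all member sets B of |A ∩ B| / |A|.

```python
from fractions import Fraction

def element_distribution(family):
    """Pick a uniform non-empty set, then a uniform element of it."""
    nonempty = [s for s in family if s]
    dist = {}
    for a in nonempty:
        for x in a:
            dist[x] = dist.get(x, 0) + Fraction(1, len(nonempty) * len(a))
    return dist

def expected_sets_direct(family):
    """Expected number of member sets containing the random element."""
    dist = element_distribution(family)
    return sum(p * sum(1 for s in family if x in s) for x, p in dist.items())

def expected_sets_via_overlaps(family):
    """Average over non-empty A of the sum over B of |A & B| / |A|."""
    nonempty = [s for s in family if s]
    total = sum(Fraction(len(a & b), len(a)) for a in nonempty for b in family)
    return total / len(nonempty)

family = [frozenset(s) for s in [(), (1,), (1, 2), (1, 3), (1, 2, 3)]]
print(expected_sets_direct(family), expected_sets_via_overlaps(family))
```

Exact rational arithmetic is used so that the two expressions can be compared for equality rather than up to rounding.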

January 26, 2016 at 3:02 pm

Maybe it’s not as bad as all that actually. Let’s define the average overlap density with  to be the average of  over all  . This average is  , so the expectation at the end of the previous comment is the sum over all  of the average overlap density with  .

Although this quantity is not an isomorphism invariant, there are limits to what can be done to it by duplicating elements.

What about your example where  consists of all subsets of  that are either empty or contain  ? I think the average overlap density will be slightly greater than 1/2 (because almost all sets contain  ), but I can try to defeat that by duplicating the elements  many times. Equivalently, I will put a measure on the set  that gives measure 1 to all of  and measure  to  , and I will use that measure to define the overlap densities.

Now I need to be a little more careful with the calculations. I’ll begin by ignoring terms in  and hoping that the linear terms don’t cancel out. So if I pick a non-empty set  from the system, and if it has measure  , then the average overlap density with  will be

.

The last factor is there because there is a  probability of choosing the empty set.

For now I shall ignore that last factor, since it is the same for all sets, and I’ll put it back in at the end.

If  , then we can approximate  by  , while if  we get 1. So I find that I need to know the average of  when each  is chosen with probability  . I don’t know what this average is, and it doesn’t seem to have a nice closed form, so let’s call it  . That gives us an average overlap density (to first order in  ) of

.

Now remembering about the factor  again, we get

.

As  tends to zero, this tends to  , so it seems that we have a counterexample.

I think  should be around  by concentration of measure. If that is correct, then for  greater than around  we’ll get an average overlap density greater than 1/2. So we really do have to do a lot of duplication to kill off the  contribution. Of course, there may well be simpler examples where much less duplication is necessary.

January 26, 2016 at 3:12 pm

I should remark that implicit in all that is a simple observation, which is the following. Define the average overlap density of the entire set system  to be

,

where the average over  is over the non-empty sets but the average over  is over all sets in  . Then if the average overlap density is  , there must be an element contained in at least  of the sets. This is true for any set system and not just a set system that is closed under unions.

In the case of the system  the average overlap density is

, which is very slightly greater than 1/2.

So here is a question: are there union-closed set systems with arbitrarily small average overlap density?
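The observation is a pure averaging statement: the average overlap density equals the expected fraction of sets containing an element drawn by first choosing a uniform non-empty set and then a uniform element of it, so some element must do at least as well as that average. A small sketch (helper names are hypothetical, and the three test families are chosen here for illustration) checks the inequality, including on a family that is not union-closed.

```python
from fractions import Fraction
from itertools import combinations

def average_overlap_density(family):
    """Average of |A & B| / |A| over non-empty A and all B in the family."""
    nonempty = [a for a in family if a]
    total = sum(Fraction(len(a & b), len(a)) for a in nonempty for b in family)
    return total / (len(nonempty) * len(family))

def max_frequency_fraction(family):
    """Largest fraction of member sets containing a single element."""
    ground = set().union(*family)
    return max(Fraction(sum(1 for s in family if x in s), len(family))
               for x in ground)

def power_set(n):
    items = range(1, n + 1)
    return [frozenset(c) for k in range(n + 1) for c in combinations(items, k)]

examples = {
    "power set of [3]": power_set(3),
    "empty or containing 1, n = 4": [s for s in power_set(4) if not s or 1 in s],
    "not union-closed": [frozenset({1}), frozenset({2}), frozenset({3})],
}
for name, fam in examples.items():
    print(name, average_overlap_density(fam), max_frequency_fraction(fam))
```

For a power set the density is exactly 1/2, because a uniformly random subset meets any fixed non-empty set A in |A|/2 elements on average.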

January 26, 2016 at 4:33 pm

I think we can get an arbitrarily small average overlap density. Here’s an example:

Take n >> k. We take  and modify it a bit. Specifically, for every k-set $S \subset [n]$, define a new element  , and add it to exactly those elements of  which contain  .

This family is union-closed. Also, nearly every set in it is dominated by the new elements  , but each element  is only in one in every  sets. So the average overlap density is only a shade over  .

January 26, 2016 at 5:15 pm

I’m trying to understand this. Let’s take two disjoint $k$-sets  and  . Then the modified sets will be  and  . Their union will be  , which doesn’t seem to be of the form subset- -of-$[n]$ union set-of-all- -such-that- -is-a- -subset-of- . So either I’m making a stupid mistake (which is very possible indeed) or this family isn’t union closed.

January 26, 2016 at 5:19 pm

Oh! You are quite right, that family isn’t remotely union-closed. Back to the drawing board.

One particularly nice feature of this “average overlap density” strengthening is that the special exclusion for the family consisting only of the empty set becomes completely natural – as that family doesn’t have an average overlap density.

January 26, 2016 at 8:29 pm

I wonder if the average overlap density can ever be less than $1/2$.

It attains the value $1/2$ for power sets, as well as some others, e.g.  . But it seems hard to find examples where it’s less than $1/2$.

January 26, 2016 at 9:13 pm

My longish comment above with the  s in it was supposed to be an example of a union-closed family with average overlap density less than 1/2. It was a modification of an example of yours. To describe it more combinatorially, take a very large  and take sets  of size  and a set  of size 1, all these sets being disjoint. Then  consists of the empty set together with all unions of the  that contain  .

If I now pick a random non-empty set in the system, it consists of some union of the  with  together with the singleton  . The average overlap density with the set will be fractionally over 1/2 (by an amount that we can make arbitrarily small by making  as large as we choose) if we intersect it with a random other set of the same form, but when we throw in the empty set as well, we bring the average down to just below 1/2 (by an amount proportional to  ).

The “problem” with this set system is that it is a bit like the power set of $[m]$ with the empty set duplicated: in a sense that is easy to make precise, it is a small perturbation of that.

Now that I’ve expressed things this way, I’m starting to wonder whether we can do something more radical along these lines to obtain a smaller average overlap density. Roughly the idea would be to try to do a lot more duplication of small sets and somehow perturb to make them distinct.

Indeed, maybe one can take any union-closed set system  , blow up every element into  elements, take unions of the resulting sets with some singleton, and throw in the empty set. It looks to me as though by repeating that with suitably large  one could slowly bring down the average intersection density to something arbitrarily small, but I haven’t checked, as I’m thinking as I write.

January 26, 2016 at 9:21 pm

Ah yes, I was making a mistake: ignore my last comment!

January 26, 2016 at 9:53 pm

Actually, I’m a bit puzzled by your calculation. Suppose  . (You don’t make any assumptions about  .) Then the set system (in full, before you replace the duplicates with the  weighting) has the shape  where  is large. But we already know that this has average overlap density more than 1/2. This seems inconsistent with your conclusion, but I’m not sure where the mistake is.

January 26, 2016 at 10:07 pm

Let me see what happens with  . Now the sets (in the second version) are  , and  for two disjoint sets  and  of size  (disjoint also from  ).

The average overlap density with  is  . The average overlap density with  is  , as it is with  . And finally the average overlap density with  is  . To a first approximation (taking  to be infinity) we get a total average of 1/2, so the initial sanity check is passed. But again it looks as though the averages are very slightly larger.

I have to do something else now, but I’m not sure what’s going on here either. But it’s a win-win — either the counterexample works, or the idea of using this as the basis for an averaging argument lives for a little longer.
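For what it’s worth, the sanity check can be redone exactly by machine. Below is a minimal sketch, assuming my reading of the second version of the construction: m disjoint blocks of size r plus one extra point, with the family made up of the empty set and every union of blocks together with the extra point. It also assumes that the average overlap density is the average of |A∩B|/|A| over non-empty A and all B in the family. The names `blown_up_family`, `average_overlap_density`, `m` and `r` are mine, not from the thread.

```python
from fractions import Fraction
from itertools import combinations


def blown_up_family(m, r):
    """Empty set plus all sets {x} ∪ (union of some of m disjoint blocks of size r)."""
    x = ("x", 0)  # the extra point; a hypothetical label
    blocks = [frozenset((i, j) for j in range(r)) for i in range(m)]
    family = {frozenset()}
    for size in range(m + 1):
        for chosen in combinations(blocks, size):
            family.add(frozenset({x}).union(*chosen))
    return family


def average_overlap_density(family):
    """Average of |A ∩ B| / |A| over non-empty A and all B (assumed definition)."""
    nonempty = [a for a in family if a]
    total = sum(Fraction(len(a & b), len(a)) for a in nonempty for b in family)
    return total / (len(nonempty) * len(family))


if __name__ == "__main__":
    # Exact values for m = 2 and a few block sizes.
    for r in (1, 10, 1000):
        value = average_overlap_density(blown_up_family(2, r))
        print(r, value, float(value))
```

Under these assumptions the case m = 2, r = 10 comes out as 604/1155, a little above 1/2, which agrees with the impression that the averages are very slightly larger than 1/2.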

January 27, 2016 at 9:12 am

I think I’ve seen my mistake, at least in the second version of the argument (Jan 26th, 9:13pm). I comment there that the set system “duplicates” the empty set. That is indeed true, but it gives rise to two competing effects. On the one hand, for each set that properly contains  , the average overlap density is indeed very slightly less than 1/2 (because both  and  intersect it in a set of density almost zero). On the other hand, the average intersection density with the set  is almost 1, which has a tendency to lift things up.

Ah, and in the first version of the argument I made a stupid mistake in my algebra. The expression towards the end for the average overlap density should have been

the difference being the “+2” which I gave as “+1”. This is enough to tip the balance from being  to  .

So I’m definitely back to not knowing of an example where the average overlap density is less than 1/2.

January 27, 2016 at 9:53 am

For what it’s worth, I’ve run a program that generates random separating UC families and computes the a.o.d., and it hasn’t found any counterexamples, with equality occurring only for power sets.

(I don’t have a feeling yet for how much trust to place in such experiments. The generation algorithm I used depends on parameters  . It starts with a ground set of size  , generates  random subsets each containing each element with probability  , and forms the smallest separating UC family containing them. I’ve tried various combinations of parameters, but haven’t run the program for very long. Anyway the experiment indicates that any counterexample would have to be quite structured.)

January 27, 2016 at 10:23 am

Additionally, for what little it’s worth, there are no counterexamples in  (which is the largest powerset in which it is reasonable to enumerate the union-closed families).

January 27, 2016 at 11:28 am

Small correction to my description of the random generating algorithm: it doesn’t form the ‘smallest separating UC family containing’ the random sets (there may not be one); it forms the smallest UC family containing them and then reduces it by removing duplicates.
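In case anyone wants to repeat the experiment, here is a minimal sketch of the procedure as described: generate random subsets, take the smallest union-closed family containing them, then merge elements that belong to exactly the same sets. This is not the original program. The parameter names `n`, `k`, `p` and all function names are assumptions of mine, as is the reading of the average overlap density as the average of |A∩B|/|A| over non-empty A and all B.

```python
import random
from fractions import Fraction


def union_closure(generators):
    """Smallest union-closed family containing the given sets."""
    family = set()
    for g in map(frozenset, generators):
        # The family so far is union-closed, so adding g and g ∪ s keeps it closed.
        family |= {g | s for s in family} | {g}
    return family


def remove_duplicate_elements(family):
    """Keep one representative of each class of elements lying in the same sets."""
    signature = {}
    for s in family:
        for x in s:
            signature.setdefault(x, set()).add(s)
    representative = {}
    for x in sorted(signature):
        representative.setdefault(frozenset(signature[x]), x)
    keep = set(representative.values())
    return {s & keep for s in family}


def average_overlap_density(family):
    """Average of |A ∩ B| / |A| over non-empty A and all B (assumed definition)."""
    nonempty = [a for a in family if a]
    if not nonempty:
        raise ValueError("undefined for a family with no non-empty set")
    total = sum(Fraction(len(a & b), len(a)) for a in nonempty for b in family)
    return total / (len(nonempty) * len(family))


def random_uc_family(n, k, p, seed=None):
    """k random subsets of range(n), each element kept with probability p."""
    rng = random.Random(seed)
    gens = [frozenset(x for x in range(n) if rng.random() < p) for _ in range(k)]
    return remove_duplicate_elements(union_closure(gens))


if __name__ == "__main__":
    for seed in range(5):
        family = random_uc_family(n=8, k=5, p=0.5, seed=seed)
        if any(family):  # skip the degenerate case where every set is empty
            print(seed, len(family), float(average_overlap_density(family)))
```

Exact fractions are used so that equality with 1/2 can be detected without rounding issues. The closure can have up to 2^k sets, so k has to stay small.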

January 27, 2016 at 3:35 pm

I’ve had a thought for a potential way of making the conjecture nicer, but it risks tipping it over into falsehood.

Suppose we take two non-empty sets  and  . Their contribution to the average overlap density is

This is slightly unpleasant, but becomes a lot less so if instead we replace it by the smaller quantity

What’s nice about that is that, disregarding the factor 2, it is the inner product of the functions  and  . What’s more, those functions are very natural: they are just the  normalizations of the characteristic functions of  and  . Let me denote the normalized characteristic function of  by  .

Then I think another conjecture that implies FUNC, at least for set systems that do not contain the empty set, is that if  is a union-closed family of that type, then

But of course the left-hand side can be simplified: it is just

Just on the point of posting this comment, I think I’ve realized why this is a strengthening too far. The rough reason is that the previous conjecture was more or less sharp for the power set of a set  , and then when you replace the average intersection density by this more symmetrical version you’ll get something smaller, because you’ll often have sets  and  of different size. But that doesn’t rule out proving something interesting by this method. For example, perhaps there is a constant  such that

for every union-closed set system  .

January 27, 2016 at 3:44 pm

A stress test. Let’s take a system  of nested sets with very rapidly increasing size. Then the inner product of any two of the  will be almost zero unless the two are the same set. So unfortunately this strengthened conjecture, even in its weakened form, is dead.
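The stress test is easy to check numerically. Here is a minimal sketch, assuming the normalization is the L2 one, so that the inner product of the normalized characteristic functions of A and B is |A∩B|/sqrt(|A||B|). For a nested chain the intersection is just the smaller set, so only the sizes matter. The function name and the choice of sizes 10^(4i) are mine.

```python
import math


def average_normalised_inner_product(sizes):
    """Mean over ordered pairs of |A ∩ B| / sqrt(|A| |B|) for a nested chain.

    `sizes` lists the sizes of the sets in the chain; for nested sets
    |A ∩ B| = min(|A|, |B|), so the sets themselves are not needed.
    """
    n = len(sizes)
    total = sum(min(a, b) / math.sqrt(a * b) for a in sizes for b in sizes)
    return total / n ** 2


if __name__ == "__main__":
    for n in (5, 10, 20):
        sizes = [10 ** (4 * i) for i in range(1, n + 1)]
        print(n, average_normalised_inner_product(sizes))
```

With n sets in the chain the diagonal pairs contribute about 1/n and everything else is tiny, so the average drops towards zero as the chain gets longer, which is why no fixed constant can work.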

January 27, 2016 at 5:47 pm

Some sort of vector formulation is still possible, but it doesn’t really change much I think. We can define  to be the  -normalized characteristic function (that is,  ), and then the claim is that

The reason this doesn’t help much is that the function on the left-hand side is simply the probability measure you get by choosing a random  and then a random  , while the function on the right-hand side is just the function that counts how many sets you belong to. So it is not enough of a reformulation to count as a different take on the problem.

January 24, 2016 at 9:53 pm |

Over on the G+ post (https://plus.google.com/+TimothyGowers0/posts/QJPNRithDDM) one of the commenters suggested looking at the graph-theoretic formulation of Bruhn et al. (Eur. J. Comb. 2015), which I just added to the Wikipedia article (https://en.wikipedia.org/wiki/Union-closed_sets_conjecture#Equivalent_forms). According to that formulation, it’s equivalent to ask whether every bipartite graph has two adjacent vertices that both belong to at most half the maximal independent sets. So one possible class of counterexamples would be bipartite graphs in which both sides are vertex-transitive but in which the sides cannot be swapped, such as the semi-symmetric graphs. It seems like the union-closed sets conjecture would imply that such highly symmetric bipartite graphs always have another hidden symmetry, in that the vertices on both sides of the bipartition all appear in equally many maximal independent sets. Is something like this already known?
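For anyone who wants to test small graphs against this formulation, here is a minimal brute-force sketch. It takes the statement exactly as worded above (an edge whose two endpoints each lie in at most half of the maximal independent sets) and enumerates all vertex subsets, so it is only usable for very small graphs. The function names are mine.

```python
from itertools import combinations


def maximal_independent_sets(vertices, edges):
    """All maximal independent sets, found by exhaustive search."""
    adjacent = {v: set() for v in vertices}
    for u, v in edges:
        adjacent[u].add(v)
        adjacent[v].add(u)
    independent = []
    for size in range(len(vertices) + 1):
        for candidate in combinations(vertices, size):
            s = set(candidate)
            if all(adjacent[v].isdisjoint(s) for v in s):
                independent.append(s)
    # Maximal means not properly contained in another independent set.
    return [s for s in independent if not any(s < t for t in independent)]


def rare_edges(vertices, edges):
    """Edges whose endpoints both lie in at most half the maximal independent sets."""
    mis = maximal_independent_sets(vertices, edges)
    count = {v: sum(v in s for s in mis) for v in vertices}
    return [(u, v) for u, v in edges
            if 2 * count[u] <= len(mis) and 2 * count[v] <= len(mis)]


if __name__ == "__main__":
    # Path a - b - c: the maximal independent sets are {b} and {a, c}.
    vertices = ["a", "b", "c"]
    edges = [("a", "b"), ("b", "c")]
    print(maximal_independent_sets(vertices, edges))
    print(rare_edges(vertices, edges))
```

A semi-symmetric graph such as the Folkman graph has 20 vertices, which is still within reach of this kind of exhaustive search, though a smarter enumeration would be needed for anything much larger.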

January 27, 2016 at 8:59 am

Apologies — it took me a while to notice this comment in my moderation queue (where it went because it contained two links).

January 24, 2016 at 11:02 pm |

Speaking of introducing probability measures, here’s another direction that might be worth thinking about.

The standard conjecture implicitly uses the counting measure to compare the size of  to the size of  . What happens if one replaces this counting measure by something else?

An extreme case is the probability measure supported on  . In this case, there’s obviously no  such that sampling from the measure results in an  -containing set with probability  .

But explicitly excluding the empty set yields a seemingly more sensible question:

Conjecture: suppose that  is a union-closed family and  is a probability measure on  . Then there exists some  with  .