The title of this post is meant to serve various purposes. First and foremost, it is a cheap trick designed to attract attention. Secondly, and relatedly, it is a nod to the amusing events of the last week or so. [Added later: they were from the last week or so when I wrote that sentence.] But there is a third reason that slightly excuses the first two, which is that the current state of play with the EDP project has a very P≠NP-ish feel to it. Indeed, that has been the case for a while: we are trying to find a clever decomposition of a diagonal matrix, which is a difficult search problem, even though we can be fairly confident that if somebody came up with a good candidate for a decomposition, then checking that it worked would be straightforward. And just in case that is not true, let’s make it trivially true by saying that we are searching for a decomposition that can easily be checked to work. If it can easily be checked to work, then it can easily be checked that it can easily be checked to work (the algorithm being to try to check it and see whether you succeed). But now I want to air a suggestion that reduces the search problem to another one that has similar properties but may be easier.

A brief word also on why I am posting again on EDP despite the fact that we are nowhere near 100 comments on the previous post. The main reason is that, now that the rate of commenting has slowed to a trickle, it is far from clear that the same rules should apply. I think the 100-comment rule was a good sufficient condition for a new post, but now I think I want to add a couple more: if there is something to say and quite a long time has elapsed since the previous post, or if there is something to say that takes a while to explain and is not a direct continuation of the current discussion, then it seems a good idea to have a new post. And both these conditions apply.

[Added later: this is a rather strange post written over a few weeks during which my thoughts on the problem were constantly changing. So everything that I say, particularly early on, should be taken with a pinch of salt as I may contradict it later. One approach to reading the post might be to skim it, read the very end a bit more carefully, and then refer back to the earlier parts if you want to know where various ideas came from.]

A simple question about functions on $\mathbb{N}$.

Let me cut to the chase and ask a question that I find quite nice and that can be understood completely independently of all the discussion of EDP so far. I will then try to motivate the question and sketch a proof that a positive answer to this question would imply EDP.

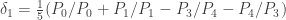

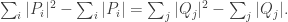

The question, in isolation, is this. Does there exist, for every constant a sequence

with the following properties?

1.

2. for every

3.

Without the third condition, the answer is easily seen to be yes, since one can take and all the other

to be zero.

After writing that question, I had to stop for a day or so, during which I realized that the answer was a trivial no. Indeed, if and

then

so

which rules out condition 3.

How could I have asked such a dull question? Well, the question may be dull but I think there remains some interest in the motivation for it, especially as it leads to a related question that may be harder to answer but have equal potential to be useful. If you want to cut to the new chase, then skip to the bottom of this post, where I shall ask a different question that again involves finding a function that satisfies various conditions (though this time the function is of two variables).

Where the question came from.

But if you would prefer some motivation, then here is how the first question arose. We would like to find a decomposition of the identity on the rationals. (By this I mean the matrix where

and

range over

) We want this decomposition to take the form

where each

and each

is the characteristic function of a HAP, and the sum of the

is small. (What precisely this smallness condition is I’ll discuss later.)

Now let me consider briefly a very general question related to the facetious title of this post: how is it that mathematicians often manage to solve difficult problems with an NP flavour? That is, how is it that when they are faced with searching for an X that does Y, and most Xs clearly don’t do Y, they nevertheless often succeed in finding an X that does do Y? Obviously the answer is not a brute-force search, but what other kinds of searches are there?

One very useful method indeed is to narrow down the search space. If I am looking for an X that does Y, then one line of attack is to look for an X that does Y and Z. If I am lucky, there will exist Xs that do both Y and Z, and the extra condition Z will narrow down the search space enough to make it feasible to search.

One of the best kinds of properties Z to add is symmetry properties, since if we insist that X is symmetric in certain ways then we have fewer independent parameters. For example, if we want to find a solution to a PDE, then it may be that by imposing an appropriate symmetry condition one can actually ensure that there is a unique solution, and finding something that is unique is often easier than finding something that is far from unique (because somehow your moves are more forced).

Let us write for the characteristic function of the HAP

Then we are looking for a decomposition as a linear combination of functions of the form

which we have been writing as

What symmetry conditions can we impose?

An obvious one to start with is that we could insist that and

That is, we could attempt to find a decomposition of the form

(Note that

ranges over the rationals and

over the positive integers.) Actually, I think that’s more of a simplicity condition than a symmetry condition, but the same cannot be said of the next condition, which is to insist that

should be independent of

This tells us that the decomposition “looks the same everywhere” and takes the form

Now what is the smallness property we need of the coefficients ? I won’t fully justify this here, because I have already discussed how to deal with the fact that the rationals form an infinite set. Let me simply say that if the

for which

are bounded, then we are done if

can be made arbitrarily small. (Roughly speaking, we are interested in the ratio of the average diagonal entry to the average

per

Since the average diagonal entry of the identity is 1 and

depends only on

we are interested in

)

Let be the function

Then we would like to express the identity as a linear combination of the

with coefficients with a small absolute sum. A good start might be to calculate

It is the number of

such that

and

for some positive integers

If

and

are minimal integers such that

then the only

that “divide”

and

are numbers of the form

where

and

We then get

and

so we need

to be at most

and also at most

But the maximum of

and

is

so we need

to be at most

Since any positive integer satisfying this condition works, we find that

One nice thing to observe about this is that it depends only on and not on

and

themselves. This turns our question into a one-dimensional one (but unfortunately it is the question above that has a boring answer).
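The counting argument above is easy to sanity-check by brute force. The sketch below assumes that the HAPs in question are the sets $\{d, 2d, \dots, nd\}$ with $d$ a positive rational, and that the resulting count is $\lfloor n/\max(a,b)\rfloor$, where $a/b$ is $x/y$ written in lowest terms. Both are readings of the argument rather than formulas quoted from it, and the function names are hypothetical.

```python
from fractions import Fraction


def count_common_differences(x, y, n):
    """Count rationals d > 0 such that x and y both lie in {d, 2d, ..., n*d}."""
    x, y = Fraction(x), Fraction(y)
    count = 0
    for r in range(1, n + 1):
        d = x / r  # x = r*d forces d = x/r, so each r gives a distinct candidate d
        s = y / d  # y must equal s*d for some integer s with 1 <= s <= n
        if s.denominator == 1 and 1 <= s <= n:
            count += 1
    return count


def closed_form(x, y, n):
    """Assumed closed form: floor(n / max(a, b)), with a/b = x/y in lowest terms."""
    ratio = Fraction(x) / Fraction(y)  # Fraction reduces to lowest terms
    return n // max(ratio.numerator, ratio.denominator)


def agree_on_grid(max_int=6, n=25):
    """Compare brute force and closed form for all x = p/q, y = u/v with small entries."""
    values = [Fraction(p, q) for p in range(1, max_int + 1) for q in range(1, max_int + 1)]
    return all(
        count_common_differences(x, y, n) == closed_form(x, y, n)
        for x in values
        for y in values
    )
```

For example, with $x = 3/2$, $y = 5/2$ and $n = 20$ both functions return 4, and the value does not change if $x$ and $y$ are rescaled by a common factor, which is the one-dimensionality the paragraph points out.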

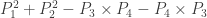

But let’s see why that is. We are now in a position where we want to write the identity as a linear combination of the functions But now we know that if we write

what we are trying to do is write the function

as a linear combination of the

(since we want

if

and 0 otherwise).

How are we to deal with the functions given that they have unpleasant integer parts involved? There turns out to be a nice answer to this: look at the differences

We see that

if

is a multiple of

and 0 otherwise. Now

where

So the condition

translates to the condition

(interpreting

to be 0). And

which we want to be 1 when

and 0 otherwise, which is where conditions 1 and 2 come from.
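The two facts used in this step can be checked numerically. The sketch below assumes, as a reading of the passage (the formulas are not visible in this copy), that the functions with integer parts are $f_n(a) = \lfloor n/a \rfloor$. The names `lam` (the original coefficients) and `mu` (their tail sums) are hypothetical.

```python
import random


def f(n, a):
    """Assumed form of the function with integer parts: floor(n / a), so f(0, a) = 0."""
    return n // a


def tail_sums(lam):
    """Return mu with mu[n] = lam[n] + lam[n+1] + ... (lam is indexed from 1; lam[0] is unused)."""
    mu = [0] * (len(lam) + 1)
    for n in range(len(lam) - 1, 0, -1):
        mu[n] = mu[n + 1] + lam[n]
    return mu


def check(N=30, seed=0):
    """Verify the difference identity and the summation by parts for random coefficients."""
    rng = random.Random(seed)
    lam = [0] + [rng.randint(-5, 5) for _ in range(N)]
    mu = tail_sums(lam)
    for a in range(1, N + 1):
        for n in range(1, N + 1):
            # The difference f_n - f_{n-1} is the indicator of "a divides n".
            assert f(n, a) - f(n - 1, a) == (1 if n % a == 0 else 0)
        direct = sum(lam[n] * f(n, a) for n in range(1, N + 1))
        by_parts = sum(mu[n] * (f(n, a) - f(n - 1, a)) for n in range(1, N + 1))
        along_multiples = sum(mu[k] for k in range(a, N + 1, a))
        assert direct == by_parts == along_multiples
    return True
```

So a linear combination of the original functions, evaluated at $a$, is just the sum of the tail sums over the multiples of $a$, which is why the conditions end up being about sums along multiples.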

If we could get conditions 1-3 then I’m pretty sure we’d have a solution to EDP. But we can’t, so where does that leave us? In particular, are we forced to give up the very appealing idea of having a decomposition that is “the same everywhere”?

Rectangular products.

Actually no. It shows that we cannot combine the following two features of a decomposition: being the same everywhere, and using only “square” products — that is, products of the form rather than more general “rectangular” products of the form

What happens if we allow ourselves a bit of extra generality? We would like to do this in a minimal way, so for the time being let us assume that

and

have the same common difference.

At first it may seem as though we gain nothing, because there is a sort of polarization identity operating. Observe first that if we decompose as a sum of matrices

then we can replace each

by its symmetrized form

and we will have a decomposition of

into symmetric parts. So without loss of generality we can assume that the coefficient of

in any decomposition is the same as the coefficient of

If

is longer than

then we can now use the fact that

to replace the rectangular products by a comparably small combination of square products. However, there is an important difference, which is that we are now allowing ourselves (square) products of HAPs of the form Perhaps surprisingly, this gives us a large amount of extra flexibility.

To demonstrate this, I need to go through calculations similar to the ones above, but slightly more complicated (though with a “magic” simplification later that makes things nice again). First, it turns out to be convenient to define to be the characteristic function of the HAP

(One indication of this is that it has length

or to be more accurate

a piece of pedantry that turns out to be unexpectedly important later.) Now let us define

to be

We can calculate

in a similar way to the way we calculated

earlier. Let

be minimal such that

and let

be the largest rational that divides both

and

which tells us once again that

and

For

to contribute to the sum, we again need

to be of the form

for some positive integer

but now we need

and

to lie in the interval

Without loss of generality

Then our requirements are that

and

Since

is an integer, this is equivalent to requiring that

and

The number of that satisfy this is obviously

Again, the max with zero is extremely important to us, and not just because it makes the expression correct. So now we have our formula:

As in the previous one-dimensional case, we have ugly integer parts to contend with, not to mention the max with zero. But once again we can simplify matters by looking at telescoping sums and the “derivative” of our function, except that this time we want two-dimensional analogues. What we shall do is this (I think it is fairly obviously the natural thing to do so I won’t try to justify it in detail). Define a function by

We would now like to express an infinite linear combination as a linear combination of the

(Note that

is identically zero if

Hence the range of summation.) Since we don’t actually know what the

are, let us just imagine that we have some coefficients

that satisfy

Then

But turns out to be something we can understand very well, because the functions

are unexpectedly simple. Recall that

where and

are minimal such that

So in principle we can calculate

This looks unpleasant until we make a couple of simple observations. First of all, note that

is constant on rectangles of sidelengths

and

(Here I am taking

and

fixed and varying

and

) More precisely, there is a tiling of

into such rectangles, and

is constant on each of these rectangles. As you go horizontally from one rectangle to another to its right,

increases by 1, and as you go vertically upwards it decreases by 1. If you put all these facts together, you find that

on all multiples of

and is zero everywhere else.
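Here is a small numerical check of that claim, under assumptions that should be stated loudly because the formulas are missing from this copy. I assume the HAPs are $\{(m+1)d, \dots, nd\}$, that $a \le b$ are coprime with $x/y = a/b$, and that the count is $\max\{0, \lfloor n/b\rfloor - \lfloor m/a\rfloor\}$. The function names and the particular sign convention for the mixed difference are mine.

```python
from fractions import Fraction


def brute_count(x, y, m, n):
    """Count rationals d > 0 such that x and y both lie in {(m+1)d, ..., n*d}."""
    x, y = Fraction(x), Fraction(y)
    count = 0
    for r in range(m + 1, n + 1):
        d = x / r  # x = r*d forces d = x/r
        s = y / d  # y must equal s*d with m+1 <= s <= n
        if s.denominator == 1 and m + 1 <= s <= n:
            count += 1
    return count


def F(m, n, a, b):
    """Assumed closed form of the count, valid for coprime a <= b."""
    return max(0, n // b - m // a)


def mixed_difference(m, n, a, b):
    """Discrete mixed second difference of F in the variables (m, n)."""
    return F(m, n, a, b) - F(m - 1, n, a, b) - F(m, n - 1, a, b) + F(m - 1, n - 1, a, b)


def support(a, b, size):
    """All points of [1, size]^2 where the mixed difference is non-zero, with its value."""
    result = {}
    for m in range(1, size + 1):
        for n in range(1, size + 1):
            value = mixed_difference(m, n, a, b)
            if value != 0:
                result[(m, n)] = value
    return result
```

With $a = 2$, $b = 3$ and a $12 \times 12$ grid, the support is exactly the four multiples $(2,3), (4,6), (6,9), (8,12)$. With the difference taken as above the value at each of them is $-1$; the sign depends on the convention chosen for the difference, so it is the support that should be compared with the text. Without the max with zero the function would be a sum of a function of $m$ and a function of $n$, and the mixed difference would vanish identically.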

What does this tell us about the coefficients ? Well, we want

to be 1 if

and

otherwise. It follows that whenever

and

are coprime, with

then we want

to be 1 if

and

otherwise. This is very similar to our one-dimensional criterion, but with the huge difference that the sets along which we are summing the function

are disjoint. (They are lines through the origin, or rather intersections of those lines with

)

We also have a smallness criterion. Recall that in the one-dimensional case we needed to be arbitrarily small (interpreting

as 0). Here we need the very similar property that

is arbitrarily small. Let me briefly remark that there is nothing to stop us defining however we like for values of

with

and it will be convenient to exploit this.

So there is a rather simple looking question: can we find a function such that the “

-norm of the mixed partial derivatives” is arbitrarily small, the sum along the diagonal is 1, and the sum over all other lines of positive rational gradient is 0?

Somehow this question doesn’t feel as though it should be that hard, since the constraints don’t seem to interact all that much. So it would be natural to think that either there is a simple reason for no such existing, just as there was in the one-dimensional case, or there is a fairly simple construction of such an example.

Solving the two-dimensional question.

If there is a construction, how might one expect it to work? There are two reasonable possibilities: either one writes something quite clever straight down (perhaps involving trigonometric functions somehow to get the sums to be zero along the lines of gradient not equal to 1), or one goes for a just-do-it proof. My feeling (with the benefit of some hindsight) is that there are few enough constraints for a just-do-it approach to be appropriate, and in any case attempting a just-do-it proof can hardly fail to increase one’s understanding of the task, whether or not the attempt succeeds.

I should interrupt what I’m writing to say that I’m almost certain that a just-do-it approach works. By that I mean that I’m sitting here about to write down what I think is a correct proof, but that proof has been sitting in my head for the last day or so and has not been written down. And I’m pretty sure that my arguments that this would solve EDP can be tightened up and turned into a rigorous proof too, though that will need to be checked very carefully. So right now I don’t see why we don’t have a complete proof of EDP.

I should also at this point make a general remark about Polymath. I’ve been spending quite a lot of time thinking about the problem on my own, which is contrary to the spirit of Polymath and therefore demands an apology — but also an explanation. The explanation is that I have been trying to write this post for a long time — I started it nearly a week ago — but my efforts have been interrupted by plane journeys and ICM activities, including blogging the ICM. However, plane journeys, long waits for opening ceremonies, lonely moments in my hotel room, etc., are very conducive to mathematical thought, and since most of the argument I am writing here is rather simple (it was just a question of finding the right idea to get it started), I have found that the length of what I need to put in the post has been increasing faster than the length of the post itself. And one other mitigating factor is that there has been very little reaction to my recent comments on the previous post, where I first set out some of this approach (see in particular the two most recent comments), so I feel more entitled to go it alone for a while than I would have if the project had been roaring ahead at the sort of speed it was going at earlier in the year. Needless to say, the proof, if it turns out to work, is still very much a joint effort — this most recent argument depends crucially on the insights that have emerged from the more active discussion phase — and will be another paper of D. H. J. Polymath.

I must repeat that this post is being written over the course of many days. Several hours have passed since I finished the last paragraph, and during them I thought a bit harder about the step I was most worried about, which was dealing with the fact that the rationals are infinite and that at some point one must do some kind of truncation. I now think that that step will be more problematic than I had thought, and it seems to me to be about 50-50 whether it will be possible to get it to work. Having said that, I feel as though something non-trivial has emerged from the argument I shall complete in a moment, and I will be quite surprised (not to mention disappointed) if it leads nowhere. What I’m really saying is that I have an argument that does exactly what seems to be needed, except that the sums involved are a bit too formal. Now it could be that establishing convergence in some appropriate sense is where the real difficulty of the problem lies. In that case, this approach will be much less useful than I thought. But I am hoping that it is more of a technical problem. No time to think about it right now as I am going to a performance of A Disappearing Number, a play about which I shall undoubtedly have more to write later.

WELL OVER A WEEK LATER

The first thing to say is that EDP definitely isn’t yet solved. I’ve also reformulated the problem again, in a way that I find clearer. I could give a long description of how the new perspective evolved from the one above, but I think instead I’ll just sketch what happened.

Solving the two-dimensional question, continued

The first thing to observe is that if we take the characteristic function of a rectangle, then the sum of the is 4, since the only contributions come from the four corners.
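The four-corners observation can be confirmed directly. This is a minimal sketch with hypothetical names; it assumes the rectangle lies strictly inside the grid being scanned, so that all four corners are seen.

```python
def rectangle_indicator(x0, x1, y0, y1):
    """Characteristic function of the integer rectangle [x0, x1] x [y0, y1]."""
    def g(m, n):
        return 1 if x0 <= m <= x1 and y0 <= n <= y1 else 0
    return g


def mixed_difference_l1(g, size):
    """Sum of |g(m,n) - g(m+1,n) - g(m,n+1) + g(m+1,n+1)| over 0 <= m, n < size."""
    total = 0
    for m in range(size):
        for n in range(size):
            total += abs(g(m, n) - g(m + 1, n) - g(m, n + 1) + g(m + 1, n + 1))
    return total
```

The mixed difference of a product of two one-dimensional indicators is the product of their differences, and each of those is non-zero at exactly two places, which is where the value 4 comes from regardless of how large the rectangle is.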

Therefore, all we have to do is this. We shall build up an infinite matrix as a linear combination of rectangles — that is, functions of the form where

and

are arithmetic progressions with common difference 1. We start by choosing a large integer

We then put entries

at every point

That ensures that the sum along the main diagonal is 1, provided that we do not do anything else to that diagonal. However, it also causes the sums along several other lines to be non-zero, so there is work to do. Note that the sum of coefficients so far is

since there is just one rectangle and its coefficient is

To do the rest of the work, let us enumerate all rationals greater than 1 as and deal with each gradient in turn. What “deal with” means is that we shall add in some very small multiple of a rectangle in such a way that the sum along the line of the gradient we are dealing with is now zero, and will agree that from then on we will not change any entry in that line. Can we do this? Well, let’s suppose that we are dealing with the gradient

So far, the sum of the entries of the matrix along the line of gradient

is

say. So we must find a rectangle that contains many integer points along the line of gradient

. And we also need it not to intersect any line of gradient

with

But if we go far enough up the line with gradient

we will find ourselves at a very great distance from the lines we are trying to avoid, so we can find a very large rectangle that is disjoint from those lines and contains many integer points of the form

If it contains

such points, then we make the coefficient of that rectangle equal to

Since we can get

to be as big as we like, we can get this coefficient to be as small as we like. So we have “dealt with” the gradient

and the problem is solved.

Why doesn’t that solve EDP?

Let me start by saying why I thought it might solve EDP. I thought that if the sum of coefficients associated with each was arbitrarily small, then that would be saying that in a certain sense the average weight of the coefficients was arbitrarily small. Since the average size of the diagonal entries is 1, this ought somehow to give us what we want.

To understand why this doesn’t work, and probably can’t be made to work, I find it helpful to reformulate the problem again. To do so, let us think about the operators once again. (These first came in fairly near the beginning of the section entitled “Rectangular products.”) In particular, let us think what one of these operators does to the function

that is 1 at

and 0 everywhere else.

In fact, let us be slightly more general, as it will be useful to us later. What is the value of at

For it to be non-zero we need

and

and then it is 1. So if we sum over all

then we get the number of

with that property. Now each

with that property is of the form

for some

so when we sum over

we are counting the number of ways of writing

as

for some

and some

That is, if we define to be the number of ways of writing

as

with

and

then

That is another way of saying that

where

is a multiplicative convolution.

If you don’t like following calculations but want to know what the moral of all that is, then here you are. Let us define the multiplicative convolution of two functions and

to be the function

given by the formula

Then the operator takes a function

to the multiplicative convolution of

with the function

which is itself the multiplicative convolution of the characteristic functions of the sets

and

(That is, as I’ve already said,

is the number of ways of writing

as a fraction with numerator in

and denominator in

)
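For readers who prefer to see the moral in executable form, here is a minimal sketch for finitely supported functions on the positive rationals, stored as dictionaries. It assumes the usual definition of multiplicative convolution, $(f * g)(x) = \sum_{yz = x} f(y) g(z)$, and checks that convolving the characteristic function of a set $A$ with that of the reciprocals of a set $B$ counts the representations of $x$ as a fraction with numerator in $A$ and denominator in $B$. The names are hypothetical.

```python
from collections import Counter
from fractions import Fraction


def mult_convolve(f, g):
    """(f * g)(x) = sum of f(y) * g(z) over pairs with y * z = x (finite supports)."""
    out = Counter()
    for y, fy in f.items():
        for z, gz in g.items():
            out[y * z] += fy * gz
    return out


def indicator(points):
    """Characteristic function of a finite set of positive rationals."""
    return Counter({Fraction(p): 1 for p in points})


def representation_count(A, B):
    """Value at x: number of ways of writing x as a/b with a in A and b in B."""
    return Counter(Fraction(a, b) for a in A for b in B)


def reciprocals(B):
    """The set {1/b : b in B}."""
    return [Fraction(1, b) for b in B]
```

For instance, with $A = B = \{1, 2, 3\}$ the value at 1 is 3, coming from $1/1$, $2/2$ and $3/3$.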

Now we’re trying to express the identity as a small linear combination of these convolution operators, so we can turn the problem into a one-dimensional problem: to write the function (which is the identity for the multiplicative convolution) as a small linear combination of functions

I am about 99% sure that if we could do that with a finite linear combination then we would have a proof of EDP. I used to think that “whatever works for finite should also work for infinite but with absolutely summable coefficients,” but it seems that this is wrong because even if the coefficients can be made very small, the functions themselves may be getting quite large in the norms we care about, so the convergence may be much too weak.

That does indeed seem to be the case here. If you convert the just-do-it argument above into an argument to express in terms of the functions

then you get pointwise (absolute) convergence, but that is not strong enough.

Let me just elaborate on the remark that this problem can be solved directly by means of a just-do-it argument. I won’t give the full argument, but I’ll just point out that for each rational you have the option of doing the following. You choose a very large integer

and a very much larger integer

You then set

and

Since

is much larger than

is very close to

for every

and

Moreover, the number of pairs

such that

is

(assuming that

and

are coprime, that is). So if we want to get rid of a value

at the point

then we can subtract the function

which has a small coefficient and is supported on only a very small neighbourhood of

What is not so good about it is that the norm of

is roughly

which is not small at all.

Thinking slightly further about this, I see that what I said above about pointwise convergence was a bit too pessimistic: for example, we can easily organize for the convergence to be uniform. And indeed, if we think of as being, in its broad shape, roughly like a set of size close … actually, what is it like?

Rational numbers sort of repel each other, so if we’ve devised and

such that all points in

are close to

then all points that are not actually equal to

will tend to have rather large denominators, and therefore not to occur very frequently. So let’s hazard a guess that for the purposes of thinking about

norms, we can think of

as having a spike at

where the value is

and

other values that are all around 1. That would mean that the pth power of the

norm was around

Thinking of

and

as constants, it looks as though we might expect some kind of good behaviour to kick in when

passes 2. Remembering that we’re actually interested in

times the

norm, we get something like

But if the value

we were trying to get rid of was small, we can multiply that by a small factor

Ah, but the problem is that there are an awful lot of those

s to worry about, and they add up in an

-ish way.

What that boils down to is that this kind of greedy keep-away-from-everything-else approach doesn’t give us any kind of useful convergence, which we need if we are to get the averaging argument to work. I realize that I haven’t been too clear about what kind of convergence would work, but there is one kind that is definitely OK, and that is if we have a finite linear combination. So let me now ask a completely precise question.

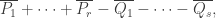

A sufficient (I’m almost sure) condition for EDP

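Let $A$ and $B$ be subsets of $\mathbb{N}$. We define $f_{A,B}$ to be the function whose value at $x$ is the number of ways of writing $x$ as $a/b$ with $a \in A$ and $b \in B$. I claim that EDP follows if for every $c > 0$ there exists a way of writing the function $\delta_1$ (which is defined on $\mathbb{Q}_+$ and takes the value 1 at 1 and 0 everywhere else) as a finite linear combination $\sum_i \lambda_i f_{A_i,B_i}$, where the $A_i$ and $B_i$ are intervals of positive integers and $\sum_i |\lambda_i| \le c$.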
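Since the whole point is that a candidate decomposition should be easy to check, here is a minimal sketch of such a checker. It assumes the reading of the condition in which the target is the function that is 1 at 1 and 0 elsewhere, each term is a coefficient times the representation-counting function of a pair of integer intervals, and the cost is the sum of the absolute values of the coefficients. The data layout and names are hypothetical.

```python
from collections import defaultdict
from fractions import Fraction


def f_intervals(I, J):
    """I = (lo, hi) and J = (lo, hi) are inclusive integer intervals.

    Returns a dict mapping x to the number of pairs (a, b) with a in I, b in J, a/b = x.
    """
    counts = defaultdict(int)
    for a in range(I[0], I[1] + 1):
        for b in range(J[0], J[1] + 1):
            counts[Fraction(a, b)] += 1
    return counts


def evaluate(decomposition):
    """decomposition is a list of (coefficient, I, J) triples.

    Returns the combined function (zero values dropped) and the sum of |coefficient|.
    """
    total = defaultdict(Fraction)
    for lam, I, J in decomposition:
        for x, c in f_intervals(I, J).items():
            total[x] += Fraction(lam) * c
    combined = {x: v for x, v in total.items() if v != 0}
    cost = sum(abs(Fraction(lam)) for lam, _, _ in decomposition)
    return combined, cost


def is_delta_at_one(combined):
    """True if the combined function is 1 at 1 and 0 everywhere else."""
    return combined == {Fraction(1): Fraction(1)}
```

The trivial decomposition `[(1, (1, 1), (1, 1))]` passes the check with cost 1, so only decompositions with cost below 1 say anything new. Exact rational arithmetic is used throughout so that cancellation is tested exactly rather than up to rounding.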

Summary.

In this post I have been looking at the representation-of-diagonals approach over the rationals with the extra symmetry constraint that the HAP products are all of the form where

and that the coefficient of

is independent of

This symmetry condition forces the diagonal map we are trying to represent to be the identity. (As I write this it occurs to me that we might be able to take an arbitrary efficient representation of a diagonal matrix and average it over all multiples in order to obtain an efficient representation of the identity. So perhaps this “new” approach is equivalent to the old one — which would be quite encouraging as it would increase the chances that the new one can be made to work.)

Thinking through what this means, we find that we can reformulate the problem and obtain the question asked in the previous section.

Where next for EDP?

To finish, I’d like to ask if anyone has views about how they would like the EDP project to proceed. We are in uncharted waters at the moment: we have a project that started with a burst of activity — in fact it was sustained enough to count as more than just a burst — but now it has slowed right down and I’m not sure it will ever regain anything like its earlier intensity. And yet I myself am still enthusiastic about it, and perhaps I’m not alone in that.

My question is what should happen if it turns out that there are only two or three people, or even just one, who feel like working on the problem. In that case, there are various options. We could simply continue to post comments on this blog and not worry if there are very few participants or if the rate of posting is small. Or we could move the project to a different phase, more like a normal collaboration, with longer periods of private thought, but from time to time write comments or posts to make sure that any important developments are kept public (so that, in particular, anyone who wanted to join in would be free to do so). Or we could turn the project into a completely conventional collaboration, always with the understanding that the author of the eventual paper would be D. H. J. Polymath. And that’s not quite the most conventional it could go. The final possibility would be to close the project altogether, write a paper summarizing all the partial results, and then “free up” the problem for anyone who wants to think about it privately — if they used any of Polymath’s ideas they could simply refer to the paper that contained those ideas.

I think I favour keeping the project going as a Polymath project but with longer periods of private thought, but I don’t favour this strongly enough that I couldn’t be persuaded to change my mind if others feel differently. If that is the way we go, then my final question is, are there people out there who would like to be part of a small group of reasonably dedicated participants, or am I on my own now?

September 3, 2010 at 8:45 pm |

I have made some progress on the graph theoretic approach but I have not written anything up because of lack of time. If your offer still stands to make a post outlining how a new approach would work I’d be willing to give it a go, but I’m not sure if that would inject any new energy.

September 3, 2010 at 9:01 pm |

I think keeping this as a polymath project with longer periods of thought is a reasonable way to continue. This project interests me but I don’t know how much I can contribute to it. I don’t know what other people’s level of contribution will be.

September 4, 2010 at 9:37 am |

Since now it is the reverse from the beginning (when the rate of comments was driving the rate of new posts), there’s the new possibility of setting a fairly regular pace for new posts, like a real weekly workgroup. This might then have a beneficial effect on the commitment of the participants (allowing it to fit in one’s regular schedule).

So the transition to a polymath with longer periods of thought seems very natural. Also, keeping this as a polymath would allow students to still learn from your thought process, and any non-working ideas to be recorded. (I’m probably not able to contribute anything apart from a little numerics and plots, but would be very happy to learn how the problem can be solved).

September 4, 2010 at 11:08 am |

I don’t know whether everyone who wants to express an opinion about this has done so, but I’m not getting the impression that people are falling over themselves to participate fully, and I’m also getting the impression that if that is the case, then people wouldn’t mind if I worked privately but wrote regular posts to say how I was getting on and give details about any new thoughts I might have had — always on the understanding that anybody who wanted to contribute could at any stage join in, and that if suddenly four or five people became enthusiastic then the collaboration would revert to the old style of working.

Incidentally, I find that one of the good things about Polymath that is quite independent of the number of people participating is that it forces you to make your thoughts clear enough to be explained to other people. So even a monomath project has some value.

One other thing I wanted to say is that I sometimes worry that when I write a post such as the one above that contains some calculations, people are a little bit put off by the calculations and feel that to contribute they would need to invest a large amount of effort just to understand what on earth I was saying. I tried to write the last few sections so that one could understand those and ignore the rest of the post, but I realize that there is more that I could have said. So I’d like to ask whether this is partly the case. Should I be trying to write something like a paper, with precise results (along the lines of “If we could do A then we could do B”), precise conjectures, and plenty of chat in between? I’m tempted to do that anyway, because I think it could easily be modified to form part of whatever paper results from the project. (I’m assuming that there will be some kind of paper, whether or not the problem is solved.)

September 4, 2010 at 3:51 pm

Yes, I think it would be very interesting and useful to have “something like a paper” about where exactly you currently are and what the next steps ought to be.

September 5, 2010 at 7:20 pm

I’m still interested in the project but I haven’t had much time to devote to it lately.

September 5, 2010 at 8:03 pm

I’m now preparing a post in which I hope to give a rigorous proof of why the decomposition discussed above would imply EDP if it existed with an arbitrarily small sum of absolute values of the coefficients.

I think we now have a genuinely new avenue to explore, and that just like most of the other avenues it lends itself to a mixture of theoretical and numerical investigations.

September 4, 2010 at 11:55 am |

Do we have such a finite decomposition of  for any  ?

September 4, 2010 at 12:46 pm

That’s a good question, to which I do not know the answer. It fits well with something I was thinking a little earlier, which is that there might be more hope of finding interesting decompositions with this one-dimensional problem than there was with the earlier two-dimensional problem.

The general problem is to represent one vector  as a linear combination  with  as small as possible. I haven’t quite worked out what that is: is it simply a linear programming problem? Or is it a dual linear programming problem? In particular, is it a problem for which there are very efficient packages out there waiting to be used? If so, then perhaps there is a hope of using a mixture of theory and experiment to prove a lower bound for discrepancy that’s better than our current record of 3. But even if we didn’t manage that, I think any decomposition with  would be interesting. (I don’t rule out, however, that there is some fairly simple decomposition that gives something like  and that the real interest starts below that.)

One other thing I’m not quite clear about is the extent to which examples such as the sequences of length 1124 translate into constraints on the decompositions one can hope to find. The reason for that is that passing to the rationals means that there isn’t a completely instant quantitative translation from one problem to the other. In principle, that might be good news as it might mean that in order to obtain a decomposition with, say,  one would not have to look at functions with huge supports.

September 4, 2010 at 2:29 pm

It looks like a convex optimization problem with linear constraints, which should be solvable quite efficiently – although the number of variables is large (of order  if we consider all subintervals of  ). I’ll have a go at it with some small  and see what happens …

September 4, 2010 at 3:22 pm

I think one can get away with just  variables by considering functions just of the form  The reason is that we have a sort of polarization identity here. (I think I made the same point but in a different context somewhere in the post above.) First of all, note that the value of  at  is the same as the value of  at  Therefore, given any decomposition of  we can replace each  by  and obtain a symmetric decomposition: that is, one that always gives the same coefficient to  that it gives to

Next, notice that, equating sets with their characteristic functions, ![[r,s]\times[u,v]+[u,v]\times[r,s]](https://s0.wp.com/latex.php?latex=%5Br%2Cs%5D%5Ctimes%5Bu%2Cv%5D%2B%5Bu%2Cv%5D%5Ctimes%5Br%2Cs%5D&bg=ffffff&fg=333333&s=0&c=20201002) is equal to

To convert these functions into functions defined on  we just think of each point of  as a rational number and sum up. (By “sum up” I mean that for a rational like 2/3 we would add up the values at  etc.) Therefore,  is equal to

Thus, if we can decompose  into functions of the form  with sum of coefficients at most  then we can decompose into functions of the form  with sum of coefficients at most

It’s not clear to me whether for small  the gain you get from having only  variables is more or less important than that factor 4. But it certainly allows you to look at much larger  so seems worth trying. After all, if it is possible to decompose  into functions of the form  with a reasonably small constant  then one has a stronger result.

September 4, 2010 at 9:19 pm

Ah yes, that looks useful.

In fact the problem can be expressed as a linear optimization problem, at the expense of doubling the number of variables and adding some constraints: one just has to write  , with  , and minimize  .
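A sketch of the standard device this seems to be describing, for turning a sum of absolute values into a linear objective. The notation is an assumption and not the commenter’s own: $\lambda_i$ stands for the coefficients, the columns of the hypothetical matrix $A$ are the candidate functions, and $b$ is the target vector.

```latex
\begin{aligned}
\text{minimize}\quad   & \sum_i \bigl(\lambda_i^+ + \lambda_i^-\bigr) \\
\text{subject to}\quad & A\,(\lambda^+ - \lambda^-) = b, \\
                       & \lambda_i^+ \ge 0,\quad \lambda_i^- \ge 0 \quad \text{for all } i.
\end{aligned}
```

At an optimum at most one of $\lambda_i^+$ and $\lambda_i^-$ is nonzero for each $i$ (otherwise both could be reduced by the same amount), so with $\lambda = \lambda^+ - \lambda^-$ the objective equals $\sum_i |\lambda_i|$. That accounts for the doubling of the number of variables and the extra sign constraints.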

I’ve written a program in Python using the ‘cvxopt’ package to solve this. For a first attempt I allowed all possible pairs of intervals. It turns out that  is achievable, using subsets of  . The program came up with the solution:  ,  ,  ,  , and  (to abbreviate your notation slightly). It’s easy to check that this works.

I’ll do some more investigation …

September 4, 2010 at 9:22 pm

Sorry, I meant to write:

September 4, 2010 at 10:44 pm

Simple as it is, I find that example rather encouraging because it genuinely relies on the new symmetric set-up, in the sense that the function  is not supported on the diagonal of

I suppose one should check whether a tensor-power trick can be used to convert this example into one that gives you a constant  for arbitrary  It would be a bit of a miracle if it did.

Hmm … I can’t really do this in my head, but it feels as though the answer is that it doesn’t, because a product of HAPs is a multidimensional HAP. But I may check this just in case something can be got to work.

Hmm again … can one do anything by taking the kth multiplicative convolution power of both sides of your identity? The right-hand side would not immediately be of the right form, but it’s not instantly clear to me that it can’t be decomposed into something of the right form. We would have  terms, each multiplied by  so we would need to find a way of decomposing each term into functions of the desired form with coefficients adding up to significantly less than

I’m putting that idea up in an old-style Polymath spirit — I’m not sure when I’ll be able to get round to thinking about it properly.

September 5, 2010 at 7:54 am

A few more data. Optimizing over all possible pairs of intervals, new records for  are set at:  ( ),  ( ),  ( ),  ( ),  ( ).

Restricting to symmetric rectangles  , we first attain  at  . The solution here is:  ,  ,  ,  ,  ,  , and

where  is shorthand for  .

This attains  . The next record-breaker for the symmetric case is  , where we attain  , with a combination of  intervals each with coefficient  .

September 5, 2010 at 8:29 am

… And the next is  , where we attain  , with a combination of  intervals each with coefficient  .

And the next is  , where we attain  , with a combination of  intervals each with coefficient  . For the record:

September 5, 2010 at 9:30 am

Hmm, there’s a pattern in that last decomposition. It’s:

No time to think more about this now, but it seems quite encouraging …

September 5, 2010 at 12:31 pm

I find the mere existence of these decompositions, whether patterned or not (though obviously patterns dangle in front of us the hope of generalization), pretty encouraging, though at this stage it’s not clear how encouraging they are. One thing I’m also finding is that, despite the appeal of having a 1D formulation, it’s perhaps easier to go back to the (equivalent) 2D set-up with lines in  What I mean is that if we want to check that a decomposition  works, then the easiest way of doing so (by hand, that is) is to look at the function  and check that the sum along the main diagonal is 1 and the sum along all other lines through the origin is 0.

What I like about this is that in a certain sense it is strictly easier than what we were trying to do before. That is, before, we were trying to represent a diagonal as a linear combination of HAP products. Now all we need is that if you stand at the origin and do a sort of scan, it looks as though you have a diagonal matrix, even though in fact you may not. Another way of putting that is that you have lines going through the origin as test functions and if the inner product of  with any one of those lines is what it would be if you had a diagonal matrix, then you count the decomposition as being OK. And that’s a lot weaker than requiring the decomposition to give you a diagonal matrix.

What I’ll do when I get the chance, unless you’ve already done so by then, is examine that pattern and see if it can be generalized. I think it is no coincidence that the record occurred at a nice smooth number like 60: if you have pairs  where  and  are large primes, then they are hard to deal with, but the interval ![[54,60]](https://s0.wp.com/latex.php?latex=%5B54%2C60%5D&bg=ffffff&fg=333333&s=0&c=20201002) is full of smooth numbers. (OK there’s a bit of a problem with 59, but the contribution from ![[59,60]](https://s0.wp.com/latex.php?latex=%5B59%2C60%5D&bg=ffffff&fg=333333&s=0&c=20201002) deals with that.)

September 5, 2010 at 12:56 pm |

Alec, as you’ve probably worked out in the meantime for yourself, this idea brings us down to  . Why? Take any large natural number  , set  , and note that  , where ![P_r=[n/r-1, n/r]](https://s0.wp.com/latex.php?latex=P_r%3D%5Bn%2Fr-1%2C+n%2Fr%5D&bg=ffffff&fg=333333&s=0&c=20201002) for  , ![Q=[n-m, n-1]](https://s0.wp.com/latex.php?latex=Q%3D%5Bn-m%2C+n-1%5D&bg=ffffff&fg=333333&s=0&c=20201002) and ![R=[n-m, n]](https://s0.wp.com/latex.php?latex=R%3D%5Bn-m%2C+n%5D&bg=ffffff&fg=333333&s=0&c=20201002) . This yields a value of  for  , which, of course, tends to  as  goes to infinity.

September 7, 2010 at 10:02 am

Christian, I’ve just found this comment of yours amongst my spam. I’ve no idea why it went there as it didn’t seem to have any obvious spam-like features, so apologies for that.

One other small remark is that if you want the TeX to compile you have to write the word “latex” immediately after the dollar sign. For instance, to produce  I would write “$***** \int_0^1f(x)dx$” except that instead of “*****” I would write “latex”. I have edited your comment by inserting the “latex”s.

September 7, 2010 at 11:32 am

Tim, many thanks for editing the formulae, and for the explanation of how to do so myself in the future. The following equation is just meant as “experiment” without relevance to EDP:

September 5, 2010 at 1:40 pm |

Small remark: Alec’s pattern is interesting, but it’s not clear how to generalize it so as to decrease  . Since a small  means  , and since  governs the number of intervals while  is the number of times we hit the diagonal (if I understood correctly), then somehow we should have “not many” intervals. So I’m not sure what to do with the sum of many small intervals in Alec’s example (at least it shouldn’t grow too fast as  decreases).

September 5, 2010 at 3:20 pm

I’m not sure I fully follow what you are saying, but I agree with you to the extent that it looks very unlikely that one could solve the problem with one big interval that is cancelled out, except on the diagonal, by lots of small ones.

September 5, 2010 at 4:17 pm

Let me try to put what you say in my own words, because I’m not sure I know what you mean by  and  Suppose we have intervals  and coefficients  ( ) such that  sums to zero along every line with gradient not equal to 1. What value of  does it give us? Well, the sum along the main diagonal is  and the sum of the absolute values of the coefficients is  So we get  In the special case where each  is  we get  To make that more transparent, if the sum is  then we get  So we want a significant imbalance in the sizes of the  and

Another necessary condition is that the sum of  off the diagonal is zero. (To see this, just sum the sums over all non-diagonal lines.) In the case of the  and  this tells us that  So, roughly speaking, we want the squares of the sizes of the negatively counted intervals and the positively counted intervals to be well balanced, but the sizes themselves not to be well balanced.
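A small sketch of the bookkeeping in the last two paragraphs, under the assumption that the decomposition is a combination of squares $I_i\times I_i$ with integer coefficients. A square on an interval with $n$ points has $n$ diagonal points and $n^2-n$ off-diagonal points, which is all the code uses. The function name `summary` is hypothetical, reading the constant as the ratio of the two sums is an assumption, and the sample data (one interval of size 7 counted positively, one of size 6 and six of size 2 counted negatively) is modelled on the pattern around $[54,60]$ discussed earlier in the thread.

```python
from fractions import Fraction

def summary(terms):
    """terms: list of (coefficient, interval_size), one entry per square I x I."""
    # Each square on an n-point interval contributes n points on the main diagonal ...
    diagonal = sum(c * n for c, n in terms)
    # ... and n*n - n points off it; this total must vanish (necessary, not sufficient).
    off_diagonal = sum(c * (n * n - n) for c, n in terms)
    # Sum of the absolute values of the coefficients.
    total_weight = sum(abs(c) for c, _ in terms)
    # Assumed reading of the constant: total weight divided by |diagonal sum|.
    ratio = Fraction(total_weight, abs(diagonal)) if diagonal else None
    return diagonal, off_diagonal, total_weight, ratio

terms = [(1, 7), (-1, 6)] + [(-1, 2)] * 6
print(summary(terms))
```

Here the squared sizes balance ($42 = 30 + 6\cdot 2$ off-diagonal points) while the sizes themselves do not ($7$ against $6 + 12$), which is the kind of imbalance described above.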

A small thought is that this puts a number-theoretic constraint on the sizes of the  and  that could perhaps be used to narrow down the search, especially if one just searches for  sums.

Going back to Thomas’s point, if we have one big interval, of size  say, and try to cancel it out with lots of little intervals of bounded size, then it won’t work because we’ll need  little intervals in order to get the off-diagonal sum equal to zero, and then we have  and the imbalance in the sums of the sizes of the intervals equal to  so we will never do better than some absolute constant for  But if our little intervals have size  say, then we have roughly  of them, and an imbalance of more like  So that gives us

The point I’m making here is that for all we know it might be possible to prove EDP by finding one big interval and cancelling it out using lots of small intervals, provided only that the sizes of the small intervals tend to infinity. (I call them small because I don’t care if they have size  where  is the size of the big interval.)

September 5, 2010 at 5:13 pm

Sorry for the sloppy notation and poor wording, and thank you for these details, and the other constraints. Yes that’s what I had in mind: the interplay between the number of intervals  and the behaviour  on the diagonal.

September 5, 2010 at 8:20 pm

Yes, the pattern only generalizes in an obvious way to the extent of giving us  arbitrarily close to  . Indeed, for any  , we can write

since each of the off-diagonal terms in the  blocks is cancelled by a term in the difference between the two large blocks.

Perhaps this merely confirms Tim’s suggestion that the problem might only become interesting for  or something. But it seems worth asking whether we can generalize the pattern further to give us  by taking a sum of blocks of size  plus a small number of larger blocks – although there may be a simple reason why that can’t work.

September 5, 2010 at 2:55 pm |

At some point I also thought of writing up a post going back to some earlier avenues and questions. The Decomposition approach and related SDP and LP is very nice and it has several features that make it the most promising, but sometime down the road it will be useful to think about or write down some older approaches and problems. In any case, keeping this project open for several additional months seems preferable to me.

September 5, 2010 at 3:26 pm

Any efforts in that direction would be greatly appreciated. For instance, one thing that I would be sorry not to return to is the behaviour of multiplicative functions produced by various greedy and not so greedy algorithms. There seemed to be some very interesting phenomena there that we failed to understand even heuristically.

I haven’t pinned this down 100% and have therefore not said too much about it, but on a couple of occasions I have had thoughts related to decompositions that seem to lead either to the very nice observation that Terry made concerning reducing the problem to one about multiplicative functions or to something very like it. So it may be that there will be some kind of convergence of the different approaches. Another idea that it would be good to resurrect is the one that I know interests you, and interests me too: trying to show that EDP is true for wide classes of sequences, such as sequences that do not correlate with character-like functions. A partial result like that, especially if it could be proved with a better bound than can be expected for EDP in general, could be very enlightening.

September 5, 2010 at 11:24 pm |

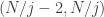

Just to summarize what Alec obtains in this comment and the build-up to it, we now have a method that’s in retrospect fairly simple for getting a decomposition that will get us down to  The idea is this. You choose  that is divisible by all of  You then take the difference between the square ![[N-k,N]^2](https://s0.wp.com/latex.php?latex=%5BN-k%2CN%5D%5E2&bg=ffffff&fg=333333&s=0&c=20201002) and the square ![[N-k,N-1]^2.](https://s0.wp.com/latex.php?latex=%5BN-k%2CN-1%5D%5E2.&bg=ffffff&fg=333333&s=0&c=20201002) This gives you all points of the form  or  with  We now want to cancel out all of those except the point  To cancel out the points  and  you use the square ![[N/j-1,N/j]^2.](https://s0.wp.com/latex.php?latex=%5BN%2Fj-1%2CN%2Fj%5D%5E2.&bg=ffffff&fg=333333&s=0&c=20201002) The reason that works is that the line through the point  also goes through the point  and it goes through none of the other points that we have left. And similarly for the reflected line on the other side of the diagonal. So we end up doing all the calculations we wanted to do.
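The construction just summarized can be checked mechanically. The following is a minimal sketch under stated assumptions, not the program used earlier in the thread: $N$ is taken to be the least common multiple of $1,\dots,k$, each little square $[N/j-1,N/j]^2$ is given coefficient $-1$ for $j=1,\dots,k$, and lattice points are grouped by the line through the origin they lie on using exact fractions. The names `line_sums` and `fly_trap` are hypothetical.

```python
from collections import defaultdict
from fractions import Fraction
from functools import reduce
from math import gcd

def line_sums(squares):
    """squares: list of (coeff, r, s), meaning coeff times the indicator of [r,s]^2.

    Returns a dict mapping the gradient a/b of each line through the origin
    to the sum of the coefficients over the lattice points (a, b) on it.
    """
    sums = defaultdict(int)
    for coeff, r, s in squares:
        for a in range(r, s + 1):
            for b in range(r, s + 1):
                sums[Fraction(a, b)] += coeff
    return sums

def fly_trap(k):
    """Build [N-k,N]^2 - [N-k,N-1]^2 - sum_j [N/j-1,N/j]^2 (needs k >= 3)."""
    # Assumption: N = lcm(1, ..., k), the smallest N divisible by 1, ..., k.
    n = reduce(lambda x, y: x * y // gcd(x, y), range(1, k + 1), 1)
    squares = [(1, n - k, n), (-1, n - k, n - 1)]
    squares += [(-1, n // j - 1, n // j) for j in range(1, k + 1)]
    return n, squares

n, squares = fly_trap(6)
sums = line_sums(squares)
off_diagonal_ok = all(v == 0 for q, v in sums.items() if q != 1)
print(n, off_diagonal_ok, sums[Fraction(1)], sum(abs(c) for c, _, _ in squares))
```

For $k=6$ this gives $N=60$, every off-diagonal line sums to zero, the diagonal sum is $1-2k=-11$, and the coefficients have total absolute value $k+2=8$. If the constant is read as the ratio of those two numbers (an assumption), it is $(k+2)/(2k-1)$, which approaches $1/2$ as $k$ grows.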

As a little experiment, let’s think what would happen if we took the square ![[N/j-2,N]^2](https://s0.wp.com/latex.php?latex=%5BN%2Fj-2%2CN%5D%5E2&bg=ffffff&fg=333333&s=0&c=20201002) instead. Now we have a few more lines going through points in the square. The points above the main diagonal are  and  Multiplying all these through by  gives us the points  and

Ah, that itself isn’t ideal, but what if  is even and we take the square ![[N/2-k/2,N/2]^2](https://s0.wp.com/latex.php?latex=%5BN%2F2-k%2F2%2CN%2F2%5D%5E2&bg=ffffff&fg=333333&s=0&c=20201002) and subtract the square ![[N/2-k/2,N/2-1]^2](https://s0.wp.com/latex.php?latex=%5BN%2F2-k%2F2%2CN%2F2-1%5D%5E2&bg=ffffff&fg=333333&s=0&c=20201002) ? We can use that to knock out all points of the form  with  even, I think. If we get rid of all the others in a trivial way, does that improve  ? And if it does, can we continue along similar lines and get  smaller still? I have to stop for the day, so I can’t think about this just yet.

September 6, 2010 at 8:16 am

Sorry, that obviously won’t improve on 1/2 because the bulk of the work is still done by intervals of length 2.

September 6, 2010 at 4:54 pm |

I’d like to mention an idea that I think is unlikely to work, but it feels like the next obvious thing to try after Alec’s construction. His construction works by taking what I like to think of as a sort of rotated-L-shaped “fly trap” ![[N-k,N]^2-[N-k,N-1]^2](https://s0.wp.com/latex.php?latex=%5BN-k%2CN%5D%5E2-%5BN-k%2CN-1%5D%5E2&bg=ffffff&fg=333333&s=0&c=20201002) and making sure that exactly one fly gets caught in each place. A “fly” is a point in one of the other squares that flies out along a straight line that starts at the origin.

The “trivial” method of achieving this is to have exactly one fly (or rather, symmetric-about-diagonal pair of flies) per little square, and to make  so big and smooth that we can find such a thing for every point of the form  or  But that will never get us  better than  because we have so many squares of width 2. So we want a construction where most of the squares are significantly bigger.

What I’m wondering is whether we can keep the fly trap idea, but do it much more economically by trying to hit almost all points in the trap rather than all of them. The basic idea would be that we could take squares of unbounded size and use those to create enough flies to hit a proportion  of the points in the trap, and then deal with the remaining points in a completely trivial way, knocking them out one by one. The trivial part would be uneconomical, but since the proportion of points belonging to the trivial part would be small, this wouldn’t matter.

To give a slightly more precise idea of how a fly trap might catch the flies from a larger square, let us consider a square ![[r,s]^2](https://s0.wp.com/latex.php?latex=%5Br%2Cs%5D%5E2&bg=ffffff&fg=333333&s=0&c=20201002) where  is of unbounded size (but we can think of it as a very large constant) and much smaller than

Now let’s suppose that  is divisible by every number from  to  Then every point ![(a,b)\in[r,s]^2](https://s0.wp.com/latex.php?latex=%28a%2Cb%29%5Cin%5Br%2Cs%5D%5E2&bg=ffffff&fg=333333&s=0&c=20201002) with  has some multiple of the form  with  and every point ![(a,b)\in[r,s]^2](https://s0.wp.com/latex.php?latex=%28a%2Cb%29%5Cin%5Br%2Cs%5D%5E2&bg=ffffff&fg=333333&s=0&c=20201002) with  has some multiple of the form  with  These points belong to a “fly trap”, but the difference now is that the width of the fly trap is much bigger (as a function of  ) than it was in Alec’s construction: in order for  to be divisible by every number between  and  it must be very much bigger than  or  ; but the width of the trap is at least  or thereabouts.

What this demonstrates is merely that it is possible for all the points in a rectangle of unbounded size to get caught in a fly trap. What it does not show is that we can use up more than a handful of points in the fly trap this way. My guess is that we can’t — that’s what I plan to think about next.

(General remark: I’m temporarily back in normal Polymath mode, since there is some interaction going on.)

September 7, 2010 at 6:59 am |

I agree that finding a decomposition with  would be very interesting. Getting  blocks to cancel seems to be much harder than with  blocks!

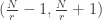

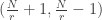

Here’s just a tiny thought in that direction. Closely related to the decomposition

is the decomposition

If we add these two decompositions together, the pairs of terms ![[\frac{N}{r} - 1, \frac{N}{r}]^2 + [\frac{N}{r}, \frac{N}{r} + 1]^2](https://s0.wp.com/latex.php?latex=%5B%5Cfrac%7BN%7D%7Br%7D+-+1%2C+%5Cfrac%7BN%7D%7Br%7D%5D%5E2+%2B+%5B%5Cfrac%7BN%7D%7Br%7D%2C+%5Cfrac%7BN%7D%7Br%7D+%2B+1%5D%5E2&bg=ffffff&fg=333333&s=0&c=20201002) fill all but two of the points of a  square, ![[\frac{N}{r} - 1, \frac{N}{r} + 1]^2](https://s0.wp.com/latex.php?latex=%5B%5Cfrac%7BN%7D%7Br%7D+-+1%2C+%5Cfrac%7BN%7D%7Br%7D+%2B+1%5D%5E2&bg=ffffff&fg=333333&s=0&c=20201002) , with the middle one counted twice.

What if we try to form a decomposition with the sum

We’re left with the terms  and  . But I can’t see how to construct a fly-trap to cancel these.

September 7, 2010 at 10:52 am |

One thought I had about fly traps is that we should expect them to be difficult to construct. To see what I mean by this, let’s imagine that we tried to create a fly trap out of a square ![[r,s]^2.](https://s0.wp.com/latex.php?latex=%5Br%2Cs%5D%5E2.&bg=ffffff&fg=333333&s=0&c=20201002) If we just choose a fairly random pair of integers  and  (perhaps with  not much bigger than  ) then we can expect ![[r,s]^2](https://s0.wp.com/latex.php?latex=%5Br%2Cs%5D%5E2&bg=ffffff&fg=333333&s=0&c=20201002) to contain many pairs  of coprime integers, since there is a positive probability that two random integers are coprime. OK, they’re not quite random here because they are fairly close to each other, but I don’t think that makes a substantial difference. Indeed, if  then they are guaranteed to be coprime, if  and they are both odd then they are guaranteed to be coprime, and in general if  and  is coprime to  then they are guaranteed to be coprime. So it seems to me that if  isn’t tiny then we get a rich supply of coprime pairs in the square ![[s-r]^2.](https://s0.wp.com/latex.php?latex=%5Bs-r%5D%5E2.&bg=ffffff&fg=333333&s=0&c=20201002)

The problem with coprime pairs is that there is nothing for them to trap, since each one is the first integer point along the line from the origin.
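A quick numerical illustration of how plentiful coprime pairs are in a square well away from the origin. This is only a sketch: the function name `coprime_fraction` and the sample square $[1000,1100]^2$ are arbitrary choices, and the comparison value $6/\pi^2$ is the classical density of coprime pairs among all pairs of positive integers.

```python
from math import gcd, pi

def coprime_fraction(r, s):
    """Fraction of lattice points (a, b) in [r,s]^2 with gcd(a, b) == 1."""
    side = s - r + 1
    coprime = sum(
        1
        for a in range(r, s + 1)
        for b in range(r, s + 1)
        if gcd(a, b) == 1
    )
    return coprime / (side * side)

# Compare a moderately sized square with the density for random pairs.
print(coprime_fraction(1000, 1100), 6 / pi ** 2)
```

Every coprime point counted here is the first lattice point on its line from the origin, so none of them can serve as part of a trap for a smaller square.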

So how might we create a nice big and fairly simple rectilinear region that does not contain lots of coprime pairs? So far we have used the following simple technique: find a very smooth $N$ and simply take an inverted L shape with its corner at $(N,N)$. Each point in that shape will have a coordinate equal to $N$, and since $N$ has lots of small factors, it is quite hard to be coprime to $N$.
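To see the effect of smoothness numerically, the following sketch counts coprime points on an inverted L with a smooth corner and compares it with a prime corner. The orientation of the L (two arms of `m` points running down and to the left from the corner) and the names `inverted_l` and `coprime_fraction_on_l` are assumptions made purely for illustration.

```python
from math import gcd


def inverted_l(n: int, m: int) -> list[tuple[int, int]]:
    """Corner (n, n) plus two arms of m points each (assumed orientation)."""
    horizontal = [(n - j, n) for j in range(1, m + 1)]
    vertical = [(n, n - j) for j in range(1, m + 1)]
    return [(n, n)] + horizontal + vertical


def coprime_fraction_on_l(n: int, m: int) -> float:
    """Fraction of points on the inverted L whose two coordinates are coprime."""
    points = inverted_l(n, m)
    return sum(1 for a, b in points if gcd(a, b) == 1) / len(points)


if __name__ == "__main__":
    smooth = 2 * 3 * 5 * 7 * 11 * 13  # 30030, divisible by the first six primes
    prime = 10007                     # a prime corner, for contrast
    print("smooth corner:", round(coprime_fraction_on_l(smooth, 1000), 3))
    print("prime corner: ", round(coprime_fraction_on_l(prime, 1000), 3))
```

Every point on the L has a coordinate equal to the corner value, so the coprime fraction is governed entirely by how many small primes divide that value.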

Now suppose we wanted to thicken up that inverted L shape a bit. What we would ideally like is an interval $[N,N+r]$ such that most of the points in it are smooth enough to have common factors with almost all positive integers. But that gets us into worrying ABC territory: we can expect it to be difficult to cram lots of highly smooth integers into a small interval. (I’ve now looked up the ABC conjecture and I’m not sure after all that it is relevant. But I still don’t see how to get an interval that’s full of integers each with lots of distinct small prime factors, which is what would be needed for this to work.)

A thickened inverted L can be thought of as a whole lot of inverted Ls all pushed together. It has the advantage that it can be represented very efficiently in terms of rectangles, but it’s not clear that we need such extreme efficiency, so perhaps a better idea is not to insist that they are all pushed together. That avoids the number-theoretic problems just discussed. So the plan would be something like this. First choose some largish positive integer $k$. Next, find a number of large integers $N_1,\dots,N_m$, all around the same size, and all of them products of some of the first $k$ primes (not necessarily avoiding multiplicity). Since the sum of the reciprocals of the primes diverges, we can choose $k$ large enough that $\prod_{i\leq k}(1-1/p_i)$ is very small, and if we do that then even if $A$ is a random subset of $\{1,\dots,k\}$ then $\prod_{i\in A}(1-1/p_i)$ will be small. So in that way we have a rich supply of integers that are of size somewhere around […] and that are coprime to only a very small proportion of other integers.
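The quantity that matters here is the density of integers coprime to a product of primes, which is the product of $(1-1/p)$ over the primes involved. A rough sketch of how small this gets, both for the first $k$ primes and for a random subset of them, is below. The helper names, the values of $k$, and the choice to include each prime independently with probability $1/2$ are all assumptions for illustration only.

```python
import random
from math import prod


def first_primes(k: int) -> list[int]:
    """Return the first k primes by trial division against earlier primes."""
    primes: list[int] = []
    n = 2
    while len(primes) < k:
        if all(n % p for p in primes if p * p <= n):
            primes.append(n)
        n += 1
    return primes


def coprime_density(primes: list[int]) -> float:
    """Density of integers divisible by none of the given distinct primes."""
    return prod(1 - 1 / p for p in primes)


def random_subset(primes: list[int], rng: random.Random) -> list[int]:
    """Keep each prime independently with probability 1/2 (an assumed model)."""
    return [p for p in primes if rng.random() < 0.5]


if __name__ == "__main__":
    rng = random.Random(0)
    for k in (10, 100, 1000):
        ps = first_primes(k)
        print(k, coprime_density(ps), coprime_density(random_subset(ps, rng)))
```

The product does tend to zero, but only slowly, so $k$ may need to be quite large before "very small" is a fair description.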

We could use these to create a sort of reception committee of fly traps. (The phrase “reception committee” is chosen for the benefit of Cambridge backgammon fans.)

What I would be hoping for here is something like this. Suppose we now take a smallish square $[u,v]^2$. We would like it to cancel out some of the points in all those fly traps. Let’s consider a typical point $(a,b)\in[u,v]^2$ and let us suppose that […] and […] are of roughly the same size. Then as we take multiples of $(a,b)$ we will expect them to hit any given fly trap with probability roughly […]. So we might hope that if we have about […] fly traps, then we will typically expect to hit one and only one of them. But even better might be to have […] fly traps for some large constant $C$, so that concentration of measure could kick in and tell us that with high probability we will hit about $C$ of the fly traps. What I’m hoping for here is that we could use probabilistic methods: we choose a bunch of random small squares $[u,v]^2$ and argue that the points in the fly traps mostly get hit about the same number of times. (I am very far from sure that this is correct, since it ought to depend on “how non-coprime” the coordinates of the points in the fly traps are. But perhaps some Erdős-Kac type phenomenon would tell us that most points were about the same in this respect.)

The general idea here would be to hit most of the points in the fly traps about the same number of times, and then divide through the small squares by that number and subtract. This would leave us with an approximate cancellation, and the remaining error could be mopped up with a trivial decomposition.

This is all a bit of a fantasy at the moment, but I think it’s the kind of thing we need to try.

September 7, 2010 at 12:37 pm |

Here are a few more thoughts about the reception committee of fly traps idea.

I won’t give the full details, but at first I thought I had an argument to show that it couldn’t work, but then I realized that it might just be possible, though I am at the moment ignoring certain aspects of the problem that are probably important. To be precise, I am ignoring the question of whether we can find sufficiently many smooth large integers that are all reasonably close to each other.

The parameters we need to choose are the number of inverted Ls and their lengths. (To be clear, let us define the length of the set of integer points along the lines from […] to […] and […] to be […]. And let’s call that set […].) After a bit of experimenting, I realized that we need really rather a lot of layers to the fly trap. Let me try to explain roughly why. Given a point in […] that is not on the main diagonal, it is within […] of the point […], so there cannot be any positive integer points on the line joining that point to the origin with […] coordinate less than […] (since then it would be within less than 1 of the main diagonal). But that means that the probability of a multiple of a point in one of the small squares hitting a given inverted L can be at most […], which means that for the argument to work we will need at least […] layers.

I won’t say more, but those are the kinds of calculations that underlie the details of the following suggestion. I would like to choose roughly […] inverted Ls of length roughly […] between […] and […]. I would like them all to be of the form […] with each […] having enough small prime factors for almost all positive integers to have common factors with […]. Note that each inverted L can be created with a contribution of 2 to the sum of the absolute values of the coefficients, so we have so far got up to […] for this.

Now for the cancelling part. This we shall create as a whole lot of fairly randomly chosen squares $[r_i,s_i]^2$, where each […] has order of magnitude […] and each […] has order of magnitude […]. That means that each square contains roughly […] points off the diagonal, so in order to do the cancellation, we need about […] of them. (In practice, I would expect to take more like […] of them and divide them all by […], just to smooth things out a bit.) If the probabilistic heuristic works, then each point will … oh dear, I’ve made a mistake.

My mistake is that if we have […] squares of width […] then we’ll be forced to go all the way up to […], which means that for the probabilistic heuristic to work we’ll need […] layers. Let me go back and do a few more calculations.

September 7, 2010 at 1:02 pm |

This deserves a new comment. Let’s suppose that we have […] inverted Ls of width […] between […] and […]. Then the number of points we have to cancel is […]. (I’m stating everything up to an absolute constant.) For the probabilistic heuristic to work, we need the points we use to do the cancelling to have coordinates bounded above by […] (or else the probability that they hit one of the layers of the reception committee is significantly less than 1 and we end up with lots of uncancelled points). But the number of points with coordinates bounded above by […] that are close enough to the diagonal to cancel any of the points in the reception committee is at most […]. So we need […] to be at least […], which implies that we need […] to be at least […]. But that’s a problem because we now have so many points we want to cancel that we are forced to have the little squares centred at points that are higher up than […].