I have no pressing reason for asking the question I’m about to ask, but it is related to an old post about when two proofs are essentially the same, and it suddenly occurred to me while I was bathing my two-year-old son.

Consider the problem of showing that the product of any consecutive positive integers (or indeed any integers, not that that is a significant extension) is divisible by

I think the proof that most experienced mathematicians would give is the slick one that

divided by

is

and so is the number of ways of choosing

objects from

objects. Since the latter must be an integer, so must the former.

One might argue that this is not a complete proof because one must show that really is the number of ways of choosing

objects from

but that is not hard to do.

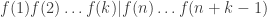

It is not hard to imagine a less experienced mathematician — somebody just starting out at university, say — failing to think of this argument. If so, then they might attack the problem with brute force. To show that divides any product

of

consecutive positive integers, it is (necessary and) sufficient to show that every prime goes at least as many times into

as it does into

And that is actually not all that hard to prove. Let me show it for 3, but the argument is completely general.

A first guess at how many times 3 goes into is the number of multiples of

that are at most

or

However, this is wrong since it forgets the fact that some of those multiples of 3 are also multiples of 9.

Since for each multiple of 9 we “forgot” a factor of 3, we could add on the number of multiples of 9. But then we’re forgetting multiples of 27, and so on. So in fact the number of times that 3 goes into is the number of multiples of 3 that are in the set

plus the number of multiples of 9 plus the number of multiples of 27 plus the number of multiples of 81, and so on.

How many times does go into

? Exactly the same argument works: it is the number of multiples of 3 that belong to the set

plus the number of multiples of 9, etc. etc.

The final step of the argument is to observe that for any positive integer the number of multiples of

that belong to the set

is less than or equal to the number of multiples of

that belong to the set

Why? Well, suppose you want to choose

to minimize the number of multiples of

you get. The obvious thing to do is make

one more than a multiple of

so that it takes as long as possible to hit the first multiple, then as long as possible to hit the second, etc. Since 0 is a multiple of every

we are done.

The question I’m now asking is whether these are two completely different arguments, or whether there is some sophisticated perspective from which it is possible to regard them as sort of the same. The fact that the second one makes heavy use of the fundamental theorem of arithmetic suggests that they really are different, but it could be that what is really happening is that the second argument is taking the first argument and somehow splitting it up into pieces in an unnecessary way.

September 19, 2010 at 12:52 am |

The paper when are two algorithms the same argues that it is impossible to define a meaningful equivalence relation for algorithms. That seems relevant here. Personally I would think that if anything, it is even harder to do so for proofs, but curious to see other reactions.

September 21, 2010 at 10:23 am

Thanks for that very interesting link, and sorry your comment spent so long in the moderation queue.

September 19, 2010 at 1:48 am |

Instead of answering your question (which, at the moment, I cannot do), I would like to provide an interesting reference: In the fourth section of his “Disquisitiones Arithmeticae”, cf. art. 127, Gauss gives the second of the two proofs you describe.

In art. 126, he shows that if two sequences of positive integers are given, such that every prime power divides at least as many terms from the second sequence as is divides from the first, then the product of the first sequence divides the product of the second sequence.

Then comes art. 127, that starts with the lemma that the criterion applies to the sequences and

and  . Then he mentions that as a corollary we get that

. Then he mentions that as a corollary we get that

is an integer, a conclusion to which he refers as a “propositio ex numerorum figuratorum theoria nota, sed a nemine, ni fallimur, hactenus directe demonstrata”. So it seems that Gauss considers these two proofs (the combinatorial one to which he is just alluding, and the arithmetical one that he has actually given) to be in some sense substantially different from each other. But it is not clear whether this says anything about the question that I suppose you have in mind.

is an integer, a conclusion to which he refers as a “propositio ex numerorum figuratorum theoria nota, sed a nemine, ni fallimur, hactenus directe demonstrata”. So it seems that Gauss considers these two proofs (the combinatorial one to which he is just alluding, and the arithmetical one that he has actually given) to be in some sense substantially different from each other. But it is not clear whether this says anything about the question that I suppose you have in mind.

September 19, 2010 at 4:03 am |

Nice question. My initial reaction is that the proofs do appear to be genuinely different, but this could just be because I am not seeing a hidden connection.

Both proofs extend easily enough to show that the multinomial coefficients are integers, so this doesn’t help in separating them.

are integers, so this doesn’t help in separating them.

The second proof feels like it ought to have some extension to other number fields, e.g. Gaussian integers, though I can’t immediately come up with such a generalisation. If so, it would probably earn the right to be called a different proof from the combinatorial one, since it is only the non-negative rational integers that have a cardinality interpretation.

The first proof is a bijective proof; it foliates the space of injections from {1,…,k} to {1,…,n} into leaves that are bijective with the symmetry group of {1,…,k}. The second proof is non-bijective. According to the category theorists, this suggests that the first proof is saying a lot more than the integrality of a certain ratio, but can be enriched (or “categorified”) to say something about a more interesting structure. This is a feature which most proofs don’t seem to have. (A few years ago I asked for a categorification of the Cauchy-Schwarz inequality, and didn’t really get any satisfactory resolution to that question.)

September 19, 2010 at 7:14 am |

In the first proof one exhibits a subgroup of a symmetric group isomorphic to the product of two symmetric groups; then the quotient counts the number of cosets. In the second proof one instead computes the orders of the Sylow -subgroups of the groups in question. So I would say that the first proof is “categorical” where the second proof is “arithmetic.”

-subgroups of the groups in question. So I would say that the first proof is “categorical” where the second proof is “arithmetic.”

September 19, 2010 at 4:39 pm |

The nCr proof seems to be constructive, it exhibits an object of the required size.

Not sure if it helps but here are 2 other proofs.

http://wargle.blogspot.com/2009/12/computing-combinatorials.html

The second of which is definitely the same as the prime counting argument above but uses the formula for highest power of a prime in n! to make it a bit more formal. It basically comes down to the fact that

[n/p] >= [(n-k)/p] + [k/p]

where [] is the integer floor function. The difference between the LHS and the RHS is the highest power of p in the in n!/(k!(n-k!)). Call this H(p, n, k)

If you could find a way to decompose the object constructed in the nCr proof into a product of sets of size p^H(p, n, k) then I think you would have shown they are equivalent.

September 19, 2010 at 10:19 pm |

The proofs also seems different to me. The first comes in 2 variations. One is the combinatorial direct explanation and the other is via the reccurence relation. One extension we can examine is the fact that the Gaussian binomial coefficients are polynomial in q. (and not just rational function of q as directly follow from the definition.) There is a combinatorial proof via counting permutations of a ‘1’s and b ‘2’s via the number of inversions, or a via the reccurence formula. I am not aware of a proof extensing the second proof in the post, i.e. showing that every irreducuble polynomial appears more often in the denumerator compared to the enumerator but maybe its possible to show it.

September 20, 2010 at 12:34 pm

Of course, the fact that the Gaussian coefficients counts the number of k-dimensionsl supspaces of an n dimensional space over a field with q elements also implies that they are described by a polynomial in q and this argument also seems rather different than the other arguments. (It does not show that the coefficients of the polynomial are positive integers.)

September 20, 2010 at 6:43 pm

“I am not aware of a proof extensing the second proof in the post, i.e. showing that every irreducuble polynomial appears more often in the denumerator compared to the enumerator but maybe its possible to show it.”

I believe that’s very straightforward: for each n, q^n-1 is equal to the product of (irreducible) cyclotomic polynomials \Phi_d(q) over all d dividing n, and this is precisely where the second proof enters the game and wins.

I am also slightly puzzled by the remark in your second comment (to which I cannot reply directly): the interpretation of Gaussian binomial coefficients via k-dimensional subspaces in an n-dimensional space does prove that the coefficients are nonnegative, because these coefficients just count cells in the cell decomposition of the Grassmannian…

September 21, 2010 at 1:45 am

Right. (regarding the second proof). So this example is not so useful for the question in the post. It is also correct the coefficients count cell in the Grasmanian which show the nonnegativity. (The mere fact that the Guassian numbers count something and hence are integers for prime power q’s suffice to show that it is a polynomial but not the nonnegativity.)

September 21, 2010 at 1:56 am

Is there some analog for the second proof for polynomials (namely you replace natural numbers by polynomials) where all irreducible polynomials enters the picture? E.g.: Is the product of all polynomials of degrees n-k+1,…,n with coefficients in a field of q elements divisible by the product of all polynomials of degress 1,2,…,k?? or something like this?

September 19, 2010 at 11:09 pm |

[…] This post was mentioned on Twitter by ajlopez and Homö Sapiens, FMFG. FMFG said: Are these the same proof? http://bit.ly/a6sQr4 /cc @feedly […]

September 20, 2010 at 12:48 pm |

Maybe I am totally wrong, but at a first glance it seems to me that the second proof extends to Z/mZ, but I have not checked yet. Perhaps, this would help to separate the proofs.

September 20, 2010 at 5:54 pm

Well, it gets a bit tricky if k and m are not coprime, but basically I think this is the case. Certainly the second proof extends to negative integer values of n. With a bit of care I think it even extends to n being an adelic integer (keeping k fixed).

Incidentally there is a variant of the first proof which proceeds by induction using the Pascal’s triangle formula . But this is very close to the combinatorial proof.

. But this is very close to the combinatorial proof.

September 20, 2010 at 7:22 pm

I used Pascal’s formula to close the gap Tim mentions in his above proof, i.e., as a part of the combinatorial proof. How can one use it to give an alternative proof? Would this qualify as a different proof that is the same when looked at from a sophisticated perspective?

September 20, 2010 at 7:47 pm

If one tries to extend the first proof to integer values of n, one just has to consider two cases. If one of the numbers n, n+1,…, n+k-1 is zero, their product is divisible by k!. If not, there exist k consecutive positive integers whose product is the same if we ignore the sign. Does this not qualify for an extension?

September 20, 2010 at 7:50 pm

I think the two proofs have been expressed as being direct proofs Qiaochu Yuan’s comment above about the first one counting cosets seems to me to illuminate this direct structure.

Given any set of k items we can arrange them in k! different orders. So each ordered choice of k items from n+k-1 items is part of a collection of k! ordered sets corresponding to the same underlying unordered choice. These collections are clearly disjoint. The result follows.

There is another inductive proof, which goes: the result is trivial for k=1, and for n=1 and all k. Assume true up to k-1 for all n, and for k up to n.

Then (n+1)(n+2) … (n+k) – n(n+1)(n+2) … (n+k-1)=k(n+1)(n+2) … (n+k-1)

A – B = kC. By hypothesis k! divides B and (k-1)! divides C, so k! divides A.

Can this proof be done without induction or an equivalent property. For example, can the fact that (for all r) r! is the number of orders of r distinguishable objects be established without induction?

September 21, 2010 at 9:34 am

@Mark Bennett: I do not even know how to define k! for all natural numbers k without the induction axiom.

September 20, 2010 at 6:18 pm |

To get an idea of what you have in mind, it would be helpful for me to have an example of two proofs that are seemingly totally different, but from a sophisticated point of view can be regarded as sort of the same, and an example of “completely” different proofs.

Unfortunately, I could not find such an example myself.

September 23, 2010 at 1:09 am |

If we take Arvind’s paper seriously, and extend it from algorithms to programs, doesn’t the Curry-Howard isomorphsim (programs are proofs and types are propositions) argue the same thing about proofs?

September 23, 2010 at 1:33 am |

I think that paper shows that you cannot usefully define what equivalence means for algorithms beyond “they have the same output on the same inputs”. It does not show that you can’t show that some are inequivalent. It even says

“Thus, when one considers a specific device and asks whether an algorithm’s space requirements are an essential

characteristic of the algorithm, that is, whether one should count two

algorithms as different just because one uses much more space than the

other, then answer is likely to be “yes” once the space requirements

are large enough but “no” if they are small.”

If you have axioms A1, …, to AN and you can show that your statement is undecidable but adding axiom B makes it true (with proof PB) and adding axiom C also makes it true (with proof PC) and axiom B is not equivalent to axiom B, then PB and PC cannot be the same proof.

Is there an example of this? Things that can be proven with and without the axiom of choice seem like good candidates.

September 23, 2010 at 11:18 am

One of the examples in the algorithms paper is a way of showing non-transitivity of any potential notion of “sameness”. The idea is to take a task that can be performed in stages, with two different ways of doing each stage. Suppose the task takes stages. Then one can define

stages. Then one can define  algorithms by picking

algorithms by picking  between 0 and

between 0 and  and using the first method for the first

and using the first method for the first  stages and the second method for the remaining stages. Then one is inclined (for certain examples) to say that any two consecutive algorithms are essentially the same, but that the first and last algorithms are not essentially the same.

stages and the second method for the remaining stages. Then one is inclined (for certain examples) to say that any two consecutive algorithms are essentially the same, but that the first and last algorithms are not essentially the same.

A natural question, therefore, is whether a sorites-type argument can be used to show something similar about proofs. I’m sure it can, but it would be nice to have a good example rather than, say, something artificial that has an algorithm embedded into it.

Maybe one approach would be a proof by induction where there are two very different ways of proving the inductive step. Then one could prove the first steps of the induction in one way and the remaining

steps of the induction in one way and the remaining  steps in the other. Of course, the intermediate proofs would be extremely artificial, but a general notion of sameness of proofs should be able to cope with artificial proofs as well as natural ones.

steps in the other. Of course, the intermediate proofs would be extremely artificial, but a general notion of sameness of proofs should be able to cope with artificial proofs as well as natural ones.

Having said all that, it seems fairly obvious, especially after reading that paper, that the right approach to the question “When are two proofs the same?” is not to struggle to find an equivalence relation, and probably not even to find a metric, but rather to find good ways of refining the question. By that I mean that there are many interesting ways that two proofs can be related to each other, and one should not try to cram all those potential relationships into one single yes/no relationship.

Here are three examples of the kinds of relationships I am talking about.

1. Sometimes two proofs have the same general structure, but at some point you need to construct an object with certain properties, and sometimes there are many genuinely different ways of constructing that object. In such a situation it seems natural to say that the proofs use basically the same idea but that there is some flexibility about that detail. (Of course, if constructing the object in question is the main difficulty of the argument, then one would not want to say that the two proofs are the same.)

2. Sometimes one proof follows the same steps as another, except that it does one of the steps in a more laborious way (perhaps for good reasons such as wanting a more explicit argument).

3. Sometimes one proof is nonconstructive but in a way that can easily be made constructive, and the other proof is the constructive version.

More generally, proofs have internal structure, and there seems to be more hope of talking about when components of two proofs are essentially the same than when two proofs in their entirety are the same. (For instance, thinking about components of proofs immediately deals with the sorites-type examples.)

September 23, 2010 at 9:46 am |

With Terence Tao’s number-field-sugestion in mind, but “projecting” back to the “usual” integers, one can formulate and prove various statements of which the following is an interesting special case: Let ,

,  ,

,  , etc. denote the Fibonacci numbers. Then for all positive integers

, etc. denote the Fibonacci numbers. Then for all positive integers  and

and  , the product

, the product  divides the product

divides the product  .

.

Questions: (1) Does anybody see a purely combinatorial of this (not necessarily involving copulating rabbits)?

(2) Is there any sense in which we can say that the first proof for the integrality of binomial coefficients also shows the above assertion?

September 23, 2010 at 11:01 am

The natural common generalization of the Fibonacci example and the positive integers seems to me to be the following, which has number theory woven into the question itself. Let be a function such that

be a function such that  implies

implies  Then

Then  for every

for every

Let me just check that that’s the right condition. Let be a prime and for each

be a prime and for each  let

let  be minimal such that

be minimal such that  (with the convention that this is infinite if there is no such

(with the convention that this is infinite if there is no such  ). Then

). Then  and the numbers

and the numbers  such that

such that  is a multiple of

is a multiple of  but not of

but not of  are precisely the multiples of

are precisely the multiples of  that are not multiples of

that are not multiples of  If

If  is an interval, then the number of times

is an interval, then the number of times  goes into

goes into  is the sum over all

is the sum over all  of the number of multiples of

of the number of multiples of  that lie in

that lie in  So the second proof carries over straightforwardly.

So the second proof carries over straightforwardly.

It doesn’t seem completely obvious that one couldn’t give a more combinatorial proof, but first one would probably want a more combinatorial characterization of functions with the above property and I don’t at the moment have any ideas about that.

September 23, 2010 at 10:07 pm

First, I would like to thank you for editing my LaTeX time and again, if there should be anything at all at which I do reasonably well, then it’s definitely not using computers. Also, before writing anything at all, I should admit that I’m currently suffering from a really bad cold, and have taken a slight overdose of painkillers, so maybe it’s going to be nonsensical.

Consider the following sequence: ,

,  ,

,  ,

,  ,

,  ,

,  ,

,

for all

for all  . At the moment, I believe that

. At the moment, I believe that  divides

divides  whenever

whenever  divides

divides  , but that

, but that  equals

equals  and is not dividing

and is not dividing  , i.e.

, i.e.  , contrary to expectation.

, contrary to expectation.

September 23, 2010 at 10:56 pm

OK let me try to see where my argument is wrong. Let’s go for the sequence 1,2,4,2,1,4,1, which is the minimal sequence that starts 1,2,4 and has the property that always divides

always divides  I’ll take

I’ll take  Then

Then

and

and  My mistake is the simple one that it just isn’t true that the number of multiples of 2 in an interval of length 3 is minimized when that interval starts at 1.

My mistake is the simple one that it just isn’t true that the number of multiples of 2 in an interval of length 3 is minimized when that interval starts at 1.

I think perhaps what I need is the condition that if and only if

if and only if  but I haven’t checked. Since

but I haven’t checked. Since  this stronger property does at least apply to the Fibonacci sequence.

this stronger property does at least apply to the Fibonacci sequence.

September 23, 2010 at 10:49 pm |

Christian, I think the problem is that although f(n) divides f(m) when n divides m, there is no guarantee that on dividing by f(n), there is enough left of f(m) to abosrb f(x) for all the other factors, x, of m.

Consider f(1) = 1, f(2) = 6, f(3) = 6, f(4) = 6, f(5) = 5, f(6) = 6, f(7) = 7

f(1)f(2)f(3) = 1.6.6

but

f(5)f(6)f(7) =5.6.7

With f(n)=n the numerator gains new factors just fast enough to keep up with the denominator but nothing in your condition forces that.

I think f(a).f(b) divides f(a.b) is the condition you want. It would force f(6) to be a multiple of 36 in the counterexample above.

September 24, 2010 at 9:56 am

Fergal, the condition you give does not work for a sequence which is constant=2.

To get any useful condition, divide out first by the hcf of the sequence.

The sequence of powers of 2 (or r) has the multiplicative property, each member of the sequence divides every subsequent member, and the hcf of members is 1.

September 24, 2010 at 10:17 am

That last comment is a bit thin – a constant sequence has the multiplicative property we’re looking for without satisfying f(a).f(b) divides f(a.b). The powers sequence satisfies the multiplicative property without satisfying any of the conditions I was thinking about in which some members of the sequence are forced to be coprime.

November 11, 2010 at 8:40 am |

The question of “proof identity”–whether two proofs are the same–is a venerable topic in mathematical logic. Hilbert originally listed it as his 24th problem but then omitted it, leaving the familiar 23. An article about that is here: http://www.maa.org/news/Thiele.pdf

A couple of more recent articles about proof identity are below. I don’t understand them but they look interesting anyway. They are from wikipedia.

http://arxiv.org/pdf/cs.LO/0610123

Click to access LFCS07.pdf

November 11, 2010 at 12:48 pm |

My memory slipped and I neglected to check before posting. Hilbert’s 24th problem as described in the MAA article was not proof identity per se, but the related problem of what proof of a theorem is “simplest”. It is discussed also in the other two articles mentioned.

December 4, 2010 at 5:06 pm |

Somehow there is a bit difference between “feeling” that two proofs are the same and finding a formal way to phrase it. The latter is possible sometimes, but could require more work than each of the two proofs in question. Let me describe an example from my own work, which you might find useful.

The Jacobi triple product identity is a classical, one of the commonly used identities. In his survey, Sylvester presented three proofs: a bijective, an involutive (by using an explicit sign-reversing involution), and another bijective which he attributed to Hathaway. In the next 120 years, over a dozen of bijective proofs have been published. In my bijection surveyI argued that that all these proofs are exactly the same up to a change of variables. This includes Sylvester and Hathaway’s proof, which the previous authors confused anyway (sometimes very loudly). Later on, I discovered that the involutive proof is in fact a “projection” of the bijective proof, a claim which I was able to make completely formal here. In other words, we can now prove that Sylvester’s three proofs (and all subsequent combinatorial proofs) are the same.

I should mention that the basic “projection” idea goes back to Andrews (1979), who informally observed that the celebrated Franklin’s involution for Euler’s pentagonal theorem follows from a bijection of a more involved identity, also due to Sylvester. Also, just because two proofs are the same does not mean they are redundant – their different presentations can inspire different generalizations and other ideas.

April 9, 2011 at 11:10 am |

\documentclass{article}

\usepackage{latexsym,amssymb}

\begin{document}

\medskip

For what it’s worth, here is an argument which combine features of those above.

It is, of course, longer than either.

\medskip

\noindent {\bf PROOF 1:} We prove by induction on $k$ that the product of any $k$

consecutive integers is divisible by $k!$. This is true when $k = 1$, so suppose

that $k > 1$ and that the result is established for smaller positive integers.

\medskip

Let $n,n+1,\ldots, n+k-1$ be any $k$ consecutive integers. Choose any $t$

with $1 \leq t \leq k-1$. Then (by induction) the product of the first $t$ of these

is divisible by $t!$, and the product of the next $k-t$ is divisible by $(k-t)!$.

Hence the product of all $k$ of them is divisible by $t!(k-t)!$. Suppose then that

the product is not divisible by $k!$. Then there is a prime $p$ such that the

power of $p$ dividing $k!$ is strictly greater than the power of $p$ dividing

$t!(k-t)!$ for each $t$ with $1 \leq t \leq k-1$. In particular, any such $p$

divides $k$, otherwise taking $t = 1$ gives a contradiction. But now set

$t = p^{a}b$ where $a,b$ are positive integers and $p$ does not divide $b.$

Then the binomial coefficient $\frac{t!}{p^{a}!(t-p^{a})!}$ is not divisible

by $p$, as it’s $$\frac{t(t-1)\ldots (t-(p^{a} -1))}{p^{a}(p^{a}-1) \ldots 2 \times 1},$$

and for $0 \leq i \leq p^{a}-1$ the highest power of $p$ dividing $p^{a}-i$ and $t-i$ is the same.

This last argument is used in one proof of Sylow’s theorem

(by H.Wielandt, and G.A. Miller before). Hence we are done by induction \emph{unless} $k = p^{a}$,

and in that case, we need a different argument to show that the power of $p$ dividing $k!$

also divides our $k$-long product. Suppose then that $k = p^{a}$ for some prime $p$ and

positive integer $a$. Then no two of the integers $n,n+1,\ldots ,n+k-1$ are congruent

(mod k). But there are $k$ of them, so every congruence class (mod k) is represented

once and only once in the list. Since the power of $p$ dividing $m_{i}k+i$ is at least as great

as the power of $p$ dividing $i$ for $1 \leq i \leq k$ and any integer $m_{i}$, we see that the power

of $p$ dividing $k!$ divides the product $n(n+1)\ldots (n+k-1)$ in this case too.

April 10, 2011 at 9:24 am |

consecutive integers is divisible by $k!$. This is true when $k = 1$, so suppose

that $k > 1$ and that the result is established for smaller positive integers.

\medskip

Let $n,n+1,\ldots, n+k-1$ be any $k$ consecutive integers. Choose any $t$

with $1 \leq t \leq k-1$. Then (by induction) the product of the first $t$ of these

is divisible by $t!$, and the product of the next $k-t$ is divisible by $(k-t)!$.

Hence the product of all $k$ of them is divisible by $t!(k-t)!$. Suppose then that

the product is not divisible by $k!$. Then there is a prime $p$ such that the

power of $p$ dividing $k!$ is strictly greater than the power of $p$ dividing

$t!(k-t)!$ for each $t$ with $1 \leq t \leq k-1$. In particular, any such $p$

divides $k$, otherwise taking $t = 1$ gives a contradiction. But now set

$t = p^{a}b$ where $a,b$ are positive integers and $p$ does not divide $b.$

Then the binomial coefficient $\frac{t!}{p^{a}!(t-p^{a})!}$ is not divisible

by $p$, as it’s $$\frac{t(t-1)\ldots (t-(p^{a} -1))}{p^{a}(p^{a}-1) \ldots 2 \times 1},$$

and for $0 \leq i \leq p^{a}-1$ the highest power of $p$ dividing $p^{a}-i$ and $t-i$ is the same.

This last argument is used in one proof of Sylow’s theorem

(by H.Wielandt, and G.A. Miller before). Hence we are done by induction \emph{unless} $k = p^{a}$,

and in that case, we need a different argument to show that the power of $p$ dividing $k!$

also divides our $k$-long product. Suppose then that $k = p^{a}$ for some prime $p$ and

positive integer $a$. Then no two of the integers $n,n+1,\ldots ,n+k-1$ are congruent

(mod k). But there are $k$ of them, so every congruence class (mod k) is represented

once and only once in the list. Since the power of $p$ dividing $m_{i}k+i$ is at least as great

as the power of $p$ dividing $i$ for $1 \leq i \leq k$ and any integer $m_{i}$, we see that the power

of $p$ dividing $k!$ divides the product $n(n+1)\ldots (n+k-1)$ in this case too.

\end{document}

$

April 12, 2011 at 11:02 am |

Sorry for messing up the latex above (twice). It may not be relevant to the overall philosophical question, but as for the original two proofs used to illustrate the question, it seems to me that the line between what is arithmetical and what is combinatorial is difficult to draw. Once one knows about prime factorizations, it is easy to write down an explicit formula

for the power of a given prime p dividing n!, using the integer part function,

dividing n by successively higher powers of p which is more or less what goes on in the second proof originally given (one can also of course do it apparently more arithmetically and in one step by subtracting from n the sum of the digits in the p-adic expansion of n, and dividing the result by p-1). But, as was realised by Chebyshev, once you can do that, the fact that there are other ways to estimate n! (eg, Stirling’s formula) tells quite a lot about the distribution of prime numbers.

April 12, 2011 at 7:28 pm |

It also seems to me that (actually a simpler version of) the second proof generalizes, working with polynomials of the form $latex $x^k -1$ $,

to give something like Gil Kalai might have had in mind. It is necessary

to have a fairly minimal understanding of how to factorise these

into cyclotomic polynomials, but once one assumes that, I think one

can reason as follows to show that $latex $(x-1)(x^2-1) \ldots (x^k-1)$ $

divides $latex $(x^n -1)(x^{n+1}-1) \ldots x^{n+k-1} -1)$ $

as a polynomial for any positive integer n.

For any integer $m$ the cyclotomic polynomial $latex $\phi_{m}(x)$ $

divides $latex $x^{r} – 1$ $ whenever the positive integer m divides the

positive integer r. Essentially, the original second argument then tells us that

for each positive integer b less than or equal to $k$, the cyclotomic polynomial $latex $\phi_{b}(x)$ $ divides the second k-long product

at least as often as often as it does the first. Hence the second

product of polynomials is an integral polynomial times the first

(I don’t think one actually needs unqueness of factorization here,

just the “natural” factorization of the given polynomials as

products of cyclotomic polynomials. Then one can specialize

to $latex $x = 1$ $ to get the integer result ( after taking out

a factor of $latex $(x-1)^{k} $ $).

Whether this says anything about the “sameness” of the proofs is

another question. It seems to suggest maybe that the

second proof is more general, and somehow “stronger”.

April 12, 2011 at 11:03 pm |

Apologies again. I see that my last comment duplicated Vladimir Dotsenko’s

follow up to Gil Kalai.

April 14, 2011 at 1:01 am |

Actually, maybe the apology is not entirely necessary, or at least, it may

be worth making the following remark. If we work with cyclotomic

polynomials, (and we understand enough about them beforehand), then

the proof using cyclotomic polynomials is genuinely simpler. Specializing

to x = 1 gives the first proof, and for the second proof, using the polynomials

(then specializing to x =1) is more straightforward in a way, because

you get the necessary power of the cyclotomic polynomial first time,

there is no need to worry about having missed higher powers, as was

necessary with rational primes. I won’t try to put the latex here.

April 16, 2011 at 5:42 pm |

A related question to the first is: how would you prove that when a and b are positive integers, (ab)! is divisible by b!(a!)^b? Once you have realised that it should be true, it isn’t hard to to prove directly by checking powers of individual primes. But a more structural proof is to note that the symmetric group on ab letters has a “natural” subgroup, a wreath product, S_a wr S_b, which is the semidirect product of a “base group” (itself a direct product of b copies of S_a) with the symmetric group S_b, where the latter group acts as automorphisms of the base group by permuting components in the obvious way.This raises the general question of the point at which a structural insight can lead to proof of a genuinely new result which was

inaccessible (or at least not obvious) beforehand. It seems clear that this

point will not be well-defined.

April 30, 2011 at 10:22 am |

And what about the proof by “differentiating” the rising factorial $k$ times?

As to the original question, I suspect that the two proofs are incomparable (i.e. have different generalizations).

May 16, 2011 at 1:54 am |

[…] https://gowers.wordpress.com/2010/09/18/are-these-the-same-proof/ […]

August 18, 2014 at 10:07 am |

I don’t know whether these two proofs are same, but here is an alternate proof.

n

Σ 1 = n/1!

i1=1

n i1

Σ Σ 1 = n(n+1)/2!

i1=1 i2=1

n i1 i2

Σ Σ Σ 1 = n(n+1)(n+2)/3!

i1=1 i2=1 i3=1

…

n i1 i2 i_k-1

Σ Σ Σ … Σ 1 = n(n+1)(n+2)…(n+k-1)/k!

i1=1 i2=1 i3=1 ik=1

Since the LHS is just summing 1 multiple times, it is clearly an integer. Hence k! divides n(n+1)(n+2)…(n+k-1).

October 25, 2016 at 3:17 am |

[…] Are these the same proof? […]

June 13, 2019 at 11:52 am |

[…] Are these the same proof? […]

March 20, 2022 at 4:51 am |

[…] A brilliant alternative proof that this is an integer is given by Tim Gowers. […]