Here then is the project that I hope it might be possible to carry out by means of a large collaboration in which no single person has to work all that hard (except perhaps when it comes to writing up). Let me begin by repeating a number of qualifications, just so that it is clear what the aim is.

1. It is not the case that the aim of the project is to find a combinatorial proof of the density Hales-Jewett theorem when $k=3$. I would love it if that was the result, but the actual aim is more modest: it is either to prove that a certain approach to that theorem (which I shall soon explain) works, or to give a very convincing argument that that approach cannot work. (I shall have a few remarks later about what such a convincing argument might conceivably look like.)

2. I think that the chances of success even for this more modest aim are substantially less than 100%. So let me be less fussy still. I will regard the experiment as a success if it leads to anything that could count as genuine progress towards an understanding of the problem. However, that success has a quantitative aspect to it: what I am really interested in is progress that results from multiple collaboration. If what actually happens is that several people make intelligent remarks, but without building on each other’s remarks, then what will have been gained will still be worthwhile but it will be less interesting from the point of view of this experiment.

3. I’ve thought of one more ground rule, and also a general guideline that clarifies a couple of the existing rules. The extra rule is that there needs to be some way for the experiment to be declared finished. What I suggest here is that if a consensus emerges that no further progress is likely, I (or perhaps somebody else) will write a summary of the main conclusions that have been reached, and it will then become public property in the way that a conventional mathematics preprint would be. That is, others would be welcome to make use of it in their own publications if they wanted to. Probably the summary would be posted on the arXiv (after people had had a chance to suggest changes) but not conventionally published. The guideline, which is designed to stop people going away and doing a lot of work in private (the whole point is that we should all display our thought processes), is that you should not write anything that requires you to go away and do some calculations on a piece of paper. As I said in the other guidelines, if all goes well there will eventually be a place for detailed working out of ideas, but people should do that only after first establishing that it would be welcomed.

In order to encourage the style I’m hoping for, I shall practise what I preach, in a sense anyway, by giving my initial thoughts on the problem in small chunks. That is, I’ll write them as though they were a lot of comments that could in theory have been made by several different people instead of just one. I’ll number them with letters of the alphabet (so that they don’t get confused with the numbered comments on this post).

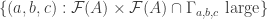

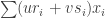

A. Might it be possible to prove the density Hales-Jewett theorem for $k=3$ by imitating the triangle-removal proof that dense subsets of $[n]^2$ contain corners?

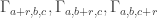

B. If one were going to do that, what would the natural analogue of the tripartite graph associated with a dense subset $A\subset[n]^2$ be? To put that question more precisely, if we’ve got a dense subset $\mathcal{A}$ of $[3]^n$, then how might we define a tripartite graph $G$ in such a way that triangles in $G$ correspond to (possibly degenerate) combinatorial lines?

C. It’s perhaps worth pointing out that there is a natural definition of a degenerate combinatorial line: it’s one where the set of coordinates that vary from 1 to 3 is empty.

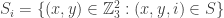

D. If we just look at the vertex sets $X$ and $Y$ in the case of subsets $A\subset[n]^2$, we have a very simple definition for an edge: it’s a pair $(x,y)$ that belongs to $A$.

E. Does $[3]^n$ split up nicely as a Cartesian product?

F. Well, you can write it as $[3]^{n/2}\times[3]^{n/2}$, but that doesn’t help much.

G. Why doesn’t it help?

H. Because if we represent a typical point as $(x,y)$ with $x\in[3]^{n/2}$ and $y\in[3]^{n/2}$, then a Hales-Jewett line is not a triple of the form $(x,y)$, $(x+d,y)$, $(x,y+d)$. That’s partly because we can’t even make sense of adding $d$, but more fundamentally because even if we could invent a useful definition of addition in this context, we still wouldn’t get a Hales-Jewett line.

I. It was clear in advance that that was not going to work, because the splitting up as a Cartesian product was completely non-canonical.

J. By the way, there’s a nice way of deducing the corners result from density Hales-Jewett. Let me quickly explain it, omitting a few details. Suppose we take a set $\mathcal{A}\subset[3]^n$ with the special property that whether or not a sequence $x$ belongs to $\mathcal{A}$ depends only on the numbers of 1s, 2s and 3s in $x$. That is, let $T$ be the set of all triples $(a,b,c)$ of non-negative integers such that $a+b+c=n$, let $B$ be some subset of $T$, and let $\mathcal{A}$ be the set of sequences $x$ such that $(n_1(x),n_2(x),n_3(x))\in B$, where $n_i(x)$ stands for the number of $i$s in $x$. Now suppose you have a combinatorial line $L\subset\mathcal{A}$. If $d$ is the number of variable coordinates in $L$, then $B$ must contain a triple of points of the form $(a+d,b,c)$, $(a,b+d,c)$, $(a,b,c+d)$ (where $a$, $b$ and $c$ are the numbers of 1s, 2s and 3s amongst the fixed coordinates of $L$).

Now $T$ is a large triangular subset of a triangular grid, and one can (if one really feels like it) shear it so that it becomes a right-angled triangle instead of an isosceles triangle. In this way it is not hard to show that the assertion that every dense subset of $T$ contains the vertices of an equilateral triangle of the form $(a+d,b,c)$, $(a,b+d,c)$, $(a,b,c+d)$ is equivalent to the corners result.

Unfortunately it’s not the case that dense subsets of $T$ correspond to dense subsets of $[3]^n$ (because almost every point in $[3]^n$ has similar numbers of 1s, 2s and 3s), but there are dodges for getting round that.
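The reduction in J can be checked on a single line. The Python sketch below writes a line as a template string in which `*` marks the variable coordinates. That notation and the helper names are illustrative choices, not anything from the post. It lists the three points and their counts of 1s, 2s and 3s, which should have the shape $(a+d,b,c)$, $(a,b+d,c)$, $(a,b,c+d)$.

```python
from collections import Counter

def line_points(template):
    """The three points of the combinatorial line described by `template`,
    a string over '1', '2', '3' in which '*' marks the variable coordinates."""
    if "*" not in template:
        raise ValueError("a combinatorial line needs at least one variable coordinate")
    return [template.replace("*", v) for v in "123"]

def type_counts(x):
    """The triple (n_1(x), n_2(x), n_3(x)) of numbers of 1s, 2s and 3s in x."""
    counts = Counter(x)
    return (counts["1"], counts["2"], counts["3"])

template = "12*3*1*2"   # fixed coordinates give a=2, b=2, c=1; there are d=3 wildcards
triples = [type_counts(p) for p in line_points(template)]
print(triples)  # [(5, 2, 1), (2, 5, 1), (2, 2, 4)]
```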

K. That’s very helpful, because we almost certainly want whatever definition we come up with for the graph to give rise to the definition that we used for $[n]^2$ when the subset of $[3]^n$ is of the special kind that depends only on the numbers of coordinates with the three possible values.

L. All right then. That tells us that for subsets of $[3]^n$ that just depend on numbers of different types of coordinates, we want edges of the $XY$ graph to correspond in some sensible way to points in $[3]^n$ that have a particular number of 1s and a particular number of 2s.

M. That suggests that in the general case we want some data about the 1s and 2s in $x$ to determine $x$, just as the number of 1s and 2s determines the numbers of all three types of coordinates. And of course this is trivial: instead of looking at the numbers of the three types, look at the sets where they occur.

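N. Indeed, there is an obvious tripartite graph we can define. Let its vertex sets, which I’ll call $\mathcal{X}$, $\mathcal{Y}$ and $\mathcal{Z}$, all be copies of the power set of $[n]$: i.e., in each vertex set the vertices are subsets of $[n]$. Given two disjoint sets $U\in\mathcal{X}$ and $V\in\mathcal{Y}$, there is a unique point $x\in[3]^n$ that takes the value 1 in $U$, the value 2 in $V$, and the value 3 everywhere else. Join $U$ to $V$ if that point $x$ belongs to the given subset $\mathcal{A}$. And do the obviously corresponding things for the $\mathcal{Y}\mathcal{Z}$ and $\mathcal{X}\mathcal{Z}$ graphs (so $V\in\mathcal{Y}$ and $W\in\mathcal{Z}$ are joined if they are disjoint and the point that is 2 on $V$, 3 on $W$ and 1 elsewhere lies in $\mathcal{A}$, and similarly $U$ and $W$ are joined if they are disjoint and the point that is 1 on $U$, 3 on $W$ and 2 elsewhere lies in $\mathcal{A}$).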

O. Does that work? That is, if we have a triangle in that graph, does it correspond to a combinatorial line?

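P. Yes. Here’s a quick proof. Suppose we have $U\in\mathcal{X}$, $V\in\mathcal{Y}$ and $W\in\mathcal{Z}$, and suppose that they form a triangle in the graph. By the way we defined the edges, the sets $U$, $V$ and $W$ are pairwise disjoint. For $w\in[3]^n$ and $i\in\{1,2,3\}$ let’s write $w^{-1}(i)$ for the set of $j\in[n]$ such that $w_j=i$. Then in the set $\mathcal{A}$ we can find a point $x$ such that $x^{-1}(1)=U$ and $x^{-1}(2)=V$, and also a point $y$ in $\mathcal{A}$ with $y^{-1}(2)=V$ and $y^{-1}(3)=W$, and finally a point $z$ in $\mathcal{A}$ with $z^{-1}(1)=U$ and $z^{-1}(3)=W$. Now let $D=[n]\setminus(U\cup V\cup W)$. Since for every point $w$ we have $w^{-1}(1)\cup w^{-1}(2)\cup w^{-1}(3)=[n]$, we find that $x^{-1}(3)=W\cup D$, $y^{-1}(1)=U\cup D$ and $z^{-1}(2)=V\cup D$. In other words, the three sequences $x$, $y$ and $z$, which all lie in $\mathcal{A}$, all take the value 1 on $U$, 2 on $V$ and 3 on $W$, and $x$ is 3 everywhere on $D$, $y$ is 1 everywhere on $D$ and $z$ is 2 everywhere on $D$. This gives us a combinatorial line except in the degenerate case $D=\emptyset$.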
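The correspondence in P can be tested by brute force for small $n$. In the hypothetical check below, subsets of $[n]$ are encoded as bitmasks, coordinates are indexed from 0, and $\mathcal{A}$ is a random set. Degenerate triangles ($D=\emptyset$) should match the points of $\mathcal{A}$ one for one, and the remaining triangles should match the combinatorial lines inside $\mathcal{A}$. So the triangle count should equal $|\mathcal{A}|$ plus the number of lines in $\mathcal{A}$.

```python
import random
from itertools import product

def point(n, assignments, default):
    """Sequence in [3]^n equal to v on each bitmask S in `assignments`, `default` elsewhere."""
    x = [default] * n
    for S, v in assignments:
        for j in range(n):
            if (S >> j) & 1:
                x[j] = v
    return tuple(x)

def count_triangles(n, A):
    """Triangles (U, V, W) in the tripartite graph of comment N."""
    count = 0
    for U, V, W in product(range(1 << n), repeat=3):
        if U & V or V & W or U & W:
            continue  # every edge joins disjoint sets
        if (point(n, [(U, 1), (V, 2)], 3) in A          # X-Y edge
                and point(n, [(V, 2), (W, 3)], 1) in A  # Y-Z edge
                and point(n, [(U, 1), (W, 3)], 2) in A):  # X-Z edge
            count += 1
    return count

def count_lines(n, A):
    """Combinatorial lines (None marks the nonempty variable set) lying entirely in A."""
    total = 0
    for template in product((1, 2, 3, None), repeat=n):
        if None in template and all(
                tuple(v if t is None else t for t in template) in A for v in (1, 2, 3)):
            total += 1
    return total

random.seed(1)
n = 4
A = {x for x in product((1, 2, 3), repeat=n) if random.random() < 0.6}
print(count_triangles(n, A), len(A) + count_lines(n, A))  # the two numbers agree
```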

Q. Wow. That means we’re done by triangle removal doesn’t it?

R. Unfortunately it doesn’t. The problem is that that tripartite graph is not dense. In fact, it’s not even close to dense. To see why not, just pick a random pair of subsets $U$ and $V$ of $[n]$. They cannot be joined in the $\mathcal{X}\mathcal{Y}$ graph unless they are disjoint, and the probability of that happening is absolutely tiny, namely $(3/4)^n$.

S. That sounds a bit bad, but aren’t there sparse versions of Szemerédi’s regularity lemma?

T. I’m not sure it’s all that likely that there’s a version that applies when the density is exponentially small.

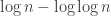

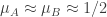

U. That’s a misleading way of putting it when the graph itself is exponentially large. If we let $N=2^n$, so that there are $N$ vertices in each of the three vertex sets, then the density of the graph is going to be of the form $N^{-c}$ for some constant $c>0$ (only a $(3/4)^n=N^{-\log_2(4/3)}$ proportion of pairs are disjoint, so $c$ is about $\log_2(4/3)\approx 0.415$). But that’s still pretty sparse, it has to be said.
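A quick numerical check of R and U follows. Each coordinate can be in $U$, in $V$ or in neither, so there are exactly $3^n$ disjoint ordered pairs. The disjointness graph therefore has density $(3/4)^n=N^{-\log_2(4/3)}$. The graph built from $\mathcal{A}$ is a subgraph of it, so its density can only be smaller. The choice $n=8$ is arbitrary.

```python
from math import log2

def disjoint_pairs(n):
    """Brute-force count of ordered pairs (U, V) of subsets of {0,...,n-1} with U ∩ V = ∅."""
    return sum(1 for U in range(1 << n) for V in range(1 << n) if U & V == 0)

n = 8
N = 2 ** n                        # vertices in each part
density = disjoint_pairs(n) / N ** 2
c = log2(4 / 3)                   # about 0.415
print(disjoint_pairs(n), density, N ** (-c))
```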

V. Here’s another problem. The existing sparse regularity lemmas tend to assume that you’re sitting inside a highly random sparse graph, but are dense relative to that graph. In our case, the natural graph that we’re sitting inside, which I’ll call $\Gamma$, is the one where you join two sets if and only if they are disjoint, ignoring whether they define a sequence that belongs to $\mathcal{A}$. It’s possible that the graph defined above will turn out to be dense relative to this graph. However, $\Gamma$ is not by any stretch of the imagination random, or quasirandom. For example, if you look at the $\mathcal{X}\mathcal{Y}$ part, you can define $\mathcal{X}'$ to be the subset of $\mathcal{X}$ where all sets have size at most $m$, for some $m$ a little below $n/2$, and the same for $\mathcal{Y}'$, and it’s not hard to check that the density of the part of $\Gamma$ from $\mathcal{X}'$ to $\mathcal{Y}'$ is substantially greater. (Basically, conditioning on sets being a bit smaller than average makes them quite a lot more likely to be disjoint.)

W. Yes, but at least $\Gamma$ is very concretely defined. Perhaps one could do some kind of regularity argument relative to $\Gamma$ that exploited the fact that it has a nice simple definition.
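The effect described in V can be computed exactly from binomial coefficients. The sketch below uses uniform weights on sets and compares the density of disjoint pairs among all subsets of $[100]$ with the density among subsets of size at most 40. The cutoff 40 is an arbitrary illustrative choice a little below $n/2$.

```python
from math import comb

def disjoint_density(n, m):
    """Density of disjoint pairs among ordered pairs of subsets of [n] of size <= m,
    computed exactly (uniform weight on sets)."""
    sizes = range(m + 1)
    # comb(n, a) choices of U with |U| = a, then comb(n - a, b) choices of V avoiding U
    disjoint = sum(comb(n, a) * comb(n - a, b) for a in sizes for b in sizes)
    family = sum(comb(n, a) for a in sizes)
    return disjoint / family ** 2

n = 100
print(disjoint_density(n, n))   # (3/4)^100: all subsets
print(disjoint_density(n, 40))  # subsets of size at most 40: much larger
```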

X. Here’s a small and slightly encouraging observation. What does a typical edge look like? Well, there’s a one-to-one correspondence between $\mathcal{X}\mathcal{Y}$ edges and sequences in $\mathcal{A}$. Since a typical sequence in $\mathcal{A}$ has roughly equal numbers of 1s, 2s and 3s, a typical edge in the $\mathcal{X}\mathcal{Y}$ graph consists of two disjoint sets of size about $n/3$.

Y. Unfortunately it’s still very sparse if we somehow weight our sets so that almost all of them have size about $n/3$, since two sets of size $n/3$ have a tiny probability of being disjoint.

Z. Aren’t there tricks we could do to find dense bits of the graph $G$? For example, we know that if $U$ and $V$ are random small sets (of size much smaller than $\sqrt{n}$) then with high probability they are disjoint. Might there be some way of restricting to “the small part of $G$”?

AA. How could one do that when almost all sequences have roughly equal numbers of 1s, 2s and 3s? The dense set $\mathcal{A}$ might consist just of well-balanced sequences.

BB. It’s not as impossible as it sounds, because we can use an averaging trick. Suppose we want to restrict $\mathcal{X}$, $\mathcal{Y}$ and $\mathcal{Z}$ to small sets. What we can do is this. First we randomly choose a very small subset $S$ of $[n]$. Then for every $j\in[n]\setminus S$ we randomly choose an element of $\{1,2,3\}$. Finally, we do everything we want inside $S$. That is, we define a graph by joining two subsets $U$ and $V$ of $S$ if they are disjoint, and define associated sequences by letting $U$ and $V$ determine the values inside $S$ and the random choice of values outside $S$ give us the rest. This gives us a structure of the kind we are talking about, and the average density of points in $\mathcal{A}$ in this structure will be close to the density of $\mathcal{A}$ if $S$ is small enough, because random sequences that are unbalanced inside a random small set $S$ will not be especially unbalanced globally.

CC. That’s an interesting thought, but I think it leads to a problem. Suppose our $\mathcal{X}\mathcal{Y}$ graph consists of pairs $(U,V)$ of disjoint small subsets of $[n]$, and similarly for our $\mathcal{Y}\mathcal{Z}$ graph and our $\mathcal{X}\mathcal{Z}$ graph. Where are the degenerate triangles? A degenerate triangle needs $U\cup V\cup W=[n]$, and you can’t have a sequence with very few 1s, very few 2s and very few 3s all at the same time.

DD. That’s a serious objection, but I still think the averaging trick could be quite useful for this problem.

EE. Here’s a different idea. The big problem is not so much that the disjointness graph $\Gamma$ is sparse as that it is not quasirandom. But perhaps we could make it quasirandom by putting weights on the edges (and perhaps if necessary the vertices as well, since the averaging trick above will enable us to get sets of positive density even with funny vertex weights). The basic idea would be to penalize edges that joined small sets together, because somehow those sets were “cheating” by being small. Is there some natural set of weights that one can just write down?

[The history of this idea is that earlier this afternoon I had got up to Y when I had to go and fetch my daughter from school. While walking there I couldn’t help thinking about the problem and this idea, which I have not explored, occurred to me. So even the effort of writing down my thoughts has been quite stimulating. Because I haven’t yet thought about this idea properly, I’m still in that delicious state of thinking it might be the key to the whole problem. But I’ve also been in this game long enough to know that the chances of that are very small. It’s tempting to investigate it, but I’m not going to as this is also an ideal opportunity to throw out an idea and wait for somebody else to destroy it (or, just perhaps, to develop it) instead.]

FF. A natural way to find such weights would be to start by trying to make every vertex have constant (weighted) degree.

GG. It’s easy to see that you can’t make the disjointness graph $\Gamma$ quasirandom by weighting the edges. For example, suppose you let $\mathcal{X}'$ and $\mathcal{Y}'$ both be the set of all subsets of $[n]$ that contain $1$. These two sets have density $1/2$ and yet there is no edge of $\Gamma$ between them.

[The history of this idea is that although I resolved not to think about the idea put forward in EE., I was on a car journey and I just couldn’t help it. That goes for the next couple of responses to it too, which I could help even less because at that point I was starting to get worried that the whole thing was indeed hopeless.]

HH. That sounds pretty bad.

II. Is it really as bad as all that? I can see that it’s a problem if you have very dense parts of the graph, because then you don’t have a global upper bound for the density of subgraphs when you are trying to prove a regularity lemma. But if you have parts of the graph that are very sparse, or even entirely free of edges, it’s not so obviously a problem because there could still be an upper bound. In other words, perhaps it’s enough for the disjointness graph $\Gamma$ to be “upper quasirandom” rather than quasirandom.

JJ. Another idea is that you just try to do everything relative to $\Gamma$. That is, you define the density of an induced subgraph of $G$ to be the number of edges in that subgraph divided by the number of edges in the corresponding induced subgraph of $\Gamma$. Could that work?

KK. Going back to vertex weights, here are two remarks. First, the averaging trick shows that for more or less any weighting on the vertices, if you can prove the theorem with that weighting (which affects which sets count as dense) then you can prove the theorem with the uniform weighting. I could write a formal lemma to this effect if anybody wanted, but for now I think the informal statement is enough.

The second remark is that a natural weighting on sets is to make the weight of a set $U\subset[n]$ equal to $(1/3)^{|U|}(2/3)^{n-|U|}$. Then the size of a typical set is $n/3$, and the probability distribution on the collection of all subsets of $[n]$ is the same as the probability distribution of the set of places where a random sequence in $[3]^n$ takes any given value.
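The claim in KK can be verified exactly for a small case. The sketch below enumerates $[3]^4$, computes the law of $x^{-1}(1)$ for uniform $x$ using exact fractions, and checks three things: that it agrees with the weight $(1/3)^{|U|}(2/3)^{n-|U|}$, that it sums to 1, and that the mean set size is $n/3$. The choice $n=4$ is only for speed.

```python
from fractions import Fraction
from itertools import product

def kk_weight(size, n):
    """Weight (1/3)^|U| (2/3)^(n-|U|) of a set U of the given size."""
    return Fraction(1, 3) ** size * Fraction(2, 3) ** (n - size)

def ones_distribution(n):
    """Exact law of x^{-1}(1) = {j : x_j = 1} for x uniform in [3]^n."""
    dist = {}
    for x in product((1, 2, 3), repeat=n):
        U = frozenset(j for j in range(n) if x[j] == 1)
        dist[U] = dist.get(U, Fraction(0)) + Fraction(1, 3 ** n)
    return dist

n = 4
dist = ones_distribution(n)
mean_size = sum(len(U) * p for U, p in dist.items())
print(len(dist), mean_size)  # 16 4/3, i.e. all 2^n subsets occur and the mean size is n/3
```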

LL. In response to II, I don’t think it’s possible to make the disjointness graph $\Gamma$ even upper quasirandom by introducing edge weights. The idea to do so was roughly that one should penalize edges that join small sets because those were more likely to be disjoint. But making sets small is not the only way to make them more likely to be disjoint. For example, you could make $\mathcal{X}'$ consist of all sets that are slightly biased towards the first half of $[n]$ (meaning that their intersection with the first $n/2$ integers is slightly bigger than their intersection with the second $n/2$ integers) and $\mathcal{Y}'$ could consist of all sets that are slightly biased towards the second half of $[n]$. Of course, one could legislate against that particular example, but the same trick can be played for any partition of $[n]$ into two equal-sized subsets. It seems to be impossible to legislate against this, since, for trivial reasons, every set will be biased with respect to some partitions.
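The example in LL can be computed exactly for the smallest interesting case. In the sketch below, $\mathcal{X}'$ is the family of subsets of $\{0,1,2,3\}$ with more elements in the first half than in the second, and $\mathcal{Y}'$ is the family with the reverse property, with uniform weights on sets. The disjoint-pair density comes out as $13/25$, against $(3/4)^4=81/256$ for all of $\Gamma$. The sets in $\mathcal{X}'$ here have average size exactly $2=n/2$, so the excess does not come from smallness.

```python
from fractions import Fraction

def popcount(mask):
    return bin(mask).count("1")

def biased_disjoint_density(n):
    """Density of disjoint pairs between X' (sets with more elements in the first half of
    {0,...,n-1} than in the second) and Y' (the reverse), with uniform weights on sets."""
    first = (1 << (n // 2)) - 1          # bitmask of the first half
    second = ((1 << n) - 1) ^ first      # bitmask of the second half
    X = [U for U in range(1 << n) if popcount(U & first) > popcount(U & second)]
    Y = [V for V in range(1 << n) if popcount(V & second) > popcount(V & first)]
    disjoint = sum(1 for U in X for V in Y if U & V == 0)
    return Fraction(disjoint, len(X) * len(Y))

print(biased_disjoint_density(4), Fraction(3, 4) ** 4)  # 13/25 81/256
```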

[The history of that idea was that I was sitting in the car waiting for a bus to arrive that had my daughter in it. Once again I just couldn’t help my mind wandering in a Hales-Jewetty direction.]

How might one completely kill off the above approach?

The tripartite graph that we form out of $\mathcal{A}$ has few triangles in the following sense: if $\mathcal{A}$ contains no combinatorial line, then every edge $(U,V)$ in the $\mathcal{X}\mathcal{Y}$ graph is contained in just one triangle (the other vertex of which is $[n]\setminus(U\cup V)$). If one is to prove the theorem by means of an adaptation of the triangle-removal lemma, one would want the following graph-theoretic statement to be true: let $H$ be any subgraph of the disjointness graph $\Gamma$ such that each edge is contained in at most one triangle. Then it is possible to remove $o(e(\Gamma))$ edges from $H$ to make it triangle-free. A counterexample to this more general statement would almost certainly make the approach hopeless. (I say “almost certainly” because one might just be able to use some extra structural property of the graph derived from $\mathcal{A}$. But that would change the nature of the approach quite a bit.)

Perhaps there could be convincing arguments that described the most general possible iterative approach to proving regularity lemmas and then proved that no iterative approach could give a regularity lemma that had any chance of helping with this problem. The seeming impossibility of making the disjointness graph $\Gamma$ quasirandom would undoubtedly be a part of such an argument.

That’s all I have to suggest at the moment.

February 1, 2009 at 8:47 pm

[…] collaborative mathematical project” over at his blog. The project is entitled “A combinatorial approach to density Hales-Jewett“, and the aim is to see if progress can be made on this problem by many small contributions […]

February 1, 2009 at 8:59 pm

1. A quick question. Furstenberg and Katznelson used the Carlson-Simpson theorem in their proof. Does anyone know that proof well enough to know whether the Carlson-Simpson theorem might play a role here? If so, I could add it to the background-knowledge post. (But I’m sort of hoping it won’t be needed.)

February 1, 2009 at 9:08 pm

2. In this note I will try to argue that we should consider a variant of the original problem first. If the removal technique doesn’t work here, then it won’t work in the more difficult setting. If it works, then we have a nice result! Consider the Cartesian product of an IP_d set. (An IP_d set is generated by d numbers by taking all the 2^d possible sums. So, if the d numbers are independent then the size of the IP_d set is 2^d. In the following statements we will suppose that our IP_d sets have size 2^d.)

Prove that for any c>0 there is a d such that any c-dense subset of the Cartesian product of an IP_d set (it is a two-dimensional point set) has a corner.

The statement is true. One can even prove that a dense subset of a Cartesian product contains a square, by using density HJ for k=4. (I will sketch the simple proof later.) What is promising here is that one can build a not-very-large tripartite graph where we can try to prove a removal lemma. The vertex sets are the vertical, horizontal and slope -1 lines that intersect the Cartesian product. Two vertices are connected by an edge if the corresponding lines meet in a point of our c-dense subset. Every point defines a triangle, and if you can find another, non-degenerate, triangle then we are done. This graph is still sparse, but maybe it is well-structured for a removal lemma.

Finally, let me prove that there is a square if d is large enough compared to c. Every point of the Cartesian product has two coordinates, each a 0,1 sequence of length d. There is a one-to-one map to [4]^d: given a point ((x_1,…,x_d),(y_1,…,y_d)) where each x_i, y_j is 0 or 1, map it to (z_1,…,z_d), where z_i=0 if x_i=y_i=0, z_i=1 if x_i=1 and y_i=0, z_i=2 if x_i=0 and y_i=1, and finally z_i=3 if x_i=y_i=1. Any combinatorial line in [4]^d defines a square in the Cartesian product, so density HJ implies the statement.

(I should learn how to use Latex in blogs…)
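Jozsef's map can be checked on one example. Note that it is simply z_i = x_i + 2y_i. In the sketch below, the generators 3^i and the particular line template are hypothetical choices made for illustration, and [4] is taken as {0,1,2,3} as in the comment. The four points of the line map to the corners of a square whose side is the sum of the generators at the wildcard coordinates.

```python
def z_to_xy(z):
    """Inverse of Jozsef's map: z_i = x_i + 2*y_i, so x_i = z_i % 2 and y_i = z_i // 2."""
    return tuple(zi % 2 for zi in z), tuple(zi // 2 for zi in z)

def ip_value(bits, gens):
    """The element sum_i bits_i * gens_i of the IP set generated by `gens`."""
    return sum(b * g for b, g in zip(bits, gens))

gens = [3 ** i for i in range(5)]   # hypothetical generators, d = 5
template = (1, None, 0, None, 3)    # None marks the wildcard coordinates of a line in [4]^d
points = []
for t in range(4):
    z = tuple(t if c is None else c for c in template)
    x, y = z_to_xy(z)
    points.append((ip_value(x, gens), ip_value(y, gens)))
print(points)  # [(82, 81), (112, 81), (82, 111), (112, 111)], a square of side 3 + 27 = 30
```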

February 1, 2009 at 9:23 pm

3. I find it reassuring the first thing I thought of when I read comment D was what turned out to be comment N.

Would it help to have a graph where each node is a combinatorial line (a set of three sequences), and two nodes are joined by an edge if the lines share a sequence? For example, {(1,2,3,3), (2,2,3,3), (3,2,3,3)} would be joined to {(1,2,3,3), (1,2,3,2), (1,2,3,1)}.

I really do wonder if there’s a more direct approach to the problem: simply working out, for $[3]^n$, the minimal number of points one must remove before no combinatorial lines remain, and then applying induction. Just fiddling with actual examples by hand, a greedy approach works well. I’m sure this is horribly naive given that the previous solution had to use ergodic theory.
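The greedy idea can be sketched as follows. The rule "delete a point on the most surviving lines" is one natural reading of "greedy", and the helper names are illustrative. Greedy only gives an upper bound on the minimal number of points to remove, and hence a lower bound on the largest line-free subset. For n=2 it happens to remove 3 of the 9 points, which is optimal.

```python
from itertools import product

def all_lines(n):
    """Combinatorial lines of [3]^n; None marks the (nonempty) set of variable coordinates."""
    lines = []
    for template in product((1, 2, 3, None), repeat=n):
        if None in template:
            lines.append(tuple(tuple(v if t is None else t for t in template)
                               for v in (1, 2, 3)))
    return lines

def greedy_line_free(n):
    """Repeatedly delete a point lying on the most surviving lines until none survive.
    The result is line-free, so its size is a lower bound for the largest line-free set."""
    remaining = set(product((1, 2, 3), repeat=n))
    alive = all_lines(n)
    while alive:
        hits = {}
        for line in alive:
            for p in line:
                hits[p] = hits.get(p, 0) + 1
        worst = max(hits, key=hits.get)   # ties broken by first occurrence
        remaining.discard(worst)
        alive = [line for line in alive if worst not in line]
    return remaining

for n in range(1, 4):
    print(n, len(greedy_line_free(n)))   # n and the size of the greedy line-free set
```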

February 1, 2009 at 9:26 pm

4. As Gil pointed out in his post on this project, the k=2 case of density Hales-Jewett is Sperner’s theorem,

http://en.wikipedia.org/wiki/Sperner_family

Now, of course, this theorem already has a combinatorial proof, but this proof has a rather different flavour than the triangle removal lemma (or its k=2 counterpart, which is the trivial statement that if a subset of a set V of vertices is small, then it can be removed entirely by removing a small number of vertices.) But perhaps a subproblem would be to find an alternate combinatorial proof of Sperner’s theorem.

As pointed out to me by my student, Le Thai Hoang, another difference between DHJ and, say, the corners problem, is that one does not expect a positive density of all combinatorial lines to lie in the set. For instance, in the k=2 case, identifying $[2]^n$ with the set of subsets of {1,2,…,n}, Hoang observed that the set of subsets of {1,…,n} of cardinality $n/2+O(\sqrt{n})$ has positive density, but only a $(2/3)^{n+o(n)}$ proportion of the $3^n-2^n$ combinatorial lines here actually lie in the set. This seems to fit well with your observations that some weighting of the vertices etc. is needed.
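Hoang's observation can be illustrated numerically. A combinatorial line in $[2]^n$ is a pair $(A, A\cup S)$ with $S$ nonempty and disjoint from $A$, so there are $3^n-2^n$ lines. The sketch below takes sizes in $[n/2-\sqrt{n},\,n/2+\sqrt{n}]$ as a concrete stand-in for "cardinality $n/2+O(\sqrt{n})$". That window is an assumption. The sketch computes the density of this family and the fraction of lines lying inside it.

```python
from math import comb, isqrt

def hoang_counts(n):
    """For subsets of {1,...,n} with size in [n/2 - sqrt(n), n/2 + sqrt(n)]: the family's
    density in [2]^n, the number of combinatorial lines of [2]^n, and the fraction of
    those lines lying entirely in the family."""
    lo, hi = max(0, n // 2 - isqrt(n)), min(n, n // 2 + isqrt(n))
    density = sum(comb(n, k) for k in range(lo, hi + 1)) / 2 ** n
    # A line is (A, A ∪ S) with |A| = a and |S| = s >= 1.
    total = sum(comb(n, a) * comb(n - a, s) for a in range(n + 1) for s in range(1, n - a + 1))
    inside = sum(comb(n, a) * comb(n - a, s)
                 for a in range(lo, hi + 1) for s in range(1, hi - a + 1))
    return density, total, inside / total

for n in (25, 50, 100):
    print(n, hoang_counts(n))   # density stays large while the line fraction shrinks
```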

At the ergodic theory level, there is also a distinction between the ergodic theory proofs of, say, the corners theorem, and of density Hales-Jewett, which may be an indication that something different happens at the combinatorial level too. Namely, in the ergodic proof of corners (or Roth, or Szemeredi, etc.) one has a single dynamical system (with the action of a single group G), and one works with that system (and its factors) throughout the proof. But for DHJ, one has a system of measurable sets indexed by words, and one repeatedly refines the set of words to much smaller subsets in order to obtain good “stationarity” properties. (My student, Tim Austin, could explain this much better than I could.) It is only once one has all this stationarity that the proof can really begin in earnest. This is also consistent with proofs of the colouring Hales-Jewett theorem, which also massively restrict the space of lines being considered, and with the above observation that we do not expect a positive density of the full space of lines to be contained in the set. So perhaps some preliminary “regularisation” of the problem is needed before doing anything triangle-removal-like.

February 1, 2009 at 9:30 pm

5. Incidentally, I only learned in the process of writing up my own post on this problem that Furstenberg-Katznelson have _two_ papers on Density Hales-Jewett: the “big one”,

http://www.ams.org/mathscinet-getitem?mr=1191743

that does the full k case, but also an earlier paper,

http://www.ams.org/mathscinet-getitem?mr=1001397

that just does the k=3 case. I’ve only looked at the former.

An unrelated thing: there is an annoying wordpress latex bug concerning latex that begins with a bracket [, which may cause some difficulty in this context. One can fix it by prefacing any latex string that begins with a bracket with some filler (I use {}).

February 1, 2009 at 10:16 pm

6. Terry’s description of the difference between the `ergodic theoretic’ approaches to the corners theorem and DHJ is well-put: at no point does an action of an easy-to-work-with _group_ of measure-preserving transformations emerge during the DHJ proof. Furstenberg and Katznelson introduce an enormous family of measure-preserving transformations indexed by all finite-length words in an alphabet of size k (and having some algebraic structure in terms of that family of words) in order to encode their notion of stationarity, but they neither use nor prove anything about the group generated by these transformations. Rather, they identify within their collection a great many `IP systems’ of transformations, a rather weaker sort of algebraic structure, and exploit that. In order to reach this point, they first obtain a kind of `stationarity’ for a family of positive-measure sets indexed by these words before introducing the transformations; expressing that stationarity in terms of these transformations then in effect locks that notion of stationarity in place, and also gives a way to transport various multi-correlations between functions around on the underlying probability space.

In this connexion, it’s in obtaining this notion of stationarity that Furstenberg and Katznelson first use the Carlson-Simpson Theorem, and they then use it repeatedly throughout the paper in order to recover some similar kind of symmetry among a collection of objects indexed by the finite-length words. At this point, I can’t see where the same need would arise in the approach to DHJ under discussion here.

February 2, 2009 at 1:25 am

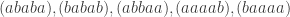

7. With reference to Jozsef’s comment, if we suppose that the $d$ numbers used to generate the set are indeed independent, then it’s natural to label a typical point of the Cartesian product as $(\epsilon,\eta)$, where each of $\epsilon$ and $\eta$ is a $01$-sequence of length $d$. Then a corner is a triple of the form $(\epsilon,\eta)$, $(\epsilon+\delta,\eta)$, $(\epsilon,\eta+\delta)$, where $\delta$ is a nonzero $\{-1,0,1\}$-valued sequence of length $d$ with the property that both $\epsilon+\delta$ and $\eta+\delta$ are $01$-sequences. So the question is whether corners exist in every dense subset of the original Cartesian product.

This is simpler than the density Hales-Jewett problem in at least one respect: it involves $01$-sequences rather than $012$-sequences. But that simplicity may be slightly misleading because we are looking for corners in the Cartesian product. A possible disadvantage is that in this formulation we lose the symmetry of the corners: the horizontal and vertical lines will intersect this set in a different way from how the lines of slope -1 do.

I feel that this is a promising avenue to explore, but I would also like a little more justification of the suggestion that this variant is likely to be simpler.
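The relabelling in comment 7 can be checked by brute force for d=3. The generators $5^i$ are a hypothetical choice. They make the IP set "independent" in the sense needed, because no nontrivial combination with coefficients in $\{-2,\dots,2\}$ vanishes. The sketch counts corners directly in $P\times P$ and also counts triples $(\epsilon,\eta,\delta)$. The two counts should agree. Each coordinate allows 6 choices of $(\epsilon_i,\eta_i,\delta_i)$, of which 4 have $\delta_i=0$, so both counts should equal $6^d-4^d$.

```python
from itertools import product

def corners_brute(d, gens):
    """Count triples (x, y), (x + t, y), (x, y + t) with t != 0 inside P x P,
    where P is the IP set of all subset sums of `gens`."""
    P = {sum(e * g for e, g in zip(eps, gens)) for eps in product((0, 1), repeat=d)}
    count = 0
    for x in P:
        for t in {b - x for b in P}:      # exactly the t with x + t in P
            if t != 0:
                count += sum(1 for y in P if y + t in P)
    return count

def corners_via_delta(d):
    """Count (eps, eta, delta): eps, eta 01-sequences, delta a nonzero {-1,0,1}-sequence
    with eps + delta and eta + delta both 01-sequences."""
    count = 0
    for eps, eta in product(product((0, 1), repeat=d), repeat=2):
        for delta in product((-1, 0, 1), repeat=d):
            if any(delta) and all(e + s in (0, 1) and h + s in (0, 1)
                                  for e, h, s in zip(eps, eta, delta)):
                count += 1
    return count

d = 3
gens = [5 ** i for i in range(d)]  # hypothetical "independent" generators
print(corners_brute(d, gens), corners_via_delta(d), 6 ** d - 4 ** d)  # 152 152 152
```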

February 2, 2009 at 1:31 am

8. The following questions are perhaps somewhat tangential to the main project, but have the advantage of requiring less combinatorial expertise than the main question, and so might possibly take better advantage of this format.

Given any n, let $c_n$ be the size of the largest subset of $[3]^n$ that does not contain a combinatorial line. Thus for instance $c_0=1$, $c_1=2$, $c_2=6$ (for the latter, consider deleting the antidiagonal $\{(1,3),(2,2),(3,1)\}$ from $[3]^2$). The k=3 density Hales-Jewett theorem is the statement that $c_n/3^n\to 0$ as $n\to\infty$.
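The small values of $c_n$ can be confirmed by exhaustive search. The sketch below is only practical for $n\le 2$ as written, since the search over subsets of $[3]^3$ is already very slow. It also checks that deleting the antidiagonal leaves a line-free set.

```python
from itertools import combinations, product

def all_lines(n):
    """Combinatorial lines of [3]^n; None marks the (nonempty) set of variable coordinates."""
    return [tuple(tuple(v if t is None else t for t in template) for v in (1, 2, 3))
            for template in product((1, 2, 3, None), repeat=n) if None in template]

def line_free(S, lines):
    return not any(all(p in S for p in line) for line in lines)

def c(n):
    """Size of the largest line-free subset of [3]^n, by exhaustive search (tiny n only)."""
    points = list(product((1, 2, 3), repeat=n))
    lines = all_lines(n)
    for size in range(len(points), -1, -1):
        if any(line_free(set(S), lines) for S in combinations(points, size)):
            return size

print([c(n) for n in range(3)])  # [1, 2, 6]
antidiagonal = {(1, 3), (2, 2), (3, 1)}
print(line_free(set(product((1, 2, 3), repeat=2)) - antidiagonal, all_lines(2)))  # True
```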

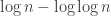

The first question, which seems very amenable to this medium, is to compute $c_3$ exactly, and to get good upper and lower bounds on $c_n$ for the next few values of $n$ (it seems unlikely that these will be easy to compute on the nose). This of course does not say too much about the asymptotic question, but one might at least try one’s luck with the OEIS once one has enough data (note that this would have worked with the k=2 theory).

The other question is to get as good a lower bound as one can for $c_n$. Of course, the trivial example $[2]^n$ gives the lower bound $c_n\ge 2^n$, but one can clearly do better than this. Writing the Behrend example in base 3 gives something like $c_n\ge 3^{n-O(\sqrt{n})}$. But presumably one can do better still.
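The base-3 idea rests on one fact. If a point of $[3]^n$ is read as a base-3 integer with digits $x_j-1$, then every combinatorial line becomes a 3-term arithmetic progression with positive common difference $\sum_{j\text{ variable}}3^j$. Consequently the digit expansions of any progression-free set of integers in $[0,3^n)$, such as a Behrend set, form a line-free set. The sketch below checks the progression fact for every line of $[3]^4$.

```python
from itertools import product

def to_int(x):
    """Read x in [3]^n as a base-3 integer, coordinate j contributing (x_j - 1) * 3**j."""
    return sum((v - 1) * 3 ** j for j, v in enumerate(x))

def check_lines_are_progressions(n):
    """Check that every combinatorial line of [3]^n maps to a 3-term arithmetic progression
    with common difference sum over variable j of 3**j; return the number of lines checked."""
    checked = 0
    for template in product((1, 2, 3, None), repeat=n):
        if None not in template:
            continue
        a, b, c = (to_int(tuple(v if t is None else t for t in template)) for v in (1, 2, 3))
        step = sum(3 ** j for j, t in enumerate(template) if t is None)
        assert b - a == c - b == step > 0
        checked += 1
    return checked

print(check_lines_are_progressions(4))  # 175 lines, i.e. 4**4 - 3**4
```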

February 2, 2009 at 1:36 am

9. Terry’s comment about Le Thai Hoang’s observation seems to me to be an important one, so let me spell out why, even though it’s basically there in what Terry says. If you follow the triangle-removal proof of the corners result, you find that you can prove something stronger: in a set of density $\delta$ you don’t just get one corner, but you get $c(\delta)n^3$ corners. (The proof is that if there are very few corners then there are very few triangles in the associated tripartite graph, and then you can apply triangle removal just as before to get a contradiction.) If one is going to find an analogous proof here, it is essential that it should not try to prove a lemma that has false consequences. In our case, we must be careful that we don’t accidentally try to develop an approach that would also imply that a positive density of all possible combinatorial lines lie in the set.

I haven’t checked, but I think that weighting the vertices as suggested in KK avoids this pitfall, because now the analogous set to the one used by Le Thai Hoang is concentrated around sets of size . Probably this is exactly what Terry meant at the end of his second paragraph.

February 2, 2009 at 1:45 am |

10. In response to Terry and Tim’s comments about regularization and the ergodic-theory proof, my feeling is that we should bear in mind that something like this could well turn out to be necessary (on the grounds that genuine difficulties for one approach often have counterparts in other approaches), but should perhaps not try to guess in advance what form any regularization might take. (In case anyone’s wondering what I mean by “regularization”, a rough description would be that you have a subset $A$ of a structure $X$ and you find a subset $Y$ of $X$ of a similar form, such that $A\cap Y$ is an easier subset of $Y$ to handle than $A$ is of $X$.) Instead, we should press ahead, try to prove some kind of triangle-removal statement, find that it fails for some reason, try to work out a nice property of $A\cap Y$ that would get round the problem, prove that one can regularize to obtain that property, etc. etc. But this process will still involve guesswork, and people who know the ergodic-theoretic proof are likely to be able to make better guesses.

February 2, 2009 at 2:17 am |

11. This of course does not say too much about the asymptotic question, but one might at least try one’s luck with the OEIS once one has enough data (note that this would have worked with the k=2 theory).

Terry, has anyone tried to collect said data for k=3?

February 2, 2009 at 2:27 am |

12. Dear Jason, I don’t know, but I just emailed someone who I think would. My guess, though, is that density Hales-Jewett is not well enough known, compared with cousins such as van der Waerden’s theorem, to have much data already worked out. If so, then presumably we can start collecting data here.

For instance, it is not hard to see that and that  for any n, m, so this already gives the bounds  for  . Presumably one can do better.
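As a starting point for collecting such data, here is a minimal brute-force sketch in Python (the function names are hypothetical, not taken from any existing package) that computes the largest size of a line-free subset of $[3]^n$ by exhaustive search. It encodes each combinatorial line as a template in $\{1,2,3,*\}^n$ with at least one wildcard, and it is only practical for $n\le 2$, since it scans all $2^{3^n}$ subsets.

```python
from itertools import product


def combinatorial_lines(n):
    """Yield each combinatorial line of [3]^n as a tuple of its three points.

    A line is a template in {1, 2, 3, '*'}^n with at least one '*'; its
    points come from substituting 1, 2 and 3 for every '*'.
    """
    for template in product((1, 2, 3, "*"), repeat=n):
        if "*" in template:
            yield tuple(
                tuple(v if c == "*" else c for c in template) for v in (1, 2, 3)
            )


def largest_line_free_size(n):
    """Exhaustively find the largest subset of [3]^n with no combinatorial line."""
    points = list(product((1, 2, 3), repeat=n))
    index = {p: i for i, p in enumerate(points)}
    # Represent each line as a bitmask over the 3^n points.
    line_masks = [
        sum(1 << index[p] for p in line) for line in combinatorial_lines(n)
    ]
    best = 0
    for subset in range(1 << len(points)):
        size = bin(subset).count("1")
        # A subset contains a line exactly when it covers that line's mask.
        if size > best and all(subset & m != m for m in line_masks):
            best = size
    return best


for n in range(3):
    print(n, largest_line_free_size(n))
```

Going to $n=3$ this way would mean scanning $2^{27}$ subsets, so a smarter search would be needed beyond the first couple of values.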

February 2, 2009 at 4:08 am |

13. Perhaps in order to understand the issue coming from Hoang’s observation a bit better, one can pose the following subproblem: what is the natural analogue of Varnavides’ theorem for DHJ? Varnavides used Roth’s theorem as a black box to show that any subset of $[n]$ of density $\delta$ contained $c(\delta)n^2$ 3-term arithmetic progressions when n was large; a similar argument using the Ajtai-Szemeredi theorem as a black box also shows that any subset of $[n]^2$ of density $\delta$ contains $c(\delta)n^3$ corners. So, using DHJ as a black box, what is the natural implication as to how “numerous” combinatorial lines are in a dense set? It seems that direct cardinality of such lines may not be the best measure of being “numerous”, but there should presumably still be some Varnavides-like theorem out there.

February 2, 2009 at 5:26 am |

14. Regarding Terry’s last comment about a Varnavides-like theorem, I’m a bit skeptical. Even for the k=2 case (Sperner’s theorem), if we take the points of the d-dimensional cube where the difference between the numbers of 0s and 1s is at most  , then we have a positive fraction of the elements and there are fewer than  combinatorial lines. Probably this should be the right magnitude for k=2 as a Varnavides-like result and it shouldn’t be difficult to prove. (But I don’t have the proof …)
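The mechanism behind this example can be checked numerically for small d. The Python sketch below (the names and the band width `width` are hypothetical parameters) lists the combinatorial lines of $\{0,1\}^d$ whose points all lie in a band where the numbers of 0s and 1s differ by at most `width`. If a line has $m$ wildcards and its fixed coordinates have (number of 0s) minus (number of 1s) equal to $D$, its two points have differences $D+m$ and $D-m$, so every line inside the band has $m\le$ `width`.

```python
from itertools import product


def band(d, width):
    """Points of {0,1}^d whose numbers of 0s and 1s differ by at most width."""
    return {
        x for x in product((0, 1), repeat=d) if abs(x.count(0) - x.count(1)) <= width
    }


def wildcard_counts_of_lines_inside(points, d):
    """For each combinatorial line of {0,1}^d lying inside `points`,
    record how many wildcards it has."""
    counts = []
    for template in product((0, 1, "*"), repeat=d):
        m = template.count("*")
        if m == 0:
            continue
        line = [tuple(v if c == "*" else c for c in template) for v in (0, 1)]
        if all(p in points for p in line):
            counts.append(m)
    return counts


d, width = 10, 2
inside = wildcard_counts_of_lines_inside(band(d, width), d)
# Density of the band, lines inside it, all lines, and the largest wildcard count.
print(len(band(d, width)) / 2**d, len(inside), 3**d - 2**d, max(inside))
```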

February 2, 2009 at 7:32 am |

15. Regarding lower bounds, fixing the numbers of ‘1’, ‘2’ and ‘3’ coordinates or even just fixing the number of ‘1’ coordinates gives better lower bounds, right? (Just like for Sperner’s theorem.)
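A quick way to see why fixing the number of 1s works: if a line has wildcard set $S$, its points with $*=1$ and $*=2$ have numbers of 1s differing by $|S|\ge 1$, so no line fits inside a single slice. The Python sketch below (hypothetical helper names) checks this by brute force for small $n$ and computes the size of the best such slice, $\max_j\binom{n}{j}2^{n-j}$. Fixing all three counts gives a subset of one of these slices, so it is line-free too.

```python
from itertools import product
from math import comb


def contains_line(points, n):
    """True if some combinatorial line of [3]^n lies entirely inside `points`."""
    for template in product((1, 2, 3, "*"), repeat=n):
        if "*" in template and all(
            tuple(v if c == "*" else c for c in template) in points for v in (1, 2, 3)
        ):
            return True
    return False


def slice_with_ones(n, j):
    """All strings in [3]^n with exactly j coordinates equal to 1."""
    return {x for x in product((1, 2, 3), repeat=n) if x.count(1) == j}


def best_slice_size(n):
    """Largest slice: C(n, j) * 2^(n - j) strings have exactly j ones."""
    return max(comb(n, j) * 2 ** (n - j) for j in range(n + 1))


for n in range(1, 5):
    # Every slice with a fixed number of 1s is line-free.
    assert all(not contains_line(slice_with_ones(n, j), n) for j in range(n + 1))
    print(n, best_slice_size(n), 3**n)
```

Since $\binom{n}{j}2^{n-j}/3^n$ is the probability that a Binomial$(n,1/3)$ variable equals $j$, the best slice has size of order $3^n/\sqrt{n}$.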

February 2, 2009 at 8:00 am |

16. Hmm, Gil, you’re right; so we get a lower bound of order $3^n/\sqrt{n}$ asymptotically.

Dear Jozsef, yes, we will have to normalise things appropriately to get a Varnavides type theorem. For the k=3 problem, for instance, one might try to shoot for a lower bound for the number of combinatorial lines which is something like $(3+o(1))^n$ rather than $4^n$. Alternatively, we could somehow weight each line, perhaps by some factor depending on how many wildcards it contains. (Note that any set of density $\delta$ in a large $[3]^n$ will contain at least one combinatorial line in which the number of wildcards is at most $n_0$, since one can partition $[3]^n$ into copies of $[3]^{n_0}$, where $n_0$ is the first index for which DHJ holds at density $\delta/2$, and observe that the original set will have density at least $\delta/2$ in at least a proportion $\delta/2$ of these copies. So maybe one has to weight things to heavily favour lines with very few wildcards.)
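The averaging step in this parenthetical remark can be written out as follows, under the assumption (made only for this sketch) that $\delta$ is the density of the set and $n_0$ is the first index at which DHJ holds at density $\delta/2$. The $3^{n-n_0}$ copies of $[3]^{n_0}$ obtained by fixing the first $n-n_0$ coordinates partition $[3]^n$ into equal pieces, so $\delta$ is the average of the densities in the copies. If a proportion $p$ of the copies have density at least $\delta/2$, and every density is at most 1, then

$$\delta \;\le\; p\cdot 1 + (1-p)\,\frac{\delta}{2}
\quad\Longrightarrow\quad p\Bigl(1-\frac{\delta}{2}\Bigr)\;\ge\;\frac{\delta}{2}
\quad\Longrightarrow\quad p\;\ge\;\frac{\delta}{2-\delta}\;\ge\;\frac{\delta}{2}.$$

Each such copy contains a combinatorial line whose wildcards lie among its $n_0$ free coordinates, which gives at least $\frac{\delta}{2}3^{n-n_0}$ lines with at most $n_0$ wildcards.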

February 2, 2009 at 8:46 am |

17. It just occurred to me that if a subset of $[3]^n$ has positive density, then by the central limit theorem we may restrict attention to those strings which have $n/3+O(\sqrt{n})$ 1’s, 2’s, and 3’s, and still have a positive density of strings remaining. So without loss of generality we may assume that all strings in the set are “balanced” in this manner. Hence, the only combinatorial lines which really matter are those which have $O(\sqrt{n})$ wildcards in them, rather than about $n/4$. So the number of combinatorial lines that are genuinely “in play” is just $3^{n+O(\sqrt{n}\log n)}$ rather than $4^n$.
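The central-limit step can be checked exactly for moderate $n$. The Python sketch below (the function name and the constant `C` are hypothetical) sums multinomial coefficients to find the proportion of strings in $[3]^n$ whose numbers of 1s, 2s and 3s all lie within $C\sqrt{n}$ of $n/3$; for fixed $C$ this proportion stays bounded away from 0 as $n$ grows.

```python
from math import comb, sqrt


def balanced_fraction(n, C):
    """Proportion of x in [3]^n with each of #1s, #2s, #3s within C*sqrt(n) of n/3."""
    tol = C * sqrt(n)
    total = 0
    for a in range(n + 1):
        for b in range(n + 1 - a):
            c = n - a - b
            if all(abs(t - n / 3) <= tol for t in (a, b, c)):
                # Multinomial coefficient n! / (a! b! c!).
                total += comb(n, a) * comb(n - a, b)
    return total / 3**n


for n in (30, 300, 600):
    print(n, round(balanced_fraction(n, 1.0), 3))
```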

I wonder if DHJ implies the existence of a combinatorial line with wildcards. In the k=2 case it seems that a double counting argument should be able to establish something like this, though I haven’t checked it thoroughly. If so, then there seems to be a good shot at getting the “right” Varnavides type theorem.

February 2, 2009 at 9:01 am |

18. Two more thoughts… firstly, a sufficiently good Varnavides type theorem for DHJ may have a separate application from the one in this project, namely to obtain a “relative” DHJ for dense subsets of a sufficiently pseudorandom subset of $[3]^n$, much as I did with Ben Green for the primes (and which now has a significantly simpler proof by Gowers and by Reingold-Trevisan-Tulsiani-Vadhan). There are other obstacles though to that task (e.g. understanding the analogue of “dual functions” for Hales-Jewett), and so this is probably a bit off-topic.

Another thought is the following. For any a,b,c adding up to n, let $\Gamma_{a,b,c}$ be the subset of $[3]^n$ consisting of those strings with a 1s, b 2s, and c 3s. If A is a dense subset of $[3]^n$, then $A\cap\Gamma_{a,b,c}$ should be a dense subset of $\Gamma_{a,b,c}$ for many a, b, c; call a triple (a,b,c) rich if this is the case. By applying the corners theorem (!) we should then be able to find (a+r,b,c), (a,b+r,c), (a,b,c+r), with $a+b+c+r=n$, which are simultaneously rich. But now this might be a good place to try a triangle removal argument, converting the lines of r wildcards connecting $\Gamma_{a+r,b,c}$, $\Gamma_{a,b+r,c}$ and $\Gamma_{a,b,c+r}$ into triangles as Tim suggests in the main post?

February 2, 2009 at 9:13 am |

19. Terry, I once tried something like what you suggest in the second paragraph of your last comment. There I was trying to do something different: come up with a definition of “quasirandom” that would guarantee that if you had quasirandom subsets of $\Gamma_{a+r,b,c}$, $\Gamma_{a,b+r,c}$ and $\Gamma_{a,b,c+r}$ then you would get a combinatorial line. I failed because I kept thinking of more and more obstructions and it seemed hard to find a definition that would deal with them all. This might be another project worth pursuing.

A problem here, which I feel is loosely related, is that you can’t apply triangle removal if you restrict to $\Gamma_{a+r,b,c}$, $\Gamma_{a,b+r,c}$ and $\Gamma_{a,b,c+r}$ because you don’t get the degenerate triangles (for $r>0$ the three slices are disjoint). This is similar to the problem that I had in comment CC above. (Incidentally, can anyone think of a better way of referring to comments than saying things like, “In Terry’s third last comment, paragraph 2”? Added later: I’ve gone back and numbered the comments.)

February 2, 2009 at 9:17 am |

20. A related observation is that if you’ve got three rich triples in the right configuration, you aren’t guaranteed a combinatorial line. For example, in two of the sets the first coordinate could always be 1 and in the third it could always be 2.
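This example is easy to confirm by brute force. The Python sketch below (helper names hypothetical) takes $n=4$, $r=1$ and $(a,b,c)=(1,1,1)$, so the three slices consist of the strings whose numbers of 1s, 2s and 3s are $(2,1,1)$, $(1,2,1)$ and $(1,1,2)$. It lists the lines whose point with the wildcards set to $v$ lies in the $v$th set, first for the full slices and then after restricting the first coordinate to be 1, 1 and 2 respectively. Each restricted set still occupies a positive proportion of its slice.

```python
from itertools import product


def slice_points(a, b, c):
    """Strings with a 1s, b 2s and c 3s."""
    n = a + b + c
    return {
        x for x in product((1, 2, 3), repeat=n)
        if (x.count(1), x.count(2), x.count(3)) == (a, b, c)
    }


def lines_across(S1, S2, S3, n):
    """Lines whose point with every wildcard set to v lies in S_v (v = 1, 2, 3)."""
    found = []
    for template in product((1, 2, 3, "*"), repeat=n):
        if "*" not in template:
            continue
        x1, x2, x3 = (
            tuple(v if t == "*" else t for t in template) for v in (1, 2, 3)
        )
        if x1 in S1 and x2 in S2 and x3 in S3:
            found.append((x1, x2, x3))
    return found


n = 4
S1, S2, S3 = slice_points(2, 1, 1), slice_points(1, 2, 1), slice_points(1, 1, 2)
print(len(lines_across(S1, S2, S3, n)))

# Restrict: first coordinate 1 in the first two sets and 2 in the third.
T1 = {x for x in S1 if x[0] == 1}
T2 = {x for x in S2 if x[0] == 1}
T3 = {x for x in S3 if x[0] == 2}
print(len(lines_across(T1, T2, T3, n)))
```

If the first coordinate is a wildcard, the third point starts with 3, not 2; if it is fixed, all three points start with the same value, which cannot be both 1 and 2.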

February 2, 2009 at 9:46 am |

21. This is a response to the first paragraph of Terry’s first comment (comment number 4), in which he asks for another proof of Sperner’s theorem. I like this idea: in one sense one could say that the difficulty with density Hales-Jewett is that the proof of Sperner’s theorem doesn’t generalize in an obvious way. (I don’t necessarily mean directly, but one might try to find an argument that has the same relationship to the corners problem as a proof of Sperner’s theorem has to the trivial one-dimensional analogue of the corners theorem, which says that a dense subset of $[n]$ contains a pair of distinct points. But even this doesn’t seem to be at all easy.)

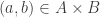

A minor point of disagreement with what Terry says (again in comment 4). I think the correct analogue of triangle removal (in the tripartite case) one level down is the following statement: given two subsets $A\subset[n]$ and $B\subset[n]$ such that the number of pairs $(x,y)$ with $x\in A$ and $y\in B$ is very small, it is possible to remove a small number of elements from $A\cup B$ and end up with no pairs. The proof of course is that one of $A$ and $B$ must be small. The reason I call this the analogue is that I think the analogue of a tripartite graph is a pair of subsets of the vertex sets, and the analogue of a triangle (a triple of edges) is a pair of vertices.

Now let me use this “pair-removal lemma” to prove the “one-dimensional corners” theorem. Suppose, then, that we have a subset $A\subset[n]$ of size at least $\delta n$. I’m going to define two vertex sets $X$ and $Y$ as follows. I let $X$ be the set of all ordered singletons $(x)$ such that $x\in A$, and I let $Y$ be the set of all ordered singletons $(y)$ such that the sum of all the terms equals an element of $A$. (In other words, both $X$ and $Y$ are equal to $A$, but I want to demonstrate that I’m doing exactly what one does in higher dimensions.) If we can find a pair $(x,y)\in X\times Y$, then we are done (that is, we’ve found a configuration of the form $\{x,x+d\}$ with $d=y-x$ in the set $A$), except in the degenerate case that $x=y$. So if we can’t find a one-dimensional corner, then the only pairs in $X\times Y$ are the degenerate ones, which implies that there are only $|A|\le n$ pairs, very few compared with $|X||Y|\ge\delta^2n^2$. But then we can apply the pair-removal lemma and remove a few elements from $X\cup Y$ and end up with no pairs. But the degenerate pairs are vertex-disjoint, so we have to remove at least $|A|\ge\delta n$ elements to get rid of all those, so we have reached a contradiction.

The next question might be whether we can use the “pair-removal lemma” to give a different proof of Sperner’s theorem. Or rather, we don’t actually want to prove the full strength of Sperner’s theorem but just the weaker statement that if you have at least $\delta 2^n$ subsets of $[n]$ then you can find one that’s a proper subset of another. Up to now what I’ve done is silly, but this project would not be silly: it might tell us exactly what the difficulty is with this approach to density Hales-Jewett, but in a much simpler context.

February 2, 2009 at 10:13 am |

22. Continuing the same theme, there is a natural pair of vertex sets to define in the Sperner case. For all higher dimensions, one takes the vertex sets to be copies of the power set of![\null[n]](https://s0.wp.com/latex.php?latex=%5Cnull%5Bn%5D&bg=ffffff&fg=333333&s=0&c=20201002) , so one should surely do so here. Now in a certain sense the

, so one should surely do so here. Now in a certain sense the  th vertex set stands for the set of places where a sequence equals

th vertex set stands for the set of places where a sequence equals  . So here we would let

. So here we would let  and

and  be two copies of the power set of

be two copies of the power set of ![\null [n]](https://s0.wp.com/latex.php?latex=%5Cnull+%5Bn%5D&bg=ffffff&fg=333333&s=0&c=20201002) , with sets in

, with sets in  representing the set of places where a sequence equals 0 and sets in

representing the set of places where a sequence equals 0 and sets in  representing the set of places where a sequence equals 1. Then we take as our subset

representing the set of places where a sequence equals 1. Then we take as our subset  the set of all sets

the set of all sets  such that the sequence that’s 0 on

such that the sequence that’s 0 on  and 1 on the complement of

and 1 on the complement of  belongs to

belongs to  , and we take

, and we take  to be the set of all sets

to be the set of all sets  such that the sequence that’s 1 on

such that the sequence that’s 1 on  and 0 on the complement of

and 0 on the complement of  belongs to

belongs to  . In set theoretic terms,

. In set theoretic terms,  consists of sets that belong to

consists of sets that belong to  (which itself, just to be clear, is our given collection of

(which itself, just to be clear, is our given collection of  subsets of

subsets of ![\null [n]](https://s0.wp.com/latex.php?latex=%5Cnull+%5Bn%5D&bg=ffffff&fg=333333&s=0&c=20201002) ), and

), and  is consists of all complements of sets that belong to

is consists of all complements of sets that belong to  .

.

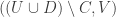

At this point it is clear that what we are looking for is a pair with

with  ,

,  , and

, and  . This will be a degenerate pair if

. This will be a degenerate pair if ![U\cup V=[n]](https://s0.wp.com/latex.php?latex=U%5Ccup+V%3D%5Bn%5D&bg=ffffff&fg=333333&s=0&c=20201002) , but otherwise will imply that

, but otherwise will imply that  contains two sets with one a proper subset of the other. So this is the natural analogue of the triangles discussed in comments N and P.

contains two sets with one a proper subset of the other. So this is the natural analogue of the triangles discussed in comments N and P.

Note that in this situation we seem to be forced to put a graph structure on the two vertex sets and

and  , since we cannot just take any old pair

, since we cannot just take any old pair  but have to make them disjoint. At first this looks bad, but I actually think it’s OK because the same disjointness condition occurs in all dimensions. In other words, you get a graph in higher dimensions and not a hypergraph.

but have to make them disjoint. At first this looks bad, but I actually think it’s OK because the same disjointness condition occurs in all dimensions. In other words, you get a graph in higher dimensions and not a hypergraph.

The next step, I think, would be to try to find a suitable “pair-removal lemma” for this more complicated situation where the pairs have to be disjoint sets. Is the following true (or even the right question)? Suppose you have two collections $\mathcal{U}$ and $\mathcal{V}$ of subsets of $[n]$, and there are very few pairs $(U,V)$ such that $U\in\mathcal{U}$, $V\in\mathcal{V}$, and $U\cap V=\emptyset$. Then you can remove a small fraction of the sets from $\mathcal{U}\cup\mathcal{V}$ and end up with no such pairs.
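The reformulation of Sperner’s theorem in comment 22 can be tried out on small examples. The Python sketch below is only an illustration (the function name is hypothetical): it takes a family of subsets of $\{0,\dots,n-1\}$, forms the family itself and the family of complements (the two vertex-set subsets of comment 22), and searches for a disjoint pair $(U,V)$ with $U\cup V\ne[n]$. Any such pair yields $U\subsetneq[n]\setminus V$ with both sets in the family, while disjoint pairs with $U\cup V=[n]$ just pair a set with itself.

```python
from itertools import combinations


def chain_from_disjoint_pair(family, n):
    """Return (U, W) with U a proper subset of W, both in `family`, found as a
    non-degenerate disjoint pair (U, V) with W the complement of V; else None."""
    ground = frozenset(range(n))
    first = {frozenset(S) for S in family}  # sets where the sequence is 0
    second = {ground - S for S in first}  # complements of sets in the family
    for U in first:
        for V in second:
            # Disjoint pair; it is degenerate exactly when U and V cover the ground set.
            if not (U & V) and (U | V) != ground:
                return U, ground - V
    return None


# All 2-subsets of a 4-set form an antichain, so only degenerate pairs exist.
antichain = [set(c) for c in combinations(range(4), 2)]
print(chain_from_disjoint_pair(antichain, 4))
print(chain_from_disjoint_pair([{0}, {0, 1, 2}, {3}], 4))
```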

February 2, 2009 at 11:02 am |

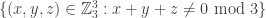

23. This comment is a further response to Jozsef Solymosi’s comment (number 2). A slight variant of the problem you propose is this. Let’s take as our ground set the set of all pairs $(U,V)$ of subsets of $[n]$, and let’s take as our definition of a corner a triple of the form $(U,V)$, $(U\cup D,V)$, $(U,V\cup D)$, where both the unions must be disjoint unions. This is asking for more than you asked for because I insist that the difference $D$ is positive, so to speak. It seems to be a nice combination of Sperner’s theorem and the usual corners result. But perhaps it would be more sensible not to insist on that positivity and instead ask for a triple of the form $(U,V)$, $((U\setminus E)\cup D,V)$, $(U,(V\setminus E)\cup D)$, where $D$ is disjoint from both $U$ and $V$ and $E$ is contained in both $U$ and $V$. That is your original problem I think.

I think I now understand better why your problem could be a good toy problem to look at first. Let’s quickly work out what triangle-removal statement would be needed to solve it. (You’ve already done that, so I just want to reformulate it in set-theoretic language, which I find easier to understand.) We let all of $X$, $Y$ and $Z$ equal the power set of $[n]$. We join $U\in X$ to $V\in Y$ if $(U,V)$ belongs to our dense set of pairs.

Ah, I see now that there’s a problem with what I’m suggesting, which is that in the normal corners problem we say that $(x,y+d)$ and $(x+d,y)$ lie in a line because both points have the same coordinate sum. When should we say that $(U,V\cup D)$ and $(U\cup D,V)$ lie in a line? It looks to me as though we have to treat the sets as $\{0,1\}$-sequences and take the sum again. So it’s not really a set-theoretic reformulation after all.

That suggests a wild question, which might have an easy counterexample (or an easy proof using density Hales-Jewett, which would be useful just to see whether it is true). If you have a dense subset $\mathcal{A}$ of the set of all pairs $(U,V)$ of subsets of $[n]$, can you find a triple  ,  and  in $\mathcal{A}$ such that the three sets  ,  and  are all disjoint?

February 2, 2009 at 12:45 pm |

24. I want to continue the discussion in 13, 14, 16, 17 and 18 concerning the appropriate Varnavides-type theorem for density Hales-Jewett.

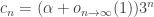

The basic difficulty appears to be that if you know that a point lies in a combinatorial line, then it affects the shape of the point. Let’s see this first in a very simple context, where we just put the uniform distribution on the set of all points and the uniform distribution on the set of all lines. We can represent a combinatorial line as a point in the space $\{1,2,3,*\}^n$ with at least one asterisk, where the asterisks represent the varying coordinates. In this way we see easily that there are $4^n-3^n$ combinatorial lines and that the set of fixed coordinates that take a given one of the values 1, 2 or 3 will typically have size about $n/4$. (For anyone who isn’t familiar with this kind of argument, if you choose a random combinatorial line, regarded as a sequence that consists of 1s, 2s, 3s and asterisks, then the set of places where you choose 1 is a random subset of $[n]$ where each point is chosen with probability $1/4$. With very high probability such a set has size close to $n/4$.) So we see that while a typical point has roughly equal numbers of 1s, 2s and 3s, a typical combinatorial line contains points that have around $n/4$ of two values and around $n/2$ of the third value.
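The counts in this paragraph are easy to reproduce. In the Python sketch below (helper names hypothetical), a line is a template in $\{1,2,3,*\}^n$ with at least one asterisk, and a uniformly random line is obtained by drawing a uniform template and rejecting the $3^n$ templates with no asterisk. Setting the asterisks to 1 then gives a point with roughly $n/2$ 1s and about $n/4$ each of 2s and 3s.

```python
import random
from itertools import product

SYMBOLS = (1, 2, 3, "*")


def count_lines(n):
    """Number of templates in {1,2,3,*}^n with at least one wildcard."""
    return sum(1 for t in product(SYMBOLS, repeat=n) if "*" in t)


def random_line(n, rng):
    """Uniform random line, by rejection sampling from uniform templates."""
    while True:
        t = tuple(rng.choice(SYMBOLS) for _ in range(n))
        if "*" in t:
            return t


def point_profile(template, v):
    """Numbers of 1s, 2s and 3s in the point with every '*' replaced by v."""
    point = [v if s == "*" else s for s in template]
    return tuple(point.count(i) for i in (1, 2, 3))


rng = random.Random(0)
line = random_line(400, rng)
print(count_lines(4), 4**4 - 3**4)
print(point_profile(line, 1))
```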

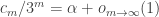

Suppose we try to remedy this by putting a different distribution onto the set of lines. Is there some natural way to do this that encourages the set of variable coordinates to be much smaller than the sets of fixed coordinates? A rather unpleasant approach would be simply to put some upper bound on the size of the set of variable coordinates and otherwise choose uniformly. The most natural thing I can think of to do is not all that much nicer, but at least it smooths the cutoff. It’s to choose the variable set of coordinates by picking each point independently with probability $p$, where $p$ is something like  , and then choose the fixed coordinates randomly to be 1, 2 or 3.

Actually, I can already see that this doesn’t work. The size of the resulting set of variable coordinates will be strongly concentrated around $pn$. But it’s not hard to choose a dense set of sequences such that if you choose any two of them, $x$ and $y$, then the number of 1s in $x$ and the number of 1s in $y$ do not differ by approximately $pn$. The number of combinatorial lines in such a set would not be dense.

The fact that the natural measure on the set of sequences does not project properly to the natural measure on the set of triples $(a,b,c)$ of integers such that $a+b+c=n$ seems to be a fundamental difficulty.

Another thought one might have is to put a different measure on the set of points, so as to encourage points where we get two values roughly $n/4$ times and one value roughly $n/2$ times. But the trouble with this is that it leads to the no-degenerate-triangles problem. To be precise, it would lead to three sets of sequences (according to whether 1, 2 or 3 was the preferred value) and no sequence would belong to all three sets. So it really does seem essential to force the variable set to be small somehow. And all I have to suggest so far is to do something very unnatural like first randomly choosing the cardinality according to some distribution that isn’t too concentrated about any value (the most natural one I can think of is a gentle exponential decay), then choosing a set of that cardinality, and then choosing the fixed coordinates randomly. But I have no reason to suppose that that leads to a Varnavides-type theorem.

February 2, 2009 at 1:44 pm |

25. I see now that there’s quite a substantial overlap between my last comment and Terry’s comment 16. But one can view my last comment as an elaboration of his, perhaps.

Here I just want to make the quick suggestion that one way to work out what the appropriate Varnavides theorem should say is to prove it first. How would this strategy work? Well, we assume the density Hales-Jewett theorem in the following form: for every there exists

there exists  such that every subset of

such that every subset of ![\null [3]^k](https://s0.wp.com/latex.php?latex=%5Cnull+%5B3%5D%5Ek&bg=ffffff&fg=333333&s=0&c=20201002) of size at least

of size at least  contains a combinatorial line. (The

contains a combinatorial line. (The  is convenient in just a moment.)

is convenient in just a moment.)

Now let $A$ be a subset of $[3]^n$ of size at least $\delta 3^n$. Here, $n$ is an integer that is much bigger than $k$. The set $[3]^n$ can be covered uniformly by structures that are isomorphic to $[3]^k$, and a positive proportion (in fact, $\delta/2$ at least) of these structures intersect $A$ in a subset of density at least $\delta/2$. So a positive proportion of these structures contain a combinatorial line. And now a double-counting argument gives us lots of combinatorial lines.
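The averaging behind "a positive proportion (in fact, $\delta/2$ at least)" fits in one line. This sketch assumes the copies $S$ of $[3]^k$ cover $[3]^n$ uniformly, so that the average density of $A$ in a random copy is the density of $A$ in $[3]^n$. The symbol $\theta$, introduced here, is the proportion of copies in which $A$ has density at least $\delta/2$.

```latex
\delta \;\le\; \mathbb{E}_S \frac{|A\cap S|}{3^k}
\;\le\; \theta\cdot 1 + (1-\theta)\cdot\frac{\delta}{2}
\quad\Longrightarrow\quad
\theta \;\ge\; \frac{\delta/2}{1-\delta/2} \;\ge\; \frac{\delta}{2}.
```

For the double count, suppose there are $N$ copies in all and no combinatorial line of $[3]^n$ lies in more than $L$ of them (an assumption about the chosen family). Each of the $\theta N$ good copies contains a line in $A$, so $A$ contains at least $\theta N/L \ge \delta N/(2L)$ distinct combinatorial lines.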

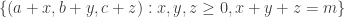

Now there are several choices to make if one wants to pursue this line of attack. The main one is what set of $k$-dimensional substructures of $[3]^n$ to average over. For the benefit of people who haven’t thought too hard about Hales-Jewett, let me give a pretty general class of such structures. You choose $k$ disjoint sets $X_1,\dots,X_k\subseteq[n]$, and you choose fixed values in $[3]$ for all elements of $[n]$ that do not belong to one of the $X_i$. The $k$-dimensional structure consists of the $3^k$ sequences that take the fixed values outside the $X_i$ and are constant on each $X_i$. What can we deduce from the fact that there exists $k$ that depends on $\delta$ only such that if $A$ intersects any $k$-dimensional set of this kind in at least $\delta 3^k/2$ places then that $k$-dimensional set contains a combinatorial line in $A$?
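To make the class of $k$-dimensional structures concrete, here is a small sketch that lists the $3^k$ points of one such structure and checks whether it contains a combinatorial line inside a given set $A$. The function names are hypothetical. The disjoint sets are passed as `blocks` (playing the role of $X_1,\dots,X_k$), and they are required to be non-empty so that the $3^k$ sequences are distinct.

```python
from itertools import product

def subspace_points(n, blocks, fixed):
    """Map each v in [3]^k to the corresponding point of the k-dimensional structure.

    blocks: list of k non-empty, pairwise disjoint sets of coordinates in range(n).
    fixed:  dict assigning a value in {1, 2, 3} to every coordinate outside the blocks.
    """
    owner = {}
    for j, block in enumerate(blocks):
        assert block, "each block must be non-empty"
        for i in block:
            assert i not in owner, "blocks must be disjoint"
            owner[i] = j
    assert set(fixed) == set(range(n)) - set(owner), "fixed values must cover the rest"
    # Constant value v[j] on block j, fixed values everywhere else.
    return {
        v: tuple(v[owner[i]] if i in owner else fixed[i] for i in range(n))
        for v in product((1, 2, 3), repeat=len(blocks))
    }

def has_line_in(A, n, blocks, fixed):
    """True if some combinatorial line of the structure has all three points in A.

    A line of [3]^k with wildcard set W corresponds to a combinatorial line of [3]^n
    whose wildcard set is the union of the blocks indexed by W.
    """
    pts = subspace_points(n, blocks, fixed)
    for template in product((0, 1, 2, 3), repeat=len(blocks)):  # 0 marks a wildcard
        if 0 not in template:
            continue
        line = [tuple(j if t == 0 else t for t in template) for j in (1, 2, 3)]
        if all(pts[v] in A for v in line):
            return True
    return False
```

Averaging `has_line_in` over a chosen family of `(blocks, fixed)` pairs is exactly the kind of averaging argument the question is about; the open issue is which family (or weighting) to use.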

The choice one has is this: over what set of structures of this kind (or with what weighting on the structures) do we do an averaging argument? And what can we expect to get out of it at the end? Is it likely to give anything useful?

One other question: is there a reasonably precise argument to the effect that if the triangle-removal approach worked, then it would imply a stronger Varnavides-type result? And if so, what would that result be? This could be another way to get a handle on the problem.

February 2, 2009 at 3:18 pm

26. Another small thought in response to Terry’s comment number 18, paragraph 2, is this. Even if that idea runs into the difficulty I mentioned in comment 19, there might be a chance of getting something out of a small variant of the idea. Terry suggested using the corners theorem to find a triple $\Gamma_{a+r,b,c}$, $\Gamma_{a,b+r,c}$, $\Gamma_{a,b,c+r}$ in each part of which $A$ is “rich”. The problem was that if one then used just these sets to form a tripartite graph, one would end up with no degenerate triangles. But what if instead one used the full 2-dimensional Szemerédi theorem (which can be proved using the simplex-removal lemma in sufficiently high dimension, so it doesn’t depend on ergodic theory) to obtain a big network of sets $\Gamma_{a,b,c}$ in which $A$ was rich? They could be organized into a triangular lattice: they would consist of all sets of the form $\Gamma_{a+rs,b+rt,c+ru}$ such that $s$, $t$ and $u$ are non-negative integers that sum to $m$ for some reasonably large $m$. It’s not obvious that this would be any help, but it’s also not obvious that it wouldn’t be any help.

February 2, 2009 at 3:33 pm

27. I was rather pleased with the “conjecture” in the final paragraph of comment 22, but have just noticed that it is completely false, at least if you interpret it in the most obvious way. Indeed, if you take both $\mathcal{A}$ and $\mathcal{B}$ to consist of all subsets of $[n]$, then you find that almost every set in $\mathcal{A}$ intersects almost every set in $\mathcal{B}$. And it’s clear that if you just remove a small fraction of the sets from $\mathcal{A}$ and $\mathcal{B}$ you aren’t going to be able to ensure that every set left in $\mathcal{A}$ intersects every set left in $\mathcal{B}$. (Proof: for each pair $(U,[n]\setminus U)$ you will either have to remove $U$ from $\mathcal{A}$ or $[n]\setminus U$ from $\mathcal{B}$.)
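The parenthetical proof is a matching argument. Write $\mathcal{A}$ and $\mathcal{B}$ for the two copies of the power set of $[n]$. The complementary pairs $(U,[n]\setminus U)$ form a perfect matching in the bipartite graph joining disjoint sets, so any removal that destroys every disjoint pair must delete at least $2^n$ of the $2^{n+1}$ sets. The sketch below checks this for small $n$ by computing a maximum matching with Kuhn's augmenting-path algorithm; by König's theorem that number is also the minimum number of removals. The algorithm choice and function name are illustrative, not taken from the discussion.

```python
def min_removals_full_power_sets(n):
    """Size of a maximum matching in the bipartite graph on two copies of P([n])
    (sets as bitmasks), with U joined to V whenever U and V are disjoint.

    By Konig's theorem this equals the minimum number of sets that must be removed
    from the two copies so that every remaining U meets every remaining V.
    """
    size = 1 << n
    match_of_v = [-1] * size          # match_of_v[v] = the U currently matched to V

    def augment(u, seen):
        # Try to match u, re-routing earlier matches along an augmenting path.
        for v in range(size):
            if u & v == 0 and not seen[v]:
                seen[v] = True
                if match_of_v[v] == -1 or augment(match_of_v[v], seen):
                    match_of_v[v] = u
                    return True
        return False

    return sum(augment(u, [False] * size) for u in range(size))

if __name__ == "__main__":
    for n in range(1, 7):
        full = (1 << n) - 1
        # The complementary pairs (U, [n] \ U) already form a perfect matching.
        assert all(u & (full ^ u) == 0 for u in range(1 << n))
        print(n, min_removals_full_power_sets(n), "of", 2 << n, "sets")
```

So exactly half of all the sets have to go, which is far from "a small fraction".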

This leaves me with two questions.

(i) Can this pair-removal approach to Sperner be rescued somehow?

(ii) If not, can one use its failure as the basis of a rigorous proof that no triangle-removal approach can work for density Hales-Jewett?

February 2, 2009 at 3:47 pm

28. Perhaps the answer to (i) is rather simple. First, we see how many disjoint pairs there are in the case where both $\mathcal{A}$ and $\mathcal{B}$ are the full power set of $[n]$. The answer is $3^n$, because for each element of $[n]$ you get to choose whether it belongs to the first set, the second set, or neither. So now we would deem a pair of set systems $(\mathcal{A},\mathcal{B})$ to have “few” disjoint pairs if the number of pairs $(U,V)$ with $U\in\mathcal{A}$, $V\in\mathcal{B}$ and $U\cap V=\emptyset$ is at most $c\,3^n$ for a tiny positive constant $c$. Under that assumption, does it follow that we can remove a small number of sets and end up with no disjoint pairs? And if so, would that prove Sperner’s theorem (in the weak form where we assume that a set system has positive density)?
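The count of $3^n$ disjoint pairs, and the resulting notion of "few", are easy to check by brute force for small $n$. In this sketch sets are encoded as bitmasks, and the threshold constant is passed as a parameter `c`; the function names are illustrative.

```python
def count_disjoint_pairs(family_a, family_b):
    """Number of pairs (U, V) with U in family_a, V in family_b and U, V disjoint.

    Sets are encoded as bitmasks: bit i is set iff element i belongs to the set.
    """
    return sum(1 for u in family_a for v in family_b if u & v == 0)

def has_few_disjoint_pairs(family_a, family_b, n, c):
    """'Few' in the sense above: at most c * 3**n disjoint pairs."""
    return count_disjoint_pairs(family_a, family_b) <= c * 3 ** n

if __name__ == "__main__":
    for n in range(1, 8):
        power_set = range(1 << n)
        # Each element goes to U only, to V only, or to neither: 3^n disjoint pairs.
        assert count_disjoint_pairs(power_set, power_set) == 3 ** n
    n = 6
    containing_first = [s for s in range(1 << n) if s & 1]  # all sets containing element 0
    print(has_few_disjoint_pairs(containing_first, containing_first, n, 0.01))
```

The family of all sets containing a fixed element is an example with no disjoint pairs at all, even though it has density $1/2$.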

February 2, 2009 at 4:16 pm

29. Several remarks that are not directly aimed at the specific project but are related.

1. With all due respect to Sperner’s theorem, it is hard to believe that the  lower bound cannot be improved. Right? Maybe as a side project we should come up with a  example for k=3 density HJ, or ask around whether this is known?

2. A sort of generic attack one can try with Sperner is to look at $f=1_A$ and to express, using the Fourier expansion of $f$, the expression $\sum_{x<y} f(x)f(y)$, where $x<y$ is the partial order (containment) for 0-1 vectors. Then one may hope that if $f$ does not have a large Fourier coefficient, the expression above is similar to what we get when $A$ is random, and that otherwise we can increase the density by passing to a subspace.

(OK, you can try it directly for the k=3 density HJ problem too but Sperner would be easier;)

This is not unrelated to the regularity philosophy.

3. Reductions: the works of Furstenberg and Katznelson went gradually from multidimensional Szemerédi to density theorems for affine subspaces to density HJ. (Maybe there were more stops on the way.)

Besides the obvious reduction (combinatorial lines imply long APs, and also high-dimensional Szemerédi if I remember correctly), reductions in the opposite direction are not known (to me, at least).

It is tempting to think that when we find a high-dimensional affine space, perhaps over a much, much, much larger alphabet, we can somehow get a little combinatorial line for the small alphabet.

4. There is an analogue of Sperner’s theorem with high-dimensional combinatorial spaces instead of “lines”, but I do not remember the details (Kleitman? Katona? Those are the usual suspects.)
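The generic attack in remark 2 can at least be explored numerically for small $n$. This sketch takes the expression to be $\sum_{x<y} f(x)f(y)$ with $f=1_A$ (an assumption about which quantity is meant), computes it by enumerating proper submasks, and computes the Fourier-Walsh coefficients $\hat f(S)=2^{-n}\sum_x f(x)(-1)^{|x\cap S|}$ with the fast Walsh-Hadamard transform. The rough random benchmark uses the fact that $\{0,1\}^n$ has $3^n-2^n$ strictly comparable pairs, so a random $A$ of density $\delta$ gives about $\delta^2(3^n-2^n)$. Function names are hypothetical.

```python
import random

def comparable_pairs(f, n):
    """Sum of f(x) * f(y) over pairs x < y (strict containment) in {0,1}^n.

    Vectors are encoded as bitmasks; f is a list of length 2**n.
    """
    total = 0
    for y in range(1 << n):
        if f[y] == 0:
            continue
        s = y
        while s:                      # visit every proper submask of y, ending with 0
            s = (s - 1) & y
            total += f[s] * f[y]
    return total

def walsh_coefficients(f):
    """hat f(S) = 2**-n * sum_x f(x) * (-1)**|x & S|, via the fast Walsh-Hadamard transform."""
    a = [float(v) for v in f]
    h = 1
    while h < len(a):
        for i in range(0, len(a), 2 * h):
            for j in range(i, i + h):
                a[j], a[j + h] = a[j] + a[j + h], a[j] - a[j + h]
        h *= 2
    return [v / len(a) for v in a]

if __name__ == "__main__":
    n, delta = 10, 0.3
    rng = random.Random(0)
    f = [1 if rng.random() < delta else 0 for _ in range(1 << n)]
    density = sum(f) / (1 << n)
    print("comparable pairs in A:", comparable_pairs(f, n))
    print("random benchmark     :", density ** 2 * (3 ** n - 2 ** n))
    coeffs = walsh_coefficients(f)
    print("largest non-constant |hat f(S)|:", max(abs(c) for c in coeffs[1:]))
```

An antichain such as a middle layer gives zero comparable pairs, so it is a natural test case for the hope that "no large Fourier coefficient" forces random-like behaviour.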

February 2, 2009 at 4:35 pm

30. As a person who writes the phrase “choose the sequence x at random; then form y by rerandomizing each coordinate of x with probability p” in nearly half his papers, I’m naturally drawn to the 16-17-24-etc. comment thread.

In #24, Tim suggests (and I agree) that the most natural way to pick a line is to form three sequences (x,y,z) by choosing

x_i = y_i = z_i, chosen uniformly from [3], with probability 1-p,

(x_i,y_i,z_i) = (1,2,3) with probability p,

independently for each i = 1…n, with p taken to be perhaps 1/sqrt(n). But Tim also makes the good — and worrisome — point that this distribution has the “wrong” marginal on x (and on y and on z). For example, the marginal on x is the (1/3+2p/3, 1/3-p/3, 1/3-p/3) product distribution on [3]^n, whereas it “ought to be” the uniform distribution, because we are measuring the density of A with respect to the uniform distribution.
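Here is a minimal sketch of this line distribution, together with the exact marginal of $x$. It reads the first case as $x_i=y_i=z_i$ uniform in $[3]$, which is what makes $(x,y,z)$ a combinatorial line. The function names are hypothetical, and $p=1/\sqrt n$ is just the suggested choice.

```python
import random
from fractions import Fraction

def sample_line_distribution(n, p, rng):
    """Sample (x, y, z) coordinatewise: with probability p set (x_i, y_i, z_i) = (1, 2, 3);
    otherwise set x_i = y_i = z_i to a uniform value in {1, 2, 3}.

    If no coordinate receives (1, 2, 3), the "line" is degenerate (x = y = z).
    """
    x, y, z = [], [], []
    for _ in range(n):
        if rng.random() < p:
            a, b, c = 1, 2, 3
        else:
            a = b = c = rng.randint(1, 3)
        x.append(a)
        y.append(b)
        z.append(c)
    return tuple(x), tuple(y), tuple(z)

def x_marginal(p):
    """Exact single-coordinate law of x_i: (1/3 + 2p/3, 1/3 - p/3, 1/3 - p/3)."""
    p = Fraction(p)
    base = (1 - p) / 3          # mass each value gets from the x_i = y_i = z_i branch
    return (base + p, base, base)

if __name__ == "__main__":
    n = 10_000
    p = 1 / n ** 0.5
    rng = random.Random(0)
    x, _, _ = sample_line_distribution(n, p, rng)
    print("empirical frequency of 1 in x:", x.count(1) / n)
    print("predicted                    :", float(x_marginal(p)[0]))
```

With $p=1/\sqrt n$ the bias per coordinate is only of order $n^{-1/2}$, but it shifts the expected number of 1s in $x$ by about $2\sqrt n/3$, which is comparable to the standard deviation of that count under the uniform distribution; that is why the mismatch matters.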

It’s not completely clear to me that this is an insuperable problem though. For example, in #24 Tim wrote,

“But it’s not hard to choose a dense set of sequences such that if you choose any two of them, x and y, then the number of 1s in x and the number of 1s in y do not differ by approximately n^{1/2}.”

I don’t quite see it… Isn’t it the case, as Terry pointed out in #17, that almost all strings in A have n/3 +/- O(sqrt(n)) 1’s? In fact, shouldn’t a random pair x, y in A have a 1-count differing by Theta(sqrt(n)) with high probability? [All hidden constants here depend on delta.]
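Both claims can be checked with a back-of-envelope calculation. Write $N_1(x)$ for the number of 1s in $x$ (notation introduced here). For uniform independent $x,y\in[3]^n$, $N_1(x)$ is binomial with parameters $n$ and $1/3$. Passing to strings in $A$ (density $\delta$) costs at most a factor $1/\delta$ per string, by Hoeffding's inequality on one side and the local limit theorem on the other ($C$ is an absolute constant).

```latex
\mathbb{E}\,N_1(x)=\frac n3,\qquad
\operatorname{Var} N_1(x)=n\cdot\frac13\cdot\frac23=\frac{2n}{9},\qquad
\operatorname{Var}\bigl(N_1(x)-N_1(y)\bigr)=\frac{4n}{9},

\Pr_{x\in A}\Bigl[\,\bigl|N_1(x)-\tfrac n3\bigr|\ge t\sqrt n\,\Bigr]\le\frac{2e^{-2t^2}}{\delta},
\qquad
\Pr_{x,y\in A}\Bigl[\,\bigl|N_1(x)-N_1(y)\bigr|\le\varepsilon\sqrt n\,\Bigr]\le\frac{C\,(\varepsilon+n^{-1/2})}{\delta^{2}}.
```

Taking $t$ large and $\varepsilon$ small compared with $\delta^2$, almost all strings in $A$ have $n/3\pm O(\sqrt n)$ 1s, and a random pair from $A$ has 1-counts differing by $\Theta(\sqrt n)$ with probability close to 1, with constants depending on $\delta$.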

Anyway, I agree it’s worrisome. To check whether it is indeed insuperable, I wonder if we could try “practising” this idea in the weak-Sperner setting Tim suggested at the end of #21. The analogous question there would be:

Suppose A contains at least a delta-fraction of the strings in {0,1}^n (w.r.t. the uniform distribution). Suppose we choose two strings (x,y) as follows:

(x_i,y_i) is uniform from {0,1}^2 with probability 1 – 1/sqrt(n)

(x_i,y_i) is (0,1) with probability 1/sqrt(n),

independently for each i = 1…n. Is there a positive probability that both x and y are in A?
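For small $n$ the probability in question can be computed exactly by summing the product weights over $A\times A$. In this sketch the parameter `q` stands for the per-coordinate probability of $(0,1)$ (the question takes $q=1/\sqrt n$), and the function names are hypothetical. As in the three-letter case, $x$ has the "wrong" marginal: each $x_i$ is 0 with probability $1/2+q/2$.

```python
import math
import random
from itertools import product

def pair_weight(a, b, q):
    """P((x_i, y_i) = (a, b)) for one coordinate: uniform on {0,1}^2 w.p. 1 - q, (0, 1) w.p. q."""
    w = (1 - q) / 4
    return w + q if (a, b) == (0, 1) else w

def prob_both_in(A, q):
    """Exact P(x in A and y in A); strings are equal-length 0/1 tuples, coordinates independent."""
    total = 0.0
    for x in A:
        for y in A:
            prob = 1.0
            for a, b in zip(x, y):
                prob *= pair_weight(a, b, q)
            total += prob
    return total

if __name__ == "__main__":
    n, delta = 8, 0.3
    rng = random.Random(0)
    cube = list(product((0, 1), repeat=n))
    A = [s for s in cube if rng.random() < delta]
    print("density of A       :", len(A) / len(cube))
    print("P(x, y both in A)  :", prob_both_in(A, 1 / math.sqrt(n)))
    print("density^2 (q = 0)  :", prob_both_in(A, 0.0))
```

With `q = 0` the two strings are independent and uniform, so the function returns exactly the square of the density of $A$; the interesting question is how far below that the value can be pushed when $q=1/\sqrt n$.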

February 2, 2009 at 4:45 pm

31. Gil, a quick remark about Fourier expansions and the $k=3$ case. I want to explain why I got stuck several years ago when I was trying to develop some kind of Fourier approach. Maybe with your deep knowledge of this kind of thing you can get me unstuck again.

The problem was that the natural Fourier basis in $[3]^n$ was the basis you get by thinking of $[3]^n$ as the group $\mathbb{Z}_3^n$. And if that’s what you do, then there appear to be examples that do not behave quasirandomly, but which do not have large Fourier coefficients either. For example, suppose that