I don’t have much to say mathematically, or rather I do but now that there is a wiki associated with polymath1, that seems to be the obvious place to summarize the mathematical understanding that arises in the comments on the various blog posts here and over on Terence Tao’s blog (see blogroll). The reason I am writing a new post now is simply that the 500s thread is about to run out.

So let me quickly make a few comments on how things seem to be going. (At some point in the future I will do so at much greater length.) Not surprisingly, it seems that we have reached a stage that is noticeably different from how things were right at the beginning. Certain ideas that emerged then have become digested by all the participants and have turned into something like background knowledge. Meanwhile, the discussion itself has become somewhat fragmented, in the sense that various people, or smaller groups of people, are pursuing different approaches and commenting only briefly if at all on other people’s approaches. In other words, at the moment the advantage of collaboration is that it is allowing us to do things in parallel, and efficiently because people are likely to be better at thinking about the aspects of the problem that particularly appeal to them.

Whether there will be a problem with lack of communication I don’t know. But perhaps there are enough of us that it won’t matter. At the moment I feel rather optimistic that we will end up with a new proof of DHJ(3) (but that is partly because I have a sketch that I have not subjected to appropriately stringent testing, which always makes me feel stupidly optimistic). In fact, what I’d really like to see is several related new proofs emerging, each drawing on different but overlapping subsets of the ideas that have emerged during the discussion. That would reflect in a nice way the difference between polymath and more usual papers written by monomath or oligomath.

Finally, a quick word on threading. The largest number of votes (at the time of writing) have gone to allowing full threading, but it is not an absolute majority: those who want unrestricted threading are outnumbered by those who have voted either for limited threading or for no threading at all. I think that points to limited threading. I’ve allowed the minimum non-zero amount. I can’t force you to abide by any rules here, but I can at least give my recommendation, which is this. For polymath comments, I’d like to stick as closely as possible to what we’ve got already. So if you have a genuinely new point to make, then it should come with a new number. However, if you want to give a quick reaction to another comment, then a reply to it is appropriate. If you have a longish reply, then it should appear as a new comment, but here there is another use of threading that could be very helpful, which is to add replies such as, “I have a counterexample to this conjecture — see comment 845 below.” In other words, it will allow forward referencing as well as backward referencing. Comments on this post will start at 800, and if yours is the nth reply to comment 8**, then you should number it 8**.n. Going back to the question of when to reply to a comment, a good rule of thumb is that you should do so only if your reply very much has the feel of a reaction and not of a full comment in itself.

Also, it isn’t possible to have threading on some posts and not on others, but I’d be quite grateful if we didn’t have threaded comments on any posts earlier than this one. And a quick question: does anyone know what happens to the threaded comments if you turn the threading feature off again, which is something I might find myself wanting to do?

February 23, 2009 at 6:12 pm |

800. Here is a writeup of a Fourier/density-increment argument for Sperner, implementing Terry’s #578 using some of the Fourier calculations Tim and I were doing:

http://www.cs.cmu.edu/~odonnell/wrong-sperner.pdf

Hope it’s mostly bug-free!

February 23, 2009 at 6:14 pm |

Tim – First of all I have to say that I did enjoy reading the blog and contributing a bit. On the other hand it has become less and less comfortable for me to post any comments. You wrote that

“Meanwhile, the discussion itself has become somewhat fragmented, in the sense that various people, or smaller groups of people, are pursuing different approaches and commenting only briefly if at all on other people’s approaches.”

Let me tell you what my experience is. I’m reading posts using notation from ergodic theory or Fourier analysis with great interest, but I will rarely make any comment as I’m not an expert in that field. Some posts are still accessible to me and some others aren’t. There is no way that I will understand posts referring to noise stability unless I learn something more about it, which I’m not planning to do in the near future unless it turns out to be crucial for the project. Maybe the blog is still accessible for the majority of readers but I’m finding it more and more difficult to follow. For the same reasons I feel a bit awkward about posting notes. It’s tempting to work on the problems alone without sharing them with the others but this is clearly against the soul of the project.

February 23, 2009 at 6:28 pm |

Metacomment. Jozsef, in the light of what you say, I invite others to give their reactions to how things are going for them. My feeling, as I said in the post, is that we are entering uncharted territory (or rather, a different uncharted territory from the initial one) and it is not clear that the same rules should still apply. What we have done so far has, in my view, been a wonderful way of doing very quickly and thoroughly the initial exploration of the problem, and it feels as though the best way of making further progress will be if we start dealing with technicalities and actually write up some statements formally. I am hoping to do that in the near future, Ryan is doing something along those lines, Terry recently put a Hilbert-spaces lemma on the wiki, etc.

The obvious hazard with this is that people may end up writing things that others do not understand, or can understand only if they are prepared to invest a lot of time and effort. So if we want to continue to operate as a collective unit, so to speak, it is extremely important for people who write formal proofs to give generous introductions explaining what they are doing, roughly how the proof goes, why they think it could be useful for DHJ(3) (or something related to DHJ(3)), and so on. This is perhaps an area where people who have been following the discussion without actually posting comments could be helpful—just by letting us know which bits you find hard to understand. For example, if you see something on the wiki that is clearly meant to be easily comprehensible but it in fact isn’t, then it would be helpful to know. (However, some of the wiki articles are over-concise simply because we haven’t had time to expand them yet.)

Do others have any thoughts about where we should go from here?

February 23, 2009 at 6:47 pm |

801. Fourier/Sperner

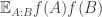

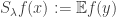

Ryan, re the remark at the end of the thing you wrote up, if we do indeed have an expression of the form $\mathbb{E}_{p\leq q}\sum_{A\subset[n]}\lambda_{p,q}^{|A|}\hat{f}_p(A)\hat{f}_q(A)$ for the total weight […] for […] when everything is according to equal-slices density, then it seems to me not completely obvious that one couldn’t prove some kind of positivity result for the contribution from each $A$, especially given your calculation that $\lambda_{p,q}$ splits up as a product. But probably you’ve thought about that. I can see that it’s a problem that […] is not symmetric in p and q, but can one not make it symmetric by talking instead about pairs of disjoint sets?

Or perhaps it’s false that you have positivity and the disjoint-pairs formulation is what you need to get a similar result where it becomes true. I hope this vague comment makes some sense to you. I’ll see if I can think of a counterexample. In fact, isn’t this a counterexample: let n=1, and let f(0)=-1, f(1)=1. Then the expectation of f(x)f(y) over combinatorial lines (x,y) is -1 and the expectation of f is 0.

February 23, 2009 at 7:25 pm

801.1 Please ignore the last paragraph of this comment — for analytic purposes we need to consider degenerate lines, and as you say the usual proof of Sperner proves positivity. Hmm — not sure that the threading adds much here.
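The n=1 example from comment 801 is small enough to check by hand, and the sketch below does so. It is only an illustration: putting uniform weight on the three pairs x ≤ y is an assumption made for simplicity (the thread works with equal-slices weights), and the names `pairs`, `genuine` and `average` are invented here.

```python
from fractions import Fraction
from itertools import product

def f(x):
    # The function from comment 801 on {0,1}^1: f(0) = -1, f(1) = 1.
    return -1 if x == 0 else 1

# All pairs (x, y) with x <= y: two degenerate lines and one genuine line.
pairs = [(x, y) for x, y in product((0, 1), repeat=2) if x <= y]
genuine = [(x, y) for x, y in pairs if x != y]

def average(lines):
    # Exact average of f(x) f(y) over the given list of lines.
    return Fraction(sum(f(x) * f(y) for x, y in lines), len(lines))

avg_genuine = average(genuine)  # only the line (0, 1) contributes
avg_all = average(pairs)        # uniform weight on all three pairs (assumed)
```

With genuine lines only the average is -1, as stated in 801; once the degenerate lines (0,0) and (1,1) are included with equal weight it becomes 1/3, which is at least the square of the mean of f (here 0).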

February 23, 2009 at 8:05 pm

801.2: Yes, it’s not clear to me why $\mathbb{E}_{p \leq q}[\hat{f}_p(A) \hat{f}_q(A)]$ needs to be nonnegative. This is a shame, because: a) we’d be extremely done if we could show this; b) as $q \to p$ the quantity approaches $\mathbb{E}_p[\hat{f}_p(A)^2]$, which is of course nonnegative. Unfortunately, if you make $q$ extremely close to $p$ so as to force nonnegativity, you’ll probably be swamped with degenerate lines.

February 23, 2009 at 8:20 pm |

Metacomment: I sympathise with Jozsef’s position. Luckily, some of the directions we’re working on are in areas I’m familiar with, so I can comment there. But to be honest, I know nothing about ergodic methods, am extremely shaky on what triangle-removal is, and am only kind of on top of Szemeredi’s regularity lemma. So indeed it takes me quite a while just to read comments on these topics. That’s fine with me though.

Thing is, even in the areas I’m familiar with it’s awfully tough to keep up with a certain pair of powerhouses who post regularly to the project 🙂 In a perfect world I’d post more definitions, add more intuition to my comments, update the wiki with stuff about noise sensitivity and so forth — but it already takes me many hours just to quasi-keep up with Tim and Terry and generate non-nonsensical comments. I’m fine with this too, though.

Finally, I agree that it’s also gotten to the point where it’s sort of impossible to explore certain directions — especially ones with calculations — “online”. You probably won’t be surprised to learn I spent a fair bit of time working out the pdf document in #800 by myself on paper. I hope this isn’t too much against the spirit of the project, but I couldn’t find any way to do it otherwise. I would feel like I were massively spamming people if I tried to compute like this online, with all the associated wrong turns, miscalculations, and crazy mistakes I make.

February 23, 2009 at 8:25 pm |

802. Sperner/Fourier.

By the way, I’m pretty sure one can also do Roth’s Theorem (or at least, finding “Schur triples” in […]) in this way. It might sound ridiculous to say so, since Roth/Meshulam already gave a highly elegant density-increment/Fourier-analysis proof.

But the point is that:

1. it works in a non-“arithmetic”/“algebraic” way

2. it works by doing density increments that restrict just to *combinatorial* subspaces

3. it demonstrates that the method can sometimes work in cases where one needs to find *three* points in the set satisfying some relation.

I can supply more details later but right now I have to go to a meeting…

February 23, 2009 at 8:54 pm |

Metacomment.1

(If this .1 automatically creates a thread, I will consider it a miracle.)

I think the nature of this collaboration via different people writing blog remarks is somewhat similar to the massive collaboration in ordinary math where people are writing papers partially based on earlier papers.

Here the time scale is quicker and the nature of contributions is more tentative. Half-baked ideas are encouraged.

It is not possible to follow everything or even most of the things or even a large fraction of them. (Not to speak about understanding and digesting and remembering.) I think it can be useful that people will not assume that other people read earlier things and from time to time repeat and summarize and overall be as clear as possible. (I think comments of the form: “please clarify” can be useful.) On the other hand, like in usual collaborations people often write things mainly for themselves (e.g. the Hardy-Littlewood rules) and this is very fine.

(A question: is Ryan’s write-up a correct new proof of (weak) Sperner using density increment and Fourier? (The file is called wrong-Sperner but reading it I realize that this means the proof is right but not yet the “Fourier/Sperner proof from the book” or something like that. Please clarify.))

There are various avenues which I find very interesting even if not directly related to the most promising avenues towards DHJ proof and even some ideas I’d like to share and explore. (One of these is a non-density-decreasing Fourier strategy.)

One general avenue is various reductions (usually based on simple combinatorial reasoning): Jozsef mentioned Moser(k=6) -> DHJ(k=3), and some reductions to Szemeredi (k=4) were considered. Summarizing those and pushing for further reductions can be fruitful.

Also the equivalence of DHJ for various measures (I suppose this is also a reduction business) is an interesting aspect worth further study. When there is a group acting, such equivalences are easy and of a general nature. But for DHJ I am not sure how general they are.

February 23, 2009 at 11:32 pm

Gil wrote: “(The file is called wrong-Sperner but reading it I realize that this means the proof is right but not yet the “Fourier/Sperner proof from the book” or something like that. Please clarify.)”

Yes — I forgot to mention that. I think/hope the writeup’s correct, but I called it “wrong-Sperner” because I still feel there’s something not-from-the-Book about it.

February 23, 2009 at 10:07 pm |

Metacomment.2

It certainly does feel that the project is developing into a more mature phase, where it resembles the activity of a highly specialised mathematical subfield, rather than a single large collaboration, albeit at very different time scales than traditional activity. (The wiki is somewhat analogous to a “Journal of Attempts to Prove Density Hales Jewett”, and the threads are analogous to things like “4th weekly conference on Density Hales Jewett” 🙂 .) Also we are hitting the barrier that the number of promising avenues of research seems to exceed the number of people actively working on the project.

But I think things will focus a bit more (and become more “polymathy”) once we identify a particularly promising approach to the problem (I think we already have a partially assembled skeleton of such, and a significant fraction of the tools needed have already been sensed). This is what is going on in the 700 thread, by the way; we are focusing largely on one subproblem at a time (right now, it’s getting a good Moser(3) bound for n=5) and we seem to be using the collaborative environment quite efficiently.

My near-term plan is to digest enough of the ergodic theory proofs that I can communicate in finitary combinatorial language a formal proof of DHJ(2) that follows the ergodic approach, and a very handwavy proof of DHJ(3). (The Hilbert space lemma I’m working on is a component of the DHJ(2) analysis.) The finitisation of DHJ(2) looks doable, but is already quite messy (more “wrong” than Ryan’s “wrong” proof of DHJ(2)), and it seems to me that formally finitising the ergodic proof of DHJ(3), while technically possible, is not the most desirable objective here. But there do seem to be some useful ideas that we should be able to salvage from the ergodic proof that I would like to toss out here once I understand them properly. (For instance, there seems to be an “IP van der Corput lemma” that lets one “win” when one has 01-pseudorandomness (which roughly means that if one takes a medium dimensional slice of A and then flips one of the fixed digits from 0 to 1, then the pattern of A on the shifted slice is independent of the pattern of A on the original slice). I would like to understand this lemma better. The other extreme, that of 01-insensitivity, is tractable by Shelah’s trick of identifying 0 and 1 into a single letter of the alphabet, and the remaining task is to apply a suitable structure theorem to partition arbitrary sets into 01-structured and 01-pseudorandom components, analogously to how one would apply the regularity lemma to one part of the tripartite graph needed to locate triangles.)

February 23, 2009 at 10:34 pm |

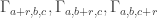

803. Sperner/Fourier

Re the discussion in 801, this is a proposal for getting positivity. I haven’t checked it, and it could just obviously not work.

The reason I make it is that I just can’t believe the evidence in front of me: the trivial positivity in “physical space” just must be reflected by equally trivial positivity in “frequency space”. I wonder if the problem, looked at from the random-permutation point of view, is that to count pairs of initial segments it is not completely sensible to count them with the smaller one always first. After all, the proof of positivity goes by saying that that is actually half the set of pairs of initial segments. So maybe we should look at a sum of the form […], where $A:B$ is a hastily invented notation for “$A\subset B$ or $B\subset A$.”

How could we implement this Ryan style? Well, obviously we would average over all pairs of probabilities (p,q). And if we choose a particular pair, then the obvious thing to do would be to choose independent random variables […] and […] in such a way that the resulting set will give you a probability p of being in A, a probability q of being in B, and a certainty that A:B. To achieve that in a unified way, for each $i\in[n]$ we choose a random $t\in [0,1]$ and set $i\in A$ if and only if $t\leq p$ and $i\in B$ if and only if $t\leq q$.
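One reading of the coupling described in this comment is sketched below: each coordinate gets a single uniform threshold, shared by both sets, so that membership in A and in B is decided by comparing the same number with p and with q. The function name `coupled_pair`, the seed and the parameter values are all invented for the illustration, and the thresholds are drawn independently across coordinates, which is an assumption.

```python
import random

def coupled_pair(n, p, q, rng):
    """Sample (A, B) as subsets of range(n) from one shared threshold per coordinate."""
    thresholds = [rng.random() for _ in range(n)]
    # Coordinate i joins A when its threshold is at most p, and B when it is at most q.
    A = {i for i, t in enumerate(thresholds) if t <= p}
    B = {i for i, t in enumerate(thresholds) if t <= q}
    return A, B

rng = random.Random(0)
A, B = coupled_pair(20, 0.3, 0.7, rng)  # p <= q forces A to be a subset of B
C, D = coupled_pair(20, 0.8, 0.2, rng)  # p >= q forces D to be a subset of C
```

Each coordinate lies in the first set with probability p and in the second with probability q, and the two sets are always nested, in whichever direction the order of p and q dictates.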

I haven’t even begun to try any calculations here, but I would find it very strange if one didn’t get some kind of positivity over on the Fourier side.

Having said that, the fact that one expands f in a different way for each p does make me not quite as sure of this positivity as I’m pretending to be.

February 23, 2009 at 11:43 pm

803.1 Positivity:

In my experience, obvious positivity on the physical side doesn’t always imply obvious positivity on the Fourier side. Here is an example (although it goes in the reverse direction).

Let $f$ be arbitrary and consider $\mathbb{E}[f(x) f(y)]$, where $x$ is uniform random and $y$ is formed by flipping each bit of $x$ with probability […]. (Note that $y$ has the same distribution as $x$, so there’s no asymmetry here.)

Now $x$ and $y$ are typically quite far apart in Hamming distance, and since $f$ can have positive and negative values, big and small, it seems far from obvious that $\mathbb{E}[f(x) f(y)]$ should be nonnegative.

But this is obvious on the Fourier side: it’s precisely […].
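For small n the claim can be checked by brute force. The sketch below assumes the standard identity E[f(x) f(y)] = Σ_S (1-2δ)^{|S|} f̂(S)^2 for the uniform measure on {0,1}^n, where δ is the flip probability. The value δ = 0.2, the random test function and the helper names are assumptions made for the illustration, since the flip probability is not visible in the comment.

```python
import random
from itertools import product

def fourier_coefficients(f, n):
    """Return {S: hat f(S)}, with S encoded as a 0/1 tuple, under the uniform measure."""
    points = list(product((0, 1), repeat=n))
    coeffs = {}
    for S in points:
        total = 0.0
        for x in points:
            sign = (-1) ** sum(s * xi for s, xi in zip(S, x))
            total += f[x] * sign
        coeffs[S] = total / len(points)
    return coeffs

def noisy_correlation(f, n, delta):
    """Exact E[f(x) f(y)]: x uniform, y equal to x with each bit flipped with probability delta."""
    points = list(product((0, 1), repeat=n))
    total = 0.0
    for x in points:
        for y in points:
            d = sum(xi != yi for xi, yi in zip(x, y))  # Hamming distance
            total += f[x] * f[y] * delta ** d * (1 - delta) ** (n - d)
    return total / len(points)

n, delta = 4, 0.2  # illustrative values, not taken from the thread
rng = random.Random(1)
f = {x: rng.uniform(-1, 1) for x in product((0, 1), repeat=n)}
physical = noisy_correlation(f, n, delta)
fourier = sum((1 - 2 * delta) ** sum(S) * c * c
              for S, c in fourier_coefficients(f, n).items())
```

The two numbers agree up to rounding, and the Fourier-side sum is visibly a sum of nonnegative terms whenever δ ≤ 1/2.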

February 24, 2009 at 12:05 am

803.2 Positivity

That’s interesting. The non-obvious assertion is that a certain matrix (where the value at (x,y) is the probability that you get y when you start from x and flip) is positive definite. And the Fourier transform diagonalizes it. And that helps to see why it’s genuinely easier on the Fourier side: given a quadratic form, there is no reason to expect it to be easy to see that it is positive definite without diagonalizing it. (However, I am tempted to try with your example …)

February 24, 2009 at 11:52 am

803.3 Positivity

Got it! Details below in an hour’s time (after my lecture). It will be comment 812 if nobody else has commented by then.

February 23, 2009 at 11:15 pm |

804. DHJ(2.7)

Randall: I tried to wikify your notes from 593 at

http://michaelnielsen.org/polymath1/index.php?title=DHJ(2.7)

Unfortunately due to a wordpress error, an important portion of your Tex (in particular, part of the statement of DHJ(2.7)) was missing (the portion between a less than sign and a greater than sign – unfortunately this was interpreted as HTML and thus eaten). So I unfortunately don’t understand the proof. Could you possibly take a look at the page and try to restore the missing portion? Thanks!

February 24, 2009 at 5:04 am

Sorry about that. After several missteps, I may have managed to correct it in full….

February 23, 2009 at 11:22 pm |

805. Sperner

(Previous comment should be 804.)

Ryan’s notes (which, incidentally, might also be migrated to the wiki at some point) seem to imply something close to my Lemma in 578 that had a bogus proof, namely that if f is non-uniform in the sense that […] or […] is large for some g, then f has significant Fourier concentration at low modes (i.e. the energy […] is large), and hence f correlates with a function of low influence (e.g. f correlates with […], where […] is the Fourier multiplier that multiplies the S Fourier coefficient by […]).

If this is the case, then (because polynomial combinations of low influence functions are still low influence) one can iterate this in the usual energy-increment manner (or as in the proof of the regularity lemma) to obtain a decomposition $f = f_U + f_{U^\perp}$, where $f_U$ is uniform and $f_{U^\perp}$ has low influence, is non-negative and has the same density as $f$. (This is an oversimplification; there are error terms, and one has to specify the scales at which uniformity or low influence occurs, but ignore these issues for now.)

If this is the case, then the Sperner count $\mathbb{E}[f(x) f(y)]$ should be approximately equal to $\mathbb{E}[f_{U^\perp}(x) f_{U^\perp}(y)]$. But if $f_{U^\perp}$ is low influence, this should be approximately $\mathbb{E}[f_{U^\perp}(x)^2]$. Meanwhile, we have $\mathbb{E}[f_{U^\perp}(x)] = \mathbb{E}[f(x)]$, so by Cauchy-Schwarz we get at least $(\mathbb{E}[f(x)])^2$, as desired.
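Written as one chain, the last step might look as follows. This is a sketch: $h$ is a hypothetical name for the low-influence, non-negative part of $f$, and the approximation sign hides the error terms that the comment sets aside.

```latex
% h is a hypothetical name for the low-influence, non-negative part of f.
\[
  \mathbb{E}[h(x)\,h(y)]
  \;\approx\; \mathbb{E}[h(x)^2]
  \;\ge\; \bigl(\mathbb{E}[h(x)]\bigr)^2
  \;=\; \bigl(\mathbb{E}[f(x)]\bigr)^2 .
\]
```

The approximation uses low influence, the inequality is Cauchy-Schwarz applied to $h \cdot 1$, and the final equality uses that $h$ has the same density as $f$.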

February 23, 2009 at 11:24 pm

805.1

p.s. Something very similar goes on in the ergodic proof of DHJ(2). What I call […] here will be called […], where P is a certain “weak limit” of shift operators. The key point is that P is going to be an orthogonal projection in L^2 (this is the upshot of a Hilbert space lemma that I am working on on the wiki).

February 24, 2009 at 12:01 am |

806. Sperner.

Re #805, Terry, one thing I had trouble with was, roughly speaking, showing that indeed $\mathbb{E}[f_{U^\bot}(x) f_{U^\bot}(y)]$ is close to $\mathbb{E}[f_{U^\bot}(x)^2]$.

It seemed logical that this would be true but I had technical difficulties actually making the switch from […] to […]. In some sense that’s why I wrote the notes, to really convince myself that one could do something. Unfortunately, that something was to define […] all the way down to just the lowest mode; i.e., to look at density rather than energy.

Perhaps we could try to think about, at a technical level, how to really pass from […] to […] for low-influence functions…

February 24, 2009 at 1:49 am

806.2 Sperner

Well, I would imagine that $f_{U^\perp}$ would have Fourier transform concentrated in sets S of size […], and this should mean that the average influence of $f_{U^\perp}$, i.e. $\mathbb{E} |f_{U^\perp}(x) - f_{U^\perp}(y)|^2$, where x and y differ by just one bit, should be small (something like […]). This should then be able to be iterated to extend to x and y being several bits apart rather than just one bit. (In order to do this properly, one will probably need to have a whole bunch of different scales in play, not just a single scale […]. There are various pigeonhole tricks that let one find a whole range of scales at which all statistics are the same [I call this the “finite convergence principle” in my blog], so as a first approximation let’s pretend that concentration at one scale […] is the same as concentration at other small scales such as […]. The point is that while the L^2 energy of f could be significant in the range […] for a single […], the pigeonhole principle prevents it from being significant for all such choices of […].)
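The link between low-degree Fourier concentration and small average influence can be checked numerically for small n. The sketch below assumes the uniform measure on {0,1}^n and takes y to be x with one uniformly chosen bit flipped, in which case E|f(x) - f(y)|^2 = (4/n) Σ_S |S| f̂(S)^2. The normalisation, the random test function and the helper names are assumptions made for the illustration, not the conventions of the notes.

```python
import random
from itertools import product

def fourier_coefficients(f, n):
    """Return {S: hat f(S)}, with S encoded as a 0/1 tuple, under the uniform measure."""
    points = list(product((0, 1), repeat=n))
    coeffs = {}
    for S in points:
        total = 0.0
        for x in points:
            sign = (-1) ** sum(s * xi for s, xi in zip(S, x))
            total += f[x] * sign
        coeffs[S] = total / len(points)
    return coeffs

def average_influence(f, n):
    """E |f(x) - f(y)|^2 with x uniform and y equal to x with one uniformly chosen bit flipped."""
    points = list(product((0, 1), repeat=n))
    total = 0.0
    for x in points:
        for i in range(n):
            y = x[:i] + (1 - x[i],) + x[i + 1:]  # flip coordinate i
            total += (f[x] - f[y]) ** 2
    return total / (len(points) * n)

n = 4  # illustrative size
rng = random.Random(2)
f = {x: rng.uniform(-1, 1) for x in product((0, 1), repeat=n)}
physical = average_influence(f, n)
fourier = (4 / n) * sum(sum(S) * c * c
                        for S, c in fourier_coefficients(f, n).items())
```

Each coefficient is weighted by |S|/n on the Fourier side, so a function whose energy sits on sets S that are small compared with n has small average influence.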

February 24, 2009 at 12:08 am |

807. Fourier/density-increment.

In #802, I guess I’m getting the terminology all wrong. I should say that another problem I think the same method will work for is the problem in […] where you’re looking for “lines” of length 3 where in the wildcard coordinates you’re allowed either (0,1,1), (1,0,1), or (1,1,0).

I don’t know if this problem has a name. It’s not quite a Schur triple. I personally would call it the “Not-Two” problem because in every coordinate you’re allowed (0,0,0), (1,1,1), (0,1,1), (1,0,1), or (1,1,0): anything where the number of 0’s is “Not Two”.
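The list of allowed coordinate patterns can be recovered mechanically from the “Not Two” rule. A minimal sketch follows; the variable names are invented here.

```python
from itertools import product

# Coordinate patterns (a, b, c) in {0,1}^3 whose number of 0's is not two.
allowed = [t for t in product((0, 1), repeat=3) if t.count(0) != 2]

# Constant patterns correspond to fixed coordinates, the rest to wildcard coordinates.
constant = [t for t in allowed if len(set(t)) == 1]
wildcard = [t for t in allowed if len(set(t)) > 1]
```

This gives five patterns in total: the two constant ones, (0,0,0) and (1,1,1), and the three wildcard ones listed in the comment.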

February 24, 2009 at 12:59 am

807.1

A question and 3 remarks:

Q: I remember when we first talked about it there were obvious difficulties for why the Fourier + increasing-density strategy won’t work. In the end, what is the basic change that makes it work? Is it changing the measure?

R1: sometimes it is equally convenient (and even more so) not to work with […] measures and their associated Fourier transform but to consider functions on $[0,1]^n$ and use the Walsh orthonormal basis even for every [0,1]. So given p we represent our set by a subset of the solid cube and use the “fine” Walsh transform all the time.

R2: If changing the measure can help when small Fourier coefficients do not suffice for quasirandomness, is there hope it will help for case k=4 of Szemeredi?

R3: there are some problems regarding influences and threshold phenomena where a better understanding of the relation between equal-slices density and specific […] densities is needed. Maybe some of the tools from here can help.

February 24, 2009 at 1:13 am |

808. Another Fourier/Sperner approach

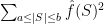

Let me mention briefly a non-density-increasing approach to Sperner. You first note that not having lines with 1 wild card implies that […]. If you can show that the nonempty Fourier coefficients are concentrated (in terms of |S|) then I think you can conclude that the density is small.

You want to control expressions of the form […]. Those are related to the number of elements in the set when we fix the values of n-k variables and look over all the rest. These numbers are controlled by the density of Sperner families for subsets of {1,2,…,k}. So this looks like a circular thing but maybe it allows some bootstrapping.

February 24, 2009 at 5:42 am |

809. Response to 806.2 (Sperner).

Terry wrote, “This should then be able to be iterated to extend to x and y being several bits apart rather than just one bit.”

Hmm, but one source of annoyance is that y is not just x with […] or so bits flipped; it’s x with […] or so 0’s flipped to 1’s. As you get more and more 1’s in there, the intermediate strings are less and less distributed as uniformly random strings. So it’s not 100% clear to me that you can keep appealing to average influence, since influence as defined is a uniform-distribution concept.

Overall I agree that this is probably more of a nuisance than a genuine problem, but it was stymieing me a bit so I thought I’d ask.

February 24, 2009 at 6:07 am

809.2 Sperner and influence

Ryan, I think the trusty old triangle inequality should deal with things nicely. (I would need a binomial model of bit-flipping rather than a Poisson model, but I’m sure this makes very little difference.)

Suppose […] has average influence […]; thus flipping a bit from a 0 to 1 or vice versa would only affect things by […] on the average. Conditioning, we conclude that if we flip just one bit from a 0 to 1, we expect […] to change by at most […] on the average. Now we iterate this process […] times. As long as […] is much less than […] (as in your notes), there is no significant distortion of the underlying probability distribution and we see that if y differs by […] 0->1 bits from x, then […] and […] will differ by […] on the average.

This will be enough for our purposes as long as the influence […] is a really small multiple of […]. One can’t quite get this by working at a single scale (one gets […] instead), but one can do so if one works with a whole range of scales and uses the energy increment argument. I’ve sketched out the details on the wiki at

http://michaelnielsen.org/polymath1/index.php?title=Fourier-analytic_proof_of_Sperner

February 24, 2009 at 6:27 am |

810. DHJ(2.7)

Randall, thanks for cleaning up the file! It is a very nice proof, and I’d like to try to describe it in my own words here.

DHJ(2.7) is the strengthening of DHJ(2.6) that gives us three parallel combinatorial lines (i.e. they have the same wildcard set), the first of which hits the dense set A in the 0 and 1 position, the second hits it in the 1 and 2 position, and the third in the 2 and 0 position.

To describe Randall’s argument, I’d first like to describe how it gives yet another proof of DHJ(2) which is quite simple (and gives civilised bounds). It uses the density increment argument: we want to prove DHJ(2) at some density $\delta$ and we assume that we’ve already proven it at any significantly bigger density.

Now let A be a subset of $[2]^n$ of density $\delta$ for some n. We split n into a smallish r and a biggish n-r, thus viewing $[2]^n$ as a whole bunch of (n-r)-dimensional slices, each indexed by a word in $[2]^r$.

If any of the big slices has density significantly bigger than $\delta$, we are done; so we can assume that all the big slices have density not much larger than $\delta$ (e.g. at most […]). Because the total density is $\delta$, we can subtract and conclude that all the big slices have density close to $\delta$.

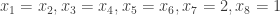

Now we look at the r+1 slices indexed by the words […]. Each of these slices intersects the set A with density about $\delta$. Thus by the pigeonhole principle, if r is much bigger than $1/\delta$, then two of the A-slices must share a point in common, i.e. there exist $w \in [2]^{n-r}$ and i,j such that […] both lie in A. Voila, a two-dimensional line.
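The pigeonhole step can be sketched in code. This is only an illustration under an assumption of mine: I index the r+1 slices by the “staircase” words 0^i 1^(r-i) for i = 0,…,r, which may differ from the indexing intended above, and the function name is hypothetical. Two points with the same tail w and prefixes 0^i 1^(r-i), 0^j 1^(r-j) (i < j) differ exactly on coordinates i,…,j-1, so they form a combinatorial line with that wildcard set.

```python
from itertools import product


def find_line_by_pigeonhole(A, n, r):
    """A is a set of 0/1 tuples of length n.

    Scan the r+1 slices with prefixes 0^i 1^(r-i), i = 0..r (assumed indexing),
    and return (i, j, w) with i < j such that both prefix_i + w and prefix_j + w
    lie in A, or None if no tail w occurs in two of these slices.
    """
    first_slice = {}  # tail w -> index of the first staircase slice containing it
    for i in range(r + 1):
        prefix = (0,) * i + (1,) * (r - i)
        for w in product((0, 1), repeat=n - r):
            if prefix + w in A:
                if w in first_slice:
                    return first_slice[w], i, w
                first_slice[w] = i
    return None


if __name__ == "__main__":
    A = {(1, 1, 0), (0, 1, 0)}
    print(find_line_by_pigeonhole(A, n=3, r=2))
```

If the r+1 slices of A together contain more than 2^(n-r) points, some tail w must repeat and the search is guaranteed to succeed; that is the role of taking r much bigger than the reciprocal of the density.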

The same argument gives DHJ(2.7). Now there are three different graphs on r+1 vertices: the 01 graph, the 12 graph, and the 20 graph. Vertices i and j are connected in the 01 graph if the […]-slice and the […]-slice of A have a point in common; the 12 graph and the 20 graph are defined similarly. Together, these form an 8-coloured graph on r+1 vertices. By Ramsey’s theorem, if r is big enough there is a monochromatic subgraph of size […]. But by the preceding pigeonhole argument, we see that none of the 01 graph, the 12 graph, or the 20 graph can vanish completely here, so they must instead all be complete, and we get three parallel combinatorial lines, each intersecting A in two of the three positions.

February 24, 2009 at 6:53 am |

811. Prelude to some more density-increment arguments.

I was hoping to give an illustration of the #578 technique for a problem in $[3]^n$. But it got too late for me to finish writing it, so I’ll just give the “background info” I managed to write. This probably ought to go in the wiki rather than in a post, but having spent a bit of time to get Luca’s converter working for me, I didn’t have the energy to also convert to the third, wiki format. More on the #578 method tomorrow.

Here, then, are some basics on noise and correlated product spaces; for more, see e.g. this paper of Mossel.

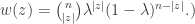

Let $\Omega$ be a small finite set; for example, $\Omega = [3]$. Let $\mu$ be a probability distribution on $\Omega$. Abusing notation, write also $\mu$ for the corresponding product distribution on $\Omega^n$. For $0 \leq \rho \leq 1$, define the noise operator $T_\rho^\mu$, which acts on functions $f : \Omega^n \to \mathbb{R}$, as follows:

$$(T_\rho^\mu f)(x) = \mathop{\bf E}_{{\bf y} \sim^\mu_\rho x}[f({\bf y})],$$

where ${\bf y} \sim^\mu_\rho x$ denotes that ${\bf y}$ is formed from $x$ by doing the following, independently for each $i \in [n]$: with probability $\rho$, set ${\bf y}_i = x_i$; with probability $1 - \rho$, draw ${\bf y}_i$ from $\mu$ (i.e., “rerandomize the coordinate”). (I use boldface for random variables.)

Here are some simple facts about the noise operator:

Fact 1: $\mathop{\bf E}_{{\bf x} \sim \mu}[T_\rho^\mu f({\bf x})] = \mathop{\bf E}_{{\bf x} \sim \mu}[f({\bf x})]$.

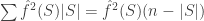

Fact 2: For $p \geq 1$ we have $\|T_\rho^\mu f\|_p \leq \|f\|_p$, where $\|f\|_p$ denotes $\mathop{\bf E}_{{\bf x} \sim \mu}[|f({\bf x})|^p]^{1/p}$.

Fact 3: The $T_\rho^\mu$’s form a semigroup, in the sense that $T_{\rho_1}^\mu T_{\rho_2}^\mu = T_{\rho_1 \rho_2}^\mu$.

Fact 4: $\mathop{\bf E}_{{\bf x} \sim \mu}[f({\bf x}) \cdot T_\rho^\mu f({\bf x})] = \mathop{\bf E}_{{\bf x} \sim \mu}[(T_{\sqrt{\rho}}^\mu f({\bf x}))^2]$. We use the notation $\mathbb{S}_\rho^\mu(f)$ for this quantity.

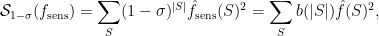

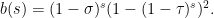

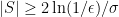

Fact 5: $\mathbb{S}_{1-\gamma}^\mu(f) = \mathop{\bf E}_\mu[(T_{\sqrt{1 - \gamma}}^\mu f)^2] = \mathop{\bf E}_{{\bf V}}[\mathop{\bf E}_\mu[f|_{{\bf V}}]^2]$, where: a) ${\bf V}$ is a randomly chosen combinatorial subspace, with each coordinate being fixed (from $\mu$) with probability $1 - \gamma$ and left “free” with probability $\gamma$, independently; b) $f|_{{\bf V}}$ denotes the restriction of $f$ to this subspace, and $\mathop{\bf E}_\mu[f|_{{\bf V}}]$ denotes the mean of this restricted function, under $\mu$ (the product distribution on the free coordinates of ${\bf V}$).
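The first few facts are easy to sanity-check by brute force on a tiny product space. The sketch below computes the noise operator exactly from its definition (keep each coordinate with probability rho, otherwise redraw it from mu) and leaves Facts 1, 3 and 4 to be compared numerically. The function names, the particular mu on {0,1,2} and the test function are illustrative choices of mine, not part of the notes.

```python
import math
from itertools import product


def noise_operator(f, mu, rho, n):
    """Exact T_rho^mu f on Omega^n, where Omega = keys of mu.

    Each coordinate of y equals that of x with probability rho and is
    redrawn from mu with probability 1 - rho, independently.
    """
    omega = sorted(mu)
    points = list(product(omega, repeat=n))
    Tf = {}
    for x in points:
        total = 0.0
        for y in points:
            prob = 1.0
            for xi, yi in zip(x, y):
                prob *= rho * (1.0 if xi == yi else 0.0) + (1 - rho) * mu[yi]
            total += prob * f[y]
        Tf[x] = total
    return Tf


def mean(f, mu, n):
    """E_{x ~ mu^n}[f(x)]; n is kept only for a uniform call signature."""
    return sum(math.prod(mu[xi] for xi in x) * v for x, v in f.items())


if __name__ == "__main__":
    mu = {0: 0.5, 1: 0.3, 2: 0.2}
    n = 2
    f = {x: x[0] ** 2 + 3 * x[1] - x[0] * x[1] for x in product(sorted(mu), repeat=n)}
    Tf = noise_operator(f, mu, 0.49, n)
    # Fact 1: the two means agree.
    print(mean(f, mu, n), mean(Tf, mu, n))
    # Fact 4: E[f * T_rho f] against E[(T_sqrt(rho) f)^2] with sqrt(0.49) = 0.7.
    Ts = noise_operator(f, mu, 0.7, n)
    print(mean({x: f[x] * Tf[x] for x in f}, mu, n),
          mean({x: Ts[x] ** 2 for x in f}, mu, n))
```

Fact 4 holds because the one-coordinate kernel is reversible with respect to mu, so the operator is self-adjoint and the semigroup property of Fact 3 lets one split it into two equal halves.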

February 24, 2009 at 1:32 pm |

812. Positivity

This concerns Ryan’s example of a quantity that is easily seen to be non-negative on the Fourier side, and not so easily seen to be non-negative in physical space. Except that I now have an easy argument in physical space. I don’t know how relevant this is, but there is some potential motivation for it, which is that perhaps the simple idea behind the proof could help in Ryan’s quest for a “non-wrong” proof of Sperner.

The problem, laid out in 803.1, was this. Let be a function defined on

be a function defined on  . Now choose two random points

. Now choose two random points  in

in  as follows:

as follows:  is uniform, and

is uniform, and  is obtained from

is obtained from  by changing each bit of

by changing each bit of  independently with probability 1/5. Now show that

independently with probability 1/5. Now show that  is non-negative for all real functions

is non-negative for all real functions  .

.

There are various ways of describing a proof of this. Here is the one I first thought of, and after that I’ll give a slicker version. I’m going to do a different calculation. Again I’ll pick a random , but this time I’ll create two new vectors

, but this time I’ll create two new vectors  and

and  out of

out of  , which will be independent of each other (given

, which will be independent of each other (given  ) and obtained from

) and obtained from  by flipping coordinates with probability

by flipping coordinates with probability  . And I’ll choose

. And I’ll choose  so that

so that  , which guarantees that the probability that the

, which guarantees that the probability that the  th coordinates of

th coordinates of  and

and  are equal is

are equal is  . Then I’ll work out

. Then I’ll work out  . It’s obviously positive because it is equal to

. It’s obviously positive because it is equal to ![\mathbb{E}_x(\mathbb{E}[f(y)|x])^2](https://s0.wp.com/latex.php?latex=%5Cmathbb%7BE%7D_x%28%5Cmathbb%7BE%7D%5Bf%28y%29%7Cx%5D%29%5E2&bg=ffffff&fg=333333&s=0&c=20201002) . But also, the joint distribution of

. But also, the joint distribution of  and

and  is equal to the joint distribution of

is equal to the joint distribution of  and

and  , since

, since  is uniform and the digits of

is uniform and the digits of  are obtained from

are obtained from  by flipping independently with probability

by flipping independently with probability  . (Proof: the events

. (Proof: the events  are independent and have probability 4/5.)

are independent and have probability 4/5.)

Now for the second way of seeing it. This time I’ll generate from

from  in two stages. First I’ll flip each digit with probability

in two stages. First I’ll flip each digit with probability  . And then I’ll do that again. Now each time you flip with probability

. And then I’ll do that again. Now each time you flip with probability  you are multiplying

you are multiplying  by the symmetric matrix

by the symmetric matrix  defined by

defined by  (which is the same as convolving

(which is the same as convolving  by the function

by the function  So we end up with

So we end up with  , which, since

, which, since  is symmetric, is

is symmetric, is

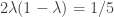

Note that this proof fails if you flip with probability p greater than 1/2, because then you can’t solve the equation . But that’s all to the good, because the result is false when

. But that’s all to the good, because the result is false when  . When

. When  it is “only just true” since flipping with probability 1/2 gives you the uniform distribution for y, so if

it is “only just true” since flipping with probability 1/2 gives you the uniform distribution for y, so if  averages zero then

averages zero then  is zero too.

is zero too.
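Here is a brute-force check of this square-root trick on a small cube, offered as a sketch only. The letter q for the half-step flip probability, the relation 2q(1-q) = p, and all function names are my own labels for the argument above. The code compares E f(x)f(y) under p-flipping with the manifestly non-negative quantity E_x (E[f(y)|x])^2 under q-flipping, and also shows a negative value for some p > 1/2.

```python
from itertools import product
from math import sqrt


def flip_kernel(x, y, q):
    """Probability of reaching y from x when each bit flips independently with probability q."""
    d = sum(a != b for a, b in zip(x, y))
    return q ** d * (1 - q) ** (len(x) - d)


def correlation(f, n, p):
    """E[f(x) f(y)] with x uniform on {0,1}^n and y obtained by p-flipping x."""
    pts = list(product((0, 1), repeat=n))
    return sum(f[x] * f[y] * flip_kernel(x, y, p) for x in pts for y in pts) / len(pts)


def mean_square_of_smoothing(f, n, q):
    """E_x[(E[f(y) | x])^2] with y obtained by q-flipping x."""
    pts = list(product((0, 1), repeat=n))
    total = 0.0
    for x in pts:
        conditional_mean = sum(f[y] * flip_kernel(x, y, q) for y in pts)
        total += conditional_mean ** 2
    return total / len(pts)


def half_step(p):
    """Solve 2q(1-q) = p for q in [0, 1/2]; only possible when p <= 1/2."""
    return (1 - sqrt(1 - 2 * p)) / 2


if __name__ == "__main__":
    n = 3
    f = {x: (-1) ** x[0] * (1 + x[1]) - 0.5 * x[2] for x in product((0, 1), repeat=n)}
    q = half_step(0.2)
    print(correlation(f, n, 0.2), mean_square_of_smoothing(f, n, q))
    # For p > 1/2 the statement fails: take f(x) = (-1)^x on a single bit.
    g = {(0,): 1.0, (1,): -1.0}
    print(correlation(g, 1, 0.9))
```

For the one-bit character the correlation is 1 - 2p, which makes the threshold at p = 1/2 visible directly.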

I’m sure there’s yet another way of looking at it (and in fact these thoughts led to the above proof) which is to think of the random flipping operation as the exponential of an operation where you flip with a different probability. (To define that, I would flip N times, each time with probability […], and take limits.) I would then expect some general nonsense about exponentials to give the positivity. In the end I have taken a square root instead.

February 24, 2009 at 4:06 pm

812.1. Thanks Tim! I believe this proof is in fact Fact 4 from #811 (with its […]).

February 24, 2009 at 4:27 pm

812.2. I was pleased with myself for figuring out the analogous trick in our equal-slices setting… until I realized it was exactly what you said way back in #572, first paragraph. Drat.

February 24, 2009 at 1:50 pm |

813. An optimistic conjecture.

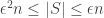

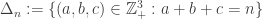

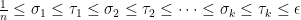

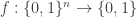

The hyper-optimistic conjecture says that […]. Here I would like to suggest an “optimistic conjecture”: there exists a number […] such that […] for all […].

The hyper-optimistic conjecture implies the optimistic conjecture and the optimistic conjecture implies DHJ.

Let […] be the set of elements x in A such that A has measure at least […] in the slice x belongs to.

We know that if A is a line-free set, the sum of the measures of A in the slices […] is at most 2. In particular A cannot contain more than 2/3 of each of three slices in an equilateral triangle. Thus, the slices where A has a density greater than 2/3 form a triangle-free subset of […]. So, if the optimistic conjecture is false, we know that for the biggest line-free set A, the density of […] in A goes to 0 as $n\to \infty$.

I think the following would imply DHJ: for every […] there exists a C such that for every set A with […] (no element in A is “epsilon-lonesome” in its slice) there exists an equilateral triangle-free set […] with more than […] elements.

February 24, 2009 at 4:11 pm |

814. Re Sperner & Influences & 809.2.

Hi Terry, not to be ridiculously nitpicky, but could I ask one more question?

It seems to me that:

a) […] might end up having range $[0,1]$ rather than $\{0,1\}$;

b) average influence is defined in terms of the *squared* change in […];

c) we don’t have a triangle inequality for […]. (It wouldn’t be a problem if […] had range $\{0,1\}$ since […] is a metric on $\{0,1\}$.)

February 24, 2009 at 4:33 pm

814.1. Maybe one gets around it by playing with the scales & using triangle inequality for […]; I’m thinking about it…

February 24, 2009 at 5:10 pm

814.2. Oops.

That was a dumb question, as it turns out. Assuming […] is bounded, squared-differences are bounded by 4 times absolute-value-differences, and then use the triangle inequality. Got it.
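Spelled out under one possible normalisation, the remark reads as follows. Here $g$ is a hypothetical name for the bounded function, $x_0, \dots, x_T$ a hypothetical chain of strings in which consecutive terms differ in one bit, and the bound $|g| \le 1$ is my assumption.

```latex
% Assumption: g takes values in [-1, 1], so |g(u) - g(v)| <= 2 for all u, v.
\[
  \bigl(g(x_T) - g(x_0)\bigr)^2
  \;\le\; 2\,\bigl|g(x_T) - g(x_0)\bigr|
  \;\le\; 2 \sum_{t=1}^{T} \bigl|g(x_t) - g(x_{t-1})\bigr| .
\]
```

With this normalisation the constant is 2, so the factor 4 quoted above is a safe overestimate; the second step is the ordinary triangle inequality for absolute values, which is exactly what the squared difference lacks.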

February 24, 2009 at 5:56 pm |

815. Re Sperner.

I think I can answer my own question from #814. I’ll say what Terry was saying in #809.2, being a little bit imprecise. Let $T$ be an integer. Define random variables $x_0, \dots, x_T$ as follows: $x_0$ is a uniformly random string; $x_t$ is formed from $x_{t-1}$ by picking a random coordinate and, if that coordinate is $0$ in $x_{t-1}$, changing it to a $1$. Now the distribution on $(x_0, x_T)$ is pretty much like the distribution on $(x, y)$ from before, with […]. Probably, as Terry says, one should ultimately wrap a Poisson choice of $T$ around this entire process.

Anyway, for fixed $T$, by telescoping we have

$$\mathop{\bf E}[f(x_0)g(x_T)] = \mathop{\bf E}[f(x_0)g(x_0)] + \sum_{t=1}^T \mathop{\bf E}[f(x_0)(g(x_t) - g(x_{t-1}))]. \qquad (1)$$

To bound the “error term” here, use Cauchy-Schwarz on each summand. For a given $t$ we have

$$|\mathop{\bf E}[f(x_0)(g(x_t) - g(x_{t-1}))]| \leq \|f\|_2 \sqrt{\mathop{\bf E}[(g(x_t) - g(x_{t-1}))^2]}. \qquad (2)$$

Let $Mg$ be the function defined by $Mg(y) = \mathop{\bf E}_{y'}[(g(y') - g(y))^2]$, where $y'$ is formed from $y$ by taking one step in the Markov chain we used in defining the $x_t$’s. Hence the expression inside the square-root in (2) is $\mathop{\bf E}[Mg(x_{t-1})]$. We would like to say that this expectation is close to $\mathop{\bf E}[Mg(x_0)]$ because the distributions on $x_{t-1}$ and $x_0$ are very similar. We can use the argument in my “wrong-Sperner” notes for this. Using […], I think that’ll give something like

$$|\mathop{\bf E}[Mg(x_{t-1})] - \mathop{\bf E}[Mg(x_{0})]| \leq O(t/\sqrt{n}) \|Mg(x_0)\|_2 \leq O(t/\sqrt{n}) \sqrt{\mathop{\bf E}[Mg(x_0)]},$$

where on the right I’ve now assumed that $g$ is bounded, hence $Mg$ is bounded, hence $(Mg)^2 \leq O(Mg)$ pointwise.

But $\mathop{\bf E}[Mg(x_0)]$ is precisely (well, maybe up to a factor of […] or something) the “energy” or “average influence” of $g$; write it as $\mathcal{E}(g)$. So we get

$$\mathop{\bf E}[(g(x_t) - g(x_{t-1}))^2] = \mathcal{E}(g) \pm O(t/\sqrt{n}) \sqrt{\mathcal{E}(g)}.$$

Let’s assume now that in fact […]. Then the error in the above is essentially negligible. Now plugging this into (2) we get that each of the $T$ error terms in (1) is at most $O(\|f\|_2 \sqrt{\mathcal{E}(g)})$. So overall, we conclude

$$\mathop{\bf E}[f(x_0)g(x_T)] = \mathop{\bf E}[f(x_0)g(x_0)] \pm O(T \cdot \|f\|_2 \cdot \sqrt{\mathcal{E}(g)}). \qquad (3)$$

Just to repeat, the assumptions needed to deduce (3) were that $g$ is bounded and that […].

February 24, 2009 at 8:10 pm

815.1 Sperner

Ryan, thanks for fleshing out the details and sorting out the l^1 vs l^2 issue. I just wanted to point out that we may have located the “DHJ(0,2)” problem that Boris brought up a while back: namely, DHJ(0,2) should be DHJ(2) for “low influence” sets. I think we now have a satisfactory understanding of the DHJ(2) problem from an obstructions-to-uniformity perspective, namely that arbitrary sets can be viewed as the superposition of a low influence set (or more precisely, a function […]) plus a DHJ(2)-uniform error.

DHJ(1,3) may now need to be tweaked to generalise to functions f which are polynomial combinations of 01-low influence, 12-low influence, and 20-low influence functions, where 01-low influence means that f(x) and f(y) are close whenever y is formed from x by flipping a random 0 bit to a 1 or vice versa, etc. (With our current definition of DHJ(1,3), we are considering products of indicator functions which have zero 01-influence, zero 12-influence, and zero 20-influence respectively.)

February 24, 2009 at 6:47 pm |

816. Obstructions.

I hope I’m going to have time to do some serious averaging arguments this evening. They will be private calculations (though made public as soon as they work), but let me give an outline of what I am hoping to make rigorous. The broad aim is to prove that a set with no combinatorial lines has a significant local correlation with a set of complexity 1. This is a statement that has been sort of sketched in various comments already (by Terry and by me and possibly by others too), but I now think that writing it out in a serious way will be a very useful thing to do and should get us substantially closer to a density-increment proof of DHJ(3). Here is a rough description of the steps.

1. A rather general argument to prove that whenever we feel like restricting to a combinatorial subspace, we can always assume that it has density at most […], where […] is an arbitrary function of […]. This kind of argument is standard, and has already been mentioned by Terry: basically if you can find a subspace with increased density then you happily pass to that subspace and you’ve already completed your iteration. If you can’t do that, then the proportion of subspaces (according to any reasonable distribution of your convenience) with substantially smaller density is tiny. The minor (I hope) technical challenge is to get a version of this principle that is sufficiently general that it can be used easily whenever it is needed.

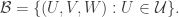

2. Representing combinatorial lines as (U,V,W), we know that for an average W (each element chosen with probability 1/3; I’m going for uniform measure here) the density of (U,V) such that (U,V,W) is in […] is […].

3. Also, by 1, for almost all W we find that the set of points […] where […] (for some […] with […]) has density almost […].

4. Combining 2 and 3, we obtain a W such that the density of (U,V) with (U,V,W) in […] is at least […], say, and the density of […] is almost as large as […] (at least).

5. But the points of the latter kind have to avoid those […] and […] for which the point […], which is a dense complexity-1 obstruction in the “unbalanced” set of […] that have union $[n]\setminus W$ and have […] of size at most […].

6. Randomly restricting, we can get a similar statement, but this time for a “balanced” set, that is, one where […] have comparable sizes.

7. From that it is straightforward to get a (local) density increment on a special set of complexity 1 (as defined here).

Now I think I’ve basically shown that a special set of complexity 1 contains large combinatorial subspaces, though I need to check this. But what we actually need is a bit stronger than that: we need to cover sets of complexity 1 uniformly by large combinatorial subspaces, or perhaps do something else of a similar nature. (This is where the Ajtai-Szemerédi proof could come in very handy.) But if everything from 1 to 7 works out—I’m not sure how realistic a hope that is but even a failure would be instructive—then we’ll be left with a much smaller-looking problem to solve. I’ll report back when I either get something working or see where some unexpected difficulty lies.

February 24, 2009 at 8:27 pm |

817. Sperner.

Just to clarify, the thing I wrote in #800 says that if you don’t mind restricting to combinatorial subspaces (which we usually don’t, unless we’re really trying to get outstanding quantitatives), then the decomposition […] we seek can be achieved trivially: you just take $f_{U^\bot} = \mathbb{E}[f]$.

February 25, 2009 at 2:35 am |

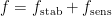

818. Ergodic proof of DHJ(3)

I managed to digest Randall’s lecture notes on the completion of the Furstenberg-Katznelson proof of DHJ(3) (the focus of the 600 thread) to the point where I now have an informal combinatorial translation of the argument at

http://michaelnielsen.org/polymath1/index.php?title=Furstenberg-Katznelson_argument

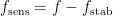

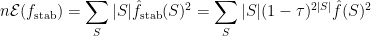

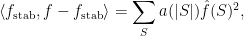

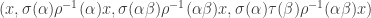

that avoids any reference to infinitary concepts, at the expense of rigour and precision. Interestingly, the argument is morally based on a reduction to something resembling DHJ(1,3), but more complicated to state. We are trying to get a lower bound for

where f is non-negative, bounded, and has positive density, and ranges over all lines with “few” wildcards (and I want to be vague about what “few” means). The first reduction is to eliminate “uniform” or “mixing” components from the second two factors and reduce to

ranges over all lines with “few” wildcards (and I want to be vague about what “few” means). The first reduction is to eliminate “uniform” or “mixing” components from the second two factors and reduce to

where ,

,  are certain “structured” components of f, analogous to

are certain “structured” components of f, analogous to  from the Sperner theory. They have positive correlation with f, and in fact are positive just about everywhere that f is positive.

from the Sperner theory. They have positive correlation with f, and in fact are positive just about everywhere that f is positive.

What other properties do these components have? In a perfect world, they would be “complexity 1” sets, and in particular one would expect the first to be describable as some simple combination of 01-low influence sets and 12-low influence sets (and similarly the second should be some simple combination of 20-low influence sets and 12-low influence sets). Here, ij-low influence means that the function does not change much if an i is flipped to a j or vice versa.
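To make the notion of ij-low influence concrete, here is a minimal Python sketch. The quantitative definition it uses is an assumption for illustration only: it estimates, by random sampling, the average change in a function on [3]^n when a single coordinate equal to i or j is flipped to the other value. The names `ij_influence` and `zeros_parity` are hypothetical.

```python
import random

def ij_influence(f, n, i, j, trials=2000, seed=0):
    """Monte Carlo estimate of the average |f(x) - f(x')|, where x' is x with
    one randomly chosen coordinate flipped between the values i and j."""
    rng = random.Random(seed)
    total = 0.0
    count = 0
    for _ in range(trials):
        x = [rng.randrange(3) for _ in range(n)]
        # Coordinates that currently hold an i or a j are the ones we may flip.
        positions = [k for k, v in enumerate(x) if v in (i, j)]
        if not positions:
            continue
        k = rng.choice(positions)
        y = list(x)
        y[k] = j if x[k] == i else i
        total += abs(f(x) - f(y))
        count += 1
    return total / count if count else 0.0

def zeros_parity(x):
    """Toy function that depends only on the number of 0s in x."""
    return 1.0 if x.count(0) % 2 == 0 else 0.0

if __name__ == "__main__":
    # Swapping 1s and 2s never changes the number of 0s, so this is 0.
    print("12-influence:", ij_influence(zeros_parity, 8, 1, 2))
    # Swapping a 0 and a 1 always changes the parity of the number of 0s.
    print("01-influence:", ij_influence(zeros_parity, 8, 0, 1))
```

In this toy case the function has no 12-influence at all but maximal 01-influence, which is the kind of asymmetry between pairs of digits that the terminology is meant to capture.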

Unfortunately, it seems (at least from the ergodic approach) that this is not easily attainable. Instead, the first component obeys a more complicated (and weaker) property, which I call “01-almost periodicity relative to 12-low influence”, with the second obeying a similar property. Very roughly speaking, this is a “relative” version of 01-low influence: flipping digits from 0 to 1 makes the first component change, but the way in which it changes is controlled entirely by functions that have low 12-influence. (This is related to the notion of “uniform almost periodicity” which comes up in my paper on the quantitative ergodic theory proof of Szemerédi’s theorem.)

It is relatively painless (using Cauchy-Schwarz and energy-increment methods) to pass from (1) to (2). To deal with (2) we need some periodicity properties of the structured components on small “monochromatic” spaces (the existence of which is ultimately guaranteed by Graham-Rothschild) which effectively let us replace […] with […] and […] with […] on a large family of lines (and more importantly, a large 12-low influence family of lines). From this fact, and the previously mentioned fact that the structured components are large on f, we can get DHJ(3).
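To fix ideas, the following is a hedged sketch of how the expressions labelled (1) and (2) could be written. The notation is an assumption made here for illustration, not a quotation: ℓ stands for a combinatorial line with points ℓ(0), ℓ(1), ℓ(2), and f_{01}, f_{20} are hypothetical names for the two structured components of f (the subscripts recording which low-influence families each is expected to be built from, alongside the 12 family).

```latex
% Assumed notation: \ell is a combinatorial line in [3]^n with few wildcards;
% f_{01} and f_{20} are hypothetical names for the structured components of f.
\begin{align*}
  &\mathbb{E}_{\ell}\, f(\ell(0))\, f(\ell(1))\, f(\ell(2)) \tag{1} \\
  &\mathbb{E}_{\ell}\, f(\ell(0))\, f_{01}(\ell(1))\, f_{20}(\ell(2)) \tag{2}
\end{align*}
```

Under this reading, passing from (1) to (2) means replacing the second and third factors by their structured components while leaving the first factor alone.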

The argument as described on the wiki is far from rigorous at present, but I am hopeful that it can be translated into a rigorous finitary proof (though it is not going to be pleasant – I would have to deploy a lot of machinery from my quantitative ergodic theory paper). Perhaps a better approach would be to try to export some of the ideas here to the Fourier-type approaches where there is a better chance of a shorter and more quantitatively effective argument.

February 25, 2009 at 5:06 pm

818.1

I haven’t read this closely enough to have even an initial impression; however, much of it looks (somewhat) familiar.

First, I notice you removed your discussion of stationarity…instead (tell me if I misread), and in multiple settings, you seem to do a Cesaro average, over lines, rather than employing something like Graham-Rothschild to get near-convergence along lines restricted to a subspace. Most striking of these are instances of using the so-called IP van der Corput lemma. Looking at its proof, this does indeed look kosher, but it’s rather surprising to me all the same; assuming I’m understanding this at least somewhat correctly, did you give any thought to whether the ergodic proof itself could be tidily rewritten to accommodate this averaging method?

Modulo the above, the main part of the argument I still don’t (in principle) understand is how you bound h, the number of functions used to approximate the almost periodic component of f, independently of n. (This is the part of the argument I got stuck on in my own thoughts.) I see now that you solved this issue in your quantitative ergodic proof of Szemeredi, which I printed last night as well, though I haven’t read deeply enough yet to see how. Am I to assume that something similar happens here, or is the answer different in the two cases?

February 25, 2009 at 8:50 pm

818.2

Yes, the argument is distilled from your notes, though as you see I messed around with the notation quite a bit.

The stationarity is sort of automatic if you work with random subspaces of a big space ![[3]^n](https://s0.wp.com/latex.php?latex=%5B3%5D%5En&bg=ffffff&fg=333333&s=0&c=20201002), and I am implicitly using it all over the place when rewriting one sort of average by another.

I am indeed hoping that Cesaro averaging may be simpler to implement than IP averaging, and may save me from having to use Graham-Rothschild repeatedly. There are a lot of things hidden in the sketch that may cause this to bubble up. For instance, I am implicitly using the fact that certain shift operators converge (in some Cesaro or IP sense) to an orthogonal projection, and this may require a certain amount of Graham-Rothschild type trickery. (I started writing some separate notes on a finitary Hilbert space IP Khintchine recurrence theorem which will be relevant here.)

I admit I’m a bit sketchy on how to deal with h not blowing up. A key observation here is that of statistical sampling: if one wants to understand an average of bounded quantities over a very large set H, one can get quite a good approximation to this expression by picking a relatively small number of representatives of H at random and looking at the local average instead. (This fact substitutes for the fact used in the Furstenberg approach that Volterra integral operators are compact and hence approximable by finite rank operators; or more precisely, the Furstenberg approach needs the relative version of this over some factor Y.) I haven’t worked out completely how this trick will mesh with the IP-systems involved, but I’m hoping that I can throw enough Ramsey theorems at the problem to make it work out.
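The statistical sampling observation can be illustrated with a small Python sketch. Everything specific in it is an assumption for illustration: the set H is modelled as `range(population_size)`, and `bounded_quantity` is a hypothetical stand-in for the bounded quantities being averaged. The point is only that the number of samples needed for a given accuracy does not grow with the size of H.

```python
import random

def bounded_quantity(h):
    """Hypothetical bounded quantity attached to h, taking values in [0, 1)."""
    return ((h * 7919) % 1000) / 1000.0

def sampled_average(quantity, population_size, num_samples, rng):
    """Average quantity(h) over num_samples uniformly random h in H,
    where H is modelled as range(population_size)."""
    picks = (rng.randrange(population_size) for _ in range(num_samples))
    return sum(quantity(h) for h in picks) / num_samples

if __name__ == "__main__":
    population_size = 10**6
    # Full average over H (feasible here only because H is a toy example).
    full = sum(bounded_quantity(h) for h in range(population_size)) / population_size
    # Local average over 2000 random representatives of H.
    local = sampled_average(bounded_quantity, population_size, 2000, random.Random(0))
    print("full average: ", full)
    print("local average:", local)
```

By Hoeffding's inequality, for quantities in [0, 1] the probability that the local average over m samples differs from the full average by more than t is at most 2·exp(−2mt²), a bound in which the size of H does not appear.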

Perhaps one thing that helps out in the finitary setting that is not immediately available in the ergodic setting is that there are more symmetries available; in particular, the non-commutativity of the IP systems that makes the ergodic setup so hard seems to be less of an issue in the finitary world (the operations of flipping a random 0 to a 1 and flipping a random 0 to a 2 essentially commute since there are so many 0s to choose from). There is a price to pay for this, which is that certain Ramsey theorems may break the symmetry and so one may have to choose to forego either the Ramsey theorem or the symmetry. This could potentially cause a problem in my sketch; as I said, I have not worked out the details (given the progress on the Fourier side of things, I had the vague hope that maybe just the concepts in the sketch, most notably the concept of almost periodicity relative to a low influence factor, could be useful to assist the other main approach to the problem, as I am not particularly looking forward to rewriting my quantitative ergodic theory paper again.)

February 25, 2009 at 4:53 am

819. #816 and #818 look quite exciting; I plan to try to digest them soon. Meanwhile, here is a Moser-esque problem I invented for the express purpose of being solvable. I hope it might give a few tricks we can use (but it might not be of major help due to the PS of #528).

Let’s define a combinatorial bridge in ![{[3]^n}](https://s0.wp.com/latex.php?latex=%7B%5B3%5D%5En%7D&bg=ffffff&fg=000000&s=0&c=20201002) to be a triple of points (x, y, z) formed by taking a string with zero or more wildcards and filling in the wildcards with either (0,-1,0) or (0,+1,0). If there are zero wildcards we call the bridge degenerate. I think I can show, using the ideas from #800, that if f has mean δ and n is sufficiently large as a function of δ, then there is a nondegenerate bridge (x, y, z) with […].

Roughly, we first use a density-increment argument to reduce to the case when f is extremely noise sensitive; i.e., […] is only a teeny bit bigger than […]. Here γ is something very small to be chosen later. Next, we pick a suitable distribution on combinatorial bridges (x, y, z); basically, choose a random one where the wildcard probability is […]. Now the key is that under this distribution, there is “imperfect correlation” between the random variable […] and the random variable […], and similarly, between […] and […]. Here I use the term in the sense of the Mossel paper in #811. Because of this (see Mossel’s Lemma 6.2), ![{\mathop{\bf E}[f(x)f(y)f(z)]}](https://s0.wp.com/latex.php?latex=%7B%5Cmathop%7B%5Cbf+E%7D%5Bf%28x%29f%28y%29f%28z%29%5D%7D&bg=ffffff&fg=000000&s=0&c=20201002) is practically the same as ![{\mathop{\bf E}[T_{1-\gamma}f(x)\cdot T_{1-\gamma}f(y)\cdot T_{1-\gamma}f(z)]}](https://s0.wp.com/latex.php?latex=%7B%5Cmathop%7B%5Cbf+E%7D%5BT_%7B1-%5Cgamma%7Df%28x%29%5Ccdot+T_%7B1-%5Cgamma%7Df%28y%29%5Ccdot+T_%7B1-%5Cgamma%7Df%28z%29%5D%7D&bg=ffffff&fg=000000&s=0&c=20201002), when […]. But this is extremely close to δ³ because ![{\mathop{\bf E}[T_{1-\gamma}f(x)] = \mathop{\bf E}[T_{1-\gamma}f(y)] = \mathop{\bf E}[T_{1-\gamma}f(z)] = \delta}](https://s0.wp.com/latex.php?latex=%7B%5Cmathop%7B%5Cbf+E%7D%5BT_%7B1-%5Cgamma%7Df%28x%29%5D+%3D+%5Cmathop%7B%5Cbf+E%7D%5BT_%7B1-%5Cgamma%7Df%28y%29%5D+%3D+%5Cmathop%7B%5Cbf+E%7D%5BT_%7B1-%5Cgamma%7Df%28z%29%5D+%3D+%5Cdelta%7D&bg=ffffff&fg=000000&s=0&c=20201002) and because the error can be controlled with Hölder in terms of […], which is teeny by the density-increment reduction.
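The step above uses the fact that applying the noise operator does not change the mean of f. The following Python sketch checks this exactly on a tiny cube. It assumes the usual definition of the noise operator on a product space (each coordinate is kept with probability ρ and otherwise resampled uniformly from the three symbols), with ρ playing the role of 1 − γ; the alphabet {0, 1, 2}, the function names and the toy function are hypothetical choices for illustration.

```python
from itertools import product

def noise_operator(f_table, n, rho):
    """Return the table of T_rho f on {0,1,2}^n, where under T_rho each
    coordinate is kept with probability rho and otherwise resampled
    uniformly from {0,1,2}."""
    points = list(product(range(3), repeat=n))
    smoothed = {}
    for x in points:
        total = 0.0
        for y in points:
            # Probability of moving from x to y, coordinate by coordinate.
            weight = 1.0
            for xi, yi in zip(x, y):
                weight *= (rho if xi == yi else 0.0) + (1.0 - rho) / 3.0
            total += weight * f_table[y]
        smoothed[x] = total
    return smoothed

def mean(table):
    """Average of a function given as a dict over the whole cube."""
    return sum(table.values()) / len(table)

if __name__ == "__main__":
    n, gamma = 3, 0.1
    # Toy indicator function: 1 on strings that contain no 0.
    f = {x: (0.0 if 0 in x else 1.0) for x in product(range(3), repeat=n)}
    smoothed = noise_operator(f, n, 1.0 - gamma)
    print("mean of f:      ", mean(f))
    print("mean of T f:    ", mean(smoothed))
```

The two means agree because the transition weights are symmetric in x and y and sum to 1, so averaging over x returns the plain average of f.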

More details here: http://www.cs.cmu.edu/~odonnell/bridges.pdf. I can try to port this to the wiki soon.

February 25, 2009 at 8:38 pm

Ryan, I’m not sure what you mean by “fill the wildcards by either (0,-1,0) or (0,+1,0)”. Wouldn’t this always make x and z equal in a bridge? Perhaps an example would clarify what you mean here.
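The objection can be checked mechanically. Here is a small Python sketch, taking the fill rule exactly as quoted and assuming (this is an interpretation, not something stated in the thread) that coordinates take values in {-1, 0, +1} and that each wildcard is filled independently. The names `FILLS` and `make_bridge` are hypothetical.

```python
import random

# The two fills as quoted: each gives the wildcard's value in (x, y, z).
FILLS = [(0, -1, 0), (0, +1, 0)]

def make_bridge(template, rng):
    """Build (x, y, z) from a template whose entries are -1, 0, +1 or "*".
    Fixed entries are copied to all three points; each wildcard is filled
    with one of the two quoted triples, chosen at random."""
    x, y, z = [], [], []
    for symbol in template:
        if symbol == "*":
            a, b, c = rng.choice(FILLS)
        else:
            a = b = c = symbol
        x.append(a)
        y.append(b)
        z.append(c)
    return tuple(x), tuple(y), tuple(z)

if __name__ == "__main__":
    rng = random.Random(1)
    x, y, z = make_bridge((1, "*", -1, "*", 0), rng)
    print("x =", x)
    print("y =", y)
    print("z =", z)
    print("x equals z:", x == z)
```

Since both fills put the same symbol in the first and third positions, x and z coincide for every template, which is exactly the degeneracy being pointed out.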

February 25, 2009 at 10:09 pm

819.2: Er, whoops. You’re right. Even easier than I thought 🙂 To make this problem more interesting, I think it will work if the triples allowed on wildcards are, say: (-,0,-), (-,0,0), (+,0,0), (+,0,+).

But: a) this is not as nice-looking, and b) I think it’ll actually take slightly more work.

So, um, never mind for now.

February 25, 2009 at 6:44 pm

820. Density increment.

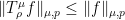

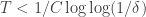

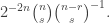

I’ve now finished a wiki write-up that is supposed to establish (and does unless I’ve made a mistake, which is possible) that if you have a line-free set of uniform density […] then you can pass to a combinatorial subspace of dimension […], as long as […], and find a special subset of complexity 1 in that subspace of density at least […], such that the equal-slices density of the line-free set
, such that the equal-slices density of  inside that special subset is at least