I will give only a rather brief summary here, together with links to some comments that expand on what I say.

If we take our lead from known proofs of Roth’s theorem and the corners theorem, then we can discern several possible approaches (each one attempting to imitate one of these known proofs).

1. Szemerédi’s original proof.

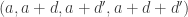

Szemerédi proved a one-dimensional theorem. I do not know whether his proof has been modified to deal with the multidimensional case (this was not done by Szemerédi, but might perhaps be known to Terry, who recently digested and rewrote Szemerédi’s proof). So it is not clear whether one can base an entire argument for DHJ on this argument, but it could well contain ideas that would be useful for DHJ. For a sketch of Szemerédi’s proof, see this post of Terry’s, and in particular his discussion of question V. He also has links to comments that expand on what he says.

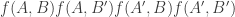

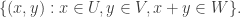

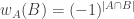

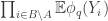

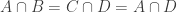

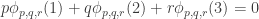

Ajtai and Szemerédi found a clever proof of the corners theorem that used Szemerédi’s theorem as a lemma. This gives us a direction to explore that we have hardly touched on. Very roughly, to prove the corners theorem you first use an averaging argument to find a dense diagonal (that is, a subset of the form $\{(x,y):x+y=t\}$ that contains many points of your set $A$). For any two such points $(x,y)$ and $(x',y')$ you know that the point $(x,y')$ does not lie in $A$ (if $A$ is corner free), which gives you some kind of non-quasirandomness. (The Cartesian semi-product arguments discussed in the 400s thread are very similar to this observation.) Indeed, it allows you to find a large Cartesian product $X\times Y$ in which $A$ is a bit too dense. In order to exploit this, Ajtai and Szemerédi used Szemerédi’s theorem to find long arithmetic progressions $P\subset X$ and $Q\subset Y$ of the same common difference such that $A$ has a density increment in $P\times Q$. (I may have misremembered, but I think this must have been roughly what they did.) So we could think about what the analogue would be in the DHJ setting. Presumably it would be some kind of multidimensional Sperner statement of sufficient depth to imply Szemerédi’s theorem. A naive suggestion would be that in a dense subset of $[2]^n$ you can find a large-dimensional combinatorial subspace in which all the variable sets have the same size. If you apply this to a union of layers, then you find an arithmetic progression of layer-cardinalities. But this feels rather artificial, so here’s a question we could think about.

Question 1. Can anyone think what the right common generalization of Szemerédi’s theorem and Sperner’s theorem ought to be? (Sperner’s theorem itself would correspond to the case of progressions of length 2.)

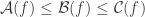

Density-increment strategies.

The idea here is to prove the result by means of the following two steps.

1. If $A$ does not contain a combinatorial line, then it correlates with a set $B$ with some kind of atypical structure that we can describe.

2. Every set with that kind of structure can be partitioned into (or almost evenly covered by) a collection of combinatorial subspaces with dimension tending to infinity as $n$ tends to infinity.

One then finishes the argument as follows. If $A$ contains no combinatorial line, then find $B$ such that $A$ is a bit denser in $B$ than it is in $[3]^n$. Cover $B$ with subspaces. By averaging we find that the density of $A$ is a bit too large in one of these subspaces. But now we are back where we started with a denser set $A$, so we can repeat. The iteration must eventually terminate (since we cannot have a density greater than 1), so if the initial $n$ was large enough then we must have had a combinatorial line.
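The termination step can be made concrete with a minimal sketch. It assumes, purely for illustration, that each round of the iteration raises the density from $\delta$ to at least $\delta+c\delta^2$; the form of the increment, the constant `c`, and both function names are hypothetical and are not specified anywhere in the discussion.

```python
import math

def rounds_until_contradiction(delta0, c):
    """Count density-increment rounds before the density would exceed 1.

    Hypothetical model: each round replaces delta by delta + c * delta**2.
    """
    delta, rounds = delta0, 0
    while delta <= 1.0:
        delta += c * delta ** 2
        rounds += 1
    return rounds

def crude_bound(delta0, c):
    """Every round gains at least c * delta0**2, so this many rounds suffice."""
    return math.ceil((1.0 - delta0) / (c * delta0 ** 2)) + 1
```

Under this assumed increment the number of rounds depends only on the starting density and on `c`, which is why a sufficiently large initial dimension leaves room for the whole iteration.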

One can also imagine splitting 1 up further as follows.

1a. If $A$ contains no combinatorial line, then $A$ contains too many of some other simple configuration.

1b. Any set that contains too many of those configurations must correlate with a structured set.

This is certainly how the argument goes in some of the proofs of related results.

Triangle removal.

This was the initial proposal, and we have not been concentrating on it recently, so I will simply refer the reader to the original post, and add the remark that if we manage to obtain a complete and global description of obstructions to uniformity, then regularity and triangle removal could be an alternative way of using this information to prove the theorem. And in the case of corners, this is a somewhat simpler thing to do.

Ergodic-inspired methods.

For a proposal to base a proof on at least some of the ideas that come out of the proof of Furstenberg and Katznelson, see Terry’s comment 439, as well as the first few comments after it. Another helpful comment of Terry’s is his comment 460, which again is responded to by other comments. Terry has also begun an online reading seminar on the Furstenberg-Katznelson proof, using not just the original paper of Furstenberg and Katznelson but also a more recent paper of Randall McCutcheon that explains how to finish off the Furstenberg-Katznelson argument.

Suggestion. I have tried to do a bit of further summary in my first couple of comments. It would be good if others did the same, with the aim that anyone who reads this should be able to get a reasonable idea of what is going on without having to read too much of the earlier discussion.

Remark. There is now a wiki associated with this whole enterprise. It is in the very early stages of development but has some useful things on it already.

February 13, 2009 at 11:30 am |

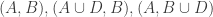

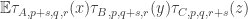

500. Inverse theorem.

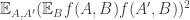

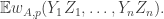

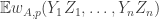

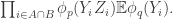

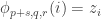

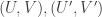

This is continuing where I left off in 490. Let me briefly say what the aim is. I am using the disjoint-pairs formulation of DHJ: given a dense set $\mathcal{A}$ of pairs $(U,V)$ of disjoint sets, one can find a triple of the form $(U,V)$, $(U\cup D,V)$, $(U,V\cup D)$ in $\mathcal{A}$ with all of $U,V$ and $D$ disjoint. Here “dense” is to be interpreted in the equal-slices measure: that is, if you randomly choose two non-negative integers $a,b$ with $a+b\leq n$, and then randomly choose disjoint sets $U$ and $V$ with cardinalities $a$ and $b$, then the probability that $(U,V)\in\mathcal{A}$ is at least $\delta$.
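The equal-slices sampling procedure for disjoint pairs can be sketched as follows. This is a minimal illustration under the reading that the two cardinalities are chosen uniformly among all non-negative pairs summing to at most $n$; the function names and the Monte Carlo density estimate are illustrative assumptions, not part of the original comment.

```python
import random

def sample_equal_slices_pair(n, rng):
    """Sample disjoint subsets (U, V) of {1, ..., n}: sizes first, then sets."""
    # All (a, b) with a, b >= 0 and a + b <= n, each equally likely.
    sizes = [(a, b) for a in range(n + 1) for b in range(n + 1 - a)]
    a, b = rng.choice(sizes)
    # Shuffle the ground set: the first a points form U and the last b
    # points form V, so the two sets cannot overlap.
    points = list(range(1, n + 1))
    rng.shuffle(points)
    U = frozenset(points[:a])
    V = frozenset(points[n - b:])
    return U, V

def equal_slices_density(family, n, trials, rng):
    """Monte Carlo estimate of the equal-slices density of `family`,
    a set of (U, V) tuples of frozensets."""
    hits = sum(sample_equal_slices_pair(n, rng) in family for _ in range(trials))
    return hits / trials
```

Taking an initial segment and a final segment of a shuffled ground set is the same device as the random-permutation picture used later in the comment.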

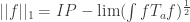

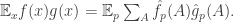

Now I define a norm on functions $f:[2]^n\times[2]^n\rightarrow\mathbb{R}$ as follows. (Incidentally, this is the genuine Cartesian product here, but pairs of sets will not make too big a contribution if they intersect.) First choose a random permutation $\pi$ of $[n]$. Then take the average of $f(U,V)f(U,V')f(U',V)f(U',V')$ over all pairs $U,U'$ of initial segments of $\pi[n]$ and all pairs $V,V'$ of final segments of $\pi[n]$. Finally, take the average over all $\pi$.

A few things to note about this definition. First, if you choose the initial and final segments randomly, then the probability that […] is disjoint from […] is at least […] (and in fact slightly higher than this). Second, the marginal distributions of […] and […] are just the slices-equal measure. Third, for any fixed $\pi$ the expectation we take can also be thought of as $\mathbb{E}_{U,U'}\bigl(\mathbb{E}_Vf(U,V)f(U',V)\bigr)^2$, so we get positivity. If we take the fourth root of this quantity and average over all $\pi$ then we get a norm, by standard arguments. (I’m less sure what happens if we first average and then take the fourth root. I’m also not sure whether that’s an important question or not.)
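For small $n$ the quantity just described can be computed by brute force. The sketch below assumes that the averaged expression is the usual four-fold "box" product of $f$ over two initial segments and two final segments of the permuted ground set, which is consistent with the positivity remark and the fourth root; the function names are illustrative.

```python
import itertools

def segment_box_average(f, perm):
    """Average of f(U,V) f(U,V') f(U',V) f(U',V') for one ordering.

    U, U' range over initial segments of `perm`; V, V' over final segments.
    """
    n = len(perm)
    initial = [frozenset(perm[:a]) for a in range(n + 1)]
    final = [frozenset(perm[n - b:]) for b in range(n + 1)]
    total = 0.0
    for U, U2 in itertools.product(initial, repeat=2):
        # Summing over V and squaring accounts for the pair (V, V'),
        # which is also why the result can never be negative.
        inner = sum(f(U, V) * f(U2, V) for V in final)
        total += inner ** 2
    return total / (n + 1) ** 4

def segment_norm(f, n):
    """Average over all orderings of the fourth root of the box average."""
    perms = list(itertools.permutations(range(1, n + 1)))
    return sum(segment_box_average(f, p) ** 0.25 for p in perms) / len(perms)
```

The fourth root is taken before averaging over orderings, matching the order of operations in the text; enumerating all orderings is only feasible for very small $n$.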

Given a set $\mathcal{A}$ of density $\delta$ (as a subset of the set of all disjoint pairs, with slices-equal measure), define its balanced function $f:[2]^n\times[2]^n\rightarrow\mathbb{R}$ as follows. If $U$ and $V$ are disjoint, then $f(U,V)=1-\delta$ if $(U,V)\in\mathcal{A}$ and $-\delta$ otherwise. If $U$ and $V$ intersect, then $f(U,V)=0$. Then $\mathbb{E}f=0$ if we think about it suitably. And the way to think about it is this. To calculate $\mathbb{E}f$ we first take a random permutation of $[n]$. Then we choose a random initial segment $U$ and a random final segment $V$. Then we evaluate $f(U,V)$. This is how I am defining the expectation of $f$.

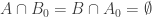

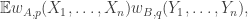

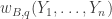

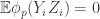

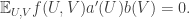

Though I haven’t written out a formal proof (I am not doing that here, though I think a general rule is going to have to be that if a sketch starts to become rather detailed, and if nobody can see any reason for it not to be essentially correct, then the hard work of turning it into a formal argument would be done away from the computer), I am fairly sure that if a dense set $\mathcal{A}$ contains no combinatorial lines and $f$ is the balanced function of $\mathcal{A}$ then $\|f\|$ is bounded away from 0.

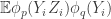

That was just a mini-summary of where this uniformity-norms idea may possibly have got to. Because it feels OK I want to think about the part that feels potentially a lot less OK, which is obtaining some kind of usable structure where $f$ has a large average, on the assumption that $\|f\|$ is large.

February 13, 2009 at 11:48 am |

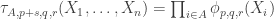

501. Inverse theorem.

What I would very much like to be able to do is rescue a version of the conjecture put forward in comment 411. The conjecture as stated seems to be false, but it may still be true if we use the slices-equal measure. In terms of functions, what I’d like to be able to prove is that if $\|f\|$ is at least […], then there exist set systems $\mathcal{U}$ and $\mathcal{V}$ such that the density of disjoint pairs $(U,V)$ with $U\in\mathcal{U}$ and $V\in\mathcal{V}$ is at least […] and the average of $f(U,V)$ over all such pairs is at least […]. (The first condition could be relaxed to positive density in some subspace if that helped.)

I’m fairly sure that easy arguments give us the following much weaker statement. If you choose a random pair […] according to equal-slices measure, then you can find set systems […] and […] with the following properties.

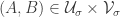

(i) Every set in […] is disjoint from […] and every set in […] is disjoint from […].

(ii) The set of disjoint pairs […] such that […] and […] is on average dense in the set of disjoint pairs […] such that […].

(iii) For each […] the expectation of […] over all disjoint pairs […] is at least […].

If this is correct, then the question at hand is whether one can piece together the pairs […] to form two set systems $\mathcal{U}$ and $\mathcal{V}$ such that the density of disjoint pairs $(U,V)$ with $U\in\mathcal{U}$ and $V\in\mathcal{V}$ is at least […] and the average of $f(U,V)$ over all such pairs is at least […].

February 13, 2009 at 12:36 pm |

502. Inverse theorem.

In this comment I want to explain slightly better what I mean by “piecing together” local lack of uniformity to obtain global obstructions. To do so I’ll look at a few examples.

Example 1. Terry has already mentioned this one. Let $f$ be a function defined on $\mathbb{Z}_N$. Suppose we know that for every interval $I$ of length $m$ we can find a trigonometric function $\tau$ such that $|\mathbb{E}_{x\in I}f(x)\overline{\tau(x)}|\geq c$. Can we find a trigonometric function $\tau$ defined on all of $\mathbb{Z}_N$ such that $|\mathbb{E}_xf(x)\overline{\tau(x)}|\geq c'$? The answer is very definitely no. Basically, you can just partition $\mathbb{Z}_N$ into intervals of length a bit bigger than $m$ and define $f$ to be a randomly chosen trigonometric function on each of these intervals. This will satisfy the hypotheses but be globally quasirandom.
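A small numerical experiment illustrates the construction in Example 1. It is a sketch under simplifying assumptions: the blocks have length exactly `m` (rather than a bit more), "trigonometric function" is read as a character $x\mapsto e^{2\pi irx/N}$, and the parameters `N = 256`, `m = 16` and all function names are chosen here for illustration only.

```python
import cmath
import random

def phase(r, x, N):
    """The character x -> exp(2 pi i r x / N)."""
    return cmath.exp(2j * cmath.pi * r * x / N)

def piecewise_trig(N, m, rng):
    """One random frequency per block of length m (m must divide N)."""
    freqs = [rng.randrange(N) for _ in range(N // m)]
    f = [phase(freqs[x // m], x, N) for x in range(N)]
    return f, freqs

def correlation(f, r, xs, N):
    """Absolute value of the average over xs of f(x) times the conjugate character."""
    return abs(sum(f[x] * phase(-r, x, N) for x in xs)) / len(xs)

N, m = 256, 16
f, freqs = piecewise_trig(N, m, random.Random(0))
# On each block, f correlates perfectly with the character it was built from.
local = min(correlation(f, freqs[j], range(j * m, (j + 1) * m), N)
            for j in range(N // m))
# Globally, the best correlation with any single character is much smaller.
global_max = max(correlation(f, r, range(N), N) for r in range(N))
```

Comparing `local` with `global_max` shows the gap between local and global trigonometric bias that the example describes.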

Example 2. Now let’s modify Example 1. This time we assume that $f$ has trigonometric bias not just on intervals but on arithmetic progressions of length $m$ (in the mod-$N$ sense of an arithmetic progression). I don’t know for sure what the answer is here, but I think we do now get a global correlation. Suppose for instance that $m$ is around $\sqrt{N}$. Then if we choose random trigonometric functions on each interval of length $m$, they will give rise to random functions on arithmetic progressions of common difference $m$. So the frequencies are forced to relate to each other in a way that they weren’t before.

Example 3. Suppose that $G$ is a graph of density $\delta$ with vertex set $V$ of size $n$. Suppose that $m$ is some integer that’s much smaller than $n$ and suppose that for a proportion of at least […] of the choices $W$ of $m$ vertices you can find a subset $W'\subset W$ of size at least […] such that the density of the subgraph induced by $W'$ (inside […]) is at least […]. Can we find a subset $V'$ of $V$ of size at least […] such that the density of the subgraph induced by $V'$ is at least […]?

The answer is yes, and it is an easy consequence of standard facts about quasirandomness. The hypothesis plus an averaging argument implies that $G$ contains too many 4-cycles, which means that $G$ is not quasirandom, which implies that there is a global density change. (Sorry, in this case I misstated it: you don’t actually get an increase, as the complete bipartite graph on two sets of size $n/2$ illustrates, but you can get the density to differ substantially from $\delta$. If you want a density increase, then you need a similar statement about bipartite graphs.)

Example 4. Suppose that $G$ is a random graph on $n$ vertices with edge density $p$. Let $H$ be a subgraph of $G$ of relative density $\delta$. Then one would expect $H$ to contain about $\delta^3p^3n^3$ labelled triangles. Suppose that it contains more triangles than this. Can we find two large subsets $U$ and $V$ of vertices such that the number of edges joining them is substantially more than $\delta p|U||V|$?

Our hypothesis tells us that if we pick a random vertex in the graph, then its neighbourhood will on average have greater edge density than $\delta p$. So we have a large collection of sets where $H$ is too dense. However, these sets are all rather small. So can we put them together? The answer is yes but the proof is non-trivial and depends crucially on the fact that the neighbourhoods of the vertices are forced (by the randomness of $G$) to spread themselves around.

Question. Going back to our Hales-Jewett situation, as described at the end of the previous comment, is it more like Example 1 (which would be bad news for this approach, but illuminating nevertheless), or is it more like the other examples where the non-uniformities are forced to relate to one another and combine to form a global obstruction?

February 13, 2009 at 5:23 pm |

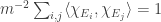

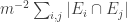

503. Combining obstructions.

I want to think as abstractly as possible about when obstructions in small subsets are forced to combine to form a single global obstruction. For simplicity of discussion I’ll use a uniform measure. So let $X$ be a set, let $E_1,\dots,E_N$ be subsets of $X$, and let us assume that every $x\in X$ is contained in exactly $m$ of the $E_i$. Let us assume also that the $E_i$ all have the same size (an assumption I’d eventually hope to relax) and that a typical intersection $E_i\cap E_j$ is not too small, though I won’t be precise about this for now.

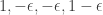

For each $i$ let $g_i:E_i\rightarrow[-1,1]$ be some function. Then one simple remark one can make (which doesn’t require anything like all the assumptions I’ve just made) is that if we can find $f$ that correlates with many of the $g_i$, then there must be lots of correlation between the $g_i$ themselves. To see this, recall first that $m^{-1}\sum_i1_{E_i}$ is the constant function 1. Therefore, $m^{-1}\sum_ig_i$ is a function that takes values in $[-1,1]$. Furthermore, […]. Since […], and we can rewrite it as […], we find that on average $\langle g_i,g_j\rangle$ must be a substantial fraction of its trivial maximum […]. If $N$ is large, so that the diagonal terms are negligible, then this says that there are lots of correlations between the $g_i$.
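One standard way to see why correlation with a single function forces pairwise correlation is the Cauchy-Schwarz inequality, $\bigl(\sum_i\langle f,g_i\rangle\bigr)^2\leq\langle f,f\rangle\sum_{i,j}\langle g_i,g_j\rangle$. The sketch below checks this numerically on a toy system in which every point lies in exactly four sets; whether this is exactly the route taken in the comment is an assumption, and the sizes, supports and function names are chosen purely for illustration.

```python
import random

def inner(u, v):
    """Unnormalised inner product of two real vectors."""
    return sum(a * b for a, b in zip(u, v))

def check_pairwise_correlation(f, gs):
    """Return (lhs, rhs) of (sum_i <f,g_i>)^2 <= <f,f> * sum_{i,j} <g_i,g_j>."""
    lhs = sum(inner(f, g) for g in gs) ** 2
    rhs = inner(f, f) * sum(inner(g, h) for g in gs for h in gs)
    return lhs, rhs

rng = random.Random(1)
size, width = 12, 4
# Cyclic intervals of length 4: every point lies in exactly 4 of them.
supports = [{(i + t) % size for t in range(width)} for i in range(size)]
# Each g_i takes values in [-1, 1] on its support and is zero elsewhere.
gs = [[rng.uniform(-1, 1) if x in E else 0.0 for x in range(size)]
      for E in supports]
f = [rng.choice([-1.0, 1.0]) for _ in range(size)]
lhs, rhs = check_pairwise_correlation(f, gs)
```

If the left-hand side is large because $f$ correlates with many of the local functions, the double sum on the right must be large too, which is the pairwise correlation the remark asks for.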

In my next comment I will discuss a strategy for getting from this to a globally defined function, built out of the $g_i$, that correlates with $f$ and is likely to have good properties under certain circumstances.

February 13, 2009 at 7:13 pm |

504. Combining obstructions.

Tim – Before you define a general local-global uniformity, please let me ask a question. For extremal set problems it was very useful – and we used it before – to take a random permutation of the base set [n] and consider only sets which are “nice” in this ordering. For example, let us consider sets whose elements are consecutive. If the original set system was dense, then this window, obtained from a random permutation, contains a dense set of intervals. Here we can define and check uniformity easily. Disjoint sets are disjoint, and one can also analyze intersections. I didn’t do any formal calculations; however, this window might give us some of the information we want and it is easy to work with. (We won’t find combinatorial lines there, so I don’t see a direct proof of DHJ, but that’s not a surprise …)

February 13, 2009 at 9:06 pm |

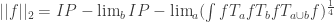

505. Uniformity norms:

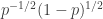

The ergodic proof of DHJ reduces it to an IP recurrence theorem. Now, for an IP system setup, we can easily define analogs of the (ergodic) uniformity norms. So, let […] be a measure preserving IP system; […] ranges over finite subsets of the naturals. Put […]. Now let […] be the projection onto the factor that is asymptotically invariant under […]; we can write […] if we like. Now put […]. (This should look awfully familiar.) Presumably, if […] is small, where $f$ is the balanced version of the characteristic function of a set, then the set should have, asymptotically, the right number of arithmetic progressions of length 3 whose difference comes from some relevant IP set (or something like that). On the other hand, if […] is big, $f$ must correlate with….well, let’s just say if […] is big then $f$ correlates with a rigid function, that much is easy. At any rate I think the above may be on the right track. I may try to translate from ergodic theory to a more recognizable form, but right now a baby is waking up behind me….

However, I will say this much…there is no conventional averaging in any proof of any ergodic IP recurrence theorem (IP limits serve that purpose in this context). Also, there are extremely strong analogies between the uniformity norms on $\mathbb{Z}_N$ and the uniformity norms in ergodic theory (actually…to me it’s not really an analogy; these are exactly the same norms). Although no norms are defined in ergodic IP theory, there could be…what does a norm stand in for? To an ergodic theorist, the dth uniformity norm is a stand-in for d iterations of van der Corput. In the IP case, we do in fact use d iterations of a version of van der Corput. If the correlation holds to form, the above norms may well be the ones to be looking at.

February 13, 2009 at 9:08 pm |

505. Combining obstructions.

In my previous post I said that we won’t see combinatorial lines in a random permutation. Now I’m not so sure about it. So, here is a simple statement which would imply DHJ (so it’s most likely false, but I can’t see a simple counterexample): if you take a dense set of pairs of disjoint intervals in [n] then there are four numbers a, b, c, d in increasing order such that [a,b],[c,d] and [a,c],[c,d] and [a,b],[b,d] are in your set.

The rough numbers – counting the probability that there is no such configuration in a random permutation – didn’t give me any hint. I have to work with a more precise form of Stirling’s formula. I need time and I will work away from the computer. (I have to leave shortly anyways)

February 13, 2009 at 9:18 pm |

506.

The “simple statement” above is clearly false: just take pairs where one interval is between 1 and n/2 and the second is between n/2 and n. But I think this is not a general problem for our application where we might be able to avoid such “bipartite” cases.
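The counterexample can be checked by brute force for small n. The sketch below encodes an interval by its pair of endpoints and a member of the family as a pair of such intervals; this encoding, the choice of even n, and the function names are assumptions made for illustration.

```python
from itertools import combinations

def bipartite_family(n):
    """Pairs ([a, b], [c, d]) with a < b <= n/2 <= c < d (n even)."""
    half = n // 2
    return {((a, b), (c, d))
            for a, b in combinations(range(1, half + 1), 2)
            for c, d in combinations(range(half, n + 1), 2)}

def count_configurations(family, n):
    """Count a < b < c < d with all three required pairs in `family`."""
    return sum(1 for a, b, c, d in combinations(range(1, n + 1), 4)
               if ((a, b), (c, d)) in family
               and ((a, c), (c, d)) in family
               and ((a, b), (b, d)) in family)
```

The count is zero because the pair [a,c],[c,d] forces c to equal n/2 and the pair [a,b],[b,d] forces b to equal n/2, which is incompatible with b < c.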

February 14, 2009 at 1:07 am |

507.

Tim,

Regarding your Example 2 in 502 above, here is a cheat way to get a global obstruction. If you correlate with a linear phase on many progressions of length sqrt{N} then you also have large U^2 norm on each such progression. Summing over all progressions, this means that the sum of f over all parallelograms (x,x+h,x+k,x+h+k) with |k/h| \leq \sqrt{N}, or roughly that, is large.

But even if you only control such parallelograms with k = 2h this is the same as controlling the average of f over 4-term progressions, and one knows that is controlled by the *global* U^3 norm and hence by a global quadratic object (nilsequence). That will allow you to “tie together” the frequencies of the trig polys that you correlated with at the beginning, though I haven’t bothered to think exactly how. One just needs to analyse which 2-step nilsequences correlate with a linear phase on many progressions of length sqrt{N}, and it might just be ones which are essentially 1-step, and that would imply that f correlates globally with a linear phase.

By the way much the same argument shows that if you have large U^k norm on many progressions of length sqrt{N} (say) then you have large global U^{2^k -1} norm.

Ben

February 14, 2009 at 1:13 am |

508. Ergodic-mimicking general proof strategy:

Okay, I will give my general idea for a proof. I’m pretty sure it’s sound, though it may not be feasible in practice. On the other hand I may be badly mistaken about something. I will throw it out there for someone else to attempt, or say why it’s nonsense, or perhaps ignore. I won’t formulate it as a strategy to prove DHJ, but of what I’ve called IP Roth. If successful, one could possibly adapt it to the DHJ, k=3 situation, but there would be complications that would obscure what was going on.

We work in $X=[n]^{[n]}\times [n]^{[n]}$. For a real-valued function $f$ defined on $X$, define the first coordinate 2-norm by $||f||^1_2=(\iplim_b\iplim_a {1\over |X|}\sum_{(x,y)\in X} f((x,y))f((x+a,y))f((x+b,y))f((x+a+b,y)))^{1\over 4}$. The second coordinate 2-norm is defined similarly (on the second coordinate, obviously). Now, let me explain what this means. $a$ and $b$ are subsets of $[n]$, and we identify $a$ with the characteristic function of $a$, which is a member of $[n]^{[n]}$. (That is how we can add $a$ to $x$ inside, etc.) Since $[n]$ is a finite set, you can’t really take limits, but if $n$ is large, we can do something almost as good, namely ensure that whenever $\max a<\min b$, the expression we are taking the limit of is close to something (Milliken-Taylor ensures this, I think). Of course, you have to restrict $a$ and $b$ to a subspace. What is a subspace? You take a sequence $a_i$ of subsets of $[n]$ with $\max a_i<\min a_{i+1}$ and then restrict to unions of the $a_i$.

Now here is the idea. Take a subset $E$ of $X$ and let $f$ be its balanced indicator function. You first want to show that if *either* of the coordinate 2-norms of $f$ is small, then $E$ contains about the right number of corners $\{ (x,y), (x+a,y), (x,y+a)\}$. Restricted to the subspace of course. What does that mean? Well, you treat each of the $a_i$ as a single coordinate, moving them together. The other coordinates I’m not sure about. Maybe you can just fix them in the right way and have the norm that was small summing over all of $X$ still come out small. At any rate, the real trick is to show that if *both* coordinate 2-norms are big, you get a density increment on a subspace. Here a subspace surely means that you find some $a_i$s, treat them as single coordinates, and fix the values on the other coordinates.

February 14, 2009 at 1:19 am |

509. Quasirandomness.

Jozsef, re 504, what you describe is what I thought I was doing in the second paragraph of 500. The nice thing about taking a random permutation and then only looking at intervals is that you end up with dense graphs. The annoying thing is that these dense graphs don’t instantly give you combinatorial lines. However, they do give correlation with a Cartesian product, so my hope was to put together all these small dense Cartesian products to get a big dense “Kneser product”. At the moment I feel quite hopeful about this. I’ve got to go to bed very soon, but roughly my planned programme for doing it is this.

Suppose you have proved that there are a lot of pairwise correlations between the  . Then I want to think of these  as unit vectors in a Hilbert space and find some selection of them such that most pairs of them correlate. Here’s a general dependent-random-selection procedure for vectors in a finite-dimensional Hilbert space. Suppose I have unit vectors $v_1,\dots,v_n$. Now I choose random unit vectors $w_1,\dots,w_k$ and pick all those $v_i$ such that $\langle v_i,w_j\rangle > 0$ for every $j$. The probability of choosing any given $v_i$ is obviously $2^{-k}$. Now the probability that $\langle v_i,w\rangle$ and $\langle v_j,w\rangle$ are both positive is equal to the proportion of the sphere in the intersection of the positive hemispheres defined by $v_i$ and $v_j$, which has measure $(\pi-\theta)/2\pi$, where $\theta$ is the angle between $v_i$ and $v_j$. So the probability of picking both $v_i$ and $v_j$ is $((\pi-\theta)/2\pi)^k$. So if  and we have lots of correlations of at least  , then we should end up with almost all pairs that we choose having good correlations. It is some choice like this that I am currently hoping will give rise to a global obstruction to uniformity.
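To make the selection probabilities concrete, here is a minimal sketch in Python. It assumes the random unit vectors are uniform on the sphere and checks the hemisphere-intersection formula by simulation in the plane; the function names and the choice of two dimensions are mine, purely for illustration.

```python
import math
import random

def single_prob(k):
    """Probability that a fixed unit vector lies in all k random half-spaces."""
    return 2.0 ** (-k)

def pair_prob(theta, k):
    """Probability that two unit vectors at angle theta are both selected."""
    return ((math.pi - theta) / (2 * math.pi)) ** k

def estimate_pair_prob(theta, k, trials=100_000, seed=0):
    """Monte Carlo check in the plane with v_i = (1, 0), v_j = (cos theta, sin theta)."""
    rng = random.Random(seed)
    vi = (1.0, 0.0)
    vj = (math.cos(theta), math.sin(theta))
    hits = 0
    for _ in range(trials):
        selected = True
        for _ in range(k):
            phi = rng.uniform(0.0, 2 * math.pi)
            w = (math.cos(phi), math.sin(phi))
            # Both vectors must have positive inner product with every w.
            if vi[0] * w[0] + vi[1] * w[1] <= 0 or vj[0] * w[0] + vj[1] * w[1] <= 0:
                selected = False
                break
        hits += selected
    return hits / trials
```

Dividing `pair_prob(theta, k)` by `single_prob(k)` gives $(1-\theta/\pi)^k$, the chance that $v_j$ is picked given that $v_i$ was, which decays much more slowly for well-correlated pairs (small $\theta$) than for nearly orthogonal ones.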

The more I think about this, the more I am beginning to feel that it’s got to the point where it’s going to be hard to make progress without going away and doing calculations. I don’t really see any other way of testing the plausibility of some of the ideas I am suggesting.

February 14, 2009 at 8:37 am |

510. Stationarity

The reading seminar is already paying off for me, in that I now see that the random Bernoulli variables associated to a Cartesian half-product automatically enjoy the stationarity property, which will presumably be useful in showing that those half-products contain lines with positive probability. (I also plan to write up this strategy on the wiki at some point.)

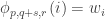

Let’s recall the setup. We have a set A of density $\delta$ in $[3]^n$ that we wish to find lines in. Pick a random x in $[3]^n$, then pick random $a_1,\dots,a_m$ in the 0-set of x, where $m$ does not depend on n. This gives a random embedding of $[3]^m$ into $[3]^n$, and in particular creates the strings $x_{i,j}$ for $i+j \le m$, formed from x by flipping the $a_1,\dots,a_i$ digits from 0 to 1, and the $a_{m-j+1},\dots,a_m$ digits from 0 to 2. I like to think of the $x_{i,j}$ as a “Cartesian half-product”, which in the m=4 case is indexed as follows:

0000 0002 0022 0222 2222

1000 1002 1022 1222

1100 1102 1122

1110 1112

1111

Observe that $x_{i,j}, x_{i+r,j}, x_{i,j+r}$ form a line whenever $i+j+r=m$. We have the Bernoulli events $E_{i,j} := \{x_{i,j} \in A\}$.

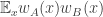

We already observed that each of the $E_{i,j}$ has probability about $\delta$, thus the one-point correlations of $E_{i,j}$ are basically independent of i and j. More generally, it looks like the k-point correlations of the $E_{i,j}$ are translation-invariant in i and j (so long as one stays inside the Cartesian half-product). For instance, when $m=4$, the events $E_{0,0} \wedge E_{1,0}$ and $E_{0,1} \wedge E_{1,1}$ (which are the assertions that the embedded copies of 0000, 1000 and 0002, 1002 respectively both lie in A) have essentially the same probability. This is obvious if you think about it, and comes from the fact that if you take a uniformly random string in $[3]^n$ and flip a random 0-bit of that string to a 2, one essentially gets another uniformly random string in $[3]^n$ (the error in the probability distribution is negligible in the total variation norm). Indeed, all the probabilities of $E_{i,j} \wedge E_{i+1,j}$ are essentially equal for all i,j for which this makes sense. And similarly for other k-point correlations.
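The indexing of the half-product is easy to check mechanically. The following sketch works in the coordinates of $[3]^m$ only (it does not model the random embedding into $[3]^n$), with $i$ leading 1s and $j$ trailing 2s as in the m=4 table; the function names are illustrative.

```python
def half_product(m):
    """Return {(i, j): string} with i leading 1s, j trailing 2s and 0s in between."""
    return {(i, j): "1" * i + "0" * (m - i - j) + "2" * j
            for i in range(m + 1) for j in range(m + 1 - i)}

def is_combinatorial_line(p0, p1, p2):
    """True if p0, p1, p2 are the 0-, 1- and 2-points of a combinatorial line."""
    wildcards = 0
    for a, b, c in zip(p0, p1, p2):
        if (a, b, c) == ("0", "1", "2"):
            wildcards += 1
        elif not (a == b == c):
            return False
    return wildcards > 0

def lines_in_half_product(m):
    """All triples (x_{i,j}, x_{i+r,j}, x_{i,j+r}) with i + j + r = m and r > 0."""
    pts = half_product(m)
    triples = []
    for (i, j) in pts:
        r = m - i - j
        if r > 0:
            triples.append((pts[i, j], pts[i + r, j], pts[i, j + r]))
    return triples
```

For m=4 this reproduces the rows of the table and yields ten lines, one for each $(i,j)$ with $i+j \le 3$; the zeros of $x_{i,j}$ play the role of the wildcards.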

I don’t yet know how to use stationarity, but I expect to learn as the reading seminar continues.

February 14, 2009 at 9:42 am |

511. Local-to-global

Re 502 Example 2 and 507, I think I now have a simple way of getting a global obstruction in this case, but it would have to be checked. Suppose that $f$ is a function that correlates with a trigonometric function on a positive proportion of the APs in $\mathbb{Z}_N$ of length $m$. Here $m$ could be very small, depending on the size of the correlation only I think.

Now suppose I pick a random AP of length 3. Then it will be contained in several of these APs of length $m$, and if $N$ is prime each AP of length 3 will be in the same number of APs of length $m$. At this stage there’s a gap in the argument because lack of uniformity doesn’t imply that you have the wrong sum over APs of length 3, but it sort of morally does, so I think it might well be possible to deal with this and get that the expectation of $f(x)f(x+d)f(x+2d)$ is not small, perhaps by modifying $f$ in some way or playing around with different $m$. And then we’d have a global obstruction by the $U^2$ inverse theorem.

Alternatively, instead of using APs of length 3, it might be possible to use additive quadruples  but under the extra condition that there is a small linear relation between  and  . That would give a useful positivity. I haven’t checked whether lack of uniformity on such additive quadruples implies that the $U^2$ norm is large, but the fact that 3-APs give you big $U^2$ is a promising sign.
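For anyone who wants to experiment with the quantities in this comment, here is a small brute-force sketch on $\mathbb{Z}_N$. It assumes the standard normalisations (averages rather than sums), and the function names are mine; it only computes the $U^2$ norm and the 3-AP average and says nothing about the local-to-global step itself.

```python
import cmath

def fourier(f):
    """Normalised Fourier transform on Z_N: f^(r) = E_x f(x) e(-rx/N)."""
    n = len(f)
    return [sum(f[x] * cmath.exp(-2j * cmath.pi * r * x / n) for x in range(n)) / n
            for r in range(n)]

def u2_fourth_power(f):
    """||f||_{U^2}^4 = E_{x,a,b} f(x) conj f(x+a) conj f(x+b) f(x+a+b)."""
    n = len(f)
    total = 0
    for x in range(n):
        for a in range(n):
            for b in range(n):
                total += (f[x] * f[(x + a) % n].conjugate()
                          * f[(x + b) % n].conjugate() * f[(x + a + b) % n])
    return (total / n ** 3).real

def three_ap_average(f):
    """E_{x,d} f(x) f(x+d) f(x+2d) over Z_N."""
    n = len(f)
    return sum(f[x] * f[(x + d) % n] * f[(x + 2 * d) % n]
               for x in range(n) for d in range(n)) / n ** 2
```

The quadruple average agrees with $\sum_r |\hat f(r)|^4$, which is the usual way the $U^2$ inverse theorem is read off. A single character $x \mapsto e(x/7)$ on $\mathbb{Z}_7$ has $U^2$ norm 1 but 3-AP average 0, a toy instance of the gap mentioned above between non-uniformity and the 3-AP count.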

February 14, 2009 at 5:15 pm |

512. Stationarity.

Just a small note on Terry’s #510: Is there any particular reason for choosing the indices $a_1,\dots,a_m$ from the 0-set of the initial random string $x$?

If not, the probabilistic analysis might be very slightly cleaner if we simply say, “Choose $m$ random coordinates $a_1,\dots,a_m$ and fill in the remaining $n-m$ coordinates at random. Now consider the events $E_{i,j}$…”

February 14, 2009 at 9:57 pm |

513. Stationarity

Yeah, you’re right; I was rather clumsily thinking of the base point x as being the lower left corner of the embedded space, when in fact one only needs to think of what x is doing outside of the variable indices. (I’ve updated the wiki to reflect this improvement.)

February 15, 2009 at 8:06 am |

514. DHJ(2.6)

Back in 130 I asked about a weaker statement than DHJ(3), dubbed DHJ(2.5): if A is a dense subset of $[3]^n$, does there exist a combinatorial line $w^0, w^1, w^2$ of which only the first two points $w^0, w^1$ are required to lie inside A? It was quickly pointed out to me that this follows easily from DHJ(2).

From the reading seminar, I now have a new problem intermediate between DHJ(3) and DHJ(2.5), which I am dubbing DHJ(2.6): if A is a dense subset of $[3]^n$, does there exist an r such that for each ij=01,12,20, there exists a combinatorial line $w^0, w^1, w^2$ with exactly r wildcards with $w^i, w^j \in A$? So it’s three copies of DHJ(2.5) with the extra constraint that the lines all have to have the same wildcard length. Clearly this would follow from DHJ(3) but is a weaker problem. At present I do not see a combinatorial proof of this weaker statement (which Furstenberg and Katznelson, by the way, spend an entire paper just to prove).

February 15, 2009 at 3:53 pm |

515. Different measures.

Terry, I’m not sure I understand your derivation of slices-equal DHJ from uniform DHJ. For convenience let me copy your argument and then comment on specific lines of it.

Suppose that $A \subset [3]^n$ has density $\delta$ in the equal-slices sense. By the first moment method, this means that A has density $\gg \delta$ on $\gg \delta$ of the slices.

Let m be a medium integer (much bigger than $1/\delta$, much less than n).

Pick (a, b, c) at random that add up to n-m. By the first moment method, we see that with probability $\gg \delta$, A will have density $\gg \delta$ on $\gg \delta$ of the slices $\Gamma_{a',b',c'}$ with $a' = a + m/3 + O(\sqrt{m})$, $b' = b + m/3 + O(\sqrt{m})$, $c' = c + m/3 + O(\sqrt{m})$.

This implies that A has expected density $\gg \delta$ on a random m-dimensional subspace generated by a 1s, b 2s, c 3s, and m independent wildcards.

Applying regular DHJ to that random subspace we obtain the claim.

One of my arguments for deducing slices-equal from uniform collapsed because sequences belonging to non-central slices are very much more heavily weighted than sequences that belong to central ones. I don’t understand why that’s not happening in your argument (though I haven’t carefully done calculations). Consider for example what might happen if  . Then the weights of individual sequences in the slices of the form  go down exponentially as r increases and as t decreases, even if  is close to  . Or am I missing something?

In general, there seems to be a difficult phenomenon associated with the slices-equal measure, which is that unless you’re lucky enough for $A$ to contain bits near the middle, it will be very far from uniform on all combinatorial subspaces where it might have a chance to be dense. Worse still, it doesn’t restrict to slices-equal measure on subspaces.
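The weighting issue can be seen numerically. This sketch assumes the usual definition of equal-slices measure on $[3]^n$ (pick a slice $(a,b,c)$ with $a+b+c=n$ uniformly, then a uniform string in that slice) and compares the weight of a single string in different slices; the helper names are mine.

```python
from math import comb

def num_slices(n):
    """Number of triples (a, b, c) of non-negative integers with a + b + c = n."""
    return comb(n + 2, 2)

def slice_size(a, b, c):
    """Number of strings with a 1s, b 2s and c 3s (a multinomial coefficient)."""
    return comb(a + b + c, a) * comb(b + c, b)

def equal_slices_weight(a, b, c):
    """Equal-slices probability of one fixed string in the slice (a, b, c)."""
    return 1.0 / (num_slices(a + b + c) * slice_size(a, b, c))

def uniform_weight(n):
    """Uniform probability of one fixed string in [3]^n."""
    return 3.0 ** (-n)
```

Already for n=6 a string in the extreme slice (6,0,0) carries 90 times the weight of a string in the central slice (2,2,2), and the ratio grows exponentially with n, which is the heavy weighting of non-central sequences described above.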

February 15, 2009 at 5:28 pm |

516. Different measures.

On second thoughts, I do now follow your argument!

February 15, 2009 at 6:29 pm |

517. Different measures

I’ve put a summary of the different measures discussion on the wiki at

http://michaelnielsen.org/polymath1/index.php?title=Equal-slices_measure

(More generally, I will probably be more silent on this thread than previously, but will be working “behind the scenes” by distilling some of the older discussion onto the wiki.)

February 15, 2009 at 9:43 pm |

DHJ (2.6):

Here’s what looks like a combinatorial proof, modulo reduction to sets and Graham-Rothschild being kosher (at least no infinitary theorems are used). Put everything in the sets formulation, where you have sets $B_w$ of measure at least $\delta$ in a prob. space. (No need for stationarity.) Now color combinatorial lines $\{ w(0),w(1),w(2)\}$ according to whether $B_{w(0)}\cap B_{w(1)}$, $B_{w(0)}\cap B_{w(2)}$ and $B_{w(1)}\cap B_{w(2)}$ are empty or not (8 colors). By Graham-Rothschild, there is an $n$-dimensional subspace all of whose lines have the same color for this coloring. Just take $n$ large enough for the sets version of DHJ (2.5).

February 15, 2009 at 10:59 pm |

519.

Dear Randall, I like this proof, but I am a little worried about whether A is still dense on the subspace one passes to by Graham-Rothschild. If A is deterministic, it may well be that the space one passes to is completely empty; if instead A is random, then the Graham-Rothschild subspace will depend on A and it is no longer clear that all the events in that space have probability $\delta$.

February 16, 2009 at 12:40 am |

520.

Terry…the subspace doesn’t depend on the random set A. What I am proving is the (b) formulation, not the (a) formulation. There seems to be some disconnect between the language of ergodic theory and the language of “random sets”, so let me try to translate: one reduces to a subspace where, for each ij=01,12,02, one of the following two things happens:

1. for all variable words w over the subspace, the probability that both w(i) and w(j) are in A is zero.

2. for all variable words w over the subspace, the probability that both w(i) and w(j) are in A is positive.

If you still think there is an issue about a density decrement, just read my proof as a proof of the (b) formulation…there, you can’t have a density decrement upon passing to a subspace because all the sets $B_w$ have density at least $\delta$.

February 16, 2009 at 1:50 am |

521.

Oh, I see now. That’s a nice argument – and one that demonstrates an advantage in working in the ergodic/probabilistic setting. (It would be interesting to see a combinatorial translation of the argument, though.)

I’ll write it up on the wiki (on the DHJ page) now.

February 16, 2009 at 5:35 am |

522. DHJ(2.6).

Hmm, I see how this is a bit trickier than DHJ(2.5). For example, for any of the three $ij$ pairs you can probably show that if  for some moderate  then there is at least an  chance of having an “$ij$ pair” at distance  . But the trouble is, these could potentially be different measure-  sets, and since they’re small it’s not so obvious how to show they’re not disjoint.

As an example of the trickiness, let  , assumed an integer. Perhaps $A$ is the set of strings whose 0-count is divisible by  and whose 1-count is divisible by  . Now 02-pairs have to be at a distance which is a multiple of  , and 12-pairs have to be at a distance which is a multiple of  . So you have to be a bit clever to extract a common valid distance, a multiple of  .
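A brute-force version of this kind of example is easy to run for small n. In the sketch below the moduli p = 2 and q = 3 are illustrative choices of mine, not values taken from the comment, and the alphabet is {0,1,2}; the code records, for each pair ij, the wildcard counts r for which some line has its i-th and j-th points in A.

```python
from itertools import product

def lines(n):
    """Yield (r, w0, w1, w2) for every combinatorial line in {0,1,2}^n with r wildcards."""
    for template in product("012*", repeat=n):
        r = template.count("*")
        if r:
            pts = tuple("".join(d if ch == "*" else ch for ch in template) for d in "012")
            yield (r,) + pts

def valid_wildcard_counts(n, in_a):
    """For each pair ij, the set of r admitting a line with r wildcards and w(i), w(j) in A."""
    good = {(0, 1): set(), (0, 2): set(), (1, 2): set()}
    for r, *pts in lines(n):
        for (i, j), rs in good.items():
            if in_a(pts[i]) and in_a(pts[j]):
                rs.add(r)
    return good

def modular_set(p, q):
    """Membership test: 0-count divisible by p and 1-count divisible by q (p, q hypothetical)."""
    return lambda s: s.count("0") % p == 0 and s.count("1") % q == 0
```

With n = 6 and `modular_set(2, 3)`, the 02-pairs occur at r in {2, 4, 6}, the 12-pairs at r in {3, 6}, and the 01-pairs only at r = 6, so the only common valid distance is 6; DHJ(2.6) asks for exactly such a common r.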

February 16, 2009 at 8:01 am |

Ryan,

Can you explain your approach in comment 477 in more detail?

February 16, 2009 at 8:38 am |

524. DHJ(2.6)

A small observation: one can tone down the Ramsey theory in Randall’s proof of DHJ(2.6) a notch, by replacing the Graham-Rothschild theorem by the simpler Folkman’s theorem (this is basically because of the permutation symmetry of the random subcube ensemble). Indeed, given a dense set A in $[3]^n$ we can colour [n] in eight colours, colouring a shift r depending on whether there exists a combinatorial line with r wildcards whose first two (or last two, or first and last) vertices lie in A. By Folkman’s theorem, we can find a monochromatic m-dimensional pinned cube Q in [n] (actually it is convenient to place this cube in a much smaller set, e.g. $[n^{0.1}]$). If the monochromatic colour is such that all three pairs of a combinatorial line with r wildcards can occur in A, we are done, so suppose that there is no line with r wildcards with r in Q with (say) the first two elements lying in A.

Now consider a random copy of $[3]^m$ in $[3]^n$, using the m generators of Q to determine how many times each of the m wildcards that define this copy will appear. The expected density of A in this cube is about $\delta$, so by DHJ(2.5) at least one of the copies is going to get a line whose first two elements lie in A, which gives the contradiction.

February 16, 2009 at 8:59 am |

p.s. I have placed the statement of relevant Ramsey theorems (Graham-Rothschild, Folkman, Carlson-Simpson, etc.) on the wiki for reference.

February 16, 2009 at 2:22 pm |

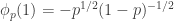

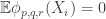

525. Strategy based on influence proofs

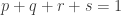

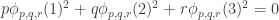

Let me say a few more (vague) things about a possible strategy based on mimicking influence proofs. We want to derive facts about the Fourier expansion of our set based on the fact that there are no lines, and let me just consider k=2 (Sperner). Suppose that your set A has density c and you know that there are no lines with one wildcard. This gives that  . (In a random set we would expect  ; anyway this is of course possible.)

Next you want to add the fact that there are no lines with 2 wildcards, or some other information.

The formula (in fact there are a few and we have to choose a simple one) for the number of lines with two wildcards i and j and the conclusion for the Fourier coefficients are less clear to me. Maybe we can derive conclusions on the higher moments.

Here, we do not want to reach a subspace with higher density but rather a contradiction. In the usual influence proofs the tools for that were certain inequalities asserting that for sets with small support the Fourier coefficients are high. This particular conclusion will not help us but the inequalities can help in some other way.

If indeed we are getting from the no-line assumptions that the Fourier coefficients are high and concentrated, this may be in conflict with facts about codes: namely, our A would be a better than possible code in terms of its distance distribution. Anyway, the first thing to calculate would be a good Fourier expression for the number of lines with 2 wildcards.

February 16, 2009 at 3:18 pm |

526. DHJ(2.6).

Thanks for the observation about using Folkman’s Theorem, Terry, it looks quite nice. I have some other ideas about trying to give a Ramsey-less proof based on Sperner’s Theorem; I’ll try to write them soon.

February 16, 2009 at 3:52 pm |

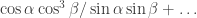

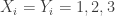

527. Fourier approach to Sperner.

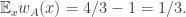

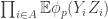

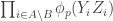

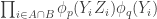

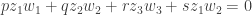

Gil, here are a few more details re #477. First, I see I dropped the Fourier coefficients at the beginning of the post, whoops! What I meant to say is that if you do the usual Fourier thing, you get the probability of  is

The intuition here is that since  is tiny, hopefully all the terms involving a positive power of  are “negligible”. Obviously this is unlikely to precisely happen, but if it were true then we would get

a noise stability quantity. Now there are something like  terms we want to drop, so it would be hard to do something simple like Cauchy-Schwarz. My idea is one from a recent paper with Wu. Think of  as a quadratic form over the Fourier coefficients of $f$. I.e., think of $\hat{f}$ as a vector of length $2^n$ and think of

where  is a matrix whose  entry is the obvious expression from above with  ’s and  ’s and so on. Our goal is to find two similar-looking matrices  such that  , meaning  are psd. Then we get  . (I see I’ve used  for two different things here; whoops.)

To be continued…

February 16, 2009 at 3:53 pm |

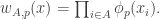

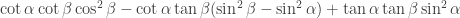

528. Fourier approach to Sperner.

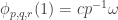

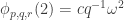

Let me explain what I hope  will be; the matrix  will be similar.

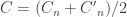

Let  be the 2×2 matrix with entries  . This is like a little “ -biased  -noise stability” matrix. Let  . Similarly, let  be the 2×2 matrix with entries  and define  . Finally, let  . The quadratic form associated to  should be that average of two noise stabilities I wrote in post #477, except with noise rate  , not  .

My unsubstantiated claim/hope is that indeed  . Why do I believe this? Uh… believe it or not, because I checked empirically for $n$ up to 5 with Maple. 😉 Sorry, I guess this isn’t how a real mathematician would do it. But I hope it can be proven.

To define the matrix  , do the same thing but set  to be the 2×2 matrix with entries  , etc. So eventually I hope to sandwich  between the average of the  -biased  -noise stabilities of  and the average of the  -biased  -noise stabilities of  .

PS: Although it may work for Sperner, I’m less optimistic that ideas like this will work for DHJ. The trouble is, in a distribution on comparable pairs $(x,y)$ for Sperner, there is *imperfect* correlation between $x$ and $y$. Roughly, if you know $x$, you are still unsure what $y$ is. On the other hand, in any distribution on combinatorial lines $(x,y,z)$ for DHJ, there is *perfect* correlation between $(x,y)$ and $z$ (and the other two pairs). If you know $x$ and $y$, then you know $z$ with certainty. This seems to break a lot of hopes to use “Invariance”-type methods like those in Elchanan’s follow-on to MOO. This is related to the fact that there is no hope to prove a generalization of DHJ of the form, “If $A, B$ are two subsets of $[3]^n$ of density $\delta$, then there is a combinatorial line $(x,y,z)$ with $x, y \in A$ and $z \in B$.”

February 16, 2009 at 5:10 pm |

529. Ramsey-less DHJ(2.6) plan.

Here is a potential angle for a Ramsey-free proof of DHJ(2.6). The idea would be to soup up the Sperner-based proof of DHJ(2.5) and show that the set $D$ of (Hamming) distances where we can find a 01-half-line in $A$ is “large” and “structured”.

So again, let $x$ be a random string in $[3]^n$ and condition on the location of $x$’s 2’s. There must be some set of locations such that $A$ has density at least $\delta$ on the induced 01 hypercube (which will likely have dimension around $2n/3$). So fix 2’s into these locations and pass to the 01 hypercube. Our goal is now to show that if $A$ is a subset of $\{0,1\}^n$ of density $\delta$ then the set $D$ of Hamming distances where $A$ has Sperner pairs is large/structured. (I’ve written $n$ here although it ought to be $2n/3$.)
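For small cases the set of Sperner-pair distances can simply be enumerated. This is a brute-force sketch, assuming $A$ is given as a set of 0/1 strings of equal length and that a Sperner pair means two distinct coordinatewise-comparable points; the function name is mine.

```python
from itertools import combinations

def sperner_distances(a):
    """Hamming distances between distinct comparable pairs of 0/1 strings in a."""
    dists = set()
    for x, y in combinations(sorted(a), 2):
        below = all(s <= t for s, t in zip(x, y))
        above = all(s >= t for s, t in zip(x, y))
        if below or above:
            # For comparable points the Hamming distance is the difference in weight.
            dists.add(sum(s != t for s, t in zip(x, y)))
    return dists
```

For example `sperner_distances({"0000", "0011", "0111", "1100"})` gives {1, 2, 3}, while an antichain such as {"0011", "0101", "1100"} gives the empty set.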

Almost all the action in $\{0,1\}^n$ is around the $\sqrt{n}$ middle slices. Let’s simplify slightly (although this is not much of a cheat) by pretending that $A$ has density $\delta$ on the union of the $\sqrt{n}$ middle slices *and* that these slices have “equal weight”. Index the slices by $i \in [\sqrt{n}]$, with the $i$th slice actually being the  slice.

Let $A_i$ denote the intersection of $A$ with the $i$th slice, and let $\mu_i$ denote the relative density of $A_i$ within its slice.

To be continued…

February 16, 2009 at 5:10 pm |

530. Ramsey-less DHJ(2.6) plan continued.

Here is a slightly wasteful but simplifying step: Since the average of the $\mu_i$’s is $\delta$, a simple Markov-type argument shows that $\mu_i \geq \delta/2$ for at least a $\delta/2$ fraction of the $i$’s. Call such an $i$ “marked”.

Now whenever we have, say,  marked $i$’s, it means we have a portion of $A$ with a number of points at least  times the number of points in a single middle slice. Hence Sperner’s Theorem tells us we get two comparable points  from among these slices.

Thus we have reduced to the following setup:

There is a graph $G = (V,E)$, where $V \subseteq [\sqrt{n}]$ (the “marked $i$’s”) has density at least $\delta/2$ and the edge set $E$ has the following density property: For every collection $V' \subseteq V$ with  , there is at least one edge among the vertices in $V'$.

Let $D$ be the set of “distances” in this graph, where an edge $(i,j)$ has “distance” $|i-j|$. Show that this set of distances must be large and/or have some useful arithmetic structure.

February 16, 2009 at 9:57 pm |

I wonder if the following “ideology” can be promoted or justified: a set without a line with at most r wildcards “behaves like” an error-correcting code with minimal distance r’, where r’ grows to infinity (possibly very slowly) when r does. For k=2 of course we do not get an error-correcting code, but when we have to split the set between many slices, points from different slices are far apart, so it is a little like the behaviour of a code. For k=3 this looks much more dubious but maybe has some truth in it.

February 16, 2009 at 10:40 pm |

532. Need for Carlson’s theorem?

Terry’s reduction to a use of Folkman instead of Graham-Rothschild, as well as his ideas for increasing the symmetry available, got me thinking there might be a way to reduce to a situation where you are using Hindman’s theorem instead of Carlson’s theorem in the entire proof. Here is my first attempt…perhaps others can check if this makes sense. Fix a big $n$ and let $A$ be your subset of $[k]^n$ that you want to find a combinatorial line in. Let $Y$ be the space $2^{[k]^n}$, that is, all sets of words of length $n$. $U^i_j$ is the map on $Y$ that takes a set $E$ of words and interchanges 0s and js in the ith place to get a new set of words, namely the set $U^i_j E$. (Some of the words in $E$ may be unaffected; in particular, if no word in $E$ has a 0 or j in the ith place, $E$ is a fixed point.) Now let $X$ be the orbit of $A$ under $G$, the group generated by these maps. It seems that finding a combinatorial line in any member of $X$ will give you a line in $A$. Let $B=\{ E:00…00\in E\}\subset X$. In general, for $U\subset X$, define the measure $\mu(U)$ to be the fraction of $g$ in $G$ such that $gA\in U$. This should give measure to $B$ equal to the relative density of $A$, and I am thinking the measure is preserved by the $U^i_j$ maps. If all of this is right, then things are simpler than FK imagined, because we have that $U^i_j$ commutes with $U^k_l$ for $i\neq k$, which should allow for the use of Hindman in all places where Carlson was used before. In particular, it allows for the use of Folkman in any finitary reduction (rather than Graham-Rothschild). And, in the case of Sperner’s Lemma, well, that becomes a simple consequence of the pigeonhole principle. (Because it’s just recurrence for a single IP system.) To be a bit more precise, in the $k=3$ case, I want to prove that there is some $\alpha\subset [n]$ such that $\mu(B\cap \prod_{i\in \alpha} U^i_1 B\cap \prod_{i\in \alpha} U^i_2 B)>0$ and conclude DHJ (3). The point is you get to IP systems much more easily with the extra commutativity assumption.

Sorry for the ergodic wording. Really at this stage I am just hoping someone will check to see if everything I am saying is wrong for some simple reason I have overlooked. If not, we can write up a trivial proof of Sperner and a simplified version of $k=3$ and go from there.
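For anyone who wants to experiment with these maps, here is a minimal Python sketch of the setup in a tiny case. The choices $k=3$, $n=2$, the particular set `A`, and all function names are assumptions made for illustration. The sketch also assumes that $G$ can be enumerated as all coordinatewise permutations of the alphabet, since the swaps of $0$ with $j$ generate every permutation in each coordinate. It checks that maps on different coordinates commute and that $\mu(B)$ equals the density of $A$ in this one example; it says nothing about whether lines are preserved.

```python
from fractions import Fraction
from itertools import permutations, product

k, n = 3, 2  # tiny alphabet {0, 1, 2} and word length, chosen for illustration


def swap_letter(word, i, j):
    """Swap the letters 0 and j in position i of a word (a tuple of ints)."""
    if word[i] == 0:
        return word[:i] + (j,) + word[i + 1:]
    if word[i] == j:
        return word[:i] + (0,) + word[i + 1:]
    return word


def U(i, j, E):
    """Apply the swap to every word of E, giving the set U^i_j E."""
    return frozenset(swap_letter(w, i, j) for w in E)


def act(g, E):
    """Apply a tuple g of n alphabet permutations coordinatewise to E."""
    return frozenset(tuple(g[i][c] for i, c in enumerate(w)) for w in E)


# A hypothetical test set of density 4/9 in [3]^2.
A = frozenset({(0, 1), (1, 1), (2, 0), (1, 2)})

# Maps acting on different coordinates commute.
commute_ok = all(
    U(i, j, U(i2, j2, A)) == U(i2, j2, U(i, j, A))
    for i, i2 in product(range(n), repeat=2) if i != i2
    for j, j2 in product(range(1, k), repeat=2)
)

# Each map is its own inverse.
involution_ok = all(
    U(i, j, U(i, j, A)) == A for i in range(n) for j in range(1, k)
)

# Two maps on the same coordinate need not commute.
same_coordinate_commute = U(0, 1, U(0, 2, A)) == U(0, 2, U(0, 1, A))

# mu(B): fraction of g in G for which the all-zero word lies in gA.
G = list(product(permutations(range(k)), repeat=n))
zero = (0,) * n
measure_B = Fraction(sum(1 for g in G if zero in act(g, A)), len(G))
```

In this example `measure_B` comes out as `Fraction(4, 9)`, matching the density of `A`, and the commutation check succeeds only when the two maps act on different coordinates.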

February 16, 2009 at 11:10 pm |

533. Well it seems that was wrong…the maps don’t take lines to lines. Can anyone else think of a way to preserve some of the symmetry that Terry has gotten in the early stages?
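A small brute-force check makes the failure concrete. This is only a sketch: the alphabet $\{0,1,2\}$, the word length $2$, the set `A` and the helper names are all chosen here for illustration and do not come from the comments above. It exhibits a line-free set whose image under a single swap map is a combinatorial line.

```python
from itertools import product


def swap_letter(word, i, j):
    """Swap the letters 0 and j in position i of a word (a tuple of ints)."""
    if word[i] == 0:
        return word[:i] + (j,) + word[i + 1:]
    if word[i] == j:
        return word[:i] + (0,) + word[i + 1:]
    return word


def U(i, j, E):
    """Apply the swap to every word of E, giving the set U^i_j E."""
    return frozenset(swap_letter(w, i, j) for w in E)


def combinatorial_lines(k, n):
    """Yield every combinatorial line in {0,...,k-1}^n as a tuple of k words."""
    for template in product(list(range(k)) + ["*"], repeat=n):
        if "*" in template:  # a line needs at least one wildcard position
            yield tuple(
                tuple(c if t == "*" else t for t in template)
                for c in range(k)
            )


def contains_line(E, k, n):
    """True if E contains all k points of some combinatorial line."""
    return any(all(p in E for p in line) for line in combinatorial_lines(k, n))


# Hypothetical example in [3]^2: A has no line, but U^0_1 A is the diagonal.
A = frozenset({(1, 0), (0, 1), (2, 2)})
image = U(0, 1, A)

line_in_A = contains_line(A, 3, 2)          # False
line_in_image = contains_line(image, 3, 2)  # True
```

Here `image` is the diagonal line `{(0, 0), (1, 1), (2, 2)}`, so a line in a member of the orbit need not pull back to a line in the original set.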

February 17, 2009 at 12:08 am |

534. Folkman’s theorem

Back in 341 and in some following comments I tried to popularize Folkman’s theorem as a statement relevant to our project. I haven’t found a meaningful density version yet; however, Tim pointed out that statements like Ben Green’s removal lemma for linear equations might work. It would be something like this: if the number of IP_d-sets is much less than what is expected from the density, then by removing a few elements one can destroy all IP_d-sets. In the next post I will say a few more words about the set variant of Folkman’s theorem; however, I’m not sure that this 500 blog series is the best forum for that.

February 17, 2009 at 12:41 am |

535. Folkman’s theorem

On second thought I decided not to talk about Folkman’s theorem here, since this blog is about possible proof strategies for DHJ. I will try to find another forum to discuss some questions on Folkman’s theorem.

February 17, 2009 at 6:27 pm |

536.

Jozsef: I guess this is the best forum we have right now (we tried splitting up into more threads earlier, but found that all but one of them would die out quickly). I can always archive the discussion at the wiki to be revived at some later point.

I wanted to point out two thoughts here. Firstly, I think I have a Fourier-analytic proof of DHJ(2.6) avoiding Ramsey theory. Firstly, observe that the usual proof of Sperner shows that the set of wildcard-lengths r of the combinatorial lines in a dense subset of $[2]^n$ contains a difference set of the form A-A where A is a dense subset of $[\sqrt{n}]$. Using the deduction of DHJ(2.5) from Sperner, we see that the set of wildcard lengths of combinatorial lines whose first two points lie in a dense subset of $[3]^n$ also contains a similar difference set. Similarly for permutations. So it comes down to the claim that for three dense sets A, B, C of $[\sqrt{n}]$, that A-A, B-B, C-C have a non-trivial intersection outside of zero.

This can be proven either by the triangle removal lemma (try it!) or by the arithmetic regularity lemma of Green, finding a Bohr set on which A, B, C are dense and pseudorandom. There may also be a more direct proof of this.
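To play with the claim about A-A, B-B and C-C numerically, a few lines of Python suffice. This is an illustrative sketch only: the sets below and the function names are made up for the example, and computing the intersection for particular sets proves nothing about dense sets in general.

```python
def difference_set(S):
    """Return the positive differences a - b with a, b in S."""
    return {a - b for a in S for b in S if a > b}


def common_differences(*sets):
    """Return the positive integers lying in every one of the difference sets."""
    return set.intersection(*(difference_set(S) for S in sets))


# Three hypothetical dense subsets of {1, ..., 30}.
N = 30
A = {x for x in range(1, N + 1) if x % 2 == 0}  # even numbers
B = {x for x in range(1, N + 1) if x % 3 == 0}  # multiples of 3
C = set(range(1, 11))                           # an initial interval

shared = common_differences(A, B, C)
```

For these three sets `shared` is `{6}`: the differences of `A` are even, those of `B` are multiples of 3, and those of `C` are at most 9. Since difference sets are symmetric, looking only at positive differences loses nothing.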

Secondly, I think we may have a shot at a combinatorial proof of Moser(3) – that any dense subset A of $[3]^n$ contains a geometric line rather than a combinatorial line. This is intermediate between DHJ(3) and Roth, but currently has no combinatorial proof. Here is a sketch of an idea: we use extreme localisation and look at a random subcube $[3]^m$. Let B be the portion of A on the corners of the subcube, and let C be the portion of A which is distance one away (in Hamming metric) from the centre of this subcube. The point is that there are a lot of potential lines connecting one point of C with two nearly opposing points of B. I haven’t done it yet, but it looks like the number of such lines has a nice representation in terms of the low Fourier coefficients of A, and should therefore tell us that in order to be free of geometric lines, A has to have large influence. I am not sure where to take this next, but possibly by boosting this fact with the Ramsey theory tricks we can get some sort of contradiction.
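The family of lines described here can be enumerated directly for small $m$. The sketch below rests on assumptions that are not spelled out in the text as it stands: the alphabet is taken to be $\{1,2,3\}$, the corners to be the words in $\{1,3\}^m$, and the centre to be the all-2 word. The function names are likewise invented for illustration.

```python
from itertools import product


def near_centre_lines(m):
    """List geometric lines (a, c, b) in {1,2,3}^m whose endpoints a, b are
    corners and whose midpoint c is at Hamming distance one from the centre."""
    lines = []
    corners = list(product((1, 3), repeat=m))
    for a in corners:
        for b in corners:
            if a < b:  # count each unordered pair of endpoints once
                c = tuple((x + y) // 2 for x, y in zip(a, b))
                # c differs from (2, ..., 2) exactly where a and b agree
                if sum(1 for x in c if x != 2) == 1:
                    lines.append((a, c, b))
    return lines


def count_lines_in(A, m):
    """Count the lines above with all three points in the set A."""
    A = set(A)
    return sum(
        1 for a, c, b in near_centre_lines(m)
        if a in A and b in A and c in A
    )


full_cube = set(product((1, 2, 3), repeat=3))
total = count_lines_in(full_cube, 3)
```

For $m=3$ this gives `total == 12`, and in general there are $m\,2^{m-1}$ such lines: choose the one coordinate where the two corners agree, then an unordered pair of corners opposite in every other coordinate.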

February 17, 2009 at 8:04 pm |

Here is a nice example I heard from Muli Safra. (Its origin is in the problem of testing monotonicity of Boolean functions.) Consider a Boolean function […] where […] and […] is a random Boolean function. When you consider strings x and y that correspond to two random sets S and T so that S is a subset of T, then with probability close to one f(x) = f(y), because almost surely the variables where S and T differ miss the first m variables. We can have a similar example for an alphabet of three letters.

February 17, 2009 at 8:06 pm |

537. DHJ(2.6)

Hi Terry, re your #536: great! This is just what I was going for in #529 & #530… But one aspect of the deduction I didn’t quite get: in my #530, you don’t have the differences from all pairs in […], just the ones where you have an “edge”. So do you produce your set […] by filtering […] somehow? Or perhaps I’m missing some simple deduction from the proof of Sperner.

February 17, 2009 at 8:20 pm |

538. Fourier of line avoiding sets.

Thanks a lot for the details, Ryan, it is very interesting. I think that using Maple is the way a real mathematician will go about it!

As for my vague (related) suggestions: There is a nice Fourier expression for subsets of […] without a combinatorial line with one wildcard. But already when you assume there are no lines with one and two wildcards it gets messy. (In particular these scary expressions […].)

Maybe there would be a nice expression for sets in […] without a combinatorial line with one wildcard. That would be nice.

Another little (perhaps unwise) question regarding avoiding special configurations of “small distance” vectors. Suppose you look at subsets of […] without two distinct sets S and T so that S\T has precisely twice as many elements as T\S. Does this imply that the size of the family is […]? (Again you can take a slice.)

April 10, 2011 at 8:24 am

The problem about Sperner’s theorem mentioned in the last paragraph was completely resolved by Imre Leader and Eoin Long; their paper Tilted Sperner families http://front.math.ucdavis.edu/1101.4151 also contains related results and conjectures.
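The remark that one can take a slice is easy to confirm by brute force: two distinct sets of the same size have |S\T| = |T\S| > 0, so neither difference can be twice the other. The sketch below uses a ground set of size 6 and function names chosen purely for illustration.

```python
from itertools import combinations


def has_tilted_pair(family):
    """True if some distinct S, T in the family satisfy
    |S minus T| = 2 * |T minus S|."""
    sets = [frozenset(S) for S in family]
    return any(
        S != T and len(S - T) == 2 * len(T - S)
        for S in sets
        for T in sets
    )


n = 6
# The middle slice: all subsets of {0, ..., n-1} of size n // 2.
middle_slice = [frozenset(c) for c in combinations(range(n), n // 2)]

slice_is_free = not has_tilted_pair(middle_slice)

# A pair that does violate the condition: S \ T = {0, 1}, T \ S = {3}.
bad_pair_found = has_tilted_pair([{0, 1, 2}, {2, 3}])
```

Nested pairs are also harmless under this definition, since a strict inclusion makes one of the two differences empty and the other non-empty.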

February 17, 2009 at 8:24 pm |

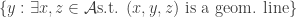

538. Moser(3).

For this problem I think it might be helpful to go all the way back to Tim.#70 and think about “strong obstructions to uniformity” of the following form: dense sets […] for which […] is not almost everything.

February 17, 2009 at 9:06 pm |

540.

Re: Sperner: if A is a dense subset of $[2]^n$, then a random chain in this set is going to hit A in a dense subset of its equator (which is the middle […] of the chain, which has length n). If A hits this chain in the $i^{th}$ and $j^{th}$ positions, then we get a combinatorial line with j-i wildcards. This is why the set of r arising from lines in A contains a difference set of a dense subset of $[\sqrt{n}]$.
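The chain argument translates almost verbatim into code. In the sketch below a maximal chain is encoded by a permutation of the coordinates (level t has 1s exactly at the first t coordinates of the permutation). That encoding, the sample set `A` and the function names are illustrative assumptions, not anything fixed by the argument.

```python
from itertools import product


def chain(perm):
    """Maximal chain of 0/1 words: switch coordinates on in the order perm."""
    word = [0] * len(perm)
    words = [tuple(word)]
    for p in perm:
        word[p] = 1
        words.append(tuple(word))
    return words


def wildcard_lengths(A, perm):
    """All j - i such that the chain meets A at levels i < j."""
    levels = [t for t, w in enumerate(chain(perm)) if w in A]
    return {j - i for i in levels for j in levels if i < j}


def line_template(perm, i, j):
    """Template of the line through levels i < j: 1 on the first i coordinates
    of perm, a wildcard on the next j - i, and 0 elsewhere."""
    template = [0] * len(perm)
    for p in perm[:i]:
        template[p] = 1
    for p in perm[i:j]:
        template[p] = "*"
    return tuple(template)


def fill(template, value):
    """Substitute value for every wildcard in the template."""
    return tuple(value if t == "*" else t for t in template)


n = 6
A = {w for w in product((0, 1), repeat=n) if sum(w) % 2 == 0}  # sample set
perm = (3, 0, 5, 1, 4, 2)

lengths = wildcard_lengths(A, perm)
template = line_template(perm, 2, 4)
```

With this `A` (words of even weight) every chain meets `A` at levels 0, 2, 4, 6, so `lengths` is `{2, 4, 6}`, the set of positive differences of those levels. Filling `template` with 0 and 1 recovers levels 2 and 4 of the chain.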

Incidentally, I withdraw my claim that the joint intersection of A-A, B-B, C-C can be established from triangle removal – but the arithmetic regularity lemma argument should still work.

Finally, for Moser, I agree with Ryan’s 538 – except that I think one should restrict y to be very close to the centre of the cube, otherwise y is only going to interact with a small fraction of the points of the cube and I don’t think this will be easily detectable by global obstructions. I still haven’t worked out what goes on when y is just a single digit away from the centre, but I do believe it will have a nice Fourier-analytic interpretation.

February 17, 2009 at 9:07 pm |

Metacomment.