This post is one of three threads that began in the comments after the post entitled A combinatorial approach to density Hales-Jewett. The other two are Upper and lower bounds for the density Hales-Jewett problem, hosted by Terence Tao on his blog, and DHJ — the triangle-removal approach, which is on this blog. These three threads are exploring different facets of the problem, though there are likely to be some relationships between them.

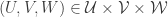

Quasirandomness.

Many proofs of combinatorial density theorems rely in one way or another on an appropriate notion of quasirandomness. The idea is that, as we do, you have a dense subset of some structure and you want to prove that it must contain a substructure of a certain kind. You observe that a random subset of the given density will contain many substructures of that kind (with extremely high probability), and you then ask, “What properties do random sets have that cause them to contain all these substructures?” More formally, you look for some deterministic property of subsets of your structure that is sufficient to guarantee that a dense subset contains roughly the same number of substructures as a random subset of the same density.For example, suppose you are trying to find a triangle in a dense graph. (We can think of a graph with vertices as a subset of the complete graph with

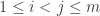

vertices. So the complete graph

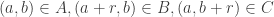

is our “structure” in this case.) It turns out that a quasirandomness property that gives you the right number of triangles is this: a graph of density

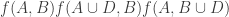

is quasirandom if the number of quadruples

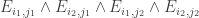

such that

and

are all edges is approximately

. That is, the number of labelled 4-cycles is roughly what it would be in a random graph. If this is the case, then it can be shown that it is automatically also the case that the number of triples

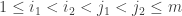

such that

and

are edges is approximately

, again the number that you would expect in a random graph.
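To make the two counts concrete, here is a small Python sketch. It is not from the original discussion, and the generator and function names are illustrative assumptions. For a graph given by a symmetric 0/1 adjacency matrix $A$, it computes the edge density $\delta$, taken here to be the fraction of ordered pairs that are edges. It also computes the number of labelled quadruples, which equals $\operatorname{tr}(A^4)$, and the number of labelled triangles, which equals $\operatorname{tr}(A^3)$. Note that $\operatorname{tr}(A^4)$ also counts degenerate quadruples such as $x=z$, but these contribute only $O(n^3)$.

```python
import random


def random_graph(n, p, seed=0):
    """Symmetric 0/1 adjacency matrix of a G(n, p) random graph (no loops)."""
    rng = random.Random(seed)
    adj = [[0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            if rng.random() < p:
                adj[i][j] = adj[j][i] = 1
    return adj


def square(adj):
    """Matrix product adj @ adj, in plain Python."""
    n = len(adj)
    return [[sum(adj[i][k] * adj[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def cycle_and_triangle_counts(adj):
    """Return (delta, labelled 4-cycle count, labelled triangle count).

    4-cycles: quadruples (x, y, z, w) with xy, yz, zw, wx edges = trace(A^4).
    Triangles: triples (x, y, z) with xy, yz, zx edges = trace(A^3).
    Both traces are computed from A^2, using the symmetry of A.
    """
    n = len(adj)
    a2 = square(adj)
    c4 = sum(a2[i][j] ** 2 for i in range(n) for j in range(n))
    c3 = sum(adj[i][j] * a2[i][j] for i in range(n) for j in range(n))
    delta = sum(map(sum, adj)) / n ** 2
    return delta, c4, c3


if __name__ == "__main__":
    n = 80
    delta, c4, c3 = cycle_and_triangle_counts(random_graph(n, 0.3))
    # For a random graph both ratios tend to 1 as n grows; at moderate n the
    # 4-cycle ratio is inflated by the O(n^3) degenerate quadruples.
    print(c4 / (delta ** 4 * n ** 4), c3 / (delta ** 3 * n ** 3))
```

By Cauchy-Schwarz, $\operatorname{tr}(A^4)\ge\delta^4n^4$ holds for every graph. So the 4-cycle condition only ever fails in one direction: there can be too many 4-cycles, never too few.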

This might seem a slightly disappointing answer to the question. We are trying to guarantee the expected number of one small subgraph and we assume as our quasirandomness property something that appears to be equally strong: that we have the expected number of another small subgraph. How can that possibly be a good thing to do?

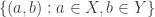

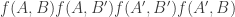

A first answer is that if you have the right number of 4-cycles then it doesn’t just give you triangles: it gives you all small subgraphs. But an answer that is more pertinent to us here is that we can draw very useful consequences if a graph does not have the right number of 4-cycles. For example, we can find two large sets of vertices $X$ and $Y$ such that the number of pairs $(x,y)\in X\times Y$ such that $xy$ is an edge is significantly larger than $\delta|X||Y|$ (which is what we would get for a random graph). Note that this is a global conclusion (an over-dense bipartite subgraph) deduced from a local hypothesis (the wrong number of 4-cycles). We can turn this round and say that we have an alternative definition of quasirandomness: a graph of density $\delta$ is quasirandom if for any two large sets $X$ and $Y$ of vertices, the number of pairs $(x,y)\in X\times Y$ such that $xy$ is an edge is about $\delta|X||Y|$. This definition turns out to be equivalent to the previous definition.
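The alternative definition can be tested directly on very small graphs. The sketch below is illustrative only, and its names are hypothetical. It enumerates all $2^n$ sets $X$, so it is usable only for $n$ up to about 15. It computes $\max_{X,Y}\big(e(X,Y)-\delta|X||Y|\big)$, where $e(X,Y)$ counts pairs $(x,y)\in X\times Y$ with $xy$ an edge. For a fixed $X$, the best $Y$ is simply the set of vertices $y$ whose number of neighbours in $X$ exceeds $\delta|X|$, so only $X$ has to be enumerated.

```python
def max_discrepancy(adj):
    """Return max over X, Y of e(X, Y) - delta * |X| * |Y|.

    adj is a symmetric 0/1 adjacency matrix; e(X, Y) counts ordered pairs
    (x, y) in X x Y with xy an edge, and delta is the fraction of ordered
    pairs that are edges.  For fixed X the optimal Y consists of the vertices
    y with more than delta * |X| neighbours in X, so only X is enumerated.
    """
    n = len(adj)
    delta = sum(map(sum, adj)) / n ** 2
    best = 0.0
    for mask in range(1, 1 << n):
        xs = [x for x in range(n) if mask >> x & 1]
        threshold = delta * len(xs)
        gain = 0.0
        for y in range(n):
            neighbours_in_x = sum(adj[x][y] for x in xs)
            gain += max(0.0, neighbours_in_x - threshold)
        best = max(best, gain)
    return best


if __name__ == "__main__":
    # Complete bipartite graph K_{3,3}: far from quasirandom.
    n = 6
    adj = [[int((i < 3) != (j < 3)) for j in range(n)] for i in range(n)]
    print(max_discrepancy(adj) / n ** 2)
```

Dividing by $n^2$ puts the result on the same scale as the density, so a quasirandom graph should give a value close to 0.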

This sort of local-to-global implication is extremely useful. The local property can be used to show that sets have substructures. And if the local property fails, then one has some global property of the set that can be exploited in a number of ways.

It is not too important for the purposes of this thread to understand what all these ways are. But one of them does stand out: a global property is useful if it allows you to find a substructure of the main structure such that the intersection of the set with that substructure is denser than it would be for a random set. In the graphs case, our substructure will be a large complete bipartite graph. The obvious substructure to hope for in the density Hales-Jewett set-up is a combinatorial subspace (which is like a combinatorial line except that you have several different variable sets, with the number of these sets being called the dimension of the combinatorial subspace). However, as I shall argue in a comment after this summary, we will probably need to go for something more complicated than a combinatorial subspace.

So one question that it would be very good to answer is this: what on earth do we mean by a quasirandom subset of $[3]^n$? Or rather, what local property would guarantee roughly the expected number of combinatorial lines in a dense subset of $[3]^n$? (Technical point: even this is probably not quite the right question, since we ought to work not in $[3]^n$ but in a suitable triangular grid of slices, so that the uniform measure is a sensible one to use.) Of course, we would also like to understand what global properties would guarantee this, but that is a topic for the next section.

Obstructions to uniformity.

If one wants to devise a proof that depends on a quasirandomness property, then one wants that property itself to have some properties. These are (i) that it should have a local definition that allows one to show that quasirandom sets contain many combinatorial lines; (ii) that every non-quasirandom set should be non-quasirandom for a global reason (such as intersecting a large structured set more densely than expected); and (iii) that every set that is non-quasirandom for a global reason like this should look “too dense” in some substructure that is similar to the main structure.

One of the main purposes of this thread is to understand (ii) in the case of the density Hales-Jewett problem. What are the global reasons for a set not to have roughly the expected number of combinatorial lines? These are what is meant by obstructions to uniformity. The dream is to be able to say, “It’s clear that a set with one of these global properties will not necessarily have the expected number of combinatorial lines. But in fact, the converse is true too: if a set does not have the expected number of combinatorial lines then it must have one of these global properties.”

How might we work out what the obstructions to uniformity are? There are two possible approaches, both of which are valuable. One is just to think of as many genuinely different ways as we can for a set to fail to have the expected number of combinatorial lines. Another is to devise a local quasirandomness property and to see what we can deduce if that local property does not hold. So far, all the discussion has centred on the first approach, though in the end it is the second approach that one would have to use (as it is in other contexts where the theory of quasirandomness is applied).

There was quite a bit of discussion about the first approach in the “obstructions to uniformity” thread on the combinatorial approach to density Hales-Jewett post. It was summarized by Terence Tao in his comment number 148, so I won’t give lots of links here (though I will give some). But let me briefly say what the obstructions we now know of are, and add one that has not yet been mentioned.

Example 1. One fairly general class of obstructions is obtained as follows. Let be some fixed function from

to

, let

be a positive integer, let

be a structured set of integers mod

(I’ll say what “structured” means in a moment), let

be integers mod

and let

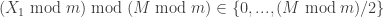

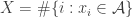

be the set of all sequences

such that

.

Suppose now that is a combinatorial line in

, with the variable coordinates being 1 for

, 2 for

and 3 for

. Let

be the variable set. Then

and

. Therefore,

. Now we already know that

, so if there is a significant correlation between

and the dilation

of

(let’s assume for simplicity that

is invertible mod

), then knowing that

and

belong to

makes it more (or less if the correlation is negative) likely that

will belong to

. Such correlation is possible if

is a set such as an arithmetic progression or a Bohr set, and the fraction

has reasonably small numerator and denominator when written in its lowest terms. (Incidentally, Boris Bukh is the expert on sums of sets with their dilates.)

The example given is what one might call an extreme obstruction. If is an extreme obstruction constructed in that way, then a less extreme obstruction derived from

would be any set

such that

is significantly different from

. A simple example of such a set

is any set such that the number of sequences

with

is significantly different from

. Here we are taking

for

,

,

for every

,

, and

. (These are not the unique choices that give the example.)
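The following Python sketch is an illustration, not part of the original discussion. It enumerates all combinatorial lines in $[3]^n$ for small $n$. It then compares the number of lines inside a set of the form $A=\{x:\sum_i\varphi(x_i)w_i \bmod r\in E\}$ with $\delta^3$ times the total number of lines, which is what a random set of density $\delta$ would give. The parameters in the usage example ($\varphi$, $w$, $r$, $E$) are arbitrary hypothetical choices, with an interval mod $r$ standing in for the structured set.

```python
from itertools import product


def combinatorial_lines(n):
    """Yield every combinatorial line (x, y, z) in [3]^n.

    A line is a word in {1, 2, 3, '*'}^n containing at least one '*';
    x, y, z are obtained by replacing every '*' with 1, 2, 3 respectively.
    """
    for word in product((1, 2, 3, "*"), repeat=n):
        if "*" in word:
            yield tuple(tuple(j if c == "*" else c for c in word) for j in (1, 2, 3))


def sigma(x, phi, w, r):
    """sum_i phi(x_i) * w_i reduced mod r (phi is a dict on {1, 2, 3})."""
    return sum(phi[c] * wi for c, wi in zip(x, w)) % r


def line_statistics(n, phi, w, r, E):
    """Return (delta, lines inside A, delta^3 * total lines) for
    A = {x in [3]^n : sigma(x) in E}."""
    points = list(product((1, 2, 3), repeat=n))
    A = {x for x in points if sigma(x, phi, w, r) in E}
    lines = list(combinatorial_lines(n))
    inside = sum(1 for x, y, z in lines if x in A and y in A and z in A)
    delta = len(A) / len(points)
    return delta, inside, delta ** 3 * len(lines)


if __name__ == "__main__":
    n = 7
    phi = {1: 0, 2: 1, 3: 2}      # hypothetical choice of phi
    w = [1] * n                   # all weights equal to 1
    r = 9
    E = {0, 1, 2}                 # an interval (arithmetic progression) mod r
    print(line_statistics(n, phi, w, r, E))
```

Brute-force enumeration of all $4^n$ words limits this to small $n$. It is enough, though, to see the line count drift away from $\delta^3$ times the number of lines for structured choices of $E$.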

Example 2. The next class of obstructions is closely related to obstructions that occur in the corners problem. Suppose that $X$ and $Y$ are two subsets of $[n]$, with density $\alpha$ and $\beta$, respectively. Then $X\times Y$ has density $\alpha\beta$ in $[n]^2$. Now suppose that we have two points in $X\times Y$ of the form $(x,y+d)$ and $(x+d,y)$ for some $d$. Then the point $(x,y)$ also belongs to $X\times Y$. Therefore, if you have the two 45-degree vertices of a corner, you automatically get the 90-degree vertex. The effect of this is to give $X\times Y$ more corners than a random subset of $[n]^2$ of density $\alpha\beta$. This is an important example in the corners problem, because if $P$ is a structured subset of $[n]$ and the sets $X$ and $Y$ are random subsets of $[n]$, then $X\times Y$ will have density roughly $\alpha\beta$ inside $P\times P$ as well, unless $P$ is very small. The original proof of Ajtai and Szemerédi accepted this problem and used Szemerédi’s theorem to pass to a very small substructure with a density increase, and I think we should (initially at least) follow their lead, because it is likely to be much simpler. Shkredov, in his quantitative work on the corners problem, defined “structured set” to mean something like a set $X\times Y$, where both $X$ and $Y$ are highly quasirandom subsets of a structured subset of $[n]$.
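A quick way to see the effect of Cartesian products is to count corners directly. The sketch below works in $\mathbb{Z}_N^2$ rather than $[n]^2$ to avoid boundary effects, which is an assumption made for convenience. It counts corners $\{(x,y),(x+d,y),(x,y+d)\}$ with $d\ne0$. It also checks the closure property described above: in a product set, the two 45-degree vertices force the 90-degree vertex. For a random set of density $\alpha\beta$ one expects about $(\alpha\beta)^3N^2(N-1)$ corners. For $X\times Y$, by contrast, the count is exactly $\sum_{d\ne0}|X\cap(X-d)|\,|Y\cap(Y-d)|$.

```python
import random


def count_corners(S, N):
    """Number of corners (x, y), (x + d, y), (x, y + d) in S, d != 0 mod N."""
    return sum(1 for (x, y) in S for d in range(1, N)
               if ((x + d) % N, y) in S and (x, (y + d) % N) in S)


def product_set(X, Y):
    return {(x, y) for x in X for y in Y}


def forces_right_angle(S, N):
    """True if (x, y + d) in S and (x + d, y) in S always imply (x, y) in S."""
    for (a1, b1) in S:            # candidate (x, y + d)
        for (a2, b2) in S:        # candidate (x + d, y)
            d = (a2 - a1) % N
            if d != 0 and (b1 - b2) % N == d and (a1, b2) not in S:
                return False
    return True


if __name__ == "__main__":
    N, alpha, beta = 31, 0.4, 0.5
    rng = random.Random(1)
    X = {x for x in range(N) if rng.random() < alpha}
    Y = {y for y in range(N) if rng.random() < beta}
    S = product_set(X, Y)
    density = len(S) / N ** 2
    R = {(x, y) for x in range(N) for y in range(N) if rng.random() < density}
    print(count_corners(S, N), count_corners(R, N), density ** 3 * N ** 2 * (N - 1))
```

The random comparison set `R` is drawn with the same density as `S`, so the three printed numbers can be compared directly.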

These facts about corners naturally suggest the following class of obstructions in the DHJ problem. This class is a generalization that I should have spotted of a class that was suggested in my comment 116. It also relates very interestingly to some of the discussion over on the Sperner thread about simpler forms of DHJ. (See for example comment 335 and comment 344.)

Let ,

and

be subsets of

. Then let

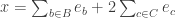

be the set of all sequences

such that the 1-set of

belongs to

, the 2-set of

belongs to

and the 3-set of

belongs to

. Suppose that the resulting set

happens to be dense (which is not necessarily the case even if

,

and

are dense). Then

has a tendency to contain too many combinatorial lines, however random the sets

,

and

are, basically because if

is a combinatorial line then you know things like that if the 1-set of

lies in

, then so does the 1-set of

.
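The coincidences that drive this example can be checked mechanically. In the sketch below, which is illustrative, coordinates are 0-indexed and the function names are hypothetical. `j_set(x, j)` is the set of coordinates where $x$ equals $j$, and membership in $A$ is tested exactly as in the definition. Take the convention that the wildcards of a line $(x,y,z)$ take the values 1, 2 and 3 respectively. Then the 1-sets of $y$ and $z$ agree, the 2-sets of $x$ and $z$ agree, and the 3-sets of $x$ and $y$ agree. The test checks this for every line in $[3]^4$.

```python
import random
from itertools import combinations, product


def j_set(x, j):
    """The j-set of x: the (0-indexed) coordinates i with x[i] == j."""
    return frozenset(i for i, c in enumerate(x) if c == j)


def in_A(x, U, V, W):
    """Membership in A(U, V, W): 1-set in U, 2-set in V, 3-set in W."""
    return j_set(x, 1) in U and j_set(x, 2) in V and j_set(x, 3) in W


def combinatorial_lines(n):
    """Yield lines (x, y, z) in [3]^n; the wildcards of x, y, z are 1, 2, 3."""
    for word in product((1, 2, 3, "*"), repeat=n):
        if "*" in word:
            yield tuple(tuple(j if c == "*" else c for c in word) for j in (1, 2, 3))


def random_family(n, p, rng):
    """Each subset of range(n) is included independently with probability p."""
    return {frozenset(c) for k in range(n + 1)
            for c in combinations(range(n), k) if rng.random() < p}


if __name__ == "__main__":
    n, rng = 7, random.Random(0)
    U, V, W = (random_family(n, 0.5, rng) for _ in range(3))
    points = list(product((1, 2, 3), repeat=n))
    delta = sum(in_A(x, U, V, W) for x in points) / len(points)
    lines = list(combinatorial_lines(n))
    inside = sum(all(in_A(p, U, V, W) for p in line) for line in lines)
    print(delta, inside, delta ** 3 * len(lines))
```

The following heuristic is a calculation that was not made in the post. Suppose each of the three families contains every subset independently with probability $1/2$. The nine membership conditions for a line then involve only six distinct (family, set) pairs, so each line lies in $A$ with probability $2^{-6}$. A random set of the same expected density $1/8$ would contain a given line with probability only $2^{-9}$.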

The discussion over at the Sperner thread has almost certainly already given us the tools to deal with a set of such a kind. The idea would be to find a partition of almost all of $[3]^n$ into combinatorial subspaces with dimension tending to infinity. The proof would be a development of the proof of DHJ(1,3), which it looks as though we already have. This would be an imitation of the Ajtai-Szemerédi approach to corners: we will find ourselves wanting to use DHJ(1,3) as a lemma in the proof of DHJ(2,3). (Actually, as I write this, I begin to see that it may not be quite so straightforward. We would probably need a multidimensional Sperner theorem, and I now realize that it is thoughts of this kind that motivate Jozsef’s attempt to find a density version of the finite-unions theorem; see comment 341 and several subsequent comments.)

Example 3. This is an intriguing example introduced by Ryan O’Donnell in comment 92. He considers (amongst other similar examples) the set $A$ of all sequences $x\in[3]^n$ that contain a consecutive run of $m$ 1s, where $m$ is chosen so that the density of $A$ is bounded away from both 0 and 1. (A simple calculation shows that you want to take $m$ to be around $\log_3 n$.) Now if two points in a combinatorial line contain a run of $m$ 1s, then at least one, and hence both, of those points contains a run of $m$ 1s in its fixed coordinates, which forces the third point to contain a run of $m$ 1s as well. So once again we get the wrong number of combinatorial lines, because you only have to find two points in a combinatorial line to guarantee the third.
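The density calculation and the “two points force the third” property are easy to check numerically. In this sketch, which is illustrative and uses hypothetical function names, `count_with_run` counts the sequences in $[3]^n$ that contain $m$ consecutive 1s. It does so by dynamic programming on the length of the current run of 1s. This makes it feasible to look at large $n$ and see the density pass through the middle range when $m$ is near $\log_3 n$. The test checks the line property exhaustively for a small case.

```python
import math
from itertools import product


def has_run(x, m):
    """True if the sequence x contains m consecutive 1s."""
    run = 0
    for c in x:
        run = run + 1 if c == 1 else 0
        if run >= m:
            return True
    return False


def count_with_run(n, m):
    """Number of x in [3]^n containing m consecutive 1s (m >= 1).

    ways[k] counts prefixes that contain no run of m 1s yet and end in
    exactly k consecutive 1s (0 <= k < m).
    """
    ways = [1] + [0] * (m - 1)
    for _ in range(n):
        new = [0] * m
        for k, c in enumerate(ways):
            new[0] += 2 * c           # append a 2 or a 3: the run resets
            if k + 1 < m:
                new[k + 1] += c       # append a 1 without completing a run
        ways = new
    return 3 ** n - sum(ways)


def combinatorial_lines(n):
    """Yield lines (x, y, z) in [3]^n; the wildcards of x, y, z are 1, 2, 3."""
    for word in product((1, 2, 3, "*"), repeat=n):
        if "*" in word:
            yield tuple(tuple(j if c == "*" else c for c in word) for j in (1, 2, 3))


if __name__ == "__main__":
    n = 10_000
    m0 = int(math.log(n, 3))
    for m in range(m0 - 1, m0 + 3):
        print(m, count_with_run(n, m) / 3 ** n)
```

Python's exact integers keep the count precise even for $n=10{,}000$. The final division converts the count to a floating-point density.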

It is easy to see that a number of variants of this example can be defined. (For example, one can permute the ground set, or one can ask for a run whose length varies according to where you are, etc. etc.)

What next?

If we are going to try to prove that a non-quasirandom set must have an easily understood obstruction to uniformity, our task will be much easier if we can come up with a single description that applies to all the examples we know of. That is, we would like a single global property that is shared by all the above examples (and any other examples there might be) and that allows us to obtain a density increase on a substructure. In comment 148, Terry did just that, defining a notion of a standard obstruction to uniformity. However, I do not yet understand his definition well enough to be able to explain it here, or to know whether it covers the more general version of Example 2 above. Terry, if you’re reading this and would like to give a fully precise definition, it would be very helpful and I could update this post to include it.

The main questions for this thread, it seems to me, are these.

1. What would be a good definition of a quasirandom subset of $[3]^n$, or rather of some suitable union of slices in $[3]^n$?

2. Have we thought of all the ways that a set can have too many combinatorial lines? (Ryan’s example is troubling here: it seems to contain an idea that could be much more widely applicable. One might call this the “or” idea.)

3. Do we have a unified description of all the obstructions to uniformity that we have thought of so far, or, even better, a description that might conceivably apply to all obstructions to uniformity?

Comments on this post should begin at 400.

February 8, 2009 at 6:08 pm |

400. Quasirandomness.

In comment number 109, I asked for the go-ahead to do certain calculations away from the computer. Nobody responded to the request, which suggests to me that making requests that demand blog comments for their replies is not a good idea. In future, if I have such a request I will do it via a poll. (If anybody else wants me to fix up a poll for them, I think I now understand how to get such a poll to appear in a comment, so I’d be happy to do it for them.) However, given that I have not been granted permission to do these calculations, I am going to do them in public instead. The result may be a mess, but I hope that if it is, then at least it will be an informative mess.

What I want to do is prove a counting lemma for triangles in a special class of tripartite graphs, where the vertices are subsets of $[n]$, and any edge has to join disjoint sets. (This class has already been much discussed.) Such a lemma would say that if all three parts of the graph are quasirandom, then the number of triangles is roughly what you expect it to be. At this stage, we do not know what “quasirandom” means, so we might seem to have a serious problem. However, this may well not be a problem, since the existing definitions of quasirandomness didn’t just spring from nowhere and turn out to be useful: one can derive them by attempting to prove counting lemmas and seeing what hypotheses you need in order to get your attempts to work. That is what I plan to try to do here.

Let me give the definitions that I will need in order to state a counting lemma formally (with the exception of the definition of “quasirandom”, which I’m hoping will emerge during the proof). I shall follow standard practice in additive combinatorics, and attempt to prove a result about $[-1,1]$-valued functions rather than about sets. Let $\mathcal{U}$, $\mathcal{V}$ and $\mathcal{W}$ be three copies of the set of all subsets of $[n]$ of size between  and  . Let us write $\mathcal{U}\times\mathcal{V}$ not for the usual Cartesian product, but rather for the set of all pairs $(U,V)$ such that $U\in\mathcal{U}$, $V\in\mathcal{V}$ and $U\cap V=\emptyset$, and similarly for the other two possible products, and also for the product of all three. (I called this the Kneser product in another comment, and may do so again in this thread.) Here is the counting lemma.

Lemma. Let $f$, $g$ and $h$ be $c$-quasirandom functions from $\mathcal{U}\times\mathcal{V}$, $\mathcal{V}\times\mathcal{W}$ and $\mathcal{U}\times\mathcal{W}$ to $[-1,1]$, respectively. Then the absolute value of the expectation of $f(U,V)g(V,W)h(U,W)$ over all triples $(U,V,W)\in\mathcal{U}\times\mathcal{V}\times\mathcal{W}$ is at most $c'$, where $c'$ tends to zero as $c$ tends to zero.

No guarantee that this is a remotely sensible formulation, but it’s a first attempt. To be continued in my next comment.
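For very small $n$, the quantity in the lemma can simply be computed. The sketch below is illustrative. It uses a single family of subsets for all three of $\mathcal{U}$, $\mathcal{V}$ and $\mathcal{W}$, and the size range in the usage example is a hypothetical choice. It lists the Kneser triples, that is, ordered triples of pairwise disjoint sets, and averages $f(U,V)g(V,W)h(U,W)$ over them. The usage example takes $f$, $g$ and $h$ to be random $\pm1$ functions, which is the example comment 401 suggests keeping in mind.

```python
import random
from itertools import combinations


def family(n, sizes):
    """All subsets of range(n) whose size lies in `sizes`, as frozensets."""
    return [frozenset(c) for k in sizes for c in combinations(range(n), k)]


def kneser_pairs(fam):
    """Ordered pairs (U, V) from fam with U and V disjoint."""
    return [(U, V) for U in fam for V in fam if not U & V]


def kneser_triples(fam):
    """Ordered triples (U, V, W) from fam that are pairwise disjoint."""
    return [(U, V, W) for U, V in kneser_pairs(fam)
            for W in fam if not W & (U | V)]


def random_sign_function(pairs, rng):
    return {pair: rng.choice((-1, 1)) for pair in pairs}


def triangle_average(triples, f, g, h):
    """Average of f(U, V) g(V, W) h(U, W) over the given triples."""
    return sum(f[U, V] * g[V, W] * h[U, W] for U, V, W in triples) / len(triples)


if __name__ == "__main__":
    rng = random.Random(0)
    fam = family(9, (2, 3))
    pairs, triples = kneser_pairs(fam), kneser_triples(fam)
    f, g, h = (random_sign_function(pairs, rng) for _ in range(3))
    print(len(triples), triangle_average(triples, f, g, h))
```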

February 8, 2009 at 6:31 pm |

401. Quasirandomness.

To understand the lemma, it is helpful to bear in mind the example where each of $f$, $g$ and $h$ is a function that randomly takes the values 1 or -1.

Now let’s attempt to prove it, using standard Cauchy-Schwarz methods. To avoid overburdening the notation, I will adopt the following convention concerning whether it is to be understood that sets are disjoint. If I write something like  then  and  are disjoint, whereas if I write  then they are allowed to overlap. An exception is that if I use the same letter twice then overlap is allowed: so if I write  then  and  do not have to be disjoint. I will also write  for the set of  that are disjoint from both  and  , and so on. I hope it will be clear.

With the benefit of many similar arguments to base this one on, we try to find an upper bound for the fourth power of the quantity that ultimately interests us. Here goes:

Before I continue, I need to decide whether it is catastrophic to apply Cauchy-Schwarz at this point. The answer is no if and only if I have some reason to suppose that the function  is reasonably constant (by which I mean that it doesn’t do something like being zero except for a very sparse set of  ). It seems OK, so let’s continue:

Now let’s use the fact that  is bounded above by 1 in modulus to get rid of it. (This looks ridiculous as we’re throwing away all that quasirandomness information about  . But experience with other problems suggests that we shall need just one of the three functions to be quasirandom.)

Now we multiply out the inner bracket.

Now interchange the order of summation.

Hang on. That was wrong, and it is the first point where something happens that is interestingly different from what happens in other proofs of this kind. The problem is that there is a big difference between interchanging expectations and interchanging summations round here. In fact, even the very first step, which looked innocuous enough, was wrong. I’ll pause here and reflect on this in another comment.

February 8, 2009 at 6:41 pm |

402. Standard obstructions to uniformity

I’ll try here to clarify my earlier comment 148. Let me start by reviewing the theory of corners in $[n]^2$, or more precisely  to avoid some (extremely minor) technicalities.

Say that a dense set is _uniform for the first corner_ if for any other dense sets B, C, the number of corners

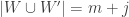

is _uniform for the first corner_ if for any other dense sets B, C, the number of corners  with

with  is close to the expected number. It is standard that A is uniform for the first corner if and only if it does not have an anomalous density on a dense Cartesian product

is close to the expected number. It is standard that A is uniform for the first corner if and only if it does not have an anomalous density on a dense Cartesian product  . There are similar results for the other corners, e.g. B is uniform on the second corner iff there is no anomalous density on a dense sheared Cartesian product

. There are similar results for the other corners, e.g. B is uniform on the second corner iff there is no anomalous density on a dense sheared Cartesian product  , etc.

, etc.

One can of course replace sets by functions: a function f(a,b) is uniform for the first corner iff it does not have an anomalous average on dense Cartesian products, etc.

Now return to DHJ. Given a set A in $[3]^n$, we can define the function $f(a,b)$ on $[n]^2$ to be the number of elements of A with a 1s and b 2s (and thus c=n-a-b 3s, which I will ignore here). Let me call this function f the global richness profile for A. We know that one good way to construct combinatorial line-free sets A is to ensure that f(a,b) is supported on a set with no corners (indeed, all the best examples in my own thread on this project come this way). More generally, it is pretty clear that A is not going to be quasirandom for the third point of combinatorial lines if its richness profile f is not uniform for the first corner. (I managed to interchange “first” and “third” in my notational conventions, but please ignore this for the sake of discussion.)
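Corner-free profiles give line-free sets for the following reason. The three points of a line have profiles $(a+k,b)$, $(a,b+k)$ and $(a,b)$, where $(a,b)$ counts the fixed 1s and 2s and $k$ is the number of wildcards, and these three profiles form a corner. The sketch below is illustrative, with hypothetical function names, and it assumes the convention that the wildcards of $x$, $y$, $z$ are 1, 2, 3. It computes the global richness profile $f$ and checks the corner fact on every line of $[3]^4$. The usage example uses the corner-free set $\{(a,b): a+b=s\}$, which corresponds to fixing the number of 3s.

```python
from collections import Counter
from itertools import product


def richness_profile(A):
    """f(a, b) = number of x in A with a 1s and b 2s (x a tuple over {1, 2, 3})."""
    return Counter((x.count(1), x.count(2)) for x in A)


def combinatorial_lines(n):
    """Yield lines (x, y, z) in [3]^n; the wildcards of x, y, z are 1, 2, 3."""
    for word in product((1, 2, 3, "*"), repeat=n):
        if "*" in word:
            yield tuple(tuple(j if c == "*" else c for c in word) for j in (1, 2, 3))


def is_corner(p, q, r):
    """True if p = (a + k, b), q = (a, b + k), r = (a, b) for some k > 0."""
    k = p[0] - r[0]
    return k > 0 and p == (r[0] + k, r[1]) and q == (r[0], r[1] + k)


def count_lines_in(A, n):
    """Number of combinatorial lines of [3]^n with all three points in A."""
    A = set(A)
    return sum(1 for line in combinatorial_lines(n) if all(p in A for p in line))


if __name__ == "__main__":
    n = 5
    # Profile supported on {(a, b): a + b = 3}: no corners, hence no lines.
    A = [x for x in product((1, 2, 3), repeat=n) if x.count(3) == n - 3]
    print(sorted(richness_profile(A).items()), count_lines_in(A, n))
```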

That’s the “global” obstruction to uniformity. One can also do something similar using “local” obstructions. Consider a reasonably large subset S of [n], and define the local richness profile $f_S(a,b)$ of A to be the number of elements of A which have a 1s in S and b 2s in S (and thus |S|-a-b 3s in S). Now, if the local richness profile is supported on a corner-free set, then it is not quite true that A has no combinatorial lines; but the lines in A now must have all their wildcards outside of S, so this should cut down substantially on the number of lines in A (compared with a random set A) if S is even moderately large. For similar reasons, I would expect A to fail to be quasirandom for the third point of a combinatorial line if $f_S$ is not uniform for the first corner, and analogously for the other two points of the combinatorial line.
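The local version can be checked in the same way. For a line whose wildcard set is $V$, the local profiles on $S$ of its three points are $(a+k,b)$, $(a,b+k)$ and $(a,b)$, where $k=|V\cap S|$. They therefore form a genuine corner unless every wildcard lies outside $S$. The sketch below is illustrative, with hypothetical names and 0-indexed coordinates, and it verifies this. It also checks that if a set's local profile lies on the corner-free line $a+b=|S|-1$ (exactly one 3 inside $S$), then every line in the set has all its wildcards outside $S$.

```python
from itertools import product


def local_profile(x, S):
    """(number of 1s of x inside S, number of 2s of x inside S)."""
    return (sum(1 for i in S if x[i] == 1), sum(1 for i in S if x[i] == 2))


def lines_with_wildcards(n):
    """Yield (wildcard set, (x, y, z)) for every combinatorial line in [3]^n."""
    for word in product((1, 2, 3, "*"), repeat=n):
        wild = {i for i, c in enumerate(word) if c == "*"}
        if wild:
            yield wild, tuple(tuple(j if c == "*" else c for c in word)
                              for j in (1, 2, 3))


if __name__ == "__main__":
    n, S = 6, {0, 1, 2, 3}
    # Local profile on the corner-free set a + b = |S| - 1: exactly one 3 in S.
    A = {x for x in product((1, 2, 3), repeat=n)
         if sum(1 for i in S if x[i] == 3) == 1}
    lines_in_A = [(wild, line) for wild, line in lines_with_wildcards(n)
                  if all(p in A for p in line)]
    print(len(lines_in_A), all(not (wild & S) for wild, _ in lines_in_A))
```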

Boris suggested replacing the set S with a more general integer-valued weight $w:[n]\to\mathbb{Z}$, which should accomplish a similar effect.

One can localise these local obstructions even further, by working with slices of A instead of all of A, e.g. by fixing some moderate number of coordinates and then considering a local or global richness profile for the slice on the remaining coordinates (or some subset S thereof, or some weight w). If this profile fails to be quasirandom for most slices, or its density deviates from the mean for most slices, then this should also be an obstruction to uniformity.

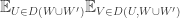

So I guess my formalisation of a “standard obstruction to uniformity” for the third point of a combinatorial line for a set $A\subset[3]^n$ of density $\delta$ would consist of a small set T of fixed indices, then a disjoint large subset S of sampling indices, and dense sets X, Y of $[n]$ (or more precisely $[|S|]$) such that for most of the $3^{|T|}$ choices $t$ of the fixed indices, the local richness profile $f_{S,t}(a,b)$, defined as the number of elements of A with coordinates $t$ on T, and with a 1s in S and b 2s in S, has density significantly different from $\delta$ on $X\times Y$. (Actually, as I write this it occurs to me that one may need X, Y to depend on $t$.)

Anyway, that’s my proposal for the standard obstruction… hopefully it makes some sense.

February 8, 2009 at 6:54 pm |

403. Standard obstructions to uniformity.

Thanks very much for that, Terry. I’ve just read it rather quickly, and I get the impression that because it focuses strongly on the cardinalities of sets, it may not have picked up the full (and new since your comment 148) generality of Example 2 in my post above. I’m not over-worried about that, as I have a strong feeling that that example can be dealt with. But if I am right that it isn’t a standard obstruction to uniformity then it would be good to try to generalize the definition.

February 8, 2009 at 7:45 pm |

404. Quasirandomness.

What, then, was the problem with the first line of the calculations in 401? What I did was to replace  by  . Here, the first expectation was over disjoint triples $(U,V,W)$, while the second took an expectation over disjoint pairs $(U,V)$ of the expectation over all sets $W$ that were disjoint from $U\cup V$. And there is a huge difference between the two, since in the second we count all disjoint pairs $(U,V)$ equally, whereas in the first we count a pair $(U,V)$ far more if the size of $U\cup V$ is smaller, since then there are many more $W$ that are disjoint from $U$ and $V$. And since the sizes of the sets are not fixed, this is a serious concern.

For now I’m going to restrict attention to the simpler set-up where  for some smallish  and all our sets have size  . So now the sizes of the sets  will always be  . However, while this corrects the first line, it doesn’t get us out of the woods, because there is no trick like that that we can pull off to sort out the second interchanging of the order of summation. And that is because there is no getting away from the fact that  can vary in size.
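The weighting problem can be seen concretely. An average over disjoint triples gives each disjoint pair $(U,V)$ a weight proportional to the number of admissible $W$ disjoint from $U\cup V$. An average over pairs of an average over $W$, by contrast, gives every pair the same weight. The sketch below is illustrative, with hypothetical names and parameters, and it computes these weights and both averages. When all sets have one fixed size $m$, every disjoint pair has $|U\cup V|=2m$ and the weights are constant. That is why restricting to sets of a single size repairs the first line.

```python
from itertools import combinations


def family(n, sizes):
    """All subsets of range(n) with size in `sizes`, as frozensets."""
    return [frozenset(c) for k in sizes for c in combinations(range(n), k)]


def pair_weights(fam):
    """Map each disjoint pair (U, V) to the number of W in fam disjoint from U | V.

    An average over disjoint triples weights (U, V) in proportion to this
    number; an average over pairs of an average over W weights all pairs equally.
    """
    return {(U, V): sum(1 for W in fam if not W & (U | V))
            for U in fam for V in fam if not U & V}


def two_averages(fam, F):
    """Return (E over triples of F, E over pairs of E over W of F).

    Pairs with no admissible W contribute no triples and are skipped.
    """
    weights = pair_weights(fam)
    triples = [(U, V, W) for (U, V) in weights
               for W in fam if not W & (U | V)]
    over_triples = sum(F(*t) for t in triples) / len(triples)
    inner = {}
    for (U, V), count in weights.items():
        if count:
            inner[U, V] = sum(F(U, V, W) for W in fam if not W & (U | V)) / count
    over_pairs = sum(inner.values()) / len(inner)
    return over_triples, over_pairs


if __name__ == "__main__":
    # Sets of sizes 1 to 3 in [7]; F rewards pairs (U, V) with a small union.
    fam = family(7, (1, 2, 3))
    print(two_averages(fam, lambda U, V, W: 1.0 if len(U | V) <= 3 else 0.0))
```

With varying sizes, the triple average in this example exceeds the pair average, because pairs with a small union carry more weight.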

However, it now occurs to me that this may not be such a huge problem. The reason is that most of the time  is of maximal size  , since the smallness of  makes it rather unlikely that a point will be in the complement of both  and  . (What’s more, the probability that the complement has size  goes down like  while the gain in the number of pairs  goes up like  , so the exceptions should be insignificant.)

I’m not sure that this reasoning is correct, but for now let us pretend that the problem doesn’t exist and that that interchange of summation was after all legal, except that I should have written

So the next stage is to apply Cauchy-Schwarz, which gives us

Now I’m getting worried because fixing  determines  , and this feels wrong. So I’m going to start all over again, this time using sums rather than expectations.

February 8, 2009 at 8:00 pm |

405. density-increasing-strategies & quasirandomness

A few remarks (which may be wrong, so please correct me or give me a hint if they are). Let me split them up and start with one.

a) When we talk about quasirandomness we often mean “codimension-one quasirandomness”: we start with the characteristic function f of the set and show that if the number of desirable configurations is not what we expect, then the function has some large correlation with some other function g; then we use this to show, somehow, that the set has higher density on a smaller-dimensional “space” (a subcube of some kind).

We can also talk about conditions for quasirandomness that refer directly to low-dimensional subspaces. That is, look at all the various irregular examples that we have and ask: do we see there an unusually high density on a combinatorial subspace (or some more complicated gadget) of lower dimension, such as square root of n, log n, etc.?

(It is true that we do not have any proof techniques that jump directly to low-dimensional subspaces or “varieties” without passing through a codimension-1 gadget.)

February 8, 2009 at 8:14 pm |

Dear Tim, maybe rule 6 should be slightly relaxed so that people could make off-line calculations of a few hours without general consensus (actually, after just reading the rules, I would also consider a no-rules version of this endeavor). In any case, I expect a wide consensus on letting you do the sum version on-line if you so prefer, and perhaps on granting you a wider mandate for related calculations. (I vote yes on both.)

February 8, 2009 at 8:34 pm |

406. Quasirandomness.

This time I do everything with sums and try to interpret it later. The disjointness conventions are as before. I haven’t repeated the remarks between lines.

Now we divide by the factor  . Having done that we multiply out the inner bracket.

Now interchange the order of summation.

Now the difficulty we face is much clearer. Normally one should apply Cauchy-Schwarz only if a function is, broadly speaking, reasonably constant. But here the inner sum is far bigger if  is small, and in fact it is zero for most  and  . So let’s try to think what a typical quadruple of sets  looks like if we know that all four sets have size  , and all six pairs of them are disjoint apart from the pair  . (I’m still assuming, for simplicity, that  for some smallish  and that all sets have size  .)

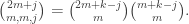

I now realize I made a mistake earlier. If  , then the number of disjoint pairs  in  is  . If you decrease  by 1, the first of these binomial coefficients goes up by a factor of approximately 2, but the second goes up by more like  . As for the number of pairs  such that  , it equals  . The second of these goes down by a factor of about  and the third goes down by a factor of about  . So it still seems as though the sum is dominated by pairs with  .

Ah, I’m forgetting that I should be thinking about the square of the inner sum, and now things look a lot healthier. So if you decrease  by 1, then the square of the inner sum goes up by about  and the number of  pairs goes down by around  . So some kind of concentration should occur around the  that makes these two equal, which is  . (I admit it: I cheated and used a piece of paper there.)

I need to stop this for a while. To be continued.

February 8, 2009 at 10:48 pm |

407. Quasirandomness.

I think at this point it helps to bring in at least one expectation. Where I’ve written  I will now write  , where  is the number of disjoint pairs  in  , and then apply Cauchy-Schwarz. That gives me

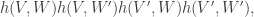

Now the first bracket is just the number of quadruples  of sets of size  such that all sets with different letters are disjoint. So let’s drop it and try to estimate the second.

In this model, we are assuming that  and  both have size  . So I’m allowed to replace  by  . That gives

which by Cauchy-Schwarz and the boundedness of  is at most

Expanding the bracket gives us

This is a slight mess but it makes me hopeful, for reasons that I’ll explain in my next comment.

February 8, 2009 at 11:29 pm |

409. Standard obstructions to uniformity

Tim, you’re right, Example 2 (where the 1-set, 2-set, and 3-set of A are independently constrained) is quite different from the class I had in mind. It may be that this is the more fundamental example, and I will try to think about whether the other examples we have can be reformulated to resemble this one. It may take some time before I will be able to look at it, though.

February 8, 2009 at 11:30 pm |

410. Quasirandomness.

The above calculation ends up with something a little bit strange, but here’s what I hope might happen if it is tidied up. Given any  we can define a bipartite graph whose vertex sets are the set of all  that are disjoint from  and the set of all  that are disjoint from  and whose edges are pairs  (still the Kneser product). If we restrict  to this bipartite graph, we can “count 4-cycles”, or rather evaluate the sum of  restricted to this bipartite graph. Could one perhaps hope that if this sum is on average small, then the function  is sufficiently quasirandom to make the original sum (at the beginning of 406) or expectation (at the beginning of 401) small? The calculations above suggest that something like that is true. But one needs to be wary since the intersection of the neighbourhoods of  and  will get rapidly smaller as  gets bigger, and this probably needs to be allowed for somehow. But instead of trying to sort that out, I want to speculate a bit, on the assumption that it can be made to work.
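To make the “count 4-cycles” step concrete, here is a minimal Python sketch (not from the thread). It assumes the restriction of the function to the bipartite graph is stored as a hypothetical matrix `f`, rows indexed by one vertex class, columns by the other, with zeros on non-edges. It checks that the 4-cycle sum equals the sum over pairs of rows of the squared “codegree”, which is the form Cauchy-Schwarz works with, and that for real-valued `f` it is at least (Σf)^4 / (rows² · columns²). For a 0/1 matrix of density p this is the familiar bound of at least p^4 times the maximum number of labelled 4-cycles, with approximate equality being the quasirandom case.

```python
import random
from itertools import product


def four_cycle_sum(f):
    """Sum of f[a][b] * f[a][b2] * f[a2][b] * f[a2][b2] over all rows a, a2 and
    columns b, b2 (labelled 4-cycles, degenerate ones included)."""
    rows, cols = range(len(f)), range(len(f[0]))
    return sum(f[a][b] * f[a][b2] * f[a2][b] * f[a2][b2]
               for a, a2 in product(rows, repeat=2)
               for b, b2 in product(cols, repeat=2))


def four_cycle_sum_by_codegree(f):
    """The same sum regrouped as a sum over pairs of rows of codegree squared."""
    rows, cols = range(len(f)), range(len(f[0]))
    total = 0
    for a, a2 in product(rows, repeat=2):
        codegree = sum(f[a][b] * f[a2][b] for b in cols)
        total += codegree * codegree
    return total


if __name__ == "__main__":
    rng = random.Random(0)
    M = [[rng.randint(0, 1) for _ in range(6)] for _ in range(5)]
    print(four_cycle_sum(M), four_cycle_sum_by_codegree(M))
```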

February 9, 2009 at 12:13 am |

411. Obstructions to uniformity.

As a result of thinking about what sort of global obstruction to uniformity might come out of the failure of quasirandomness of the type just discussed, it has just occurred to me to wonder whether in fact all the obstructions we have so far are special cases of Example 2. That is, if  is one of our basic examples of a set with the wrong number of combinatorial lines, can we always find collections of sets  ,  and  such that  correlates unduly with the set of all sequences  with  ,  and  , and this set of sequences is dense? (Recall that  is the  -set of  , the set of coordinates i where  .)

Let’s try Example 1. To keep things simple, let’s go for the example of the sum of all the coordinates being a multiple of 7. What could we take as  ,  and  in this case? Easy: we can take them all to consist of all sets for which the number of elements is a multiple of 7. (If  isn’t a multiple of 7, then we can find  such that  and  mod 7 and ask for sets in  to have cardinality congruent to  , etc.) It’s clear that if we had different weights but still worked mod 7 we could do something similar, and I’m pretty sure that generalizing to structured sets in Abelian groups doesn’t make things substantially harder. Of course, all this is to be expected because of Terry’s comments about standard obstructions.
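As a sanity check on the Example 1 discussion, here is a small exact computation in Python (ours, not from the thread). It assumes n is a multiple of 7 and uses “all sets whose size is a multiple of 7” as the set system for each of the three j-sets. Counting sequences by the sizes a, b, c of their 1-, 2- and 3-sets, it returns the density of A (close to 1/7), the density of B (close to 1/49, so B is dense) and the density of A inside B, which is exactly 1 because the coordinate sum a + 2b + 3c is then a multiple of 7.

```python
from fractions import Fraction
from math import factorial


def multinomial(n, a, b, c):
    """Number of x in [3]^n whose 1-, 2- and 3-sets have sizes a, b, c."""
    return factorial(n) // (factorial(a) * factorial(b) * factorial(c))


def mod7_obstruction(n, m=7):
    """Exact densities for
       A = {x : x_1 + ... + x_n = 0 mod m} and
       B = {x : |U_1|, |U_2|, |U_3| are all multiples of m},
    where U_j is the j-set of x. Returns (d(A), d(B), density of A inside B)."""
    count_A = count_B = count_A_and_B = 0
    for a in range(n + 1):            # a = |U_1|
        for b in range(n + 1 - a):    # b = |U_2|
            c = n - a - b             # c = |U_3|
            weight = multinomial(n, a, b, c)
            in_A = (a + 2 * b + 3 * c) % m == 0   # the coordinate sum is a + 2b + 3c
            in_B = a % m == 0 and b % m == 0 and c % m == 0
            if in_A:
                count_A += weight
            if in_B:
                count_B += weight
            if in_A and in_B:
                count_A_and_B += weight
    if count_B == 0:
        raise ValueError("B is empty; take n to be a multiple of m")
    total = 3 ** n
    return (Fraction(count_A, total), Fraction(count_B, total),
            Fraction(count_A_and_B, count_B))


if __name__ == "__main__":
    print([float(d) for d in mod7_obstruction(70)])
```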

How about Ryan’s example? There we could simply take  ,  and  to consist of all sets that contain a run of  consecutive elements.

Based on that, here is a conjecture.

Conjecture: Let  be a subset of (the union of a suitable triangular grid of slices near the centre of) $[3]^n$ that does not have the expected number of combinatorial lines. Then there are set systems  ,  and  such that for a dense set of sequences  we have  ,  and  , and such that, setting  to be the set of all such sequences, the density of  in $B$ is significantly greater than the density of  .

This conjecture is a direct analogue of a fact that is known to be true for corners. (This fact was basically mentioned by Terry in comment 402.) It’s a slight generalization of what Terry was suggesting, and I think there’s a definite chance that it could have dealt with question 3 in the main post.

If anyone can think of an obstruction to uniformity that is not of this form, then they will have demonstrated that DHJ is different in a very interesting way from the corners problem. Such an example would give a big new insight into the problem. But I’m an optimist and at the moment I am guessing that the conjecture is true.

[Note: if this comment seems to ignore Terry’s comment 409, it’s because his comment appeared while I was writing it.]

February 9, 2009 at 7:49 am |

[…] A third thread about obstructions to uniformity and density-increment strategies is alive and kicking on Gowers’s […]

February 9, 2009 at 8:13 am |

412. The conjecture in 411 is, I am afraid, too optimistic. Observe that there are at most  wildcards in every combinatorial line that is contained in a union of slices near the center of $[3]^n$. That is because the number of  ’s does not vary by more than that.

Thinking of an element of![{}[3]^n](https://s0.wp.com/latex.php?latex=%7B%7D%5B3%5D%5En&bg=ffffff&fg=333333&s=0&c=20201002) as a word of length

as a word of length  in

in  , let

, let  be the number of occurrences of subwords

be the number of occurrences of subwords  , and

, and  respectively (“subword” means a sequence of _consecutive_ letters). Let

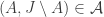

respectively (“subword” means a sequence of _consecutive_ letters). Let  . Note that typically

. Note that typically  varies in the interval of length

varies in the interval of length  around origin. Thus, if

around origin. Thus, if  , it is reasonable to suppose that

, it is reasonable to suppose that  is going to be distributed uniformly in

is going to be distributed uniformly in  . Assume it is. Choose

. Assume it is. Choose  for the sake of definiteness (we need it to be greater than

for the sake of definiteness (we need it to be greater than  for what follows). Define

for what follows). Define  by the condition

by the condition  . The density of

. The density of  is

is  , but I claim that the number of combinatorial lines is close to

, but I claim that the number of combinatorial lines is close to  .

.

Indeed, in a typical combinatorial line, the letters adjacent to wildcards are not wildcards themselves. By symmetry, as we change the value of the wildcards, the expected change in  is equal to the expected change in  . Since both  and  are concentrated in an interval of length  (recall that the number of wildcards is  ), we infer that typically  does not change by more than  . Since  , if a combinatorial line contains a single element of  , then it is very likely to be wholly contained in  .

It seems intuitively clear to me that there is no density increment on  of the kind desired in comment 411.
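The key quantitative step in 412 is that the statistic barely moves along a typical line. The formulas in the comment did not survive, so this Python sketch rests on labelled assumptions: the two subwords are taken to be 123 and 132 (following Terry’s mention in 414 of “the 132 and 123 statistics”), the statistic is the difference of their counts, and a “typical” line is modelled as a random template whose w wildcards are pairwise at distance at least 3. Then each wildcard sits in at most three 3-letter windows, so the spread of the statistic over the line’s three points is at most 6w; and since each letter value matches exactly one position of 123 and one of 132, the per-wildcard changes have mean zero, so the typical spread should be of order √w.

```python
import random


def trigram_count(word, pattern):
    """Occurrences of the 3-letter `pattern` as consecutive letters of `word`."""
    return sum(1 for i in range(len(word) - 2) if word[i:i + 3] == pattern)


def statistic(word):
    # ASSUMPTION: the subwords are 123 and 132 and the statistic is the
    # difference of their counts; the original definition was lost.
    return trigram_count(word, "123") - trigram_count(word, "132")


def random_line_template(n, w, rng):
    """Random template in {1,2,3,*}^n with w wildcards, any two at distance >= 3
    (a hypothetical model of a 'typical' combinatorial line)."""
    if n < 3 * w - 2:
        raise ValueError("n is too small for w well-separated wildcards")
    picks = sorted(rng.sample(range(n - 2 * (w - 1)), w))
    wild = {q + 2 * i for i, q in enumerate(picks)}  # consecutive gaps are >= 3
    return "".join("*" if i in wild else rng.choice("123") for i in range(n))


def spread_along_line(template):
    """Largest minus smallest value of the statistic on the line's three points."""
    values = [statistic(template.replace("*", d)) for d in "123"]
    return max(values) - min(values)


if __name__ == "__main__":
    rng = random.Random(1)
    n, w = 10_000, 100                # roughly sqrt(n) wildcards
    m = round(n ** (1 / 3))           # ASSUMPTION: a modulus of order n^(1/3)
    spreads = sorted(spread_along_line(random_line_template(n, w, rng))
                     for _ in range(20))
    print("median spread:", spreads[len(spreads) // 2], "modulus:", m)
```

Comparing the printed median spread with the modulus is the numerical counterpart of the sentence “we infer that typically  does not change by more than  ”.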

February 9, 2009 at 8:56 am |

413. Correlation with “Cartesian semi-products”

I found a weird variant of the conjecture in 411 that I don’t know how to interpret. Let  be a dense subset of $[3]^n$ without combinatorial lines, or equivalently a collection of partitions $[n] = A \cup B \cup C$ of $[n]$ that does not contain a “corner”  .

Now pick a random subset C of [n] of size about n/3 (up to  errors) and look at the slice of  with this C as the third set in the partition. This is basically a set system in $[n] \backslash C$, which we expect to be dense on average. Now, following the proof of Sperner as in 331, let us pick a random maximal chain $\emptyset = A_0 \subset \ldots \subset A_{n-|C|} = [n] \backslash C$. On average, we expect this chain to be dense in the equator (the part of the chain with cardinality  ). Thus  contains roughly  triples of the form $(A_j, [n] \backslash (A_j \cup C), C)$ where  . Let J be the set of all such j.

Since  avoids all combinatorial lines, this means that  must avoid the “Cartesian semi-product”

which implies that the set

avoids the Cartesian product  . In particular, it fails to be quasirandom with respect to such products, and so by two applications of Cauchy-Schwarz, it must have an anomalously large number of rectangles. Averaging over all choices of C and over all choices of maximal chain, this seems to imply that  contains an anomalously large number of “rectangles”

where  and  all have cardinality about  . If it were not for the additional constraints  ,  , this would start coming quite close to the conjecture in 411. As it is, I don’t know where to take this next.
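The reduction in 413 rests on the observation that two points on a maximal chain, together with one more point, form a combinatorial line. Here is a minimal Python sketch of that observation (ours; the encoding of points as tuples and the helper names are hypothetical). For j < k, the points (A_k, S − A_k, C) and (A_j, S − A_j, C), where S = [n] − C, are the 1-point and 2-point of the line whose wildcard set is A_k − A_j; its 3-point is (A_j, S − A_k, C ∪ (A_k − A_j)), which is why a line-free set constrains the pairs (j, k) drawn from J.

```python
import random


def random_maximal_chain(S, rng):
    """Chain A_0 ⊂ A_1 ⊂ ... ⊂ A_|S| = S, adding the elements of S in random order."""
    order = sorted(S)
    rng.shuffle(order)
    return [frozenset(order[:j]) for j in range(len(order) + 1)]


def point(ones, twos, threes, n):
    """The element of [3]^n whose 1-, 2- and 3-sets are the given disjoint sets."""
    x = [0] * n
    for value, part in ((1, ones), (2, twos), (3, threes)):
        for i in part:
            x[i] = value
    return tuple(x)


def chain_line(chain, j, k, C, n):
    """For j < k: the combinatorial line with wildcard set A_k minus A_j whose
    1-point is (A_k, S - A_k, C) and whose 2-point is (A_j, S - A_j, C)."""
    S = chain[-1]
    wild = chain[k] - chain[j]
    return (point(chain[k], S - chain[k], C, n),         # wildcards set to 1
            point(chain[j], S - chain[j], C, n),         # wildcards set to 2
            point(chain[j], S - chain[k], C | wild, n))  # wildcards set to 3
```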

February 9, 2009 at 9:11 am |

414.

I was going to add that all the examples I mentioned in 148 happened to be of the right form for the conjecture in 411, but Boris in 412 seems to have created a totally new species of non-quasirandom set that needs to be understood better. This example is of course consistent with what I just discussed at 413, by the way: in a typical “Cartesian semi-product”, the 132 and 123 statistics don’t vary by much more than  , and so such semi-products are likely to either lie totally in  or totally outside of  .

(Incidentally, these Cartesian semi-products are closely related to the sets S discussed in Tim’s 331.)

February 9, 2009 at 10:24 am |

415. Obstructions to uniformity.

No time for serious mathematics at the moment, but I did want to give my gut reaction to the last few comments. First, my usual experience when making an overoptimistic conjecture is to go to bed, wake up, and see why it’s nonsense (perhaps not immediately, but at some point when my mind wanders back to it and I’m in a more sceptical mood). With this collaboration it’s different: I wake up and find a counterexample on the blog!

Having said that, I did very much like that 411 conjecture, so when I get the time I plan to do two things, unless someone has already done them. First is to try to define some set systems that do, contrary to expectation, give rise to a correlation with Boris’s example. But at the moment I share his intuition that the roles of the coordinate values are somehow too intertwined to make this possible, so my main reason for doing this would be to try to get at a proof that it really is a new obstruction, or at least a sketch of a proof. (That may not be too difficult to achieve, so I’ll check from time to time to see if someone else has already done it.)

Other things that one could do in response to the example are to try to formulate a yet more general notion of obstruction to uniformity that includes his example. I don’t have any idea yet what that might look like, but it would be great if we could come up with something simple. It would also be good to find a combinatorial subspace in which his set is unusually dense. (Maybe Terry has done that above — I haven’t yet followed his comments enough to be able to see.) And finally, I would like to think hard about what obstructions to uniformity would arise naturally from a set’s not being quasirandom according to the definition that half-emerged from the calculations leading up to 410. (In 411 I just jumped to conclusions, and it now looks as though I did so prematurely.) Here I would take my cue from the way I proved one version of the regularity lemma for hypergraphs. I was in precisely this situation: there were a lot of bits of local non-uniformity (in small neighbourhoods) and one somehow had to add them up to obtain a global non-uniformity. It turned out to follow because the neighbourhoods with the local non-uniformities were nicely spread about. I think there’s a good chance of pulling off a similar trick here. (An argument of the type I’m talking about appears in Section 8 of my 3-uniform hypergraphs paper in the run-up to the proof of Lemma 8.4, but our situation might be slightly simpler as we’re talking about graphs rather than hypergraphs.)

Terry, I’m looking forward to understanding Cartesian semi-direct products better, but I’m coming to see the kind of thing you’re doing. Actually, the more I think about it, the more I wonder if it is the kind of thing I was hoping to get to in the calculations at the beginning of this thread. If so, then the remarks towards the end of the previous paragraph of this comment could be helpful in taking things further.

February 9, 2009 at 10:26 am |

416. Obstructions to uniformity.

It also occurs to me that Boris’s thought processes could be extremely helpful here. Did that example just jump out of thin air? If not, then were there some preliminary “philosophical thoughts” that led to it? If the latter, then it could be useful to know what they were so that we have a better chance of making correct guesses about how a proof might go.

February 9, 2009 at 11:45 am |

417. quasirandomness. (405 (point a) continued)

So a concrete question is this: suppose that a set of density c has density at most 2c on all combinatorial subspaces of dimension 100 log n (or log^2 n, or n^{1/1000}); does it necessarily have the right number of combinatorial lines? (Do any of our non-quasirandom examples violate it?)

(Of course in the next procedural remark by “on-line” I meant “off-line”.)

February 9, 2009 at 5:08 pm |

418. Answer to 416: My thought process was simple. First, the analogue of Tim’s conjecture for Sperner is false (if one thinks of Sperner as DHJ(2,1), then it is not true that obstructions come from DHJ(2,0), because there is no DHJ(2,0)), and hence the conjecture should be false. Second, one needs some example where coordinates are somehow linked together. Third, if we try to impose a condition on the number of pairs 12, then the only condition I really know how to impose is a modular condition. That fails to give a counterexample: if one element of the combinatorial line has some number of these, it tells us next to nothing about the number of 12’s in other elements of the line, because the number of 12’s decreases by a lot when we change the wildcard from 2 to 3. Well, then I thought about compensating for this huge change by forming some linear combination. My first construction was to count the numbers of 12’s, 23’s and 31’s and add them, weighted with third roots of unity. Finally, I simplified the construction.
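Here is a minimal Python sketch of the first construction described above (ours). Which count gets which root of unity is our reading, not something the comment specifies. With the weighting n_12 + ω·n_23 + ω²·n_31, relabelling the letters cyclically (1 → 2 → 3 → 1) sends n_12, n_23, n_31 to n_31, n_12, n_23, so the weighted sum is simply multiplied by ω. This is one way to see that the combination treats the three letters symmetrically.

```python
import cmath
import random

OMEGA = cmath.exp(2j * cmath.pi / 3)  # a primitive cube root of unity


def weighted_statistic(word):
    """n_12 + omega * n_23 + omega^2 * n_31, where n_ab counts the subword ab.
    (str.count is exact here: a two-letter pattern with distinct letters
    cannot overlap itself.)"""
    return (word.count("12") + OMEGA * word.count("23")
            + OMEGA ** 2 * word.count("31"))


def rotate_letters(word):
    """Relabel the letters cyclically: 1 -> 2, 2 -> 3, 3 -> 1."""
    return word.translate(str.maketrans("123", "231"))


if __name__ == "__main__":
    rng = random.Random(0)
    x = "".join(rng.choice("123") for _ in range(40))
    print(weighted_statistic(rotate_letters(x)), OMEGA * weighted_statistic(x))
```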

Comment regarding 417: If we take any known obstruction to the uniformity of density  and take a union with a random set of density  , wouldn’t we obtain an example of the kind Gil seeks?

February 9, 2009 at 6:57 pm |

419. Just a quick comment, will respond at length later when I am less busy: Boris’s 412 example has the property that all coordinates have extremely low influence: modifying a 1 to a 2, say, is unlikely to move you from A to not-A, or vice versa. As a consequence, it is easy to find lots of subspaces with a density increment, just by selecting a moderate number of indices and jiggling them from 1 to 2 to 3 independently.

Presumably one can more generally push the density increment argument to reduce to sets A in which all indices (and maybe sets of indices) have small influence, but I have not thought about this carefully.
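Terry’s remark relies on the influence of a coordinate without defining it. One common way to make it precise (our choice, not stated in the comment) is the probability that membership in A changes when x is uniform on $[3]^n$ and the coordinate is resampled uniformly. The brute-force Python sketch below computes this exactly, so it is only usable for small n. A “dictator” set {x : x_1 = 1} has influence 4/9 in its first coordinate and 0 elsewhere, whereas Boris’s set is claimed to have all influences small.

```python
from fractions import Fraction
from itertools import product


def influence(in_A, n, i):
    """Exact influence of coordinate i on A ⊆ [3]^n: the probability that
    membership in A changes when x is uniform and x_i is resampled uniformly."""
    changed = 0
    for x in product((1, 2, 3), repeat=n):
        for v in (1, 2, 3):
            y = x[:i] + (v,) + x[i + 1:]
            if in_A(x) != in_A(y):
                changed += 1
    return Fraction(changed, 3 ** (n + 1))


def dictator(x):
    """A = {x : x_1 = 1}, a set with one very influential coordinate."""
    return x[0] == 1
```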

February 9, 2009 at 7:02 pm |

420. Obstructions to uniformity.

Boris, many thanks for that — as I hoped, it was very illuminating. In particular, your initial step is thought-provoking. My first reaction to it is to try to prove you wrong by formulating DHJ(2,0), but with no particular hope of success. If indeed your reasoning is right, then it’s good news in a way, because it suggests that we can get a better understanding of obstructions in DHJ(3,2) by thinking a bit harder about obstructions in DHJ(2,1).

Here’s what DHJ(2,0) might say. (In order to do this I’m keeping open comment 344 in a different tab and trying to extend the definition of DHJ(j,k) downwards to DHJ(0,k).) For every empty subset $E\subset[k]$ you have a set  of functions from  to the power set of $[n]$ such that the images of all points in  are disjoint.

So far so good: there is precisely one such function, so  is a singleton that contains just that trivial function.

Now we define  to be the set of all ordered partitions of $[n]$ into  sets  such that for every empty subset  of $[k]$ the sequence  belongs to  . But that happens for every ordered partition of $[n]$ into  sets, so  corresponds to the set of all sequences.

So I claim that DHJ(0,k) is the assertion that $[k]^n$ contains a combinatorial line!
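Read this way, DHJ(0,k) holds as soon as n ≥ 1: a combinatorial line is a template over {1, …, k, *} with at least one wildcard, so $[k]^n$ contains exactly (k+1)^n − k^n of them. The Python sketch below (ours) enumerates lines in exactly this way and counts those lying inside a given set. Comparing that count with δ^k times the total for a set of density δ is one version of the “expected number of combinatorial lines” test used throughout the thread, though brute force only works for small n.

```python
from itertools import product


def combinatorial_lines(k, n):
    """Yield every combinatorial line of [k]^n as the tuple of its k points.

    A line is a template in {1,...,k,'*'}^n containing at least one wildcard
    '*'; its i-th point replaces every '*' by i."""
    alphabet = list(range(1, k + 1)) + ["*"]
    for template in product(alphabet, repeat=n):
        if "*" in template:
            yield tuple(tuple(i if t == "*" else t for t in template)
                        for i in range(1, k + 1))


def count_lines_in(A, k, n):
    """Number of combinatorial lines all of whose points lie in the set A."""
    return sum(1 for line in combinatorial_lines(k, n)
               if all(p in A for p in line))
```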

This doesn’t really affect the substance of what Boris is saying, but it allows us to reexpress it. The conjecture DHJ(0,k) (which, come to think of it, I think I see how to prove) suggests a class of 0-level obstructions: you may well not get the expected number of combinatorial lines if you have the wrong correlation with the constant function 1, that is, if you have the wrong density. So the real point that Boris is making is that having the wrong density is not the only obstruction to Sperner (whereas it is to the trivial one-dimensional analogue of corners, since the number of one-dimensional corners is precisely determined by the density of the set).

Thus, Boris’s thought processes do indeed contain a valuable new insight: that we should think hard about obstructions to Sperner, because these will feed into DHJ as an additional source of obstructions to DHJ(2,3), over and above the ones that come from DHJ(1,3). In this way, we see that there is something about DHJ(2,3) that genuinely goes beyond what happens for corners.

Unfortunately, it is still completely mysterious to me what that extra something is, but equally it is clear that what we must try to do is generalize Boris’s obstruction as much as we possibly can and hope that we can reach a new and more sophisticated conjecture. A good start will be to see what we can do with obstructions to Sperner. (And perhaps there is some hope here, as people already seem to have thought about it. If anyone feels like giving a detailed summary of what is known, they will be doing me at least a big favour.)

February 9, 2009 at 7:55 pm |

421. Boris, I do not think your example is a counterexample. If you take the obstructions to pseudorandomness (at least the two I checked), you get large combinatorial subspaces in the set, e.g. if the numbers of 1, 2, 3 coordinates are divisible by 7, or if there is a long run.

OK, I see. What you probably suggest is this: start with an obstruction of high density, say 7/8, and take a random subset of it of density c; then even on the large combinatorial subspaces the density will be below 8c/7. (You do not need to add anything random.) Right.

Anyway, we can still ask: if the number of combinatorial lines is more than 10 times or less than 1/10 times the expected number, must there be a combinatorial subspace of dimension 100 log n, or log^2 n, or n^{1/100}, on which the density is more than 1.01c?
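Gil’s question is about densities on combinatorial subspaces. Here is a minimal Python sketch for computing such densities (ours, using a hypothetical template encoding: each coordinate is a fixed value 1, 2 or 3, or a string label, and coordinates sharing a label move together). It also illustrates his remark that the mod-7 example contains large subspaces. If every wildcard class has size 7, changing a class’s value changes the coordinate sum by a multiple of 7, so a subspace containing one point of the set lies entirely inside it. That gives density 1 instead of about 1/7 on a subspace of dimension roughly n/7.

```python
from itertools import product


def subspace_points(template):
    """Points of the combinatorial subspace described by `template`.

    Hypothetical encoding: each entry is a fixed value 1, 2 or 3, or a string
    label; coordinates sharing a label always take the same value."""
    labels = sorted({t for t in template if isinstance(t, str)})
    for values in product((1, 2, 3), repeat=len(labels)):
        assignment = dict(zip(labels, values))
        yield tuple(assignment[t] if isinstance(t, str) else t for t in template)


def density_on_subspace(in_A, template):
    """Fraction of the subspace's points that lie in A."""
    points = list(subspace_points(template))
    return sum(1 for p in points if in_A(p)) / len(points)


def sum_mod_7(x):
    """Example 1 from the thread: the coordinates sum to a multiple of 7."""
    return sum(x) % 7 == 0
```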

February 9, 2009 at 10:00 pm |

422. This is a response to Terry’s #413.

Let me just try to rephrase it in my own language. Let  be a subset of $[3]^n$ of density at least  .

Form a random “subcube” as follows: Pick a random string  by choosing each coordinate to be  with probability  and  with probability  . Let  be the subcube  , given by taking all ways of filling in  with  or  . (Here  is the number of  ’s in  .) For a given string  , let’s abuse notation slightly by writing  for the associated string in $[3]^n$.
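The probabilities used to pick the random string were lost in extraction. This Python sketch assumes each coordinate is 3 with probability 1/3 and a wildcard otherwise (a hypothetical choice, flagged in the code). Under that assumption, a uniform point of the resulting subcube is a uniform point of $[3]^n$: each coordinate is independently 1, 2 or 3 with probability 1/3. That is the sense in which the string can be “random from the right distribution”.

```python
import random
from fractions import Fraction
from itertools import product

P_THREE = Fraction(1, 3)  # ASSUMPTION: probability that a coordinate of z is fixed to 3


def sample_z(n, rng):
    """Random string in {3, *}^n: each coordinate is '3' with probability P_THREE."""
    return ["3" if rng.random() < P_THREE else "*" for _ in range(n)]


def subcube_points(z):
    """All ways of filling the '*' entries of z with 1 or 2 (a copy of {1,2}^m)."""
    stars = [i for i, t in enumerate(z) if t == "*"]
    for fill in product("12", repeat=len(stars)):
        x = list(z)
        for i, v in zip(stars, fill):
            x[i] = v
        yield "".join(x)


def coordinate_marginal():
    """Exact law of one coordinate of a uniform point of a random subcube."""
    half_rest = (1 - P_THREE) / 2  # a wildcard is filled with 1 or 2 equally often
    return {"1": half_rest, "2": half_rest, "3": P_THREE}
```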

It is easy to see that with probability at least  over the choice of  , the density of  on  is at least  , a positive constant.

So we can apply some kind of souped-up Sperner. In particular, I believe it should be relatively easy to show that  contains many “half-lines” on  . I.e., if we look at pairs  such that  and  , it should be that the set of  ’s involved in such half-lines has positive density in  , and the same for the  ’s.

But since  was random from the right distribution, we conclude the following: look at all “half-lines” in  , i.e., pairs  with the potential to be the  - and  -points of a combinatorial line. Then the  ’s participating in these half-lines have positive density in $[3]^n$, and the same holds for the  ’s.

Now it almost seems like we’re there, because we have a pretty dense set of half-lines. We just need to find one point in  to cap off a half-line. I guess the trouble is that, while the set of points capping off a half-line has positive density in $[3]^n$, it still might completely miss  . So perhaps the goal should be to show that either these capping points are *very* dense, or else we can see some structure in them out of Sperner.
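The bookkeeping behind “half-lines” and “capping points” can be made concrete. What follows is a minimal Python sketch, and it rests on an assumption: the symbols here are lost, and I read a half-line as a pair (x, y) that could be the 1-point and 2-point of a single combinatorial line. Points are strings over "123". The helper names `is_half_line`, `cap` and `capping_points` are hypothetical. The sketch shows that a half-line determines its line, so each half-line has exactly one capping point.

```python
def is_half_line(x: str, y: str) -> bool:
    """True if y comes from x by turning a nonempty set of 1s into 2s.

    Then (x, y) are the 1-point and 2-point of exactly one combinatorial
    line: its wildcards are the coordinates where x and y differ.
    """
    if len(x) != len(y):
        return False
    diffs = [(a, b) for a, b in zip(x, y) if a != b]
    return bool(diffs) and all(d == ("1", "2") for d in diffs)


def cap(x: str, y: str) -> str:
    """The unique point completing the half-line (x, y): set every wildcard to 3."""
    if not is_half_line(x, y):
        raise ValueError("not a half-line")
    return "".join("3" if a != b else a for a, b in zip(x, y))


def capping_points(A: set) -> set:
    """All points that cap off some half-line with both ends in A."""
    return {cap(x, y) for x in A for y in A if is_half_line(x, y)}


# A toy set in [3]^3: it has three half-lines, and none of their caps lies in A.
A = {"112", "122", "222"}
caps = capping_points(A)
print(sorted(caps))  # ['132', '322', '332']
print(caps & A)      # set()
```

In this language, the worry above is that `capping_points(A)` can be dense in $[3]^n$ and still be disjoint from A.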

February 9, 2009 at 10:31 pm |

423. Re Boris’s example #418:

One thing I do not quite understand: What is the definition of “typical combinatorial line”?

February 9, 2009 at 10:55 pm |

424. Re Terry’s #419.

I’m still a bit confused by this density-increment search. One finds counterexamples with the “wrong” number of combinatorial lines, but then one says, “Aha, but if I fix these coordinates this way, I get a large density increment.”

Now Boris gives a counterexample in which each coordinate has low “influence”. This is the only way to beat the above density-increment parry — if your example has coordinates with large influence, then you can of course fix these coordinates to get density increment.

But now in #419 Terry says, “Aha, if the coordinates all have *low* influence, we should be able to find a density increment.”

It seems like we can always find a density increment! This is related to my comment #123.

February 9, 2009 at 11:00 pm |

425. Answer to Ryan’s question in #423: it is not important, as long as the definition is invariant under permutations of $[n]$. The only thing the construction uses is that no combinatorial line uses more than  wildcards. Then if we take the orbit of any combinatorial line under the action of the symmetric group on $[n]$, most elements of the orbit will have virtually no adjacent wildcards.
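The claim about adjacent wildcards is a short computation. Permuting $[n]$ at random turns a line's k wildcards into a uniformly random k-subset of positions. Each of the n − 1 pairs {i, i+1} lies in that subset with probability k(k−1)/(n(n−1)), so the expected number of adjacent wildcard pairs is exactly k(k−1)/n. That is small whenever k is much smaller than √n. The Python sketch below checks the formula by brute force. Its function names are hypothetical, and I'm not claiming k² ≪ n is the exact bound used in the construction.

```python
import random
from fractions import Fraction
from itertools import combinations


def adjacent_pairs(positions) -> int:
    """Number of pairs {i, i+1} that both lie in the given set of positions."""
    s = sorted(positions)
    return sum(1 for a, b in zip(s, s[1:]) if b == a + 1)


def expected_adjacent(n: int, k: int) -> Fraction:
    """Exact mean of adjacent_pairs for a uniformly random k-subset of range(n).

    Each of the n-1 pairs {i, i+1} lies in the subset with probability
    k(k-1) / (n(n-1)), so the mean is k(k-1)/n.
    """
    return Fraction(k * (k - 1), n)


def brute_force_expected(n: int, k: int) -> Fraction:
    """Same quantity by enumerating all k-subsets (only feasible for small n)."""
    subsets = list(combinations(range(n), k))
    return Fraction(sum(adjacent_pairs(s) for s in subsets), len(subsets))


def fraction_with_no_adjacent(n: int, k: int, trials: int, seed: int = 0) -> float:
    """Monte Carlo estimate of Pr[a random k-subset of range(n) has no adjacent pair]."""
    rng = random.Random(seed)
    hits = sum(adjacent_pairs(rng.sample(range(n), k)) == 0 for _ in range(trials))
    return hits / trials


print(brute_force_expected(8, 3), expected_adjacent(8, 3))  # 3/4 3/4
print(float(expected_adjacent(10_000, 20)))                 # 0.038
```

By Markov's inequality, at most a k(k−1)/n fraction of the orbit has any adjacent pair of wildcards at all.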

February 9, 2009 at 11:03 pm |

426. I do not follow Ryan’s argument in #424. If there are variables of large influence, it does not mean we can fix them to get a density increment. Think of the XOR function on the Boolean cube. All coordinates have large influence, but on every coordinate subcube the density is still 1/2.
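The XOR example is easy to verify exhaustively for small n. The Python sketch below fixes every proper subset of coordinates to every possible value. It checks that the parity function has density exactly 1/2 on each resulting subcube, even though flipping any single coordinate always flips the output. The helper names are my own, introduced only for this check.

```python
from itertools import product


def xor(x) -> int:
    """Parity of a 0/1 vector."""
    return sum(x) % 2


def subcube_density(f, n: int, fixed: dict) -> float:
    """Density of f on the subcube where coordinate i is fixed to fixed[i]."""
    free = [i for i in range(n) if i not in fixed]
    total = 0
    for bits in product((0, 1), repeat=len(free)):
        x = [0] * n
        for i, b in fixed.items():
            x[i] = b
        for i, b in zip(free, bits):
            x[i] = b
        total += f(x)
    return total / 2 ** len(free)


def proper_restrictions(n: int):
    """Every way of fixing some, but not all, of the n coordinates."""
    for values in product((None, 0, 1), repeat=n):
        fixed = {i: v for i, v in enumerate(values) if v is not None}
        if len(fixed) < n:
            yield fixed


n = 5
densities = {subcube_density(xor, n, fixed) for fixed in proper_restrictions(n)}
print(densities)  # {0.5}

# Flipping any single coordinate always flips the parity, so every influence is 1.
always_flips = all(
    xor(x) != xor(x[:i] + (1 - x[i],) + x[i + 1:])
    for x in product((0, 1), repeat=n)
    for i in range(n)
)
print(always_flips)  # True
```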

February 9, 2009 at 11:04 pm |

427. Re 424, 419

Now I’m confused by Ryan’s comment. If  is a random set, then doesn’t every coordinate have large influence? And you can’t get a density increase. Apologies if this shows that I don’t properly understand what “influence” means.

February 9, 2009 at 11:08 pm |

428. Obstructions and possible technical simplification.

I would like to draw attention to my comments 365 and 366 because they could be relevant here. Boris, if we use this alternative measure on $[3]^n$, then does your example disappear?

February 9, 2009 at 11:27 pm |

429. Answer to #428: I do not think it disappears. It has density 1/2 on every  . This example seems to be rather hard to kill since, as mentioned in #425, the only thing it really uses is invariance under permutations of $[n]$.

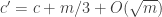

Actually, now that I think about it, there is a way to simplify/modify the example from #412 somewhat. I considered  , but I could consider  or simply the number of subwords  . Yes, these quantities do vary by a lot, but this variation is predictable: it has some mean  that can be computed explicitly, and is concentrated in the interval around  of length  . Choose  so that  is at distance at least  from zero (the large sieve should tell us that this can be done for most  , and maybe there is some elementary argument to do it for all  that I do not see). Then define  by the condition that  .

February 9, 2009 at 11:31 pm |

430. Influence vs. Bias

Ah, where is Gil when you need him :-). There is a distinction between influence and bias for, say, a boolean function $f$ of n boolean inputs.

We say that the input $x_i$ has large influence if, whenever we flip $x_i$, we have a high probability of flipping $f(x)$. Having low influence means that f is close to being invariant in the $e_i$ direction, where $e_1, \ldots, e_n$ is the standard basis.

We say that there is large bias in the $e_i$ direction (or more precisely, the dual basis vector $e_i^*$, but never mind this) if f has a density increment on the hyperplane $\{x : x_i = 0\}$ or on the hyperplane $\{x : x_i = 1\}$.

There is only a partial relationship between the two properties: low influence implies low bias, but not conversely; for instance, as pointed out above, a random boolean function has low bias but high influence for each variable.

High bias leads directly to a density increment and so we “win” in this case. We also “win” if there are many directions of low influence, because f is close to constant on subspaces generated by these directions and is therefore going to be unusually dense on some of them. But there is the great middle ground of low bias, high influence sets (such as the random set) in which we have to do something else.
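The two definitions can be compared by brute force on small cubes. Below is a minimal Python sketch that assumes the readings above: influence of coordinate i is Pr[f(x) ≠ f(x with bit i flipped)], and bias is the larger density increment on {x_i = 0} or {x_i = 1}. The function names are mine.

One step makes “low influence implies low bias” quantitative. The difference between the densities on the two hyperplanes is an average of f(x with x_i = 1) − f(x with x_i = 0). In absolute value that average is at most the influence, so the bias is at most half the influence. For monotone f the differences all have the same sign, so equality holds.

```python
import random
from itertools import product


def influence(f, n: int, i: int) -> float:
    """Pr_x[f(x) != f(x with bit i flipped)] for x uniform on {0,1}^n."""
    flips = 0
    for x in product((0, 1), repeat=n):
        y = x[:i] + (1 - x[i],) + x[i + 1:]
        flips += f(x) != f(y)
    return flips / 2 ** n


def bias(f, n: int, i: int) -> float:
    """Largest density increment of f on {x_i = 0} or {x_i = 1}."""
    points = list(product((0, 1), repeat=n))
    mean = sum(f(x) for x in points) / len(points)
    halves = [
        sum(f(x) for x in points if x[i] == b) / (len(points) // 2) for b in (0, 1)
    ]
    return max(h - mean for h in halves)


def random_function(n: int, seed: int = 0):
    """A uniformly random boolean function, stored as a lookup table."""
    rng = random.Random(seed)
    table = {x: rng.randint(0, 1) for x in product((0, 1), repeat=n)}
    return lambda x: table[x]


def xor(x) -> int:
    return sum(x) % 2


def dictator(x) -> int:
    return x[0]


def majority3(x) -> int:  # monotone
    return int(sum(x) >= 2)


for name, f in [("xor", xor), ("dictator", dictator), ("majority3", majority3)]:
    print(name, influence(f, 3, 0), bias(f, 3, 0))
# xor 1.0 0.0
# dictator 1.0 0.5
# majority3 0.5 0.25
```

With `g = random_function(10)`, each coordinate's influence should come out close to 1/2 while its bias stays small. That is the “low bias, high influence” middle ground described above.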

February 9, 2009 at 11:47 pm |

431. Obstructions.

Boris, in 425 you said you used the fact that the set of wildcards has size  . With the measure where all slices are the same size, I think the size of the set of wildcards of a typical line becomes  instead. So I allowed myself to be hopeful, because you needed  and you also needed  to be bigger than the square root of the size of the set of wildcards. But I admit that I still don’t feel as though I fully understand your example, so there may well be an obvious answer to this question.
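The difference between the two measures shows up in where random points sit. Under the uniform measure almost every point of $[3]^n$ has about n/3 ± O(√n) of each symbol, and that concentration is what keeps the lines that matter short. Under a measure giving every slice equal weight, the concentration disappears. Below is a minimal Python sketch that assumes this reading of “all slices are the same size”. It samples the slice uniformly by stars and bars, then picks a uniform point inside it. All names are hypothetical.

```python
import random


def uniform_point(n: int, rng: random.Random) -> str:
    """A uniformly random point of [3]^n."""
    return "".join(rng.choice("123") for _ in range(n))


def equal_slices_point(n: int, rng: random.Random) -> str:
    """A point of [3]^n under a measure giving every slice (a, b, c) equal weight.

    (a, b, c) = numbers of 1s, 2s, 3s is chosen uniformly among the
    C(n+2, 2) triples with a + b + c = n (stars and bars: pick two distinct
    bar positions among n + 2 slots), then a uniform string in that slice.
    """
    i, j = sorted(rng.sample(range(n + 2), 2))
    a, b, c = i, j - i - 1, n + 1 - j
    letters = ["1"] * a + ["2"] * b + ["3"] * c
    rng.shuffle(letters)
    return "".join(letters)


def fraction_off_centre(sampler, n: int, trials: int, seed: int = 0) -> float:
    """Fraction of sampled points whose number of 1s is more than sqrt(n) from n/3."""
    rng = random.Random(seed)
    off = sum(abs(sampler(n, rng).count("1") - n / 3) > n ** 0.5 for _ in range(trials))
    return off / trials


n = 400
print(fraction_off_centre(uniform_point, n, 1000))
print(fraction_off_centre(equal_slices_point, n, 1000))
```

One should see only a few percent of uniform points off the central band, against most of the equal-slices points.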

February 9, 2009 at 11:52 pm |

431. Locality in the edit metric.

Something just occurred to me that, in retrospect, was extremely obvious. We know already that we may as well restrict our dense sets to have $n/3 + O(\sqrt{n})$ 1s, 2s, and 3s, which means we may as well restrict our combinatorial lines to have at most $O(\sqrt{n})$ wildcards.

This implies that the points on our combinatorial lines differ by at most $O(\sqrt{n})$ in the edit (Hamming) metric. In contrast, the diameter of the cube $[3]^n$ is n.

Because of this, our obstructions to uniformity need to be at the scale of $\sqrt{n}$ rather than n in the edit metric. Boris’s example is a good example of this; it’s basically a union of Hamming balls of radius m for some  .

So maybe conjecture 411 can be recovered by somehow localising it to Hamming balls of size $O(\sqrt{n})$? There is a major technical issue coming from the fact that the metric is hugely non-doubling at this scale, but perhaps if one is careful one can still localise properly.
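The step from “few wildcards” to “small Hamming distance” can be checked directly. If a line has k wildcards, any two of its points differ in exactly those k coordinates. The number of 1s drops by exactly k from the 1-point to the 3-point. So if every point has n/3 + O(√n) of each symbol, with O-constant C, then k ≤ 2C√n. Below is a minimal Python sketch of these facts. The helper names and the explicit constant C are assumptions made for illustration.

```python
import random


def line_points(template: str) -> list:
    """The 1-, 2- and 3-points of the combinatorial line with this template ('*' = wildcard)."""
    if "*" not in template:
        raise ValueError("a combinatorial line needs at least one wildcard")
    return [template.replace("*", s) for s in "123"]


def hamming(x: str, y: str) -> int:
    """Number of coordinates where x and y differ."""
    return sum(a != b for a, b in zip(x, y))


def near_centre(x: str, C: float) -> bool:
    """Each of 1, 2, 3 occurs within C*sqrt(n) of n/3 times."""
    n = len(x)
    return all(abs(x.count(s) - n / 3) <= C * n ** 0.5 for s in "123")


p1, p2, p3 = line_points("1*2*3*")
print(p1, p2, p3)                                         # 112131 122232 132333
print(hamming(p1, p2), hamming(p1, p3), hamming(p2, p3))  # 3 3 3
print(p1.count("1") - p3.count("1"))                      # 3
```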

February 9, 2009 at 11:53 pm |

432. Aha… I missed the point about a typical line having length  with your measure. It does seem that the example disappears.

February 9, 2009 at 11:56 pm |

433. Locality in the edit metric cont.

(The numbering on my previous comment should be 432.)

To illustrate what I mean by localising the obstruction, consider the problem of obstructions to length three progressions in $[N]$. Fourier analysis tells us that the obstructions are given by plane waves $n \mapsto e^{2\pi i \xi n}$ on the whole interval [N].

But now suppose one is only interested in counting progressions of some much smaller length O(m) (e.g.  ). Then the right kind of obstructions are given by local plane waves: waves which look like a plane wave $n \mapsto e^{2\pi i \xi_j n}$ on each interval $[jm, (j+1)m)$ of length m, but whose frequency $\xi_j$ can vary arbitrarily with j.

Of course, one can just zoom in on an interval of length m where the original set A is quite dense and get rid of this technicality; similarly here, we may have to zoom into a Hamming ball and work locally on that ball.
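A small numerical illustration of local plane waves, which is my own sketch and not a construction from this thread, follows. It builds a function that is a plane wave with an independent random frequency $\xi_j$ on each block of length m. Its largest global Fourier coefficient comes out small, yet the short-progression statistic f(n)·conj(f(n+d))²·f(n+2d), averaged over small d, stays close to 1. Each term of that statistic is exactly 1 for a true plane wave. The choice of statistic, the parameters and the function names are all assumptions.

```python
import cmath
import random


def local_plane_wave(N: int, m: int, seed: int = 0) -> list:
    """f(n) = exp(2*pi*i*xi_j*n) on each block [jm, (j+1)m), with an independent random xi_j."""
    if N % m:
        raise ValueError("m must divide N")
    rng = random.Random(seed)
    xis = [rng.random() for _ in range(N // m)]
    return [cmath.exp(2j * cmath.pi * xis[n // m] * n) for n in range(N)]


def max_fourier_coefficient(f: list) -> float:
    """max over k of |(1/N) * sum_n f(n) exp(-2*pi*i*k*n/N)|, by a direct O(N^2) DFT."""
    N = len(f)
    roots = [cmath.exp(-2j * cmath.pi * t / N) for t in range(N)]
    return max(abs(sum(f[n] * roots[k * n % N] for n in range(N))) / N for k in range(N))


def short_ap_average(f: list, D: int) -> complex:
    """Average of f(n) * conj(f(n+d))**2 * f(n+2d) over 1 <= d <= D, 0 <= n < N - 2d.

    For a global plane wave every term equals 1, since n - 2(n+d) + (n+2d) = 0.
    """
    N = len(f)
    terms = [
        f[n] * f[n + d].conjugate() ** 2 * f[n + 2 * d]
        for d in range(1, D + 1)
        for n in range(N - 2 * d)
    ]
    return sum(terms) / len(terms)


N, m = 512, 32
f = local_plane_wave(N, m)
print(max_fourier_coefficient(f))   # each block contributes at most m/N = 1/16
print(short_ap_average(f, 4).real)  # only terms straddling a block boundary can differ from 1
```

With these parameters, 300 of the 2028 terms straddle a block boundary. The real part of the average is therefore at least 1 − 2·300/2028 ≈ 0.70, whatever frequencies are drawn.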

February 10, 2009 at 12:15 am |

434. We can assume that the density is uniform across all smallish Hamming balls. That is because if we get a density increment on a smallish Hamming ball, we get a density increment on a tiny combinatorial cube by double-counting analogous to comment #132 from the original thread. Thus, we can increment density on Hamming balls until we are stuck.

February 10, 2009 at 12:29 am |

Re 426, 427, 430, distinction between influence and bias:

Right, my mistake. Most of the examples I’ve been thinking about have been “monotone”, in which case the notions coincide.

February 10, 2009 at 3:31 am |

436. Quasirandomness

To answer Gil’s question: if we manage to settle DHJ(1,3) (or more generally to find lots of combinatorial lines in our “obstructions to uniformity”), we should presumably be able to iterate and also find lots of combinatorial subspaces in all of the obstructions to uniformity. If we double count properly, this should (hopefully) tell us that any set which has anomalous density on an obstruction to uniformity has an anomalous density on at least one combinatorial subspace, and then we “win”.

February 10, 2009 at 3:37 am |

437. Soft obstructions to uniformity

One possible route to keep in mind, by the way, is to back away from the “hard” goal of explicitly classifying all “obstructions to uniformity” in a very concrete manner, but instead settle for a “soft” classification which expresses these obstructions as something like a “dual function” from my primes in AP paper with Ben Green. In some cases, one can use tools such as an enormous number of applications of the Cauchy-Schwarz inequality to deal with these sorts of dual functions.

Another example of a “soft” obstruction to uniformity, this time for length three progressions in [N], is that of an almost periodic function: a function $f: [N] \to \mathbb{C}$ whose shifts  are “precompact in